Imagine a world where you no longer need to juggle tabs, fill out forms, or click through endless menus. Instead, you simply tell a digital assistant, “Transfer this amount, draft an email on these topics, send me a summary, and update my calendar.” That vision is no longer science fiction; it marks a profound shift in how we think about user interfaces.

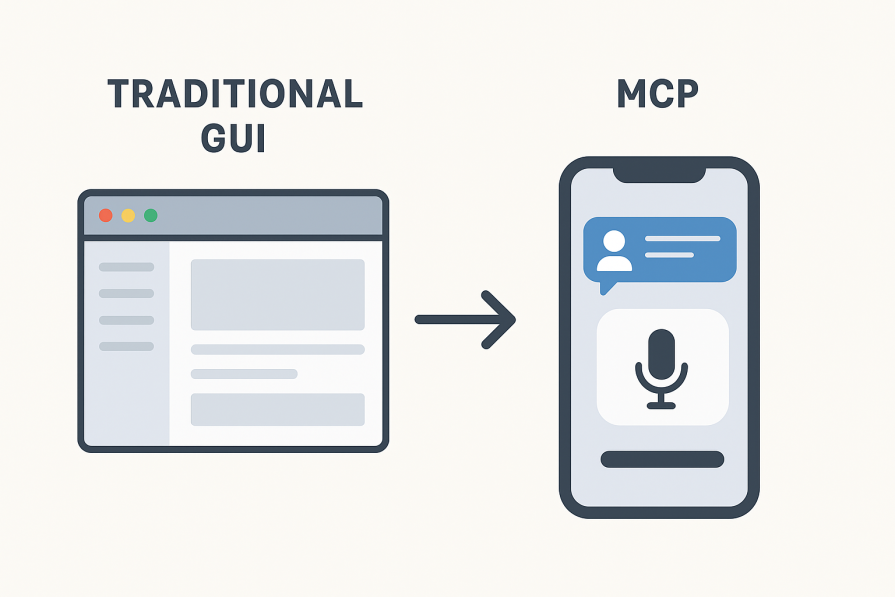

For decades, the web has revolved around screens, buttons, and forms. But that long-standing paradigm is now being reimagined. We’re entering an era where voice- and prompt-driven agents take center stage, powered by standards such as the Model Context Protocol (MCP), an open protocol that lets AI assistants connect to external tools, data sources, and services.

In this article, we’ll explore how these agents are poised to transform the way humans and machines collaborate – moving beyond static frontends toward dynamic, context-aware systems. For developers and frontend teams, this means designing not just for users, but for agents: crafting intelligent interactions and workflows that make sense in an agent-first world.

The path forward calls for rethinking architecture, user experience, and developer experience alike, while navigating the new challenges and design patterns that come with this paradigm shift.


Kent C. Dodds, the renowned creator of Epic React and Testing JavaScript, and co-founder of Remix, recently shared his thoughts on MCP in an episode of PodRocket, LogRocket’s web development podcast.

According to Dodds, “MCP is the latest way of thinking about how agents connect and operate. MCP can be understood as multi-channel, multi-capability, and multi-service – all working together to make a single assistant far more powerful than the tools we are used to today”.

Instead of being locked into one platform or one function, an MCP-powered agent can fluidly move between tasks and services, stitching them together into a seamless experience.
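Under the hood, MCP is a client-server protocol: the assistant acts as a client that discovers and invokes tools exposed by MCP servers, exchanging JSON-RPC 2.0 messages such as `tools/list` and `tools/call`. The sketch below shows only the message shapes, with no transport. The types are simplified from the specification rather than taken from an official SDK, and the `calendar_create_event` tool and its arguments are hypothetical.

```typescript
// Simplified shapes of MCP's JSON-RPC 2.0 messages for tool calls.
// Illustrative types only, not the official SDK's definitions.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

interface ToolCallResult {
  content: { type: "text"; text: string }[];
  isError?: boolean;
}

let nextId = 1;

// Build a "tools/call" request asking a server to run one named tool.
function buildToolCall(name: string, args: Record<string, unknown>): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id: nextId++,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Join the text parts of a tool result so the assistant can use them
// in its next response.
function resultText(result: ToolCallResult): string {
  return result.content.map((part) => part.text).join("\n");
}

// Hypothetical tool exposed by a hypothetical calendar MCP server.
const request = buildToolCall("calendar_create_event", {
  title: "Quarterly review",
  start: "2025-03-03T10:00:00Z",
});
console.log(JSON.stringify(request));
```

Because every server speaks the same message format, the assistant can stitch together a calendar server, an email server, and a file server without bespoke glue for each one.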

This is where the idea of a Jarvis-like assistant comes in – an intelligent agent designed to understand natural language, whether spoken or typed. Unlike today’s limited assistants, a Jarvis agent isn’t bound to one action or domain. It can send emails, update calendars, manage files, or even tap into third-party APIs to get work done.

These assistants are always available, quick to respond, and proactive in anticipating your needs. What truly sets them apart is their ability to carry context across interactions, remembering what matters to you and operating within a framework built on privacy and trust.

We already see early glimpses of this future in today’s chatbots and smart assistants. They’re functional, but fragmented. A Jarvis-style agent unifies those isolated capabilities into one fluid, context-aware system – offering a glimpse of the next generation of human–machine collaboration.

The move toward agent-first interfaces is not happening by chance. It is being pushed forward by a blend of human behavior and rapid advances in technology. People are growing weary of constantly switching between countless apps and websites, and what they really crave is a seamless way to get things done.

At the same time, the tools that power natural language interactions have matured to a point where this transformation feels inevitable. The convergence of user demand and technological readiness has set the stage for a new kind of interface – one that’s conversational, context-aware, and capable of doing more than ever before.

Let’s take a closer look at the key forces driving this change.

We’re seeing growing fatigue with traditional navigation. Opening a new app for every task feels increasingly clunky and fragmented. The desire for simplicity is reshaping user expectations – people now want assistants that can handle tasks in one seamless, continuous flow.

Artificial intelligence (AI) and large language models (LLMs) have completely reshaped how natural language is understood and processed. Today, talking or typing a request often feels faster and more intuitive than navigating through menus or filling out forms.

The growing adoption of APIs and microservices now allows agents to move effortlessly across platforms – from calendars and email to file storage and third-party systems. Tasks that once required manual switching between apps can now flow automatically through seamless integrations.

Companies understand that reducing friction is key to keeping users engaged. Agents that can anticipate needs and manage complexity quietly in the background not only boost productivity but also build lasting loyalty.

When you combine all these factors, the path forward becomes clear – we’re not just imagining a future filled with Jarvis-like assistants; we’re already taking the first steps toward it.

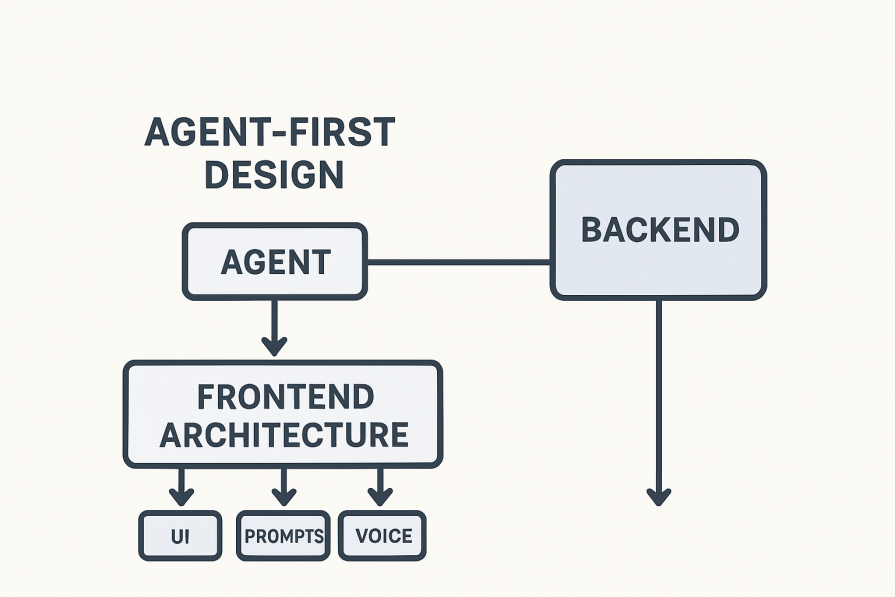

The rise of agent-first interfaces isn’t just changing the experience for end users – it’s also transforming how developers think about building applications.

Traditionally, the frontend has centered on static pages, components, and interactions that lived entirely within the browser. But now, the challenge is designing workflows and systems that make sense for both humans and agents.

This evolution calls for a fundamental rethinking of frontend architecture and the developer experience that supports it.

Instead of designing screens packed with buttons and menus, teams now need to focus on workflows that agents can interpret and act on. The frontend is no longer just a visual layer – it’s becoming a structured set of instructions and flows that an agent can trigger, understand, and adapt in real time.
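One way to picture the frontend as “a structured set of instructions” is to describe each user-facing action as a typed definition that an agent can discover, rather than as a button. The following is a minimal sketch under that assumption: the `name`/`description`/`inputSchema` shape mirrors how MCP describes tools, but the `send_summary` action and the registry around it are hypothetical.

```typescript
// A minimal JSON-Schema-like description of an action's inputs.
interface ActionDefinition {
  name: string;
  description: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: "string" | "number" | "boolean"; description: string }>;
    required: string[];
  };
}

// Hypothetical action: the same capability a form would expose,
// described for an agent instead of a human.
const sendSummary: ActionDefinition = {
  name: "send_summary",
  description: "Email the user a summary of today's activity.",
  inputSchema: {
    type: "object",
    properties: {
      recipient: { type: "string", description: "Email address to send to" },
      maxItems: { type: "number", description: "Maximum number of items to include" },
    },
    required: ["recipient"],
  },
};

// A registry the frontend publishes so an agent can list what it may trigger.
const registry = new Map<string, ActionDefinition>([[sendSummary.name, sendSummary]]);

function listActions(): string[] {
  return Array.from(registry.keys());
}
```

In this model, the `description` fields do the job that labels and placement do for a button: they are what the agent reads to decide when an action applies.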

Behind the scenes, MCP-based systems need more powerful orchestration in the backend and middleware. Agents rely on microservices, connectors to external platforms, and controllers that coordinate actions across multiple systems, so the backend becomes the silent partner that lets agents extend their reach.
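To make “orchestration” concrete, here is a minimal synchronous sketch of one user request fanned out across several services. The `email` and `calendar` connectors are hypothetical in-memory stand-ins; real connectors would be asynchronous network clients, and a production orchestrator would also need retries and a strategy for partial failure.

```typescript
type Connector = (input: Record<string, string>) => string;

interface Step {
  service: string;
  input: Record<string, string>;
}

interface StepOutcome {
  service: string;
  ok: boolean;
  output: string;
}

// Hypothetical connectors standing in for real email/calendar integrations.
const connectors = new Map<string, Connector>([
  ["email", (input: Record<string, string>) => `email sent to ${input.to}`],
  ["calendar", (input: Record<string, string>) => `event "${input.title}" created`],
]);

// Run each step in order and stop at the first failure, so later steps
// never act on an incomplete state.
function orchestrate(steps: Step[]): StepOutcome[] {
  const outcomes: StepOutcome[] = [];
  for (const step of steps) {
    const connector = connectors.get(step.service);
    if (!connector) {
      outcomes.push({ service: step.service, ok: false, output: "unknown service" });
      break;
    }
    try {
      outcomes.push({ service: step.service, ok: true, output: connector(step.input) });
    } catch (err) {
      outcomes.push({ service: step.service, ok: false, output: String(err) });
      break;
    }
  }
  return outcomes;
}
```

Stopping at the first failure is a deliberately conservative choice; some workflows would rather continue with independent steps and report partial success.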

Now, agents must remember what a user said, what they meant, and how it connects to previous requests. This means developers need robust systems to manage state and context across prompts, sessions, and services without losing accuracy or trust.
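At its simplest, carrying context means keeping per-session state that each new prompt can read and update. The sketch below assumes a hypothetical in-memory store keyed by session ID (`SessionContext`, `contextFor`) and a naive rule that “it” refers to the most recently mentioned entity; real systems typically persist this state and let the model itself resolve references.

```typescript
interface Turn {
  prompt: string;
  entities: string[];
}

// Hypothetical per-session memory: the turns so far, capped to bound prompt size.
class SessionContext {
  private turns: Turn[] = [];
  private readonly maxTurns: number;

  constructor(maxTurns = 10) {
    this.maxTurns = maxTurns;
  }

  record(prompt: string, entities: string[]): void {
    this.turns.push({ prompt, entities });
    // Drop the oldest turns once the cap is exceeded.
    if (this.turns.length > this.maxTurns) {
      this.turns.splice(0, this.turns.length - this.maxTurns);
    }
  }

  // Naive reference resolution: "it" means the most recently mentioned entity.
  lastEntity(): string | undefined {
    for (let i = this.turns.length - 1; i >= 0; i--) {
      const ents = this.turns[i].entities;
      if (ents.length > 0) return ents[ents.length - 1];
    }
    return undefined;
  }

  size(): number {
    return this.turns.length;
  }
}

const sessions = new Map<string, SessionContext>();

// Return the existing context for a session, creating it on first use.
function contextFor(sessionId: string): SessionContext {
  let ctx = sessions.get(sessionId);
  if (!ctx) {
    ctx = new SessionContext();
    sessions.set(sessionId, ctx);
  }
  return ctx;
}
```

The cap is where the accuracy tradeoff shows up: too small and the agent forgets, too large and older, irrelevant turns crowd out the current request.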

Because agents often touch sensitive data, privacy and data protection laws must be strictly followed, and trust boundaries matter more than ever. Developers must carefully define who has access to what, how data flows between services, and how consent is enforced at every step.
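Enforcing consent at every step can start with a check that runs before any tool does: has the user granted the scope this action needs, and is that grant still valid? The following is a minimal deny-by-default sketch. The action names, scope strings, and in-memory grant list are all hypothetical; in practice grants would come from an OAuth-style authorization flow or similar.

```typescript
// Hypothetical mapping from each agent action to the scope it requires.
const requiredScope = new Map<string, string>([
  ["read_calendar", "calendar:read"],
  ["create_event", "calendar:write"],
  ["send_payment", "payments:write"],
]);

interface Grant {
  scope: string;
  expiresAt: number; // epoch milliseconds
}

type Decision = { allowed: true } | { allowed: false; reason: string };

// Deny by default: unknown actions and missing or expired grants are refused.
function authorize(action: string, grants: Grant[], now: number): Decision {
  const scope = requiredScope.get(action);
  if (scope === undefined) {
    return { allowed: false, reason: `unknown action: ${action}` };
  }
  const grant = grants.find((g) => g.scope === scope);
  if (!grant) {
    return { allowed: false, reason: `consent required for ${scope}` };
  }
  if (grant.expiresAt <= now) {
    return { allowed: false, reason: `consent for ${scope} has expired` };
  }
  return { allowed: true };
}
```

Returning a reason rather than a bare boolean lets the agent explain the refusal and ask the user for consent instead of failing silently.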

Testing and debugging an agent is very different from checking a graphical interface. Developers need new ways to trace decision paths, understand why an agent responded a certain way, and reproduce complex conversational states.
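A practical starting point is to record every decision an agent makes as a structured trace event, so a confusing response can be explained and the conversation replayed. The following is a minimal sketch; the `Tracer` class, the event kinds, and their fields are assumptions for illustration, not a standard trace format.

```typescript
type TraceKind = "prompt" | "intent" | "tool_call" | "tool_result" | "response";

interface TraceEvent {
  seq: number;
  kind: TraceKind;
  detail: string;
}

// Hypothetical tracer: an ordered log of one conversation's decisions.
class Tracer {
  private events: TraceEvent[] = [];

  log(kind: TraceKind, detail: string): void {
    this.events.push({ seq: this.events.length + 1, kind, detail });
  }

  // "Why did the agent do that?": every event up to and including a given step.
  pathTo(seq: number): TraceEvent[] {
    return this.events.filter((e) => e.seq <= seq);
  }

  // Replay input: the user prompts in order, to reproduce the same state.
  prompts(): string[] {
    return this.events.filter((e) => e.kind === "prompt").map((e) => e.detail);
  }

  serialize(): string {
    return JSON.stringify(this.events);
  }
}
```

Logging the intent and its confidence alongside each tool call is what makes the trace useful: it shows not just what the agent did, but what it believed the user wanted.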

To keep pace, a new generation of tools is emerging – from environments for prompt design and frameworks for API chaining to orchestration platforms and testing setups for voice interactions. The developer experience is shifting away from building static components and toward crafting and refining intelligent conversations.

Taken together, these changes mark a fundamental shift in how frontend teams work. They’re no longer designing just for human clicks and taps, but for intelligent agents that act on behalf of humans across an entire ecosystem of connected services.

Developers and designers now need to think in terms of conversations, flows, and multimodal signals – especially as agents become the primary way people interact with technology. Design must evolve far beyond screens and buttons.

Agent-first design is less about crafting polished layouts and more about creating interactions that feel natural, reliable, and accessible to everyone.

Voice and text prompts are the most obvious starting points, but they are not the only ways people will engage with agents. Gestures and haptics may also play a role in making experiences more fluid. Each modality demands careful design so that users feel in control regardless of how they choose to communicate.

Designing for conversations means thinking about turn-taking, clarity, and what happens when the agent does not understand. A smooth fallback system prevents frustration and helps maintain trust.
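A common fallback pattern is to act only when the agent is confident about the user’s intent, and otherwise to ask a clarifying question instead of guessing. The sketch below assumes an intent classifier (not shown) that returns candidates with confidence scores; the 0.75 threshold and the intent names are arbitrary illustrations.

```typescript
interface IntentCandidate {
  intent: string;
  confidence: number; // 0..1, from a hypothetical classifier
}

type Reply =
  | { kind: "act"; intent: string }
  | { kind: "clarify"; question: string }
  | { kind: "fallback"; message: string };

const CONFIDENCE_THRESHOLD = 0.75; // arbitrary; tune against real conversations

function decide(candidates: IntentCandidate[]): Reply {
  const ranked = [...candidates].sort((a, b) => b.confidence - a.confidence);
  if (ranked.length === 0) {
    return { kind: "fallback", message: "Sorry, I didn't catch that. Could you rephrase?" };
  }
  const [top, second] = ranked;
  if (top.confidence >= CONFIDENCE_THRESHOLD) {
    return { kind: "act", intent: top.intent };
  }
  if (second) {
    // Two plausible readings: let the user choose rather than guess.
    return { kind: "clarify", question: `Did you mean ${top.intent} or ${second.intent}?` };
  }
  return { kind: "clarify", question: `Just to confirm, did you mean ${top.intent}?` };
}
```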

At the core of any agent interaction lies the design of prompts. This is where context, triggers, and switching between tasks must be handled.

A well-structured prompt flow ensures that agents interpret intent accurately without losing context.

Traditional UI patterns – like confirmations, summaries, and undo actions – still matter, but they take on new meaning in an agent-driven world. These checkpoints are essential for preventing unexpected behavior and reassuring users that they remain in control.
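Confirmation, summary, and undo can be built into how actions execute. The agent first produces a human-readable summary, runs nothing until the user approves, and keeps an inverse operation for each action it completes. The following is a minimal sketch that assumes each hypothetical action supplies its own `undo` function; `ActionQueue` is an illustrative name.

```typescript
interface PendingAction {
  summary: string; // what the user is asked to confirm
  run: () => void;
  undo: () => void; // inverse operation, kept for rollback
}

class ActionQueue {
  private pending: PendingAction | null = null;
  private history: PendingAction[] = [];

  // Step 1: propose. Nothing happens yet; the user sees the summary.
  propose(action: PendingAction): string {
    this.pending = action;
    return `About to: ${action.summary}. Confirm?`;
  }

  // Step 2: only an explicit confirmation executes the action.
  confirm(): boolean {
    if (!this.pending) return false;
    this.pending.run();
    this.history.push(this.pending);
    this.pending = null;
    return true;
  }

  cancel(): void {
    this.pending = null;
  }

  // Step 3: undo the most recently completed action.
  undoLast(): string | null {
    const last = this.history.pop();
    if (!last) return null;
    last.undo();
    return `Undid: ${last.summary}`;
  }
}
```

Not every action has a clean inverse (a sent email cannot be unsent), so in practice the confirmation step matters most for exactly those irreversible actions.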

Agents shouldn’t rely exclusively on voice or text. In many cases, a quick visual cue or a simple UI element can help confirm an action or highlight important information. The real challenge lies in knowing when to stay voice-only and when to enrich the interaction with visual feedback.

Agent-first design has the potential to make technology more inclusive, particularly for people with visual or physical impairments. But that potential can be lost if accessibility isn’t a core priority. Designers must ensure that voice, text, and gestures are all usable, adaptable, and inclusive of diverse needs.

In short, designing for agents means thinking like a conversational architect rather than a page designer – focusing on interactions that feel human, predictable, and adaptable across different contexts and modalities.

The shift from traditional websites to agent-powered platforms is more than a technical upgrade. It changes how people experience technology in their daily lives and how businesses define value in the digital space. These agents reduce the friction of everyday tasks, but they also raise new questions about trust, privacy, and control.

At the same time, product teams and companies must adapt to new definitions of success and fresh growth opportunities.

For everyday users, agents mean fewer clicks, fewer logins, and far less time spent jumping between platforms. Instead of manually coordinating tasks, an assistant can now connect the dots automatically. The tradeoff, however, is trust – users must feel confident sharing sensitive information, and over-reliance on agents can raise new concerns about transparency and control.

For companies, this shift means reimagining what a product actually is. Instead of delivering a standalone web experience, teams must think about how their services integrate into agent workflows. The value lies in becoming part of a larger ecosystem of tasks, and that opens the door to new revenue models built around seamless integration.

Agents thrive when they can move fluidly across services. This puts pressure on businesses to open their platforms through APIs, partnerships, and integrations. The companies that succeed will be those that position themselves as indispensable nodes in an interconnected web of services rather than isolated silos.

With agents in charge, traditional measures like page views or clicks lose relevance. The new yardsticks are task success rates, error frequencies, and overall user satisfaction with agent-driven workflows. Businesses must learn to measure value not in terms of visits, but in terms of outcomes.
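Outcome-based metrics like these can be computed directly from a log of agent tasks. The following is a minimal sketch that assumes a hypothetical task record with a status, an error count, and an optional satisfaction rating from 1 to 5. Which outcomes count as “success” is a product decision, not a given.

```typescript
type TaskStatus = "completed" | "failed" | "abandoned";

interface TaskRecord {
  status: TaskStatus;
  errors: number; // errors encountered while handling the task
  rating?: number; // optional user satisfaction, 1 to 5
}

interface AgentMetrics {
  taskSuccessRate: number;
  errorsPerTask: number;
  averageRating: number | null; // null when no task was rated
}

function summarize(tasks: TaskRecord[]): AgentMetrics {
  if (tasks.length === 0) {
    return { taskSuccessRate: 0, errorsPerTask: 0, averageRating: null };
  }
  const completed = tasks.filter((t) => t.status === "completed").length;
  const totalErrors = tasks.reduce((sum, t) => sum + t.errors, 0);
  const ratings = tasks
    .map((t) => t.rating)
    .filter((r): r is number => r !== undefined);
  return {
    taskSuccessRate: completed / tasks.length,
    errorsPerTask: totalErrors / tasks.length,
    averageRating:
      ratings.length > 0 ? ratings.reduce((a, b) => a + b, 0) / ratings.length : null,
  };
}
```

For example, two completed tasks out of four give a success rate of 0.5, regardless of how many pages or clicks either path involved.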

In essence, the shift to agent platforms transforms the relationship between users, businesses, and technology itself. It moves the focus from screens to outcomes, from standalone apps to connected ecosystems, and from attention-based metrics to trust and productivity.

While AI agents hold immense potential to enhance productivity and decision-making, their deployment comes with a set of significant challenges and risks that must be carefully managed.

One of the most pressing challenges is reliability. Agents can misinterpret prompts, generate misleading or fabricated information – often referred to as “hallucinations” – or fail unpredictably under certain conditions.

These reliability gaps can erode user trust and, in some cases, lead to costly or even harmful outcomes if not carefully mitigated.
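One widely used mitigation is to treat a model’s tool calls as untrusted input: before anything executes, check the arguments against the tool’s declared schema and reject calls that are malformed or include fields the tool never declared. The following is a minimal hand-rolled sketch around a hypothetical `transfer_funds` tool; a real project would more likely use an established JSON Schema validator.

```typescript
type FieldType = "string" | "number";

interface ToolSchema {
  required: Record<string, FieldType>;
}

// Hypothetical schema for a hypothetical transfer_funds tool.
const transferFunds: ToolSchema = {
  required: { toAccount: "string", amount: "number" },
};

// Own-property check, so inherited keys such as "toString" never count.
function hasOwn(obj: object, key: string): boolean {
  return Object.prototype.hasOwnProperty.call(obj, key);
}

// Returns a list of problems; an empty list means the call may proceed.
function validateArgs(schema: ToolSchema, args: Record<string, unknown>): string[] {
  const problems: string[] = [];
  for (const [field, type] of Object.entries(schema.required)) {
    if (!hasOwn(args, field)) {
      problems.push(`missing field: ${field}`);
    } else if (typeof args[field] !== type) {
      problems.push(`${field} should be a ${type}`);
    }
  }
  // Undeclared fields can indicate the model invented part of the call.
  for (const field of Object.keys(args)) {
    if (!hasOwn(schema.required, field)) {
      problems.push(`unexpected field: ${field}`);
    }
  }
  return problems;
}
```

Schema checks catch malformed calls, not wrong-but-well-formed ones; a plausible amount sent to the wrong account still needs the confirmation patterns discussed earlier.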

Security and privacy are equally critical concerns. AI systems often handle sensitive user data, raising questions around data leaks, consent, and ownership. Without strong safeguards and transparent policies, users risk exposing confidential information or losing control over how their data is used.

Another major challenge is over-automation. While automation can streamline workflows, too much reliance on autonomous agents can reduce human oversight and create frustration when users can’t understand or override an agent’s decisions. Striking the right balance between automation and user control is essential.

User experience (UX) adds another layer of complexity. Designing interfaces that can handle the wide range of edge cases AI agents encounter is no small task. Systems must be built to degrade gracefully, ensuring that even when an agent fails, the overall experience remains functional, coherent, and trustworthy.
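Graceful degradation often means keeping a conventional path available. If the agent cannot complete a request, the interface falls back to the form the user would have used anyway, prefilled with whatever the agent already understood. The following is a minimal synchronous sketch; the `withFallback` wrapper, the `AgentAttempt` shape, and the `/transfer` route are hypothetical.

```typescript
interface AgentAttempt {
  done: boolean;
  message: string;
  partialInput: Record<string, string>;
}

type UiOutcome =
  | { mode: "agent"; message: string }
  | { mode: "form"; route: string; prefill: Record<string, string>; notice: string };

// Wrap an agent attempt so that failure never leaves the user at a dead end.
function withFallback(attempt: () => AgentAttempt, formRoute: string): UiOutcome {
  try {
    const result = attempt();
    if (result.done) {
      return { mode: "agent", message: result.message };
    }
    return {
      mode: "form",
      route: formRoute,
      prefill: result.partialInput, // keep whatever the agent already understood
      notice: "I couldn't finish this, so I've filled in what I could.",
    };
  } catch {
    // The agent itself failed: offer the manual path with nothing prefilled.
    return {
      mode: "form",
      route: formRoute,
      prefill: {},
      notice: "The assistant is unavailable right now; you can complete this manually.",
    };
  }
}
```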

Finally, ethical concerns cut across all these challenges – from questions of fairness, bias, and surveillance to growing worries about user over-dependence on AI. These issues continue to shape the conversation around responsible AI development. Ensuring that agents act transparently, fairly, and with respect for user autonomy is essential to their long-term acceptance and societal benefit.

As more organizations explore AI agent integration, a structured, incremental approach is key to achieving meaningful results.

The journey should start with prototyping, which entails building small, focused agent-driven features within existing products.

For instance, teams should experiment with smart assistants that handle specific tasks or offer contextual suggestions within an app. This type of controlled experimentation helps teams learn from real user interactions without overcommitting resources.

Next, teams should prioritize research and user testing to understand how people naturally interact with agents. Observing how users talk, type, or navigate, and how that compares with traditional interfaces, provides valuable insights into effective prompt design, conversational flow, and voice-based experiences. Early user feedback also plays a crucial role in shaping decisions and refining both functionality and usability to make interactions more human-centered.

Investing in the right tooling is another foundational step. Internal frameworks, robust APIs, and logging systems allow teams to monitor agent performance, track metrics, and continuously improve reliability. A solid technical infrastructure ensures scalability as agent capabilities expand.

Equally important is extending the design system to support new interaction patterns. Teams need to create consistent guidelines for agent responses, prompts, and voice interfaces that align with existing brand experiences while introducing new, conversational modes of interaction.

Strategic integration should also be a priority. By identifying and connecting the key services or APIs users rely on, teams can enable agents to perform genuinely useful actions – bridging data and functionality seamlessly across platforms.

Finally, governance and ethics must guide every stage of development. Establishing clear policies for privacy, access control, data handling, and transparency is essential to maintaining user trust. A responsible framework not only mitigates risks but also strengthens credibility as AI becomes a more integral part of digital products.

Looking ahead, AI agents are set to become seamlessly woven into daily life and work. Imagine a personal Jarvis that manages errands, schedules, payments, and household tasks through natural conversation – adapting to your habits and preferences over time.

In the workplace, agents could act as intelligent collaborators, unifying data across tools like Slack, email, and calendars. A single prompt might retrieve reports, summarize updates, or schedule meetings, transforming how teams communicate and access knowledge.

Beyond individual use, agents will extend into connected ecosystems – from phones and vehicles to smart homes and IoT systems – enabling context-aware interactions that anticipate needs and respond proactively.

As this evolution unfolds, the role of graphical user interfaces (GUIs) will shift. Visual interfaces won’t disappear but will complement conversational and voice-driven interactions, blending voice, gesture, and visuals into fluid, multimodal experiences.

Finally, the convergence of AI with VR, AR, and ambient computing points toward immersive environments where agents appear as virtual presences – guiding, assisting, and enhancing how we live and work every day.

User interfaces are entering a new era. As AI agents move from experimental tools to everyday interfaces, they’re redefining how people interact with technology – shifting from clicks and screens to conversation, context, and intent.

For frontend and product teams, this means rethinking design from the ground up. Starting small with prototypes, prompt-based experiences, and frameworks built on trust and usability will help teams shape how the next generation of digital products feels.

Just as the web freed us from desktop software, AI agents could free us from traditional GUIs. Realizing that vision depends on building responsibly and with empathy – ensuring this revolution enhances, not complicates, the way we live and work.

Happy coding!
