For the past months I’ve been working on Wave — an open source, AI-native terminal that knows your context (we know, the hook still needs work).

In that time, we’ve gone from zero daily active users (DAU) and zero GitHub stars at launch in November 2023 to approximately 3,000 DAU and 12k GitHub stars today.

My biggest lesson: The tried and trusted SaaS go-to-market (GTM) playbook doesn’t apply to AI-native products. We’re consciously breaking all my cardinal rules, such as:

At the end of the day, AI-native products require a different approach to GTM. Keep reading to hear what I’ve learned and leave with actionable takeaways you can bring back to your product team.

Wave is a terminal, and therefore a “devtool.” Bringing a devtool to market today kind of sucks. Without a well-integrated AI, a new devtool just feels silly (prove me wrong).

However, building something “AI-native” comes with the great risk of becoming obsolete overnight. You’re damned if you don’t, and you’re damned if you do.

So, we’re prepared to pivot. We’ve already evolved from “modern, open source terminal for better developer workflows” to “AI-native terminal that sees your entire workspace.” The journey continues, and throughout it our product will likely change.

Our number one focus is to build strong, stable social distribution channels that can grow and adapt with us.

We’re bringing our early customer profile (ECP), which for us means influencers, along for the ride and inviting them to co-create Wave with us. Doing so means breaking rule number one: We’re bringing an imperfect product into a crowded space, for an audience that traditionally expects perfection.

People are drowning in gen AI tools — they don’t want to hear about yet another new thing, let alone consider paying for one.

I’ve been visiting offline AI events (“AI builders,” “AI assisted coding”) to get a read on excitement levels about our value proposition, bringing a variety of hooks and landing pages. I assumed these events would be full of innovators/early adopters eager to get their hands on something new.

Think again.

I heard the advice “don’t try to keep up with new tools, just try the stuff you’ve heard multiple good friends talk about” again and again.

This poses a huge problem for new players. A Hacker News or Product Hunt launch won’t cut it. You need to be nearly omnipresent to get even early adopters to give you a try.

To become omnipresent, AI-native companies like Wave need to be architecturally designed around social sharing.

What does that mean? We’re already doing the obvious things, but so is everyone else:

As a slightly prominent product person, I’ve noticed AI-native tools reaching out to me to plug their products, offering monetary incentives and the chance to be part of a “cool council.”

This is nice, but a couple of occasional brand ambassadors won’t create the omnipresence we need. I’d like to go beyond just the sporadic influencer post by integrating influencers much deeper into what we’re doing.

We’re already trying to validate ways that users organically bring in other users, before we’ve hit PMF (and way before we’re thinking about monetization).

I’ve always preached that growth comes after PMF (“don’t try to scale something before you know the market cares”), but now I’m validating which viral growth loops (sharing vibe-coded apps, watermarks/badges, competitions) might work before PMF.

For SaaS, my golden rule has always been: sell first, build later.

For Wave (AI-native), my rule has shifted to: Establish distribution channels (with an unfinished product), build/learn/pivot, sell.

Why? Because the product is fundamentally unstable. Foundation models evolve monthly, and as a16z partner Bryan Kim notes, competitors can replicate features almost instantly. In this new market, the only sustainable advantage is velocity: “momentum as a moat.”

This flips the script: distribution comes way before monetization. The primary goal is to build a sticky, loyal audience and a robust distribution channel. This channel becomes the asset you own, even if the product underneath it needs to pivot completely.

Genspark illustrates this perfectly. It initially positioned itself as an AI search engine and attracted five million users — securing its distribution. But in late 2024, it observed users evolving from information queries (“summarize this market”) to outcome-oriented commands (“create a pitch deck about this market”).

It pivoted completely to an “AI Agentic Engine.” The result? A reported $36 million in ARR in just 45 days. That pivot was only possible because it had already built the distribution channel.

I’ve been doing ICP alignment workshops with B2B SaaS companies for years. Throughout this time, I’ve been preaching about the importance of identifying a meaningful, narrow ICP.

Multiple ICPs to pick from? Choose the ICP with the most compelling reason to buy, which usually happens to be the ICP in the most excruciating pain.

But I’m ignoring my own advice with Wave.

Wave’s ICP is vague as hell. Instead of targeting a nice, neatly confined ICP like “experienced DevOps people at startups,” we’re targeting anyone who’s excited about using a terminal that gives you a terminal, web browser, file manager/previewer, remote servers, and an integrated AI orchestrator all in one box.

Why? The group of people using the terminal has grown massively thanks to vibe-coding and AI-assisted coding. We’ve gone from (n) developers to (n) somewhat technical people using terminals and IDEs.

Casting a narrow net around an ICP today feels foolish. Our ECP, by contrast, is concrete and was selected based on our primary goals.

Our goals:

Fast learning is even more important for AI-native products than for non-AI SaaS, for two big reasons:

We need fast learning to keep our finger on the pulse of user expectations and to understand what users are trying to do, where the edge cases are, and how things fail. Ideally, our early users give us loads of feedback and try the more difficult paths (if our AI can handle difficult things, it can most likely also handle easier things).

My former playbook for identifying a problem worth solving for a pre-PMF SaaS startup relied heavily on audience interviews. I’d ask people fitting my ICP to talk me through their jobs-to-be-done and focus on friction points, to uncover what’s top of mind for them, hopefully leading me to an interesting “hair-on-fire” problem.

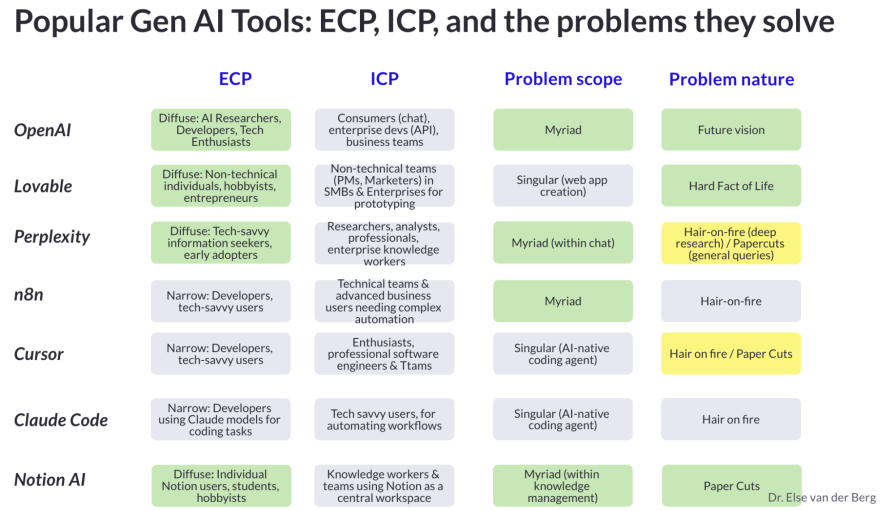

Some AI-native companies were built around a particular hair-on-fire problem (e.g. write high quality code faster), but there are many others that solve:

The comparison chart above shows how a diffuse ECP tends to correlate with a “myriad” problem space, and how several successful AI companies were built on problems that I wouldn’t consider “hair-on-fire.”

Lovable responds to a “hard fact of life” problem. Had you asked me, a non-technical product manager, in early 2022 about the pain points I faced, I would never have said “I wish I could use natural language to create a functional application by myself.”

I assumed that I would always need an expensive team of developers for that and accepted it as a hard fact of life.

Cursor attempts to tackle the hair-on-fire problem for developers of needing to ship high-quality code fast. But it also eliminates a thousand tiny, individual frustrations that developers experience every single day (looking up syntax, writing boilerplate code, multi-line edits, etc.).

Notion AI targets any team that needs a knowledge base (pretty diffuse), can be used for tens or hundreds of use cases, and is a typical paper cut product.

Before Notion AI, users weren’t necessarily saying, “My work is impossible because I can’t summarize my notes.” However, they frequently experienced the minor but persistent annoyances that Notion AI now solves.

I’m very interested in the 1000 little paper cuts strategy, since LLMs excel at tasks that are context-rich but low in complexity, which perfectly describes most paper cuts. Before AI, automating these tasks was often impossible or too expensive because they required a human-like understanding of nuance that traditional software lacks.

And as Amazon proved, there is power in aggregation: fixing hundreds of tiny customer experience deficiencies collectively can create a massively superior product.

The risk is obvious though. It’s very, very hard to craft a compelling, simple hook by listing the 1000 little paper cuts your product solves.

I’m currently facing this with Wave. In an ideal world, solving 1000 little paper cuts together will create a holistic value prop.

Compare Cursor. By automating the constant stream of minor developer tasks (looking up syntax, writing boilerplate, refactoring a function), Cursor solves the larger “hair-on-fire” problem of slow development cycles.

We’re not there yet, and as a seasoned PM it’s quite awkward to interview developers without a clear answer to their first question: “What problem does it solve?”

As I move ahead with Wave, here’s my list of priorities:

The journey from “modern terminal” to “AI-native workspace” continues. Wish us luck and let me know your thoughts on this new SaaS playbook.

Featured image source: IconScout

