As predictions about the power of AI have come true, the technology has become almost impossible to avoid. AI now touches almost every aspect of your daily life. That said, people have a range of emotions towards its increasing use.

Some take a nonchalant approach, others lean passive-aggressive, and in some cases discussion of its merits can even turn into verbal sparring. To help you navigate these conversations, this article covers the basics of tech adoption, provides an overview of the present and future state of AI, and details how to approach using AI in your everyday life and workplace.

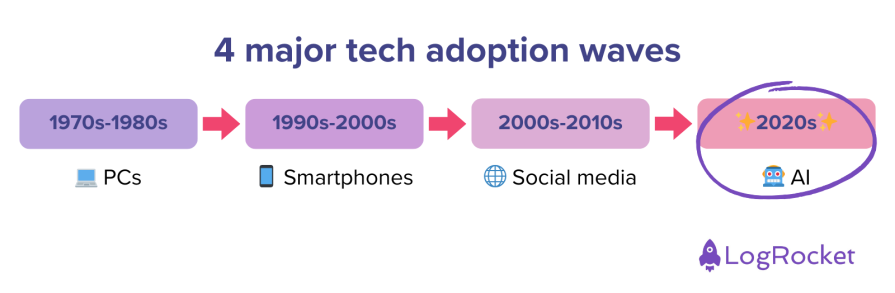

Before looking at how you can use AI in your daily work, it’s helpful to have some general familiarity with how technology adoption waves have gone in the past.

More than a decade ago, with my investing hat on, I distinctly remember researching the top five tech trends of the next decade. Back then, people were raving about the future of big data and cloud computing.

In recent years, the narrative has shifted. Unless you’ve been living under a rock for the last decade, you’ve undoubtedly heard the macro forecasts and trends of AI, but until recently, maybe it hadn’t hit home:

AI and its impact on humanity will undoubtedly go down in history, for better or for worse.

For many people, the term artificial intelligence was a nebulous topic for tech nerds until the introduction of ChatGPT, an AI tool everyday users can interact with directly.

ChatGPT threatened to upend Google search and traditional SEO, setting off a firestorm of urgency among the tech giants to prove they had equivalent capabilities to capture and retain market share. Microsoft introduced Copilot, Google introduced Gemini, OpenAI became a household name, and suddenly the process for searching and retrieving information seemed to take on a mind of its own.

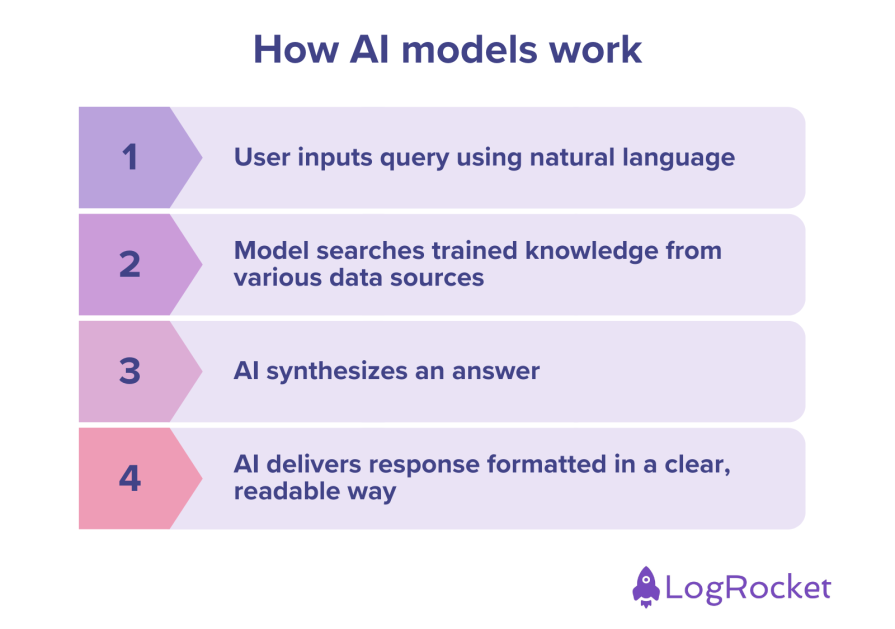

If you haven’t used one of these tools yet, the way it basically works is:

Some advanced models use retrieval-augmented generation (RAG) to pull external data for more accurate answers. Some systems consider context to provide a more personalized response (e.g., anything else they know about you, including a history of topics you’ve searched or conversations you’ve had with the software in the past). Some of them provide sources of their information, and occasionally you’ll receive two responses and be asked to select the one you prefer, which helps to further train the model.
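To make the retrieval idea concrete, here is a minimal sketch of the "retrieve, then prompt" pattern behind RAG. It is a toy under stated assumptions, not how any particular product works: real systems typically rank documents with vector embeddings rather than the simple word overlap used here, and the names `NOTES`, `retrieve`, and `build_prompt` are hypothetical. The sketch stops at building the prompt and makes no call to an actual model.

```python
import re

# Hypothetical knowledge base: a few internal notes the assistant can draw on.
NOTES = [
    "The quarterly roadmap review is scheduled for the first Monday of each quarter.",
    "Expense reports are due by the fifth business day of the month.",
    "The design team uses Figma for all client-facing mockups.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents ranked by how many words they share with the query.

    Word overlap is a stand-in for the embedding similarity a real system would use.
    Documents with no shared words are dropped.
    """
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in relevant[:top_k]]


def build_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Combine the retrieved context and the user's question into one prompt string."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    print(build_prompt("When are expense reports due?", NOTES, top_k=1))
```

The point of the pattern is that the model answers from the supplied context rather than from memory alone, which is also what makes it possible for some tools to show you their sources.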

I know friends whose companies still hadn’t transitioned to a culture of remote work before the pandemic. They were forced to buy everyone laptops instead of desktops and quickly adopt a culture of virtual meetings, which felt foreign to them.

On the opposite end of the spectrum, I know companies dueling for a seat at the tech futurists’ table, eager to develop the next technology to catapult us into the next era of advanced computing, machine learning, robotics, and more.

But what is an era, anyway? Things are constantly morphing and shifting. It’s tough to draw a firm line, especially when, like today, technology is advancing so rapidly.

I frame eras through the lens of general adoption.

The research, innovation, and early adoption phases might occur several years to decades before a major wave occurs. For AI, that was a decade ago, as microprocessors, GPUs, TPUs, big data, and cloud hosting became the norm.

With more advanced computing power available to train machine learning models, huge data sets on which to train them, and cloud hosting democratizing access to distributed computing (including container orchestration platforms like Kubernetes), teams were empowered to work in parallel instead of sequentially, priming us for the explosive growth we now see in technology.

The open sourcing and general availability of AI is enabling the adoption wave. It’s readily available, it’s easy to use, and it’s useful.

I read several books and articles about the future of AI back in 2015 and 2016 that blew my mind and led me into a deeper dive about our future with AI, like “Superintelligence” by Nick Bostrom, “The Artificial Intelligence Contagion” by Daniel Barnhizer, and “How to Create a Mind” and “The Singularity Is Near” by Ray Kurzweil. I devoured countless articles and had the sincere privilege of working for a quantum technology company for two years on the bleeding edge of innovation.

Our future isn’t just full of artificial intelligence; it’ll be powered by faster computing than we can possibly imagine, driven by quantum processors that harness quantum physics, the behavior of matter and energy at the subatomic level. This is why world-renowned agencies like the National Institute of Standards and Technology (NIST) recently published new standards for post-quantum cryptography: to give companies a north star for how to prepare over the coming years before Q-Day, the day quantum computers will be able to crack today’s widely used public-key encryption.

And that’s only in one category associated with quantum technology. Others I’ll not delve into here include sensing, computing (hardware and software), communication, etc.

With a small amount of imagination about our future, you can easily foresee that the inevitable blending of AI, robotics, and quantum technology will vanquish humans’ current superpower of (you guessed it) intelligence.

With that stark reality in mind, how can you use AI today to meaningfully speed up your work while defending your intelligence and ability to think critically? Debating the overall ethics and morals of AI is outside the scope of this article, but the use of AI tools in day-to-day work appears to be a current hot topic of debate within companies and among colleagues.

For example, Anthropic (an AI safety and research company) recently announced they’re no longer accepting AI-generated resumes or applications. Parts of the writing and publishing industry have stated for several years that they will not accept work produced by artificial intelligence.

More conservative organizations aren’t yet adopting AI widely (psst… whispers on the street say people are still using it on the side) while other companies are rapidly trying all types of AI tools for efficiency in daily work, including deploying company-wide AI toolsets to develop intelligence on the company’s proprietary data.

One important consideration is checking with your company on their policy for AI tools. For example, if your company already has an Enterprise contract with Google, you might have Gemini included in your suite of tools, in which case you should use it instead of ChatGPT, keeping your company’s proprietary information contained within your secure boundaries.

Based on my experiences thus far, I find AI to be extremely efficient in assisting me with administrative work I might otherwise spend hours on, such as:

This allows me to farm out administrative tasks while reserving my own thought blocks for bigger themes like strategic planning, shaping narratives / storytelling, and creative development. In turn, I’ve seen higher efficiency and better outcomes from my work than usual, both of which I strongly value.

The bigger question remains how and when to disclose your use of AI. Or has it become so prevalent that it’s generally expected you should be using AI and there’s no need to disclose it?

Did people feel this way about the introduction of typewriters detracting from the connection of handwriting? We’re left wondering things like:

Some people seem determined to root out perpetrators who use AI and don’t disclose it, while others naturally embed these tools as workflow enhancements without thinking twice about it.

Based on my experience using ChatGPT, Gemini, and other AI assistant tools embedded into platforms like Canva, Figma, etc., if you’re simply using them as research aids, or to generate new slide titles, ideas, images, etc., you don’t need to disclose it.

For example, I wouldn’t disclose every search I performed on Google as I prepared a document, but I might cite a few sources of statistics or other key points.

However, if you’re feeding a long, complicated prompt into an AI tool, then copying and pasting the response into a document, that might call for a disclosure. Wherever possible, don’t defer entirely to AI. Manually edit to add context, knowledge, and value so the reader knows the content is fresh and relevant.

Here’s a general guide regarding when to disclose AI use:

| Task | Disclosure needed? |
| --- | --- |
| General research tasks | No, but cite sources or key points as appropriate |
| Job description generated with AI | Maybe, if mostly AI-written |
| AI-generated insights for specific research tasks | Yes, if response is copy-pasted directly |
| AI-generated reports or marketing copy | Yes, if not further edited |
| AI-assisted brainstorming | No |
| AI-generated graphics | Maybe, depending on the use case (e.g., probably not for internal use, probably yes for client-facing materials) |

Keep in mind that there’s no real hard and fast rule about when disclosure is necessary — at least, not yet.

Read your company policies about AI. Talk about it with your colleagues. Observe team norms, then conform to them or make the case to shift those norms to fit today’s reality and tomorrow’s promise — the promise of an advanced human society augmented, if not overrun, by AI. And above all, don’t stop thinking.

Featured image source: IconScout
