If you’ve been following the news, you’ve definitely heard about the explosion in people adopting AI for all sorts of use cases. As a product manager, you’ve probably already been asked by your manager how you can incorporate AI into your product (and if you haven’t, you likely will be soon).

In this blog post, we’ll cover the most common ways companies are integrating AI into their products, even though it sometimes seems unnecessary. We’ll review examples spanning from small startups just entering the market and looking to innovate in this rapidly evolving space to some of the biggest tech companies in the world. We’ll end with how you should think about whether or not to incorporate AI into your own product.

It might seem like the AI boom is driven solely by OpenAI and its product, ChatGPT. After all, ChatGPT has the fastest-growing consumer user base in history, according to a UBS research report.

But OpenAI and ChatGPT are just one, albeit large, portion of the current AI wave. The larger trend is really driven by the introduction of generative AI. Compared to older predictive models, which try to predict the right answer for a given input, generative AI models aim to produce new data. In natural language processing, for example, a generative model repeatedly predicts the next word in a sentence to produce text, whereas older NLP algorithms might seek to do something like classify whether a piece of text is positive or negative.
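To make the distinction concrete, here is a toy sketch in Python. The bigram counter is only a stand-in for a generative model (real LLMs are far larger neural networks), and the keyword-based classifier, its word lists, and the sample reviews are all made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count which word follows which; a toy stand-in for a generative model."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            following[current_word][next_word] += 1
    return following


def generate(model, start_word, max_words=5):
    """Generative: repeatedly append the most likely next word."""
    words = [start_word]
    while len(words) < max_words:
        candidates = model.get(words[-1])
        if not candidates:
            break  # no known continuation for the last word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


def classify_sentiment(text):
    """Predictive: map the input to one of two fixed labels."""
    positive_words = {"love", "great", "fast"}   # hypothetical word lists
    negative_words = {"hate", "slow", "broken"}
    words = set(text.lower().split())
    score = len(words & positive_words) - len(words & negative_words)
    return "positive" if score >= 0 else "negative"


reviews = ["the app is fast", "the app is great", "the app is fast and simple"]
model = train_bigram_model(reviews)
print(generate(model, "the", max_words=4))                # the app is fast
print(classify_sentiment("checkout is broken and slow"))  # negative
```

The generator can produce sentences it has never seen, while the classifier can only ever return one of its two labels.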

Interestingly, you can use generative AI models for classification as well, but the mechanism by which you get to the answer is different. Because they generate plausible-sounding output rather than pick from a fixed set of answers, generative AI models are prone to hallucinating answers that aren’t necessarily correct but sound eerily logical.

On the flip side, this flexibility means that generative AI models can handle a broad set of use cases that predictive models couldn’t. Companies are already using generative AI in impressive ways, from Synthesia generating videos to image generation with Stable Diffusion to text and chat generation with ChatGPT.

But let’s focus on ChatGPT for a bit, and what makes it so special. ChatGPT is powered by a generative AI model that falls into the class of large language models (LLMs). These models are unique in that they are trained on a huge corpus of data, more than ever before. That scale lets them handle a surprising range of use cases beyond just text generation. LLMs have been used to write blog posts, write code, and even make decisions on how to execute a specific workflow that eventually results in a real-world effect.

Almost every single company is thinking about how to incorporate AI into their company strategy. On the one hand, big banks like JPMorgan and tech giants like Samsung are seeking to limit ChatGPT usage in their organizations. After all, Samsung did suffer a security scare when internal data was leaked.

On the other hand, Google is scrambling to stay in the race with OpenAI, seeking to embed AI across all its products. Microsoft has gone even further down this path, partnering closely with OpenAI to improve the Bing experience.

What’s particularly unusual about the current AI trend is that the quick adoption of AI is not limited to startups, which are usually the early adopters. In fact, huge public companies have arguably been faster at churning out AI features, as they have access to large data sets (and resources) that startups don’t have.

With that in mind, let’s dig into the common ways companies are integrating AI into their products.

It’s hard to keep up with the news when it seems like every other day, there is a company launching its new “AI” product to the world. However, it’s helpful to group all these new products into various themes so that we can start to analyze how we might consider leveraging AI to beef up our own products.

First up is choosing to directly tack AI onto an existing product. This is probably the most obvious way to integrate AI features but is by no means the best way.

Essentially, OpenAI makes it possible to call the models behind ChatGPT through an API and recreate the chatbot experience. A simple way to claim that you have added AI to your product is to build an embedded chatbot that calls out to those endpoints. Your chatbot then behaves a lot like ChatGPT (with some minor nuances depending on how you formulate the prompts and set the bot up).
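As a rough sketch of what such an embedded chatbot might look like in Python: the class below keeps the conversation history and hands it to a `complete` function on every turn. Everything here is hypothetical. In a real product, `complete` would wrap a call to whichever hosted model you use, and the role/content message shape is an assumption modeled on common chat APIs rather than any specific vendor’s contract. The `fake_complete` stub exists only so the example runs without a network call.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class EmbeddedChatbot:
    """Keeps the conversation and delegates each turn to a model call."""

    def __init__(self, complete: Callable[[List[Message]], str], system_prompt: str):
        self._complete = complete
        # The system prompt is where the "minor nuances" of your bot live.
        self.history: List[Message] = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        reply = self._complete(self.history)  # the model sees the full conversation
        self.history.append({"role": "assistant", "content": reply})
        return reply


def fake_complete(messages: List[Message]) -> str:
    """Stand-in for a real model call; echoes the latest user message."""
    return f"(reply to: {messages[-1]['content']})"


bot = EmbeddedChatbot(fake_complete, "You are a friendly assistant inside a photo app.")
print(bot.ask("How do I crop an image?"))
```

Passing the model call in as a function keeps the chatbot easy to test and makes it straightforward to swap providers later.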

The reason why this might be useful is that it allows users to directly leverage the power of ChatGPT in the tools they are already using. So rather than having to pop out into a new web page to interact with ChatGPT, you can use it right where you are.

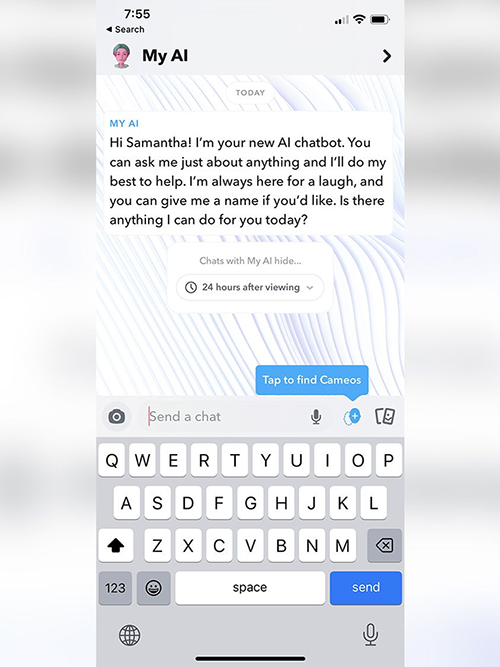

Snapchat’s most recent release of MyAI is a great example of this. Users can interact with a friendly chatbot directly on Snapchat. Is it necessary? Probably not. But AI is new and exciting, so Snapchat went ahead with it anyway:

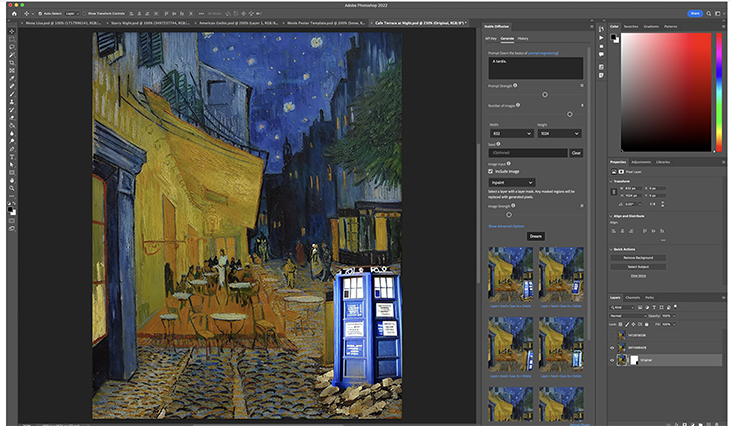

Adobe’s support for Stable Diffusion as an app in Photoshop is similar: you can leverage Stable Diffusion to create images directly in the place you work (in this case, Photoshop).

Slack’s support for different bots as apps is another example of this, where you can actually access different LLMs (not just ChatGPT) directly in Slack.

Many small startups or solo entrepreneurs are also trying to tack AI onto existing products via plugins and extensions. Similarly, the goal here is to embed ChatGPT functionality directly in tools that people already use. You’ve probably seen all the Chrome extensions that pull up ChatGPT in a sidebar, or perhaps you’ve seen the tools that embed directly in Gmail, LinkedIn, or Google Search. These are all examples of entrepreneurs seeking to tack AI onto existing products.

A twist on the first approach of tacking on AI is piping proprietary data into the AI models before exposing them to users. This is quite an interesting position for larger companies to take because they have the data needed to fine-tune models.

What is fine-tuning? Well, most LLMs allow for a fine-tuning process where you can provide the LLM with more examples of what you want it to be good at. Alternatively, some companies first run a search over their own data to find the most relevant pieces, then pass those pieces to an LLM along with the user’s question to get more “correct” results. This is an incredibly powerful concept for inserting AI into existing products because data is what makes models good. As an existing company, you can leverage your industry expertise to create a defensible moat for your AI feature.
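The search-then-prompt approach can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the word-overlap scoring is a deliberately naive stand-in for a real search engine, and the help-center snippets, function names, and prompt wording are all hypothetical.

```python
def retrieve(query, documents, top_k=2):
    """Rank documents by how many words they share with the query."""
    query_words = set(query.lower().split())
    scored = []
    for doc in documents:
        overlap = len(query_words & set(doc.lower().split()))
        if overlap:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)  # best matches first
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query, documents):
    """Put the retrieved proprietary data in front of the user's question."""
    lines = ["Answer using only the context below.", "Context:"]
    lines += [f"- {doc}" for doc in retrieve(query, documents)]
    lines.append(f"Question: {query}")
    return "\n".join(lines)


help_center = [
    "Returns are accepted within 30 days",
    "Shipping takes 3 to 5 business days",
    "Gift cards never expire",
]
print(build_prompt("how long does shipping take", help_center))
```

The resulting prompt is what you would send to the LLM. The model itself is unchanged; the advantage comes from the data you put in front of it.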

So what are some examples of this in practice?

Well, Shutterstock (like Adobe) allows you to generate images from text, but the underlying models (in this case, a combination of DALL-E and LG’s Exaone) were trained using datasets licensed from Shutterstock.

Quizlet launched Q-Chat, which leverages Quizlet’s existing content library to provide a better quiz bot experience.

Instacart’s ChatGPT functionality taps into Instacart’s existing catalog to generate more relevant recipes and grocery lists.

All of these companies have taken the chat interface ChatGPT provides and augmented it with proprietary data.

Beyond simply adding a smarter chatbot to your UI, companies are actually using ChatGPT to completely rethink the UX of web apps. In the past, when you used a website or web app, you would expect to click around and learn where things are before you could accomplish what you set out to do.

With ChatGPT, companies are actually starting to think about natural language prompts as a new way of interacting with apps. Rather than clicking buttons in a specific order, you’re telling the chatbot what you want to accomplish, and the chatbot will figure out how to do it.
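One way this can work behind the scenes is to have the model translate the user’s request into a structured action that the app then executes. The Python sketch below assumes the model has been prompted to reply with JSON naming an action and its arguments. That JSON shape, the action names, and the handler functions are all hypothetical, and the model call itself is left out.

```python
import json


def add_to_cart(item, quantity=1):
    return f"Added {quantity} x {item} to cart"


def create_contact(name):
    return f"Created contact {name}"


# Only actions listed here can ever run, no matter what the model returns.
ACTIONS = {"add_to_cart": add_to_cart, "create_contact": create_contact}


def run_model_action(model_output):
    """Validate the model's JSON reply and dispatch it to an app function."""
    try:
        request = json.loads(model_output)
    except json.JSONDecodeError:
        return "Sorry, I could not understand that request."
    if not isinstance(request, dict):
        return "Sorry, I could not understand that request."
    action = request.get("action")
    handler = ACTIONS.get(action) if isinstance(action, str) else None
    if handler is None:
        return "Sorry, that action is not supported."
    try:
        return handler(**request.get("args", {}))
    except TypeError:  # missing, unexpected, or malformed arguments
        return "Sorry, that request is missing some details."


# Pretend the model turned "add two bunches of basil" into this reply:
reply = '{"action": "add_to_cart", "args": {"item": "basil", "quantity": 2}}'
print(run_model_action(reply))  # Added 2 x basil to cart
```

Treating the model’s reply as untrusted input and checking it against an allow-list keeps a hallucinated action from doing anything in your product.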

We mentioned Instacart earlier when talking about leveraging proprietary data, but their chatbot also makes it easier to order the ingredients you need for a recipe. HubSpot released an experimental feature that layers a chatbot on top of their CRM so that you can talk to the chatbot and accomplish tasks. Perhaps someday when we hit GPT 10, we won’t even need the UIs that all of us use today.

Companies are also thinking about how to use AI to accomplish existing jobs more efficiently. Wendy’s is trying to use AI to take orders in drive-thrus. AutoGPT is trying to leverage GPT to enable a flexible system that can accomplish any task. Zapier NLA and Slack Workflows are coming up with ways to speed up workflows using GPT.

There are huge opportunities to leverage GPT beyond just writing blog posts or generating tweets.

Finally, some companies are doing away with the chatbot interface altogether and instead are using GPT to accomplish complex tasks behind the scenes with the click of a button. For example, Slack’s GPT integration includes a nice “summarization” button that instantly summarizes text.

You could probably create a chatbot and tell the chatbot to summarize text for you, but sometimes buttons are a faster path to value than typing the entire prompt out. Notion similarly has prompts as you type that guide you towards using their AI in the right way.

Clearbit in particular offers an interesting twist on using AI: they use it to summarize company descriptions and categorize them into industry codes. The output is simply the industry code, with no chatbot and no generated text.
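A pattern like this can be sketched as a fixed prompt plus strict parsing of the model’s reply. The Python below is not Clearbit’s implementation. The labels, prompt wording, and function names are invented for illustration, and the model call is omitted.

```python
# Hypothetical labels; a real system would use an actual industry code list.
ALLOWED_CODES = {"SOFTWARE", "RESTAURANTS", "RETAIL"}


def build_classification_prompt(description):
    """Ask the model for a single label instead of free-form text."""
    options = ", ".join(sorted(ALLOWED_CODES))
    return (
        f"Classify the company into exactly one of: {options}.\n"
        "Reply with the label only.\n"
        f"Company: {description}"
    )


def parse_industry_code(model_reply):
    """Accept the reply only if it is one of the allowed labels."""
    candidate = model_reply.strip().strip(".").upper()
    return candidate if candidate in ALLOWED_CODES else "UNKNOWN"


print(build_classification_prompt("We build invoicing tools for freelancers."))
print(parse_industry_code(" software.\n"))                # SOFTWARE
print(parse_industry_code("I think it is a restaurant"))  # UNKNOWN
```

The user never sees a prompt or a chat window. They see a clean value in a field, and anything the model makes up outside the allowed list is discarded.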

So as a product manager, how should you go about deciding how to incorporate AI into your product? It’s easy to think that you’re falling behind your competitors, but it’s important to remember all the first principles that guide your usual product roadmapping decision-making.

Don’t just tack AI onto your product because you can; make sure that it fits into the overall ethos of your product. Now, let’s be real: it’s probably a really good idea to think about how to incorporate AI into your product, but how you do it is critical.

This might be a controversial opinion, but chat isn’t always the best interface for interacting with a product, so don’t layer on a chatbot simply because you can. Instead, here are some steps you can walk through to decide whether or not to build AI into your product, and how you should do it:

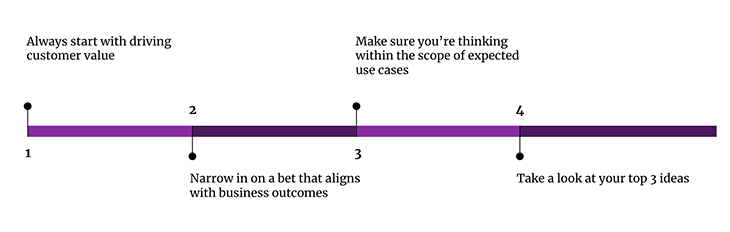

What are some common problems that your customers and users bring up time and time again? How are they blocked when leveraging your product? What additional features do they need to feel like superheroes?

Write down a list and order it by user pain. Put the things that cause the user the most pain, or have the most potential to relieve user pain, at the very top.

Now that you have a prioritized list focused on customer value, take a second pass at that list based on business outcomes. Is the CEO trying to expand into a new market? Is the focus on driving net new revenue or retention? What product areas are most critical?

Finding the overlap in the Venn diagram between user pain and business goals is where the magic happens.
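If it helps to make the two passes concrete, here is one way to sketch them in Python. The 1-to-5 scales, the idea names, and the choice to multiply the two scores (so that an idea only ranks highly when it does well on both) are assumptions for illustration, not a standard formula.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    user_pain: int      # 1 (minor annoyance) to 5 (severe blocker)
    business_fit: int   # 1 (off-strategy) to 5 (directly supports a company goal)


def prioritize(ideas, top_n=3):
    """Rank ideas by the overlap of user pain and business fit."""
    ranked = sorted(ideas, key=lambda idea: idea.user_pain * idea.business_fit, reverse=True)
    return ranked[:top_n]


backlog = [
    Idea("Summarize support tickets", user_pain=5, business_fit=4),
    Idea("Chatbot on the homepage", user_pain=2, business_fit=3),
    Idea("Auto-tag uploaded files", user_pain=4, business_fit=4),
    Idea("Dark mode", user_pain=3, business_fit=1),
]
for idea in prioritize(backlog):
    print(idea.name, idea.user_pain * idea.business_fit)
```

With these made-up scores, the top three are ticket summaries, auto-tagging, and the homepage chatbot, in that order.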

OK, if you really wanted to, you could just tack on a chatbot interface that is essentially ChatGPT in your product. But how do you know if that’s actually the right approach?

Well, this is where thinking through the surface area of your product, what typical use cases you cover, and your expected user flow is really important. If people typically interact with your product via chat (e.g. Snapchat or Intercom), it might not be too crazy to do this.

But if your users are interacting with your web app daily in a non-chat way (like the Adobe example), perhaps you can embed AI as a way to accomplish a job your user wants to accomplish rather than shoehorning a chatbot into the product.

Remember, features don’t come for free. If you expand your surface area beyond your usual use case, you’re going to have to support that surface area going forward.

Now that you’ve prioritized ways to truly drive customer value that aligns with your business goals and falls within the surface area of your product vision, you’re ready to think tactically. See if any of the top three ideas can actually be solved with AI.

Think about ways that you can build AI moats, either through proprietary data, or unique ways to chain LLMs together or auto-generate prompts that are more user-friendly. If everything comes together, AI is great! But if AI doesn’t solve your top three problems, be wary.

We can’t deny that our work lives (and even our personal lives) are going to change forever with this new wave of AI technologies. In fact, in my day job, I’ve started using AI every day! Specifically, I write a lot of SQL to analyze data, and AI has really helped me reduce the amount of repetitive code I have to write daily.

As a product manager, even if you aren’t ready to integrate AI into your product, it’s definitely time to get your hands dirty. Start adopting AI, learning the new user flows that it’s introducing, and thinking about how you can apply it in your organization.

Featured image source: IconScout
