Since becoming a solopreneur in 2022, I’ve had the pleasure of helping nine startups move to a more outcome-driven, product-led approach to product management. And they all shared a common desire — that their leaders build autonomous, empowered product teams.

Here are the five most important, sometimes surprising, lessons I learned. I promise I’ll steer clear of regurgitating the obvious stuff you’ve heard a hundred times already.

Who is it for? That is the single most important question to answer about your business.

Defining your ideal customer profile (ICP), finding the right persona to address and build for, and segmenting your target audience to identify your beachhead segment aren’t just marketing topics.

These are the fundamental strategic decisions that underpin your entire business model. And they should, a hundred percent, be under the purview of the founding team.

Defining and iterating on your ICP and your beachhead segment is a continuous process that should involve the entire leadership team.

But be mindful of these two terms.

Your ICP describes the hypothetical customer that would benefit the most from a company’s product or service and, in turn, provide the most value to the company.

The beachhead segment is a segment within the ICP that is in the most excruciating pain. You use a beachhead segment to gain traction, prove your value proposition, and establish a foothold before expanding to other segments.

Basically, the startups I worked with succeeded by first creating a point solution for a niche audience and expanding from there because:

In fact, I recently watched a talk by Sam Altman, which echoes my experiences perfectly:

“All of our successes started with capturing a large part of a small market. Many of our failures failed because their initial markets were too big. You want to find the smallest possible cohesive subset of your market, the most narrow smallest market you can find, with users that desperately need what you’re doing, and go after that first. Once you take over that market, then you can expand.”

Everyone struggles with focus and prioritization. Choosing one thing means temporarily closing the door on another, and that’s scary.

The problem with trying multiple things at once is that you’re not allowing yourself or your team to try hard enough at that one specific thing to learn conclusively whether it worked or not and why. By shooting out of too many barrels, you’re blocking yourself from learning.

Here’s the catch-22: startups struggle to pin down their ICP or beachhead early on because they lack real-world knowledge. They simply don’t know yet which niche has an overlapping set of unmet needs for them to address. They have two options:

I used to advise early-stage startups to choose option one, limiting the number of ICPs to 3–5. But in recent years, I have pivoted to option two. Here’s why:

Most early-stage startups I worked with had teams of fewer than 30 FTEs.

Even when limiting the potential ICPs to as few as three, the focus became too diluted, and our small teams struggled to detect patterns.

For a relatively unfamiliar ICP — depending on how niche you’re willing to go — it usually takes at least 10 interviews before you start seeing patterns.

Because within your self-defined niches, there can be a variety of “hidden” segments — some people will experience a pain point much more strongly than others. Even if you start hearing some things repeated after 10 interviews, this is just a first and relatively weaker signal.

So, you need to follow up with a variety of tests amongst that same niche audience. If you expand your ICP too soon, your test results will be noisy.

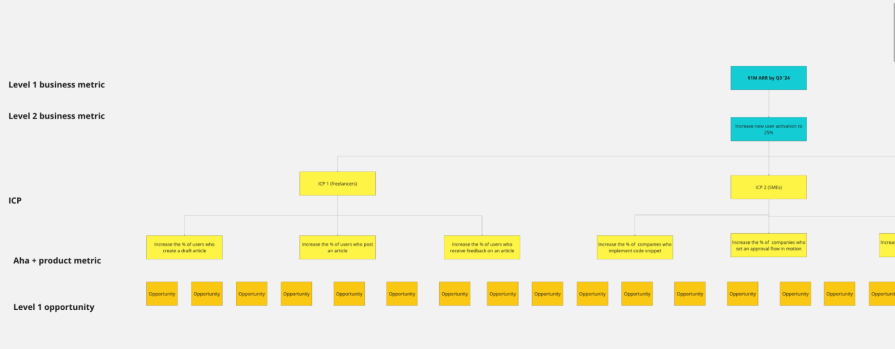

Anyone who has tried to merge 3+ ICPs into an opportunity solution tree can attest to how painfully broad that tree becomes:

This point about hyperfocus isn’t only valid for picking an ICP. It’s equally important when setting goals, deciding which projects to pursue, which acquisition channels to try, etc.

As my friend Maja Voje says, “Hyperfocus on what’s mission critical.”

I’ve never managed to help move a product organization from feature/stakeholder-driven to outcome-driven/product-led without the CEO very much on board, ideally as the main driver.

You can’t change a product team as an island. You’re trying to enable a systemic shift.

And I’ve often needed to lean on a CEO with conviction to help push that shift through.

Imagine a new head of product decides that the product team should no longer simply churn out the features that sales and marketing are asking for. Instead, they should explore the opportunities that will likely drive an increase in user activation for a niche ICP.

Sales and marketing will naturally buck, especially if the company’s business metrics have been showing slow but steady growth up until now.

Why trade in something that sort of works for something new and risky that the team is unfamiliar with?

You’ll never win in a dogmatic debate about “how product management should be done,” and it’s impossible to change someone’s mind if they’re not open to changing it.

So, if it’s the head of product versus the head of sales, with no one to break the tie, it’s hard to move forward.

My most successful projects were driven by a strong leader with a healthy risk appetite, willing to trade in small, incremental optimizations to create something truly outstanding and differentiated.

A great example of a company’s CEO changing the way his teams worked is Brian Chesky of Airbnb.

After realizing that Airbnb’s product development process was moving far too slowly, and teams were getting caught up in meeting marathons, busy work, and politics, Chesky himself was the primary driver for making bold, headline-making changes. Remember when he turned product into product marketing?

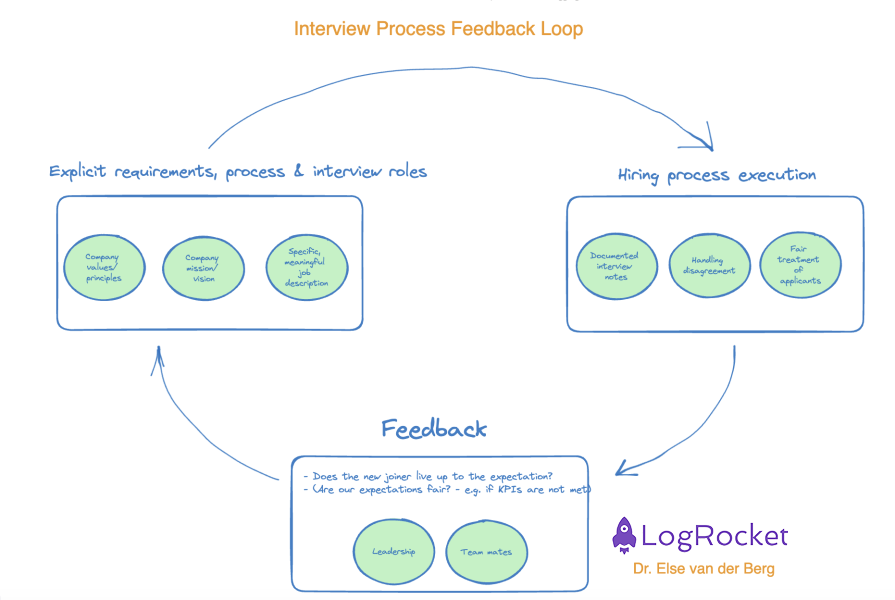

But, well, most startups have poor hiring processes.

And these poor hiring processes at most startups and scale-ups are the root cause of the weak teams they get stuck with.

I’m amazed by how informal and unprepared leaders are when they go into interviews with applicants. If you don’t allow gut feeling into your product management process, why accept it when you hire?

Before the interview, agree upfront:

After the interview:

After hiring:

Here is the last little nugget in this section: motivation is great, but experience does matter. If it’s between hiring three junior people and one very experienced person, I’d pick the latter.

Bad data will break your decision-making process and set you back light-years.

I’ve spent far too much time in my career diving into user analytics data and thinking, “This doesn’t make sense.”

We love to talk about how data-driven or data-informed we are, but data is easy to mess up.

Data tracking can be implemented incorrectly (implementation), reports or dashboards can be built poorly (execution), or the data can be interpreted incorrectly (interpretation).

This can end up costing you dearly:

Only those who have a deep understanding of the business and think critically can catch errors in data.

When you incorrectly implement segmentation or the SDK of your product analytics tool, things go sour. I’ve seen it all — firing off the wrong events at the wrong time, not logging certain users, and messing up the association of users to accounts.

Secondly, without a well-thought-out event tracking plan (what do we track, why, which properties should we store?) and documented naming conventions, things can quickly get out of hand.
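A tracking plan doesn’t need to be elaborate to be useful. Here is a minimal Python sketch of what “what do we track, why, and with which properties” could look like once it’s written down and checked automatically. Everything in it is an assumption for illustration: the `object_action` naming convention, the event names, the property names, and the `validate_event` helper are all hypothetical and not tied to any particular analytics tool.

```python
import re
from dataclasses import dataclass, field

# Hypothetical convention: snake_case "object_action", e.g. "report_exported".
EVENT_NAME_PATTERN = re.compile(r"^[a-z]+(_[a-z]+)+$")


@dataclass
class EventSpec:
    """One row of the tracking plan: what we track, why, and which properties."""
    name: str
    purpose: str
    required_properties: set[str] = field(default_factory=set)


# Illustrative entries only; these events and properties are made up.
TRACKING_PLAN = {
    spec.name: spec
    for spec in [
        EventSpec("account_created", "Measures sign-ups",
                  {"user_id", "account_id", "plan"}),
        EventSpec("report_exported", "Activation signal",
                  {"user_id", "account_id", "format"}),
    ]
}


def validate_event(name: str, properties: dict) -> list[str]:
    """Return a list of problems; an empty list means the event matches the plan."""
    problems = []
    if not EVENT_NAME_PATTERN.match(name):
        problems.append(f"'{name}' does not follow the object_action naming convention")
    spec = TRACKING_PLAN.get(name)
    if spec is None:
        problems.append(f"'{name}' is not in the tracking plan")
        return problems
    missing = spec.required_properties - set(properties)
    if missing:
        problems.append(f"'{name}' is missing properties: {sorted(missing)}")
    return problems
```

A check like this could run in tests or in a pre-release review, so that an event with a missing `account_id` is caught before it quietly breaks the association of users to accounts.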

I don’t believe you should always hire a data engineer before any other role on the data team to avoid such disasters — such statements usually lack real-world nuance.

But it is important that someone properly thinks through what the end state should look like.

I’m all for democratizing data, but there’s an obvious problem with having non-data-experts self-serve — messing up the filters, segmenting incorrectly, running the wrong report for the use case, and then coming to incorrect conclusions based on the wrong data.

Here, I’m thinking of Dr. Mario Lenz’s solid advice: “We allow everybody to play around with data — but key metrics come via “certified” dashboards from the data team.”

Last, you must grapple with the story behind the data you’re looking at.

Why do we see peaks or dips? Why are there outliers? Could the data be skewed in some way? What exactly is being measured here, and what does this tell us? What actions should we take, and what do we forecast will happen if we take those actions?

If either implementation or execution got messed up, interpretation will definitely go pear-shaped.

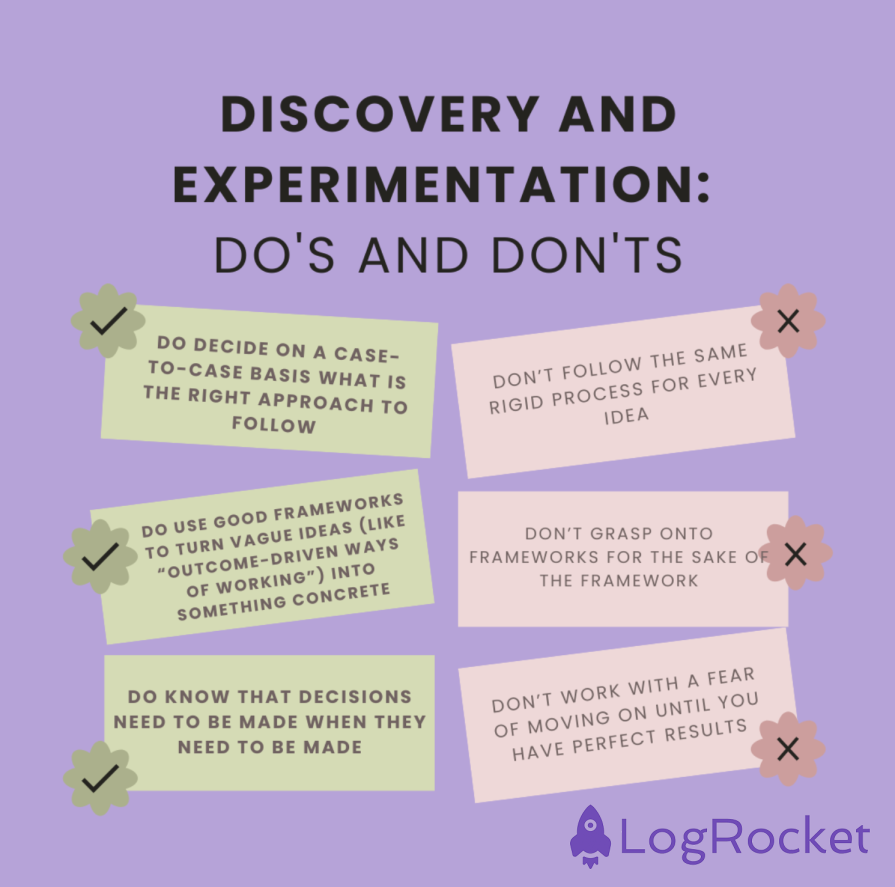

The goal of discovery and experimentation is to reduce the risk of building the wrong thing.

Building the wrong thing is bad for two main reasons. One, delivery is expensive. And two, delivering the wrong features leads to a bloated, undifferentiated product without a clear value proposition. Hard to use, even harder to sell.

But be mindful if you plan to invest in robust discovery and experimentation processes in an attempt to reduce the risk to zero. Discovery and experimentation also cost money. Good product managers, designers, and engineers aren’t cheap, and they usually have extra costs associated with them.

And we can never reduce our risk to 0.

Basically, the four big risks we’re trying to reduce with discovery and experimentation are:

However, we can only reduce these risks until a certain point; we will never be 100 percent sure.

So, to discover or not to discover?

Just remember that some things don’t require a full-blown discovery and experimentation process.

Below are the typical symptoms of a team getting lost in discovery and experimentation rabbit holes, and ways to fix them:

We must see every opportunity validated by at least 10 customers bringing it up, and we need at least a statistically significant A/B test giving us a thumbs up.

Ed Biden differentiates between red phases (“just ship the thing”) and blue phases (where we should take a more outcome-driven, experimentative approach).

Getting stuck in dogmatic debates with their colleagues in other departments “because this is the right way of doing product” — you’ll lose sight of the context and the goal.

Telling an immature team, “From now on, your work should be about increasing our free-to-paid rate by 10 percent by the end of next quarter,” is doomed to fail without any tools or explanation about how they should do this.

Frameworks like impact mapping or opportunity solution trees can help.

You risk facing prolonged delays, excessive analysis, and missed opportunities. The quest for perfection often results in paralysis where no progress is made, stifling innovation and responsiveness.

You often can’t afford to wait 6–12 months for your A/B test to give the green light. The most important thing to strive for is speeding up your time to insight. In many cases, you will learn faster by just shipping something, even with imperfect test results.
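To see why A/B tests can take so long at startup traffic levels, here is a rough back-of-the-envelope sketch in Python. It uses the standard normal-approximation sample size formula for comparing two conversion rates. The baseline rate, the lift, the traffic figure, and the function names are hypothetical assumptions chosen for illustration, not numbers from any of the startups mentioned here.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed per variant to detect a relative lift
    in a conversion rate (two-sided test, normal approximation)."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def weeks_to_finish(n_per_variant, weekly_visitors, variants=2):
    """Weeks needed if all weekly visitors are split across the variants."""
    return math.ceil(n_per_variant * variants / weekly_visitors)


# Hypothetical scenario: 5% baseline conversion, hoping to detect a 10% relative lift.
n = sample_size_per_variant(baseline=0.05, relative_lift=0.10)
print(n, "users per variant")
print(weeks_to_finish(n, weekly_visitors=1_000), "weeks at 1,000 visitors per week")
```

Under these assumed numbers, the test needs roughly 31,000 users per variant, which is more than a year of traffic at 1,000 visitors a week. Testing for a larger effect shortens the wait considerably, which is one reason shipping and observing can be the faster route to insight.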

I’ve had to learn these lessons the hard way, and I’m still sharpening and adding new lessons along the road.

In my experience, the founders who are the most successful at niching down, focusing on what’s mission-critical, investing in “the right amount” of discovery and experimentation, and building empowered product teams are the ones who have lived through painful failures in the past.

It’s helpful to read about someone’s experiences on paper.

But running into the wall yourself tends to have far more impact. Take this from someone who has run into many, many walls.
