Asma Syeda is Director of Product Management at Zoom, where she leads UCaaS integrations and drives innovation through AI and automation to augment the agent customer experience. She brings over 17 years of product management experience at companies including Dell, AAA, and Rogers, and excels at turning complex challenges into market-winning solutions. Asma is also the founder of the AI Guild and the founder of Women in AI North America. She’s passionate about diversity and creating meaningful connections between technology and customers.

In our conversation, Asma talks about the importance of responsible AI and shares best practices for companies to ensure their AI technology remains ethical, including by asking diverse questions and committing to algorithmic transparency. She discusses how she lays out risks and unintended consequences early in AI adoption conversations, as well as how she works to turn AI skeptics into passionate advocates.

The shift from traditional product management to more AI-driven development is indeed a big cultural change, especially in high-stakes industries like finance or telecom, where risk aversion is natural.

Early in my career, I was very excited about an AI solution that could revolutionize customer service in telecom, and I spent weeks perfecting the technical architecture. But, when I presented my findings to the team, I was met with crossed arms and skeptical faces. The head of customer service said, “Well, this sounds like you’re trying to replace us with bots or robots.” That moment taught me that AI transformation isn’t really about technology; it’s more about people.

From my experience leading these AI initiatives, I’ve learned that the journey starts with building shared understanding and trust among all stakeholders, whether they are engineers, business leaders, or designers. One of the biggest challenges is that AI feels like a black box, even to highly technical teams. My first strategy is transparency through education. I invest time in 1:1 sessions and cross-functional AI workshops where we demystify what AI can and cannot do, what data it needs, and what limitations to expect. This paints the big picture and sets realistic expectations for what AI can deliver.

The biggest mindset shift I advocate for is moving from “AI will change everything” to “AI will help us be better at what we already care about.” When teams see AI as an amplifier of their expertise rather than a replacement, that’s when you get true buy-in and the culture shift becomes sustainable.

Sure. I worked with a banking team at a previous job, and we began using AI to optimize loan approvals. It saved everyone over eight hours a week. Once people experienced how AI improved their lives, they wanted to find more areas to implement it, including a complex fraud detection system.

I have another example from a telecom project I worked on. I find it helpful to ensure that every AI initiative has a human champion from each discipline, and this was one of the first times that this experiment really paid off. The network engineer on the project became so passionate about predictive maintenance that he started leading demos, and when his colleagues and peers saw his excitement, their resistance completely melted away.

Many AI transformation projects involve a large volume of data and legacy systems that have been held onto for a long time. This often creates a lot of functional dependency. Balancing AI innovation with the realities of legacy systems is one of the most challenging and fascinating aspects of product leadership today.

Early in my career, I watched companies chase “AI for AI’s sake” — deploying models that solved non-critical issues. Today, the most impactful AI initiatives tie directly to core business metrics, whether that’s customer lifetime value, operational efficiency, or employee productivity.

In large enterprises, legacy systems are often treated like anchors, but they are also battle-tested repositories of real business logic: the organization’s domain expertise, crystallized in code. So the company holds onto them, even though those systems sometimes hold the company back from the future of AI.

I find that smaller companies are often faster to adopt AI because they don’t have that legacy dependency. Smaller companies may be better positioned to build AI from the ground up, whereas enterprise companies may build middleware integrations between legacy systems and then layer AI on top.

Regardless of the approach, you have to consider the problem you’re trying to solve. I’ve watched startups with pristine tech stacks build AI that is technically impressive but commercially irrelevant, and I’ve seen companies with 20-year-old mainframes build AI that generates millions in value.

There’s a lot of appetite for AI right now. There’s also a lot of noise, so it’s hard to find the true differentiators. It’s important to consider how technology is reshaping the way organizations think, and then how we can measure success.

During my 18 years of building AI products, I’ve identified a few universal truths. First, start with the problem, not the algorithm. Early in my career, I watched companies chase AI just for the sake of it and deploy models on every use case. That’s not wise, because the most impactful initiatives always tie directly to business metrics.

Traditional KPIs are lagging indicators of yesterday’s business model. When you force AI to optimize for customer acquisition cost, you might miss that AI could eliminate the need for acquisition entirely by turning existing customers into exponential value generators.

For example, at a bank I worked at, we mapped AI’s role to a North Star KPI, which was reducing customer effort. This forced teams to ask, “Does this chatbot actually simplify workflows, or is it just a shiny toy?” Mapping business KPIs and metrics to understand how you are transforming (not optimizing) is the best way to understand what use cases you can leverage AI for.

Yes, and this hits close to home for me. A few years ago, I led an AI risk engine project that the team was very proud of. This initiative was meant to eliminate bias and speed up the identification of customers who were approaching financial risks. By catching this early, we could help the org mitigate risk.

Three months after launch, we discovered that the AI solution was systematically filtering out qualified candidates from certain demographics. Without intending to, or realizing it, we had actually built our biases right into the algorithm. That failure taught me that good intentions aren’t enough. We need to be very deliberate about the structures and practices that we want to put in place to catch what we cannot see.

I recommend starting small and thinking big, because the beauty of responsible AI governance is that it doesn’t require a massive budget or a team of ethicists. Every company, from five people to 5,000, can start with just three fundamental practices.

The first is what I call the “diverse questioning table.” Before any AI project kicks off, I gather people with different perspectives, including technical folks, frontline employees, customer service reps, customers, and more. I ask who could be harmed by this, what could go wrong, and what assumptions we’re making about our users. I’ve seen million-dollar mistakes caught in these 30-minute conversations, and that is game-changing.

The second item is algorithmic transparency. That doesn’t mean your org should publish its code, but it should document and explain in plain English what its AI systems can do. How are decisions made, and what data is used? I like to say that if you can’t explain it to your grandmother, you probably don’t understand it well enough to deploy it safely.

The third is what I call “embedded accountability.” From my perspective, governance isn’t about bureaucracy or slowing innovation; it’s about building trust in society at large. Instead of having separate ethics committees that meet quarterly and just review some checkboxes, I prefer to build responsibility into existing workflows.

In places I’ve worked, we assigned what we called a “red team” to actively try to break the system by finding edge cases. We also do continuous monitoring and build in feedback loops, because AI models can degrade and behave unexpectedly over time, especially as user data changes. It’s important to set up mechanisms to track performance, detect biases or errors, and enable rapid response. This type of operational vigilance keeps AI safe in production because it’s not just about compliance; it’s about continuous learning.
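As a rough illustration of the monitoring and rapid-response idea, here is a minimal Python sketch. It is not a description of any system from these projects: the `PerformanceMonitor` class, the baseline accuracy, the window size, and the allowed drop are all hypothetical values chosen for the example.

```python
from collections import deque


class PerformanceMonitor:
    """Tracks accuracy over a rolling window and flags large drops.

    All thresholds here are illustrative assumptions, not recommendations.
    """

    def __init__(self, baseline_accuracy, window_size=100, max_drop=0.05):
        self.baseline = baseline_accuracy
        self.max_drop = max_drop
        # Only the most recent `window_size` outcomes are kept.
        self.outcomes = deque(maxlen=window_size)

    def record(self, prediction, actual):
        """Store whether the latest prediction matched the real outcome."""
        self.outcomes.append(prediction == actual)

    def current_accuracy(self):
        """Accuracy over the rolling window, or None if nothing is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_escalation(self):
        """True when accuracy has fallen more than `max_drop` below baseline."""
        accuracy = self.current_accuracy()
        return accuracy is not None and (self.baseline - accuracy) > self.max_drop


# Hypothetical feedback: 7 correct predictions followed by 3 wrong ones.
monitor = PerformanceMonitor(baseline_accuracy=0.90, window_size=10, max_drop=0.05)
for prediction, actual in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(prediction, actual)

if monitor.needs_escalation():
    print("Accuracy dropped below tolerance; follow the escalation path.")
```

In practice the same pattern could track fairness or error metrics instead of accuracy; the point is that the check runs continuously and feeds a clear escalation path.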

By creating simple checklists and clear escalation paths, and celebrating teams that catch problems early, responsible AI becomes a part of the company’s culture.

This is a very relevant challenge. I’ve been in those pressure cooker moments where the CEO says, “We need an AI feature launched in six weeks, or we lose to our competitor.” In my early career, I would have just said “yes,” and worried about ethics later, but I learned that is the most expensive approach. Fixing ethical issues post-launch costs around 10x more than addressing them from the start. The key is to make ethics an integral part of the product development lifecycle, rather than treating it as a checkbox.

The first strategy is to bring ethical considerations forward in development. This means involving different perspectives early on, and sometimes even looping in external experts to flag potential risks or unintended consequences. With this approach, you get many sets of eyes looking at the same thing. I also front-load the hard conversations in project kickoffs. I’ll present nightmare scenarios, which are real-world examples of AI failures from other companies and their business impact.

Then, to keep up with ambitious timelines, I use ethical MVPs. Before building the full AI system, we prototype the riskiest ethical scenario first so that we can demonstrate fairness across user groups and explain decisions clearly. This actually speeds up development because it enables us to catch fundamental flaws before writing a thousand lines of code.

I also recommend regular AI impact assessments, which are similar to environmental impact studies. Every six months, we evaluate who this AI is actually affecting. Are the outcomes fair across different groups? What unintended consequences have emerged?
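One simple way to start answering the fairness question is to compare outcome rates across groups. The Python sketch below is only an illustration under stated assumptions: the group names, the decision data, and the helper functions are hypothetical, and the 0.8 cutoff borrows the common “four-fifths” rule of thumb rather than anything described in these projects.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group approval rate to the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical decisions, invented purely for illustration.
decisions = (
    [("established_credit", True)] * 80
    + [("established_credit", False)] * 20
    + [("new_to_country", True)] * 30
    + [("new_to_country", False)] * 70
)

rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)

# Assumed rule of thumb: a ratio below 0.8 warrants a closer look.
flagged = ratio < 0.8
print(rates, round(ratio, 3), "review needed" if flagged else "ok")
```

A low ratio does not prove the system is unfair, but it is a cheap signal that tells the team where to look during an impact assessment.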

Absolutely. In one of my telecom projects, we were working on a risk engine, and found it actually eliminated people who were new to a certain country. This group of consumers was trying to establish itself in a new location, but these people didn’t have a line of credit or a credit score. The ethics and compliance guidelines we had built into our system were working against those people, and we lost a lot of customers because the AI didn’t approve them.

The purpose of this project was to speed up the approval process, so this was an ironic scenario. I encourage all product owners and product managers to be responsible AI champions. It makes a big difference. When leaders set the tone that responsible AI is non-negotiable, it encourages the whole team to prioritize long-term impact and speed.

Everyone is just obsessed with AI right now. Often, we use AI in our workstreams and have different AIs that we can use depending on the task. We might wonder if ChatGPT is better than Perplexity, for example, but they essentially do the same thing. The real question is, “How is this making me successful in something that I really care about?” That’s what companies should be asking, too. I’ve seen companies spend an incredible amount of money building sophisticated AI that users try to bypass because it solves the wrong problem beautifully.

But as product leaders, our job is to cut through the noise and ask, “Does this AI capability truly create meaningful value for our users and business, or is it just tech novelty?” My first filter is what I call the “so what?” test. Instead of asking myself, “Can AI do this?” I ask, “Should AI do this?”

More importantly, I ask what will happen if AI does this perfectly, and what will happen if it fails completely. Here’s the kicker: if the answer to failure is that users shrug and use the old way, you’re building noise, not differentiation. But if the answer to success is that users can’t imagine going back, that’s when you know you’re onto something transformative.

As AI evolves, product leaders should be cognizant of how they approach AI strategies and what signals they should watch for to avoid vendor lock-in or shallow integrations. Most of the time, we try to layer AI on top of our existing systems. We try to integrate what we have with some kind of shiny AI tool, and that’s a constant dilemma. The real question is to understand what we should control and what we should never control.

Many companies are building what they should be buying and buying what they should be building. In other words, companies are investing in building AI infrastructure when they should be buying it, because infrastructure becomes a commodity after a certain period of time. What they should really be building and investing in are the models themselves, such as LLMs that can be tweaked and tailored to their business logic.

So, stop trying to build everything and buy nothing. Start asking, “What makes us unique, and how do we double down on that while letting others handle the commodity work?” That’s not strategy — that’s survival.

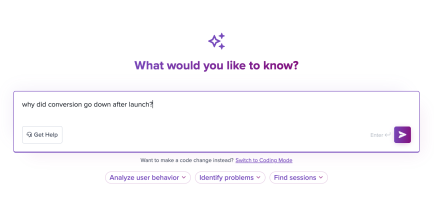

LogRocket identifies friction points in the user experience so you can make informed decisions about product and design changes that must happen to hit your goals.

With LogRocket, you can understand the scope of the issues affecting your product and prioritize the changes that need to be made. LogRocket simplifies workflows by allowing Engineering, Product, UX, and Design teams to work from the same data as you, eliminating any confusion about what needs to be done.

Get your teams on the same page — try LogRocket today.

Melissa Douros talks about how finserv companies can design for the “Cortisol UI“ by building experiences that reduce anxiety and build trust.

Introducing Ask Galileo: AI that answers any question about your users’ experience in seconds by unifying session replays, support tickets, and product data.

The rise of the product builder. Learn why modern PMs must prototype, test ideas, and ship faster using AI tools.

Rahul Chaudhari covers Amazon’s “customer backwards” approach and how he used it to unlock $500M of value via a homepage redesign.