As a product manager, you’ll face scenarios where your product team has created multiple variations of a design or some copy and it isn’t clear which variation would be best. The most reliable way to make sure that the best variation is chosen is to run a well-designed test.

Although some product teams are put off by the time that’s needed to do this, it’s usually faster than debating it and definitely faster than if you were to choose the wrong variation first (not that you’d even know). It’s also definitive — you’ll get quantitative proof indicating which version is best, which you’ll never get through having a debate.

There are two ways to approach this: you can use A/B testing, which most people are familiar with, or you can use multivariate testing, which most people avoid learning about properly (big mistake) because they believe that it’s complicated (it isn’t).

In this article, we’ll learn what multivariate testing is, how it compares to A/B testing (spoiler: it’s actually not that much more complicated), and why it’s great for product management.

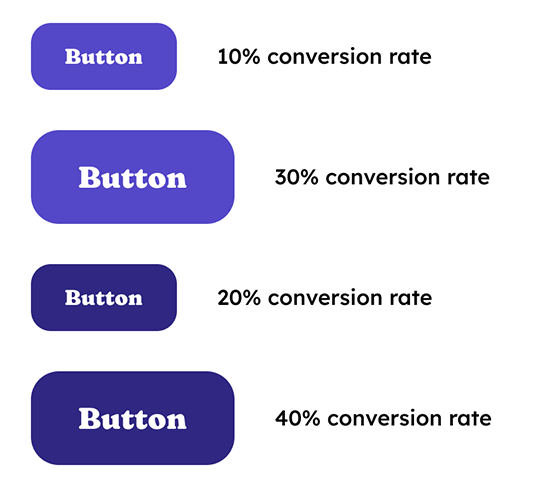

Multivariate testing is a type of testing where multiple variables are tested at once, unlike A/B testing where only one variable is tested at a time. It’s used to definitively identify which design, snippet of copy, or combination of the two is the best out of multiple variations.

Let’s take a deeper look.

To better understand the advantages that multivariate testing has over A/B testing, let’s clarify what A/B testing is and when it’s less useful.

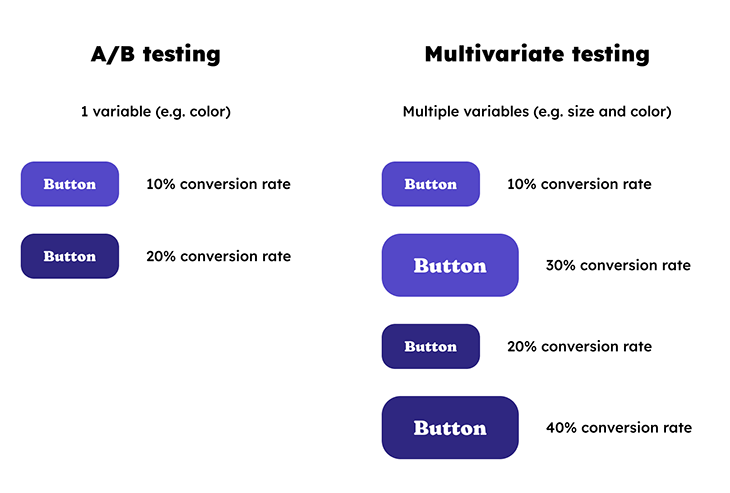

With A/B testing, we’d test how well version B compares to version A. Version B would be identical to version A apart from one change, so that we can accurately measure and observe the impact of that change. If we were to test additional variants of that change, then it’d essentially be an A/B/C/etc. test because, still, only one variable is changing.

If there were multiple changes or variables, it’d be a multivariate test. With multivariate testing, we test every combination of changes to ensure that the best one is revealed. Failure to do so would mean never finding out if an untested combination of changes would’ve been better.

It’d also be impossible to determine the impact of each change, so if we were to decide that there’s room for improvement, we wouldn’t be able to move forward without moving backward first since we wouldn’t understand which changes were good and which changes were bad.
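When every combination has been tested, the impact of each individual change can be estimated by averaging the results across all combinations that include it. Here’s a minimal sketch of that idea in Python. The conversion rates are made-up numbers for illustration, and `average_by_variant` is a hypothetical helper rather than something a particular testing tool provides.

```python
from collections import defaultdict

# Made-up conversion rates for each tested combination of (size, color).
results = {
    ("medium", "blue"): 0.10,
    ("medium", "dark blue"): 0.12,
    ("large", "blue"): 0.11,
    ("large", "dark blue"): 0.15,
}
variable_names = ("size", "color")


def average_by_variant(results, variable_names):
    """Average the conversion rate over every combination containing a variant."""
    rates_by_variant = defaultdict(list)
    for combination, rate in results.items():
        for name, variant in zip(variable_names, combination):
            rates_by_variant[(name, variant)].append(rate)
    return {key: sum(rates) / len(rates) for key, rates in rates_by_variant.items()}


averages = average_by_variant(results, variable_names)
for (name, variant), rate in averages.items():
    print(f"{name}={variant}: {rate:.3f}")
```

If one combination were missing, the averages for its variants would be based on fewer results than the others, which is why leaving combinations out makes the individual changes hard to compare.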

In short, A/B testing is less useful when there are multiple variables involved since they’d have to be tested one after the other (which takes longer), whereas multivariate testing handles all variables at once. So, what can be accomplished using several consecutive A/B tests can also be accomplished using a single multivariate test (which is faster), assuming that we run a fair experiment.

One of the reasons why multivariate testing is so great for product management — in addition to the benefits mentioned above that are more focused on the end-user — is that it helps product teams settle debates quickly. In short, it boosts productivity and ensures that product teams aren’t wasting too much time debating which choices to make when a simple test can reveal it all.

In addition to this, multivariate testing facilitates a more democratic product design culture where everyone’s ideas are put to the test (no pun intended) rather than being outright rejected.

I wouldn’t necessarily describe multivariate testing as complicated, just more complicated than A/B testing. There’s definitely a misconception that multivariate testing is tricky and time-consuming, but it’s just not true — here’s all you’d need to consider:

First of all, more care is needed. To get the full story, we mustn’t leave out any combinations (as mentioned above). For example, if we were to test two variables (e.g., size and color, as shown in the image above) and both variables had two variants (e.g., medium and large, and blue and dark blue), that would total four combinations (2 × 2). The more combinations there are, the easier it is to forget one (or several).
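One way to avoid forgetting a combination is to generate the list rather than write it out by hand. The sketch below does this for the size and color example using Python’s standard `itertools.product`. The `variables` dictionary and the `all_combinations` helper are names I’ve made up for illustration.

```python
from itertools import product

# The two variables from the example, each with two variants.
variables = {
    "size": ["medium", "large"],
    "color": ["blue", "dark blue"],
}


def all_combinations(variables):
    """Return every combination of variants as a list of dictionaries."""
    names = list(variables)
    return [dict(zip(names, combo)) for combo in product(*variables.values())]


combinations = all_combinations(variables)
for combination in combinations:
    print(combination)
print(f"{len(combinations)} combinations to test")
```

Adding a third variable with three variants to `variables` would grow the list to 12 combinations, which is a useful sanity check before committing to a test.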

Second of all, more test subjects are needed to get enough data on each variant. This means that products with few users or willing participants are unlikely to benefit from multivariate testing. To add to this, when running multivariate tests on a live app or website, it’s common practice to only show variants to a fraction of users to reduce disruption, so a larger sample size is needed to execute multivariate tests effectively.
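To get a rough feel for the numbers, here’s a sketch that uses the standard sample size approximation for comparing two conversion rates, then scales it by the number of combinations and the fraction of users who see the test. The rates, the 5 percent significance level, and the 80 percent power are assumptions I’ve chosen for illustration, and the calculation doesn’t correct for comparing many combinations at once, so treat the result as a ballpark figure.

```python
from math import ceil
from statistics import NormalDist


def users_per_combination(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate users needed per combination to detect the given lift."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_power = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


def total_traffic_needed(per_combination, num_combinations, exposed_fraction):
    """Total visitors needed when only a fraction of them enter the test."""
    return ceil(per_combination * num_combinations / exposed_fraction)


# Assumed scenario: 10% baseline conversion, hoping to detect a lift to 12%,
# four combinations, and only 20% of visitors included in the test.
per_combination = users_per_combination(baseline_rate=0.10, expected_rate=0.12)
total = total_traffic_needed(per_combination, num_combinations=4, exposed_fraction=0.2)
print(f"Users per combination: {per_combination}")
print(f"Total visitors needed: {total}")
```

Smaller expected lifts push the required numbers up quickly, which is why products with few users tend to struggle with multivariate tests.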

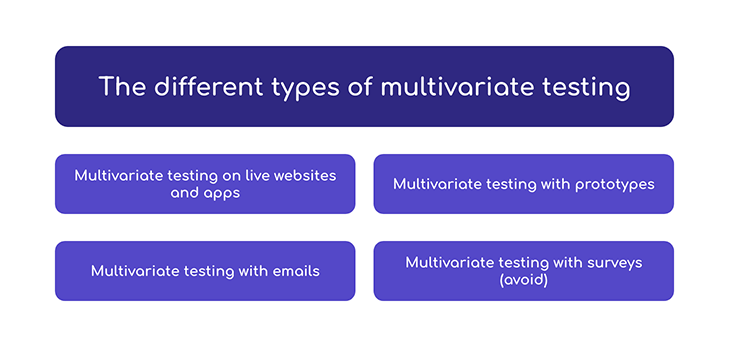

The best way to understand the different ways that we can utilize multivariate testing is to look at the different types of multivariate testing tools that exist:

Firstly, there are multivariate testing tools that we can install on live websites — think traditional analytics tools but with the ability to measure/observe/compare multiple variations.

Naturally, these are useful for experimenting on websites that are already on the internet. They work by enabling us to create variants of our design and/or its content using a visual editor. The different variations are then shown to a segment of different users, after which the best variation is revealed. As a bonus, some of these tools can automatically generate the necessary variations in accordance with the changes made in the visual editor.
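The tools handle this assignment for us, but it helps to know roughly what’s going on. A common approach is to hash each user’s ID so that the same user always lands in the same group. The sketch below is my own illustration of that idea, not the actual implementation or API of any tool mentioned here, and `assign_variation`, the experiment name, and the 20 percent exposure are all assumptions.

```python
import hashlib


def assign_variation(user_id, variations, exposed_fraction=0.2,
                     experiment="homepage-test"):
    """Deterministically assign a user to a variation, or None if not in the test."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Turn the first 32 bits of the hash into a number in the range [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    if bucket >= exposed_fraction:
        return None  # This user keeps seeing the existing experience.
    # Split the exposed range evenly between the variations.
    index = int(bucket / exposed_fraction * len(variations))
    return variations[min(index, len(variations) - 1)]


variations = ["medium/blue", "medium/dark blue", "large/blue", "large/dark blue"]
for user_id in ["user-1", "user-2", "user-3"]:
    print(user_id, assign_variation(user_id, variations))
```

Because the assignment depends only on the experiment name and the user ID, a returning user sees the same variation every time, which keeps the experiment fair.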

Google Optimize (which was free, although Google discontinued it in September 2023), Optimizely Web Experimentation, VWO, Convert, Freshworks, and Crazy Egg are the multivariate testing tools that I think are worth looking at (some of them offer more than testing though).

To test more complex changes where alternative HTML or JavaScript is needed, a developer would be required. This is really tricky and requires a lot of resources (developer bandwidth, API knowledge, etc.), and it’s the reason why teams think that multivariate testing is too complicated. However, thanks to modern advancements in CSS and the fact that we now lean toward the “one change at a time” approach, we don’t really have to worry about that anymore.

Some of the aforementioned tools work for mobile apps too. The approach from a technological standpoint is different (the tech is deployed server-side instead of client-side), but overall it’s the same concept.

Next, prototype testing tools. These essentially enable us to test the prototypes that our product team makes with prototyping tools such as Figma or Sketch. They’re handy for scenarios where we don’t have a live app or website yet, and also for testing ideas before implementation (which is faster and cheaper).

The other upside is that UX designers typically have (or should have) prototype testing tools in their toolbox already, used for other types of testing.

The downside is that they require recruited participants, whereas testing on live apps and websites happens behind the scenes. I’d recommend making the most of having recruited participants by including additional qualitative questions such as, “What could we have done better?” (perhaps there’s another change that you could have tested, but didn’t even think of?).

As far as I’m aware, Maze is the only prototype testing tool that offers true multivariate testing where participants are only shown one version. It’s advertised as A/B testing but we can in fact include as many variations as we want (although designers will need to carefully craft all of them). Other tools claim to offer A/B/multivariate testing, but it’s actually preference testing (where participants are shown all variations at the same time, which skews the results).

We can also run multivariate tests on email campaigns. In addition to experimenting with design and content, this also enables us to experiment with different subject lines, and we don’t even need any special tools for this because almost all email marketing tools have these features.

Also, email marketing tools are typically able to deliver the winning variation automatically after multivariate testing on a subset of subscribers first. This can boost the success rate of email campaigns quite significantly with minimal effort.
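Under the hood, the “send the winner” feature boils down to comparing a metric such as open rate across the variations in the test send. Here’s a simple sketch of that comparison. The numbers are made up, `pick_winner` is a hypothetical helper, and real email tools may use different metrics or add statistical checks that aren’t shown here.

```python
def pick_winner(results):
    """Return the variation with the highest open rate, plus all open rates.

    `results` maps a variation name to (emails_sent, emails_opened).
    """
    open_rates = {
        name: opened / sent
        for name, (sent, opened) in results.items()
        if sent > 0
    }
    winner = max(open_rates, key=open_rates.get)
    return winner, open_rates


# Made-up numbers from a hypothetical test send to a subset of subscribers.
test_send = {
    "subject A + layout 1": (500, 110),
    "subject A + layout 2": (500, 95),
    "subject B + layout 1": (500, 140),
    "subject B + layout 2": (500, 120),
}
winner, open_rates = pick_winner(test_send)
print(f"Send to remaining subscribers: {winner}")
```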

Lastly, survey tools enable product teams to share snippets of copy, upload screenshots of designs/design elements, and even embed functional prototypes in some cases; however, these are more akin to the preference tests mentioned earlier since respondents are shown all variations at once. I’d recommend avoiding this approach because we just won’t be able to yield realistic data from such a fake scenario.

Plus, even the best survey tools (Typeform for example) don’t provide analytics on the level that multivariate testing tools do. They’re great, but not for multivariate testing.

All in all, the slight added complexity that comes with multivariate testing is definitely worth it if it means being able to test multiple variables. You’ll get a definitive answer in a fraction of the time that it would take to run consecutive A/B tests. This isn’t to say that A/B testing is bad; just save it for scenarios where only one variable needs to be tested.

Choosing A/B testing when multivariate testing is the right approach, or not doing any kind of testing at all, leaves us in the dark about which designs or snippets of copy we should use. However, this doesn’t mean that we should test every little thing. Time is money, so we must pick our battles and accept that sometimes the project just needs to move forward. For things of lesser importance, it’s fine for experts to rely on relevant heuristics.

And finally, no matter how quick and easy preference testing is, avoid it completely as it just doesn’t put the respondent in a real-enough scenario for their feedback to be credible.

Featured image source: IconScout
