Back in November 2022, I read a blog post called “Introducing ChatGPT.” The link spread like wildfire, everywhere from Twitter to LinkedIn. A few days later, I downloaded the app and started chatting with what they called an LLM. At the time, no one knew that AI would become such an important part of our day-to-day life.

Since then, a lot has changed in the field. From generative AI to agentic AI, new technologies have helped technologists build AI-powered products for a wide range of businesses. This rapid progress has put product managers in an interesting position, as many teams are now being asked to build AI solutions.

But does every product actually require an AI solution? I don’t think so. In this post, I want to share my thoughts on how to identify which problems call for AI versus rule-based automation, why not every solution needs AI, and when a hybrid approach makes the most sense.

This article proposes a practical decision framework to help you determine whether a problem should be solved using automation, AI, or a hybrid of both.

While automation and AI solutions differ technically in many ways, the core distinction comes down to determinism versus probability.

A deterministic system produces the same output every time for a given input:

Input → fixed logic → output

Examples include tax calculations, interest computation, and leave balance updates.

A probabilistic system, on the other hand, produces an output based on likelihood rather than certainty:

Input → inference → best-guess output

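Examples include judging whether a message sounds helpful or rude, choosing which response best answers a user’s question, and deciding what content is most relevant to a user.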

In these cases, two people or two models may produce different but equally acceptable answers.
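To make the contrast concrete, here is a minimal Python sketch. The function names, the 18% tax rate, and the hard-coded tone weights are illustrative assumptions, not taken from any real system; the "model" is a toy stand-in that simply samples a label.

```python
import random

TAX_RATE = 0.18  # hypothetical flat rate, for illustration only


def compute_tax(amount: float) -> float:
    """Deterministic: fixed logic, so the same input always gives the same output."""
    return round(amount * TAX_RATE, 2)


def guess_tone(message: str, rng: random.Random) -> str:
    """Probabilistic stand-in: returns a best-guess label drawn by likelihood.

    A real model would infer the weights from the message. Here they are
    hard-coded assumptions (the message is not inspected) so the sketch
    stays self-contained.
    """
    labels = ["helpful", "neutral", "rude"]
    weights = [0.6, 0.3, 0.1]
    return rng.choices(labels, weights=weights, k=1)[0]


if __name__ == "__main__":
    # Identical on every call.
    print(compute_tax(1000.0), compute_tax(1000.0))

    # The same message may get different labels from run to run.
    rng = random.Random()
    print([guess_tone("Thanks, I'll look into it.", rng) for _ in range(5)])
```

Calling `compute_tax` twice with the same amount can never disagree, while repeated calls to `guess_tone` can, which is exactly the behavior users will experience in the product.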

As a PM, choosing between these two approaches requires intention, because they create very different product behaviors: one is consistent and traceable, the other adaptive but variable.

Choosing incorrectly can have a meaningful impact on user trust, user experience, operational cost, debugging difficulty, and long-term product scalability.

This is a product decision, not an engineering one, which means you need to make the choice deliberately and with a clear understanding of the tradeoffs.

In most cases, automation should be your default choice unless there’s a strong reason not to use it. AI shouldn’t be used simply for the sake of it.

Automation works best when the problem is rule-based, predictable, and repeatable. In these cases, AI rarely adds value. Instead, it introduces unnecessary risk.

Below are several signals that indicate a problem is well-suited for automation.

In rule-based problems, there’s exactly one correct answer. Variability isn’t acceptable and typically increases risk. Tax calculations, interest computation, or leave balance updates are some good examples.

When different users provide the same inputs, the expectation is the same result every time. If variability would be considered a bug rather than an improvement, automation is the right solution.

If you can clearly describe the logic without relying on judgment or interpretation, the problem is a strong candidate for automation. Statements such as “if amount is greater than X, require approval,” “if a user is inactive for 14 days, send a reminder,” or “if the SLA is breached, escalate the ticket” are unambiguous.
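Rules like these translate almost word for word into code. The sketch below is one possible rendering in Python; the function names and the 10,000 approval threshold (the "X" in the first rule) are assumptions made for illustration.

```python
APPROVAL_THRESHOLD = 10_000  # the "X" in the approval rule; hypothetical value
INACTIVITY_DAYS = 14         # from the reminder rule


def requires_approval(amount: float) -> bool:
    """If amount is greater than X, require approval."""
    return amount > APPROVAL_THRESHOLD


def should_send_reminder(days_inactive: int) -> bool:
    """If a user is inactive for 14 days, send a reminder."""
    return days_inactive >= INACTIVITY_DAYS


def should_escalate(hours_open: float, sla_hours: float) -> bool:
    """If the SLA is breached, escalate the ticket."""
    return hours_open > sla_hours
```

Each function is a single comparison that stakeholders can read, review, and sign off on, which is a good informal test of whether a problem is rule-based.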

When logic can be documented, reviewed, and agreed upon by stakeholders, AI doesn’t add value. It only introduces uncertainty.

In domains where mistakes lead to financial loss, legal exposure, or compliance violations, reliability matters more than sophistication. Automation is safer because its behavior is predictable, errors can be traced back to specific rules, and fixes are straightforward to implement and verify.

AI systems, by contrast, fail probabilistically and are harder to debug, which makes them risky in high-stakes scenarios.

Rule-based problems typically have a limited and well-understood set of exceptions. Teams know what those edge cases are, how often they occur, and how to handle them.

When edge cases are bounded, rule-based systems scale more effectively than AI. Introducing AI in these situations often increases complexity without reducing failure modes.

If users, auditors, or regulators may ask, “Why did this happen?”, automation is the safer choice. Rule-based systems provide clear, inspectable answers: a specific rule was triggered under specific conditions.

AI systems often cannot offer this level of transparency. When explainability is a requirement, AI becomes a liability rather than an advantage.
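One way to picture the "Why did this happen?" requirement is a rule engine that returns the name of the rule that fired alongside the decision. This is a minimal sketch under assumed names: the `Rule` and `Decision` types, the two sample rules, and the expense-request fields are all hypothetical.

```python
from typing import Callable, NamedTuple


class Rule(NamedTuple):
    name: str
    condition: Callable[[dict], bool]
    action: str


class Decision(NamedTuple):
    action: str
    reason: str  # the inspectable answer to "why did this happen?"


# Hypothetical rules for an expense request; the first matching rule wins.
RULES = [
    Rule("high_value_expense", lambda r: r["amount"] > 10_000, "require_approval"),
    Rule("missing_receipt", lambda r: not r["has_receipt"], "reject"),
]


def decide(request: dict) -> Decision:
    """Evaluate rules in order and record which one produced the outcome."""
    for rule in RULES:
        if rule.condition(request):
            return Decision(rule.action, f"rule '{rule.name}' matched")
    return Decision("auto_approve", "no rule matched")


if __name__ == "__main__":
    print(decide({"amount": 12_000, "has_receipt": True}))
```

Because every decision carries its reason, an auditor can trace an outcome to a named rule, and a fix means changing that one rule and rerunning the same inputs.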

AI is the better choice when a problem cannot be reduced to fixed rules and instead requires interpretation, judgment, or pattern recognition. These are probabilistic problems, where outputs are based on likelihood rather than certainty.

These situations tend to share a few common signals.

When there’s no single “right” answer, the problem is probabilistic by nature. Different people may arrive at different but equally acceptable outcomes, and in these cases, variability is expected and often desirable.

Decisions such as whether a message sounds helpful or rude, which response best answers a user’s question, or what content is most relevant to a user depend heavily on interpretation and context. When disagreement is normal, rule-based systems start to break down because they’re designed to enforce one outcome, not manage ambiguity.

If you struggle to write rules without immediately adding exceptions, it’s a strong signal that the problem isn’t well suited for automation.

Frequent rule updates usually indicate that the system is trying to approximate human judgment using static logic, which does not scale. AI performs better in environments where context matters, inputs change frequently, and exceptions are common rather than rare.

Text, images, audio, and free-form user behavior are poor fits for automation. Writing deterministic rules for natural language or visual interpretation quickly results in fragile and incomplete systems.

AI is specifically designed to handle these inputs through natural language understanding, image and video classification, and behavioral pattern detection.

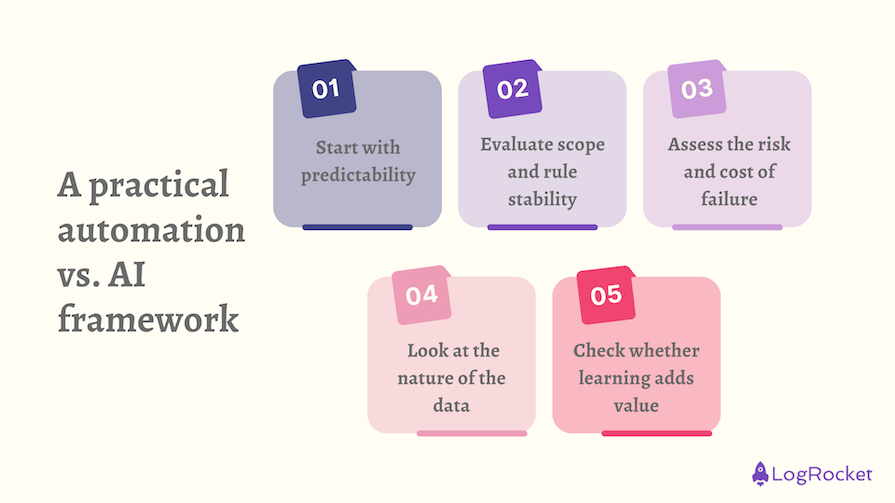

As a PM, you don’t need a complex model to decide between automation and AI. You need a clear set of questions that force intentional trade-offs. The goal is to choose the simplest system that reliably meets user expectations, not the most sophisticated one.

After building multiple automation and AI products, here’s the framework I use to decide between the two:

Ask whether the same input should always produce the same output. If users expect consistency and would consider variability a defect, the problem is deterministic, and automation is the right starting point.

If users can accept different but reasonable outcomes, the problem may justify AI.

Ask whether the rules are clearly defined and stable over time. If logic can be written, documented, and rarely changes, automation will scale better and be easier to maintain.

If rules require frequent updates, exceptions keep growing, or context matters more than thresholds, AI becomes the more practical choice.

Consider what happens when the system is wrong. If errors lead to financial loss, legal exposure, or compliance violations, that’s a strong signal to automate.

If errors are low-impact and recoverable, AI becomes a more acceptable option.

Structured inputs like numbers, enums, and fixed fields are ideal for automation. Unstructured inputs like text, images, or behavior patterns are where AI provides real value.

Trying to automate unstructured data usually results in fragile systems that are expensive to maintain.

Ask whether the system gets meaningfully better as it processes more data. If performance improves over time through learning, AI can create a long-term advantage.

If outcomes only change when rules are manually updated, automation is sufficient.
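The five questions above can be sketched as a simple checklist in Python. Treat this as a thinking aid rather than a scoring model: the `ProblemProfile` fields, the equal weighting of the questions, and the cutoffs for "automation", "ai", and "hybrid" are all assumptions I'm making for illustration.

```python
from dataclasses import dataclass


@dataclass
class ProblemProfile:
    needs_consistent_output: bool  # same input must always give the same output
    rules_stable: bool             # logic can be written down and rarely changes
    errors_costly: bool            # financial, legal, or compliance impact
    inputs_structured: bool        # numbers, enums, fixed fields
    improves_with_data: bool       # performance gets better through learning


def recommend(p: ProblemProfile) -> str:
    """Count the signals pointing to automation and map them to a recommendation.

    The cutoffs (4 or more means automation, 1 or fewer means AI) are arbitrary
    illustrative choices; mixed signals fall through to a hybrid approach.
    """
    automation_signals = sum([
        p.needs_consistent_output,
        p.rules_stable,
        p.errors_costly,
        p.inputs_structured,
        not p.improves_with_data,
    ])
    if automation_signals >= 4:
        return "automation"
    if automation_signals <= 1:
        return "ai"
    return "hybrid"


if __name__ == "__main__":
    leave_balance = ProblemProfile(True, True, True, True, False)
    content_ranking = ProblemProfile(False, False, False, False, True)
    print(recommend(leave_balance), recommend(content_ranking))
```

In practice, you may want to weight the cost-of-error question more heavily than the others, since a single high-stakes failure can outweigh several signals pointing toward AI.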

Whether we like it or not, AI is changing the product management landscape. But amidst this change, strong PMs are the ones who understand when to use AI and when simple automation is enough.

That judgment comes from first-hand experience of building products in both domains and learning through practice. It also comes from thinking from first principles: asking whether a problem is deterministic or probabilistic, whether rules are stable or constantly changing, whether errors are tolerable, and whether users expect consistency or adaptability.

Most importantly, it comes from gathering insights that no one else on the team is positioned to collect and using them to make the right call. The goal isn’t to build AI-powered products, but to develop a clear understanding of where AI adds value and where it does not.

Featured image source: IconScout
