The impact of AI on our world is undeniable. It all began with the landmark paper “Attention is all you need”, which introduced the transformer architecture and laid the foundation for everything that followed. Soon after came the rise of large language models (LLMs) like GPT-3, bringing conversational intelligence to the mainstream.

But we didn’t stop there. The next step was teaching AI to interact with the outside world through tools, enabling it to fetch data, execute code, and call APIs. Then came the push to make models reason, leading to breakthroughs like chain-of-thought prompting that allowed step-by-step logical processing.

Yet, despite these advances, most AI interactions still follow a one-turn pattern – the user asks, the model answers. What if we wanted models that could take initiative, make decisions, and perform complex tasks on their own, continuously refining their actions until a goal is achieved?

That’s where agentic AI comes in – systems designed not just to respond, but to act.

In this blog, we’ll explore how agentic AI is reshaping what’s possible with large language models, and dive into two leading frameworks – Autogen and Crew AI – that make it easier to build powerful multi-agent systems from scratch.

Before diving deeper into agents, tasks, and workflows, let’s look at how Autogen and Crew AI compare at a high level – here’s a summary of their core concepts side by side:


| Concept | Autogen | Crew AI |
|---|---|---|
| Agent definition | Defined through code using system messages | Primarily through a YAML file in terms of role, goal, and backstory |
| Task definition | Defined as a plain text description | Defined in YAML or code with both description and expected output |
| Workflow orchestration | Uses algorithms such as Round-Robin, Selector, and Magentic-One for collaboration | Uses Crews (open collaboration) or Flows (structured control) defined via the Process parameter |
| Memory | Managed via utilities like ListMemory and other storage modes | Enabled by setting the memory parameter to True |
| Tool use | Supports tool integration through FunctionTool or built-in utilities | Provides built-in tools (e.g., SerperDevTool, WebsiteSearchTool) for search, retrieval, and data access |


Agentic AI refers to systems that can operate autonomously, making decisions and taking actions to achieve specific goals.

It usually involves a trigger (like a user prompt) to start the process, but once started, the agent can continue to operate without further human input until it reaches its goal or a predefined stopping condition is met.
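The trigger-then-loop behavior described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: `run_agent`, `fake_step`, and the `max_steps` budget are hypothetical names, and `fake_step` stands in for a real LLM call.

```python
from typing import Callable


def run_agent(
    goal: str,
    step: Callable[[str, list[str]], str],
    is_done: Callable[[str], bool],
    max_steps: int = 5,
) -> list[str]:
    """Call `step` repeatedly until `is_done` accepts an output or the step budget runs out."""
    history: list[str] = []
    for _ in range(max_steps):
        output = step(goal, history)
        history.append(output)
        if is_done(output):  # goal reached
            break
    return history  # also returned when the predefined stopping condition (max_steps) is hit


# Hypothetical stand-in for an LLM call: it "finishes" on its third attempt.
def fake_step(goal: str, history: list[str]) -> str:
    attempt = len(history) + 1
    return f"DONE: {goal}" if attempt == 3 else f"draft {attempt}"


history = run_agent("plan a trip", fake_step, lambda out: out.startswith("DONE"))
print(history)
```

The user prompt is the only human input; after that, the loop keeps going until either the goal check passes or the step budget is exhausted.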

But we can take it a step further and introduce the concept of multi-agent systems. In this setup, multiple agents (with specific roles and responsibilities) can interact with each other, collaborate, and share information to achieve more complex objectives. This can lead to more robust and adaptable systems that can handle a wider range of tasks. At the end of this process, we can expect the response to be more accurate, relevant, and context-aware.

Building this from scratch can be a daunting task. Fortunately, frameworks like Autogen and Crew AI make it much easier to create these intelligent, self-coordinating agents with minimal setup. Both frameworks provide APIs and abstractions that simplify the process of defining agents, orchestrating workflows, and enabling reasoning and tool use.

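Autogen is an open-source framework developed by Microsoft that allows developers to create and manage multi-agent systems. It provides two main libraries: Core, a lower-level, event-driven framework for building multi-agent systems, and AgentChat, a higher-level API built on Core for conversational single-agent and multi-agent applications.

Autogen also offers Autogen Studio, which provides a low-code interface to build agents with tool support.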

Crew AI is another framework designed for building multi-agent systems. It uses a YAML-based configuration approach to define agents and the tasks they need to accomplish. It also supports tool use and API integrations. A unique feature of Crew AI is the choice between Crews (a more open-ended, collaborative approach to communication between agents) and Flows (a more structured approach to the flow of control).

Both frameworks support advanced capabilities like memory, tool use, and reasoning, which we’ll explore in detail in the next sections.

Both Autogen and Crew AI revolve around a few foundational ideas – agents, tasks, workflows, and tools – that work together to create coordinated, multi-agent systems.

Let’s explore each concept and see how the two frameworks approach them differently.

An agent is the core component of both frameworks. Technically speaking, an agent is an instance of a large language model (LLM) with a specific role. That role is defined when the agent is created. The agent uses its role to guide its actions and decisions throughout its lifecycle and while interacting with other agents and the user.

In Autogen, agents are defined programmatically. You create an agent by specifying its model, name, and role description through parameters. Here’s an example:

    from autogen_agentchat.agents import AssistantAgent
    from autogen_ext.models.openai import OpenAIChatCompletionClient

    model_client = OpenAIChatCompletionClient(
        model="gpt-4.1-nano",
        api_key="OPENAI_API_KEY",  # placeholder: replace with your own key
    )

    travel_agent = AssistantAgent(
        name="travel_assistant",
        model_client=model_client,
        system_message="You are a helpful travel planning assistant. Your role is to assist users in planning their trips by providing recommendations on destinations, accommodations, activities, and travel tips based on their preferences and budget.",
    )

Notice how we define the agent’s role and responsibilities in Autogen through the system_message, and specify the model via the model_client parameter passed into the AssistantAgent class. This combination gives you fine-grained control over how the agent behaves and communicates.

In Crew AI, agent creation follows a more structured configuration approach. Here’s an example of how an agent is defined in a YAML file:

    researcher:
      role: >
        Travel assistant
      goal: >
        Assist users in planning their trips by providing recommendations on destinations, accommodations, activities, and travel tips based on their preferences and budget.
      backstory: >
        You are a helpful travel planning assistant.

We add this information to an agents.yaml file. We are providing similar details as in Autogen, but in a more structured way, using the role, goal, and backstory fields. This file can then be imported into our main script to create the agents.

In addition to this, Crew AI also provides an option to define agents programmatically using the Agent class:

    from crewai import Agent

    research_agent = Agent(
        role="Travel assistant",
        goal="Assist users in planning their trips by providing recommendations on destinations, accommodations, activities, and travel tips based on their preferences and budget.",
        backstory="You are a helpful travel planning assistant.",
    )

Tasks are another core component of agentic systems. A task is a specific objective or goal that an agent or a group of agents needs to accomplish.

This is how we can make an agent perform a task in Autogen:

    # Run inside an async function; travel_agent is the AssistantAgent defined earlier
    result = await travel_agent.run(task="Plan a 4 day trip to Italy for a couple with a budget of $2000.")
    print(result)

This will make the agent perform the task specified in the task parameter.

Crew AI provides us with two options to define tasks. The first one is to define the task in a YAML file, like we did for agents:

    research_task:
      description: >
        Plan a 4 day trip to Italy for a couple with a budget of $2000.
      expected_output: >
        A trip itinerary taking into consideration the budget and preferences.
      agent: researcher

A task can also be defined programmatically using the Task class:

    from crewai import Task

    research_task = Task(
        description="""
        Plan a 4 day trip to Italy for a couple with a budget of $2000.
        """,
        expected_output="""
        A trip itinerary taking into consideration the budget and preferences.
        """,
        agent=research_agent,  # the Agent instance created earlier
    )

Both of these approaches help us get the same outcome – define the task to be performed by the agent. Also, notice how Crew AI takes a more structured approach to defining tasks by allowing us to specify the expected output.

Once we have the agents and the tasks defined, we need a way to orchestrate the collaboration between them to accomplish the tasks. This is where the different workflow mechanisms provided by both frameworks come into play.

Autogen provides several predefined algorithms to orchestrate collaboration between agents. These determine how agents take turns, share context, and make decisions during a workflow. The main orchestration modes include Round-Robin, Selector, and Magentic-One.

Here’s an example of how we can use the RoundRobinGroupChat orchestrator in Autogen:

    from autogen_agentchat.conditions import TextMentionTermination
    from autogen_agentchat.teams import RoundRobinGroupChat

    # Stop the conversation once any message mentions "APPROVE"
    text_message_termination = TextMentionTermination("APPROVE")

    team = RoundRobinGroupChat([research_agent, feedback_agent], termination_condition=text_message_termination)

    # Run inside an async function
    result = await team.run(task="Plan a 4 day trip to Italy for a couple with a budget of $2000.")
    print(result)

Notice that we are also defining a termination condition using the TextMentionTermination class. This will stop the workflow when the specified text is mentioned in the conversation, which is what the feedback agent is configured to write when it is satisfied with the plan created by the research agent.
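To see what round-robin turn-taking with a text-based termination condition amounts to, here is a framework-free sketch. It is not Autogen's implementation: `round_robin`, the stub agents, and `max_turns` are hypothetical, and the stop check is assumed to be a simple substring match.

```python
from typing import Callable

# A stub agent receives the transcript so far and returns its reply.
StubAgent = Callable[[list[str]], str]


def round_robin(
    agents: dict[str, StubAgent],
    task: str,
    stop_text: str,
    max_turns: int = 10,
) -> list[tuple[str, str]]:
    """Let agents speak in a fixed order until a reply mentions `stop_text`."""
    transcript: list[tuple[str, str]] = [("user", task)]
    names = list(agents)
    for turn in range(max_turns):
        name = names[turn % len(names)]  # fixed rotation through the agents
        reply = agents[name]([text for _, text in transcript])
        transcript.append((name, reply))
        if stop_text in reply:  # assumed substring check
            break
    return transcript


# Hypothetical stand-ins for LLM-backed agents.
def research_stub(messages: list[str]) -> str:
    return f"Plan v{len(messages) // 2 + 1}"


def feedback_stub(messages: list[str]) -> str:
    return "APPROVE" if "v2" in messages[-1] else "Please tighten the budget."


transcript = round_robin(
    {"research_agent": research_stub, "feedback_agent": feedback_stub},
    task="Plan a 4 day trip to Italy for a couple with a budget of $2000.",
    stop_text="APPROVE",
)
for speaker, text in transcript:
    print(f"{speaker}: {text}")
```

Because every agent sees the full transcript, the research stub can react to feedback, and the loop ends on the first reply containing the stop text.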

In addition to these algorithms, there is also a swarm mode wherein each agent gets to pick the next agent to continue the conversation, and all agents share the message context.
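The handoff idea behind swarm mode can be illustrated without any framework. In this sketch, which assumes nothing about Autogen's internals, each stub agent returns its reply plus the name of the agent that should speak next; `run_swarm`, `planner`, and `budget_checker` are hypothetical names.

```python
from typing import Callable, Optional

# A stub agent reads the shared messages and returns (reply, next agent name or None).
HandoffAgent = Callable[[list[str]], tuple[str, Optional[str]]]


def run_swarm(
    agents: dict[str, HandoffAgent],
    start: str,
    task: str,
    max_turns: int = 10,
) -> tuple[list[str], list[str]]:
    """Run agents over a shared message list, letting each one pick its successor."""
    messages = [task]  # shared context visible to every agent
    trace: list[str] = []  # order in which agents spoke
    current: Optional[str] = start
    for _ in range(max_turns):
        if current is None:  # no handoff requested, so the conversation ends
            break
        reply, next_agent = agents[current](messages)
        messages.append(reply)
        trace.append(current)
        current = next_agent
    return trace, messages


def planner(messages: list[str]) -> tuple[str, Optional[str]]:
    return "Draft itinerary: Rome, Florence", "budget_checker"


def budget_checker(messages: list[str]) -> tuple[str, Optional[str]]:
    return f"Checked '{messages[-1]}': within budget", None


trace, messages = run_swarm(
    {"planner": planner, "budget_checker": budget_checker},
    start="planner",
    task="Plan a 4 day trip to Italy for a couple with a budget of $2000.",
)
print(trace)
```

The difference from round-robin is that the speaking order is decided by the agents themselves rather than by a fixed rotation.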

Autogen also provides something called Workflow, which allows for a more structured collaboration among agents. The way that works is, we create graphs or diagrams specifying how the flow of data should happen, and then use the GraphFlow API to execute the workflow. You can read more about it in the Autogen documentation.

Crew AI provides two main abstractions to orchestrate the workflow between agents – Crews and Flows. Crews are more similar to the teams in Autogen, where agents can collaborate in a more open-ended manner. Flows, on the other hand, provide a more structured approach to orchestrate the workflow between agents.

When we define a crew, we can specify the process property to define how the agents will collaborate.

Here is an example:

    from crewai import Crew, Process

    crew = Crew(
        agents=[research_agent, feedback_agent],
        tasks=[research_task],
        process=Process.sequential,
    )

    # Start the crew and print the final output
    crew_output = crew.kickoff()
    print(f"Raw Output: {crew_output.raw}")

We defined it with the same research_agent and feedback_agent as in the Autogen example. The process property is set to Process.sequential, which means that the agents take turns in sequence to contribute to the task at hand. Other process types are also available, such as Process.hierarchical, in which a manager model or LLM decides which agent should contribute at each step. The kickoff method starts the crew. Notice that there’s no termination condition in this setup. The crew automatically stops once all assigned tasks have been completed.
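A sequential process can be pictured as a simple pipeline. The sketch below is not Crew AI's implementation; `SketchTask`, `run_sequential`, and the lambda workers are hypothetical, and it assumes each task's output is handed to the next task as context.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SketchTask:
    description: str
    # Hypothetical worker: takes (description, context) and returns the task output.
    worker: Callable[[str, str], str]


def run_sequential(tasks: list[SketchTask]) -> list[str]:
    """Run tasks in order, passing each output to the next task as context."""
    context = ""
    outputs: list[str] = []
    for task in tasks:
        output = task.worker(task.description, context)
        outputs.append(output)
        context = output  # assumed: the next task sees the previous result
    return outputs  # the run ends once every task has completed


tasks = [
    SketchTask("Pick cities for a 4 day trip to Italy", lambda d, c: "Rome, Florence, Venice"),
    SketchTask("Review the plan", lambda d, c: f"Reviewed: {c}"),
]
outputs = run_sequential(tasks)
print(outputs[-1])
```

There is no explicit termination condition here either: the run is finished when the task list is exhausted.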

When we define a flow, we use the Flow class together with decorators provided by Crew AI, such as @start and @listen, to configure how control passes between steps. This gives us finer-grained control over the workflow orchestration. We will not go into the details of flows here, but you can read more about them in the Crew AI documentation.

The agents we defined so far can only use the capabilities of their underlying language models to complete tasks. But what if we want them to interact with the external world – for example, to fetch real-time data from the web or read information from files?

That’s where tools come in. Tools extend an agent’s capabilities by allowing it to connect with external systems, APIs, or functions – enabling it to perform tasks more effectively and in a more context-aware way.

Autogen provides two main techniques through which we can provide tool-use capabilities to agents so that they can interact with the external world. First is FunctionTool. Using this utility, we can convert any Python function into a tool that can be used by agents. Here’s how that works:

    from autogen_core.tools import FunctionTool

    async def get_temperature(location: str) -> str:
        # Simulate fetching weather data from an external API
        temperature = 25  # Simulated temperature
        return f"The current weather in {location} is sunny with a temperature of {temperature} °C."

    weather_tool = FunctionTool(get_temperature, description="Fetch the current temperature for a given location.")

This tool can then be passed to the agent while creating it. The agent can then use this tool to fetch real-time weather data:

    travel_agent = AssistantAgent(
        ...
        tools=[weather_tool],
    )

That way, the agent can invoke the tool whenever it needs to fetch the temperature for a given location before providing travel recommendations.
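Conceptually, a function-based tool boils down to two things: a description the model can read, and a way to dispatch the model's request to the real function. The following sketch shows that pattern in plain Python. It is not how FunctionTool is implemented; `describe_tool`, `call_tool`, and the JSON call format are assumptions made for illustration, and the function is synchronous for brevity.

```python
import inspect
import json
from typing import Any, Callable


def get_temperature(location: str) -> str:
    temperature = 25  # simulated value, no real API call
    return f"The current weather in {location} is sunny with a temperature of {temperature} °C."


def describe_tool(func: Callable[..., Any], description: str) -> dict[str, Any]:
    """Build a small description of a function from its signature and type hints."""
    params = {
        name: getattr(param.annotation, "__name__", str(param.annotation))
        for name, param in inspect.signature(func).parameters.items()
    }
    return {"name": func.__name__, "description": description, "parameters": params}


def call_tool(registry: dict[str, Callable[..., Any]], call_json: str) -> Any:
    """Dispatch a tool call (as a model might emit it) to the matching function."""
    call = json.loads(call_json)
    return registry[call["name"]](**call["arguments"])


registry = {"get_temperature": get_temperature}
schema = describe_tool(get_temperature, "Fetch the current temperature for a given location.")
result = call_tool(registry, '{"name": "get_temperature", "arguments": {"location": "Rome"}}')
print(schema)
print(result)
```

The model only ever sees the description; the framework is responsible for turning the model's structured request into an actual function call and feeding the result back.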

The second way Autogen provides access to the external world is through built-in tools, such as the code execution tool.

In Crew AI, tools get more first-class support. Several built-in tools can be directly integrated with agents, such as SerperDevTool for web search and WebsiteSearchTool for retrieval over website content.

The tools can easily be supplied to agents while creating them. Here’s an example:

    import os

    from crewai import Agent
    from crewai_tools import (
        SerperDevTool,
        WebsiteSearchTool,
    )

    # Placeholders: replace with your own keys
    os.environ["SERPER_API_KEY"] = "Serper Key"
    os.environ["OPENAI_API_KEY"] = "OpenAI Key"

    search_tool = SerperDevTool()
    web_rag_tool = WebsiteSearchTool()

    research_agent = Agent(
        ...
        tools=[search_tool, web_rag_tool]
    )

With those tools added, the agents we created earlier can now use them to fetch real-time data from the web and perform retrieval-augmented generation (RAG), respectively, to provide better travel recommendations.

Memory allows agents to retain certain global context or information that acts as a basis for making decisions and taking actions. Both Autogen and Crew AI provide support for memory in agents.

Autogen provides a simple API called ListMemory that maintains a chronological list of memories. Here’s how we can use it:

    from autogen_core.memory import ListMemory, MemoryContent, MemoryMimeType

    # Create the memory store before adding entries to it
    user_memory = ListMemory()

    # Run inside an async function
    await user_memory.add(MemoryContent(content="Always suggest vegan restaurants while traveling", mime_type=MemoryMimeType.TEXT))

    research_agent = AssistantAgent(
        ...
        memory=[user_memory],
    )

This way, all the agents will keep this memory in mind while performing their tasks (like only recommending vegan restaurants while planning the trip).
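One way to picture a chronological list memory is as a list of notes that gets added to the model's context before each task. The sketch below illustrates that idea only; `SimpleListMemory` and `build_prompt` are hypothetical and do not mirror Autogen's ListMemory internals.

```python
from dataclasses import dataclass, field


@dataclass
class SimpleListMemory:
    """Hypothetical stand-in for a chronological memory store."""

    items: list[str] = field(default_factory=list)

    def add(self, content: str) -> None:
        self.items.append(content)  # newest entries go last

    def build_prompt(self, system_message: str, task: str) -> str:
        """Combine the system message, stored memories (oldest first), and the task."""
        lines = [system_message]
        if self.items:
            lines.append("Relevant memory:")
            lines += [f"{i}. {item}" for i, item in enumerate(self.items, 1)]
        lines.append(f"Task: {task}")
        return "\n".join(lines)


memory = SimpleListMemory()
memory.add("Always suggest vegan restaurants while traveling")
prompt = memory.build_prompt(
    "You are a helpful travel planning assistant.",
    "Plan a 4 day trip to Italy for a couple with a budget of $2000.",
)
print(prompt)
```

Since the memory is injected on every call, the preference applies to any task the agent handles, not just the one that was active when the memory was added.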

Crew AI takes a different approach to memory. It provides a simple config parameter called memory that can be set to True or False while creating an agent. If set to True, the agent will retain the context of previous interactions and use it to inform its decisions and actions in future interactions:

    research_agent = Agent(
        ...
        memory=True,
    )

When this parameter is set to True, Crew AI will take care of RAG and memory storage on the local machine using ChromaDB for short-term memory and SQLite3 for long-term memory. It stores important context like the output of previous tasks and entities (people, places) encountered during those tasks. These can be referenced later whenever required.

Let’s put this into practice by creating a simple multi-agent system using Autogen.

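We’ll build two agents: a developer agent that writes JavaScript functions for the given task, and a reviewer agent that reviews the code and suggests improvements, which the developer then applies in its next message.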

This back-and-forth continues autonomously until the reviewer writes "APPROVED" – signaling that the task is complete.

Here’s the complete example:

    import asyncio
    import os

    from autogen_agentchat.agents import AssistantAgent
    from autogen_agentchat.conditions import TextMentionTermination
    from autogen_agentchat.teams import RoundRobinGroupChat
    from autogen_agentchat.ui import Console
    from autogen_ext.models.openai import OpenAIChatCompletionClient

    # Read the API key from the environment instead of hardcoding it in the script
    model_client = OpenAIChatCompletionClient(
        model="gpt-4o",
        api_key=os.environ["OPENAI_API_KEY"],
    )

    developer_agent = AssistantAgent(
        name="developer_agent",
        model_client=model_client,
        system_message="You are a JS developer who can write clear, concise JavaScript functions for the specified task.",
        model_client_stream=True,
    )

    reviewer_agent = AssistantAgent(
        name="reviewer_agent",
        model_client=model_client,
        system_message="You are a senior JavaScript developer with more than 10 years of experience. Review the code and provide suggestions based on industry best practices to make it more maintainable, efficient and readable. Only provide suggestions, not code. Wait for the developer to submit improved code in the next message based on your feedback. Write 'APPROVED' when you have no more feedback to give.",
        model_client_stream=True,
    )

    # Stop as soon as the reviewer writes "APPROVED"
    text_termination = TextMentionTermination("APPROVED")

    team = RoundRobinGroupChat([developer_agent, reviewer_agent], termination_condition=text_termination)

    async def main() -> None:
        await team.reset()
        # Stream the conversation between the two agents to the console
        await Console(team.run_stream(task="Write a function to reverse a string in javascript."))
        await model_client.close()

    asyncio.run(main())

It’s a single Python file. To run it, we first create a new folder. Name it autogen_example. Inside that folder, create a new Python file named autogen.py and copy the above code into it. Make sure you have Python 3.10 or above. Then, run the following commands in your terminal:

    cd autogen_example
    python3.10 -m venv .venv  # create a virtual environment
    source .venv/bin/activate
    pip install -U "autogen-agentchat" "autogen-ext[openai]"

Then run the script using the command:

python autogen.py

or

python3 autogen.py

You should see a streaming response in your terminal.

We see a conversation between the two agents, and the code is refined until it meets the reviewer’s standards and is approved. Amazing, isn’t it?

Now let’s build a similar multi-agent system using Crew AI.

In this setup, we’ll create two agents: a food_researcher and an itinerary_researcher.

To set up a Crew AI project, we’ll use the Crew CLI (Command Line Interface):

crewai create crew trip_planner

The CLI prompts us for several options, such as the LLM provider (OpenAI) and the model (gpt-4o), and then initializes a git repository for us. Navigate to the generated project and open it in your favorite code editor:

    cd trip_planner
    code .

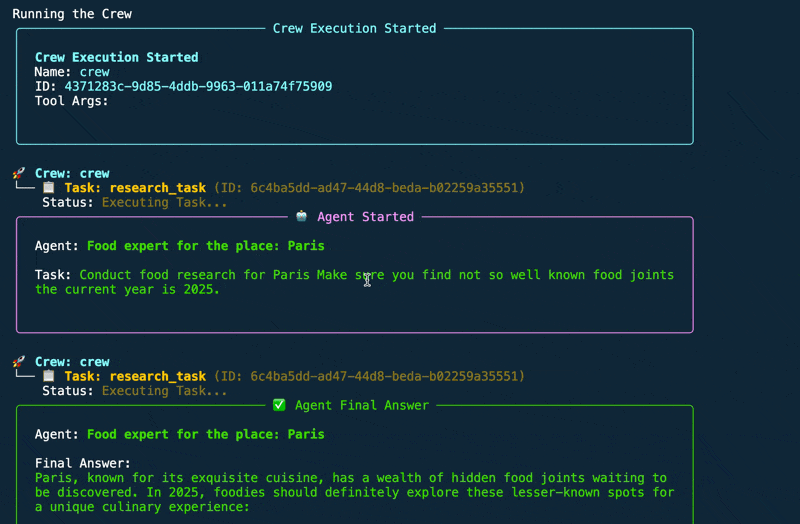

Open the agents.yaml file and the tasks.yaml file and provide the details for the two agents. You can find the details in this GitHub repository. Once the agents.yaml and tasks.yaml files are ready, we can now make the code changes in the crew.py file and the main.py file to run the crew by supplying a place. For this example, we supply “Paris”. Then, we run the crew with the command:

crewai run

You should see a streaming response in your terminal.

In the end, we see a final response with the itinerary created by the itinerary_researcher agent, incorporating the food recommendations provided by the food_researcher agent in a report.md file generated in the project folder. The CLI is styled with colors and formatted, which makes it easy to follow the conversation between the agents.

In this article, we explored the concept of agentic AI and how it enables the creation of multi-agent systems that can operate autonomously to accomplish complex goals.

We looked at two frameworks – Autogen and Crew AI – that make it easier to design, coordinate, and extend such systems through structured APIs, orchestration models, and built-in tool support.

While both frameworks share similar foundations, each brings a unique approach: Autogen offers deeper programmatic control, whereas Crew AI emphasizes configuration-driven simplicity. Together, they showcase how agentic AI is evolving from theory into practical, developer-friendly tools.

The next time you’re building an autonomous or multi-agent application, Autogen and Crew AI are two frameworks well worth exploring.
