If you ask me what framework has the best component model, I’d say React without hesitating.

There are various reasons for this. For one, React lets us write components without much ceremony or compromise. The component model is also largely independent of React itself: with the new JSX transform, you don’t even need to import React in TypeScript or Babel.

Obviously, there must be one caveat when it comes to building every frontend application exclusively in React. Besides the obvious religious discussion of whether React is indeed the right way to do frontend, the elephant in the room is that React is … after all … just JavaScript.

It’s a JavaScript library, and it requires JavaScript to run. This means longer download times, a more bloated page, and, presumably, a not-so-great SEO ranking.

So what can we do to improve the situation? An initial reaction could be one of the following:

“Oh yes, let’s just use a Node.js server instead, doing SSR, too.”

“Isn’t there something that generates already static markup? Who’s that Gatsby?”

“Hmm I want it all, but obviously an SSR framework doing Gatsby-like stuff would be good. This is Next.js level, right?”

“Sounds too complicated. Let’s just use Jekyll again.”

“Why?! Let’s welcome everybody to 2021 — serving any page as an SPA is cool.”

Maybe you tend to identify with one of these. Personally, depending on the problem, I’d go for one of the latter two. Sure, I did quite a bit of server-side rendering (SSR) with React, but very often I found that the additional complexity was not worth the effort.

Likewise, I may be one of the few people who actually dislikes Gatsby. For me, at least, it overcomplicated almost everything, and I didn’t see much gain.

So is this it? Well, there is another way, of course. If we placed our application on a solid architecture, we could just write a little script and actually perform the static site generation (SSG) ourselves — no Gatsby or anything else required. This will not be as sophisticated; nevertheless, we should see some good gain.

Now what are we actually expecting here?

In this post, we will build a simple solution to transform our page created using React into a fully pre-generated set of static pages. We will still be able to hydrate these pages and keep our site dynamic.

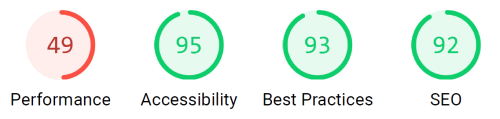

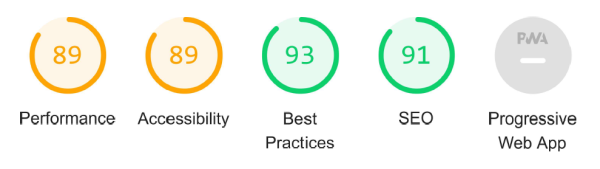

Our goal was to improve the initial rendering performance. In our Lighthouse test we saw that our own homepage was not always as well perceived as we hoped.

What we will not do is optimize the static pages so that they have only tiny fragments of JavaScript. We will always hydrate the page with the full JavaScript (which may still be lazy-loaded, but more on that later).

Even though a static pre-rendering of an SPA may be beneficial for perceived performance, we will not focus on performance optimizations. If you’re interested in performance optimization, you should take a look at this in-depth guide to performance optimization with webpack.

As boilerplate for this article, we use a fairly simple but quite common kind of React application. We install a bunch of dev dependencies (yes, we’ll be using TypeScript for transpilation purposes):

npm i webpack webpack-dev-server webpack-cli typescript ts-loader file-loader html-webpack-plugin @types/react @types/react-dom @types/react-router @types/react-router-dom --save-dev

And, of course, some runtime dependencies:

npm i react react-dom react-router-dom react-router --save

Now we set up a proper webpack.config.js to bundle the application.

module.exports = {

mode: 'production',

devtool: 'source-map',

entry: './src/index.tsx',

output: {

filename: 'app.js',

},

resolve: {

extensions: ['.ts', '.tsx', '.js'],

},

module: {

rules: [

{ test: /\.tsx?$/, loader: 'ts-loader' },

{ test: /\.(png|jpe?g|gif)$/i, loader: 'file-loader' },

],

},

};

This will support TypeScript and files as assets. Note that for a larger web app, we may need many other things, but this is sufficient for our demo.

We also should add some pages with a bit of content to see this working out. In our structure, we’ll use an index.tsx file to aggregate everything. This file can be as straightforward as:

import * as React from 'react';

import { BrowserRouter, Route, Switch } from 'react-router-dom';

import { render } from 'react-dom';

const HomePage = React.lazy(() => import('./pages/home'));

const FirstPage = React.lazy(() => import('./pages/first'));

const NotFoundPage = React.lazy(() => import('./pages/not-found'));

const App = () => (

<BrowserRouter>

<Switch>

<Route path="/" exact component={HomePage} />

<Route path="/first" exact component={FirstPage} />

<Route component={NotFoundPage} />

</Switch>

</BrowserRouter>

);

render(<App />, document.querySelector('#app'));

The problem with this approach is that we’ll need to edit this file for every new page. If we use the convention of storing the pages in the src/pages directory, we can come up with something better. We have two options:

Use require.context magic to “read out” the directory and get a dynamic list we can use

Use code generation at bundle time to produce a module that lists the pages

The second approach offers the big advantage that the routes can be part of the pages. For this approach, we’ll need another webpack loader:

npm i parcel-codegen-loader --save-dev

This now allows us to refactor the index.tsx file to look like:

import * as React from 'react';

import { BrowserRouter, Route, Switch } from 'react-router-dom';

import { render } from 'react-dom';

import Layout from './Layout';

const pages = require('./toc.codegen');

const [notFound] = pages.filter((m) => m.route === '*');

const standardPages = pages.filter((m) => m !== notFound);

const App = () => (

<BrowserRouter>

<Layout>

<Switch>

{standardPages.map((page) => (

<Route key={page.route} path={page.route} exact component={page.content} />

))}

{notFound && <Route component={notFound.content} />}

</Switch>

</Layout>

</BrowserRouter>

);

render(<App />, document.querySelector('#app'));

Now all pages are fully determined by toc.codegen, which is a module that gets generated on the fly during bundling. Additionally, we’ve added a Layout component to give our pages a bit of shared structure.

The code generator for the toc module looks like this:

const { getPages } = require('./helpers');

module.exports = () => {

const pageDetails = getPages();

const pages = pageDetails.map((page) => {

const meta = [

`"content": lazy(() => import('./${page.folder}/${page.name}'))`,

`${JSON.stringify('route')}: ${JSON.stringify(page.route)}`,

];

return `{ ${meta.join(', ')} }`;

});

return `const { lazy } = require('react');

module.exports = [${pages.join(', ')}];`;

};

We just iterate over all pages and generate a new module, exporting an array of objects with route and content properties.
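To make the output more concrete, here is a minimal sketch that runs the same template logic on two hard-coded page entries and prints the generated module source. The function name generateToc and the sample entries are made up for illustration; the real generator takes its input from getPages.

```javascript
// Sketch only: same template logic as the generator above, but fed with
// hard-coded sample entries instead of the result of getPages().
function generateToc(pageDetails) {
  const pages = pageDetails.map((page) => {
    const meta = [
      `"content": lazy(() => import('./${page.folder}/${page.name}'))`,
      `"route": ${JSON.stringify(page.route)}`,
    ];
    return `{ ${meta.join(', ')} }`;
  });

  return [
    "const { lazy } = require('react');",
    `module.exports = [${pages.join(', ')}];`,
  ].join('\n');
}

// Hypothetical page entries (folder, name, and route are illustrative values).
const tocSource = generateToc([
  { folder: 'pages', name: 'home', route: '/' },
  { folder: 'pages', name: 'not-found', route: '*' },
]);

// Prints the source of the module that webpack would bundle as toc.codegen.
console.log(tocSource);
```

Each entry becomes an object literal with a lazy-loaded content component and its route, which is exactly the shape the refactored index.tsx filters and maps over.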

At this point, our goal is to pre-render this simple (yet complete) application.

If you know SSR with React, you already know everything you need to do some basic SSG. At its core, we use the renderToString function from react-dom/server instead of render from react-dom. Assuming we have a page nested in a layout, the following code may work already:

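import * as React from 'react';

import { renderToString } from 'react-dom/server';

import { MemoryRouter } from 'react-router-dom';

const element = (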

<MemoryRouter>

<Layout>

<Page />

</Layout>

</MemoryRouter>

);

const content = renderToString(element);

In the snippet above, we assume that the layout is fully given in a Layout component. We also assumed that React Router is used for client-side routing. Therefore, we need to provide a proper routing context. Luckily, it does not really matter whether we use HashRouter, BrowserRouter, or MemoryRouter — all provide a routing context.

Now, what is Page? Page refers to the component that actually displays the page to be pre-rendered. You may have more components that you’d like to bring in on every page. A good example would be a ScrollToTop component, which would usually be integrated like:

<Route component={ScrollToTop} />

But this would be a component we’d leave up to the hydration when our static page becomes dynamic. There’s no need to pre-render this.

Otherwise, the application itself would usually look similar to:

const app = (

<BrowserRouter>

<Route component={ScrollToTop} />

<Layout>

<Switch>

{pages

.map(page => (

<Route exact key={page.route} path={page.route} component={page.content} />

))}

<Route component={NotFound} />

</Switch>

</Layout>

</BrowserRouter>

);

hydrate(app, document.querySelector('#app'));

This is quite close to the snippet for static generation. The major difference is that here we include all pages (and hydrate), while in the SSG scenario above, we reduce the whole page content to a fixed single page.

Well, so far everything sounds straightforward and easy, right? But the problems lie under the hood.

How do we refer to assets? Let’s say we use some code like:

<img src="/foo.png" />

This may work given that foo.png exists on our server. Using full URLs would be a bit more reliable, e.g., http://example.com/foo.png. However, then we’d give up some flexibility regarding the environment.

Quite often, we’d leave such assets to bundlers such as webpack anyway. In this case, we’d have code like:

<img src={require('../assets/foo.png')} />

Here, foo.png is resolved locally at build time, then hashed, optimized, and copied over to the target directory. This is all fine. For the process described above, however, just requiring the module in Node.js will not work. We will hit an exception that foo.png is not a valid module.

Solving this is actually not complicated. Luckily, we can register additional extensions for modules in Node.js:

const { basename } = require('path');

['.png', '.svg', '.jpg', '.jpeg', '.mp4', '.mp3', '.woff', '.tiff', '.tif', '.xml'].forEach(extension => {

require.extensions[extension] = (module, file) => {

module.exports = '/' + basename(file);

};

});

The code above assumes that the file already is/will be copied over to the root directory. Thus, we’d transform require('../assets/foo.png') to "/foo.png".

What about the hashing part? If we already have a list of asset files with their hashes, we could go for code like this:

const parts = basename(file).split('.');

const ext = parts.pop();

const front = parts.join('.');

const ref = files.filter(m => m.startsWith(front) && m.endsWith(ext)).pop() || '';

module.exports = '/' + ref;

This would try to find a file starting with “foo” and ending with “png,” such as foo.23fa6b.png. The rest works as above.
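Wrapped into a function, the lookup could look like the sketch below. The name resolveHashedAsset is hypothetical, and the matching is slightly stricter than in the snippet above: it requires a dot after the base name and before the extension, so that foo.png does not accidentally resolve to foobar.11aa22.png. It also returns the first match rather than the last one.

```javascript
const path = require('path');

// Sketch: find the emitted (possibly hashed) file for a source asset.
// `files` is the list of file names found in the output directory.
function resolveHashedAsset(file, files) {
  const parts = path.basename(file).split('.');
  const ext = parts.pop();
  const front = parts.join('.');
  const ref = files.find((m) => m.startsWith(front + '.') && m.endsWith('.' + ext)) || '';
  return '/' + ref;
}

// Illustrative directory listing (made-up hashes).
const emitted = ['app.js', 'foobar.11aa22.png', 'foo.23fa6b.png'];

console.log(resolveHashedAsset('../assets/foo.png', emitted)); // prints "/foo.23fa6b.png"
console.log(resolveHashedAsset('../assets/missing.svg', emitted)); // prints "/"
```

An unknown asset falls back to "/", mirroring the empty-string fallback of the original snippet; a real build script might prefer to throw instead.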

Now that we know how to handle generic assets, we could also provide a handling mechanism for .ts and .tsx files. After all, these can be transpiled to JS in memory, too. All this work is quite unnecessary, however, as ts-node already does that. So our work essentially reduces to:

npm i ts-node --save-dev

Then register the handler for the respective file extensions using:

require('ts-node').register({

compilerOptions: {

module: 'commonjs',

target: 'es6',

jsx: 'react',

importHelpers: true,

moduleResolution: 'node',

},

transpileOnly: true,

});

With this in place, we can simply require modules that are defined in .ts or .tsx files.

If we’ve written an optimized React SPA, then we will also bundle-split our application at the routing level. This means that every page gets its own sub-bundle. As such, there will be a core/common bundle (usually containing the routing mechanism, React itself, some shared dependencies, etc.) and a bundle for each page. If we had 10 pages, we’d end up with 11 (or more) bundles.

But using a pre-render approach, this is not so ideal. After all, we are already pre-rendering individual pages. What if we had a sub-component on a page (e.g., a map control) that would be shared, but is so large that we’d like to put it into its own bundle?

That is, we have a component like:

const ActualMap = React.lazy(() => import('./actual-map'));

const Map = (props) => (

<React.Suspense fallback={<div>Loading Map ...</div>}>

<ActualMap {...props} />

</React.Suspense>

);

Here, the actual component will be lazy-loaded. In this scenario, we hit quite a wall: at the time of writing, renderToString does not know how to pre-render lazy components.

Luckily, we could redefine lazy to just display some placeholder (we could also go crazy here and actually load or pre-render the content, but let’s keep it simple and assume that whatever gets lazy-loaded here should actually be lazy-loaded later on):

React.lazy = () => () => React.createElement('div', undefined, 'Loading ...');

The other part that is unavailable for use in renderToString is Suspense. Well, honestly, an implementation of this would not hurt, but let’s do it ourselves.

All we need to do here is to act as if Suspense would not be there at all. So we just replace it with a fragment using the provided children.

React.Suspense = ({ children }) => React.createElement(React.Fragment, undefined, children);

Now we handle lazy loading quite efficiently, even though, depending on the scenario, we could (and maybe even want to) do much more than that.

Besides lazy and Suspense, other parts of React may get in the way during pre-rendering, too. One good example is useLayoutEffect, which does nothing during server-side rendering. Since it only applies at runtime in the browser, the easy way to deal with it is to skip it altogether.

Quite a straightforward implementation is to replace it with a no-op function. This way, useLayoutEffect is not in our way and is simply ignored:

React.useLayoutEffect = () => {};
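The three overrides can be collected in one helper so that they are applied (and, if needed, undone) in a single place. The sketch below is one possible way to do that; the helper name patchReactForSsg is made up, and the demo uses a small stand-in object instead of the real React module so that it runs without any dependencies.

```javascript
// Sketch: apply the three overrides at once and return an undo function.
// `React` is passed in, so the real module can be handed over in the SSG script.
function patchReactForSsg(React) {
  const original = {
    lazy: React.lazy,
    Suspense: React.Suspense,
    useLayoutEffect: React.useLayoutEffect,
  };

  React.lazy = () => () => React.createElement('div', undefined, 'Loading ...');
  React.Suspense = ({ children }) => React.createElement(React.Fragment, undefined, children);
  React.useLayoutEffect = () => {};

  return () => Object.assign(React, original);
}

// Stand-in for React (assumption for this demo only); in the SSG script
// we would call patchReactForSsg(require('react')) instead.
const fakeReact = {
  Fragment: 'fragment',
  createElement: (type, props, ...children) => ({ type, props, children }),
  lazy: 'original lazy',
  Suspense: 'original Suspense',
  useLayoutEffect: 'original useLayoutEffect',
};

const restoreReact = patchReactForSsg(fakeReact);
const Placeholder = fakeReact.lazy(() => Promise.resolve());
const placeholderElement = Placeholder();
console.log(placeholderElement.type, placeholderElement.children);

restoreReact();
console.log(fakeReact.lazy);
```

In a forked process that renders exactly one page, the undo function is not strictly necessary, but it helps when the same script is also used as a library.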

Some of the modules we require will actually not be in CommonJS format. This is a big problem. At the time of writing, Node.js only supports CommonJS modules (ES modules are still experimental).

Luckily, there is the esm package, which gives us support for ES modules using import ... and export ... statements. Great!

Like with ts-node, we’d start by installing the dependency:

npm i esm --save-dev

Then we actually use it. In this case, we need to replace the “normal” require with a new version of it. The code reads:

require = require('esm')(module);

Now we have support for ES modules, too. The only thing missing may be some DOM-related functionality.

Most often, we should guard browser-only code with conditionals like so:

if (typeof window !== 'undefined') {

// ...

}

We could also fake some globals. For instance, we (or some dependency we use directly or indirectly) may refer to XMLHttpRequest, XDomainRequest, or localStorage. In these cases, we could mock them by extending the global object.

With the same logic, we may mock document, or at least parts of it. There is, of course, the jsdom package, which helps with most of them already. But let’s keep it simple and to the point for now.

Just a mocking example:

global.XMLHttpRequest = class {};

global.XDomainRequest = class {};

global.localStorage = {

getItem() {

return null;

},

setItem() {},

};

global.document = {

title: 'sample',

querySelector() {

return {

getAttribute() {

return '';

},

};

},

};
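If some dependency actually reads back what it has written, a slightly richer mock may be needed. The following sketch shows an in-memory localStorage replacement together with an installer that only adds globals that are missing. Both function names are hypothetical, and the demo installs the mocks on a plain object rather than on the real global object to stay free of side effects.

```javascript
// Sketch: an in-memory stand-in for localStorage.
function createMemoryStorage() {
  const store = new Map();
  return {
    getItem: (key) => (store.has(key) ? store.get(key) : null),
    setItem: (key, value) => { store.set(key, String(value)); },
    removeItem: (key) => { store.delete(key); },
    clear: () => { store.clear(); },
  };
}

// Adds mocks to `target`, but never overwrites something that already exists.
function installBrowserMocks(target) {
  const mocks = {
    XMLHttpRequest: class {},
    localStorage: createMemoryStorage(),
    document: {
      title: 'sample',
      querySelector: () => ({ getAttribute: () => '' }),
    },
  };
  const installed = [];
  for (const [name, value] of Object.entries(mocks)) {
    if (!(name in target)) {
      target[name] = value;
      installed.push(name);
    }
  }
  return installed;
}

// Demo on a plain object that already has a document (illustrative only).
const fakeGlobal = { document: { title: 'already there' } };
const installedMocks = installBrowserMocks(fakeGlobal);
fakeGlobal.localStorage.setItem('theme', 'dark');

console.log(installedMocks); // prints [ 'XMLHttpRequest', 'localStorage' ]
console.log(fakeGlobal.localStorage.getItem('theme')); // prints "dark"
```

In the pre-rendering script, the target would be the global object of the forked process.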

Now we have everything to start pre-rendering our application without much trouble.

There are many ways we can generalize and use the snippets above. Personally, I like to use them in a separate module, which is evaluated/used per page using fork. This way, we always get isolated processes, which can be parallelized, too.

An example implementation is shown below:

const path = require('path');

const { readFileSync, readdirSync } = require('fs');

const { fork } = require('child_process');

const { getPages } = require('./files');

function generatePage(page, files, html, dist) {

const modPath = path.resolve(__dirname, 'ssg-kernel.js');

console.log(`Processing page "${page.name}" ...`);

return new Promise((resolve, reject) => {

const ps = fork(modPath, [], {

cwd: process.cwd(),

stdio: 'ignore',

detached: true,

});

ps.on('message', () => {

console.log(`Finished processing page "${page.name}".`);

resolve();

});

ps.on('error', () => reject(`Failed to process page "${page.name}".`));

ps.send({

source: page.path,

target: page.route,

files,

html,

dist,

});

});

}

function generatePages() {

const dist = path.resolve(__dirname, 'dist');

const index = path.resolve(dist, 'index.html');

const files = readdirSync(dist);

const html = readFileSync(index, 'utf8');

const baseDir = path.resolve(__dirname, '..');

const pages = getPages();

return Promise.all(pages.map(page => generatePage(page, files, html, dist)));

}

if (require.main === module) {

generatePages()

.then(

() => 0,

() => 1,

)

.then(process.exit);

} else {

module.exports = {

generatePage,

generatePages,

};

}

This implementation could be used as a lib as well as directly via node. That’s why we distinguish between the two cases using require.main as a discriminator. In case of a lib, we export the two functions generatePages and generatePage.

The only thing left here is the definition of getPages. For this, we could just use the definition we specified in the introductory section about a basic React application.
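Since that definition depends on the project layout, here is only a rough sketch of what getPages might look like. It assumes that every .ts or .tsx file in the pages directory is a page, that home maps to "/", and that not-found maps to the catch-all route "*". These conventions, as well as the pagesDir parameter, are assumptions made for this example.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Sketch: derive page descriptors from the files in a directory.
// The returned properties match the ones used in the snippets of this post.
function getPages(pagesDir, folder = 'pages') {
  return fs
    .readdirSync(pagesDir)
    .filter((file) => /\.tsx?$/.test(file))
    .sort()
    .map((file) => {
      const name = path.basename(file, path.extname(file));
      // Assumed naming convention: home -> "/", not-found -> "*".
      const route = name === 'home' ? '/' : name === 'not-found' ? '*' : `/${name}`;
      return { name, folder, path: path.join(pagesDir, file), route };
    });
}

// Demo with a temporary directory standing in for src/pages.
const demoDir = fs.mkdtempSync(path.join(os.tmpdir(), 'pages-'));
for (const file of ['home.tsx', 'first.tsx', 'not-found.tsx', 'notes.md']) {
  fs.writeFileSync(path.join(demoDir, file), '');
}

const demoPages = getPages(demoDir);
console.log(demoPages.map((page) => `${page.name} -> ${page.route}`));
```

Non-page files such as notes.md are ignored, and the list is sorted to keep the build output deterministic.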

The ssg-kernel.js module would contain the snippets from the previous sections (the require extensions, the React overrides, and the mocked globals). Wrapped in a proper envelope for use in a forked process, we end up with:

// ...

process.on('message', msg => {

const { source, target, files, html, dist } = msg;

setupExtensions(files);

setTimeout(() => {

const { content, outPath } = renderApp(source, target, dist);

makePage(outPath, html, content);

process.send({

content,

});

}, 100);

});

Here, setupExtensions sets up the necessary modifications for require, while renderApp does the actual markup generation. makePage uses the output from renderApp to actually write out the generated page.
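As a rough illustration of the last step, the sketch below shows one way makePage could be written, plus a helper that derives the output path from a route. Several things here are assumptions: the helper name getOutPath, the mapping of "*" to 404.html, and the empty `<div id="app"></div>` placeholder in the HTML template (chosen to match the #app selector used for rendering).

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Assumed mapping: "/" -> index.html, "/first" -> first/index.html, "*" -> 404.html.
function getOutPath(dist, route) {
  if (route === '*') {
    return path.join(dist, '404.html');
  }
  const segments = route.split('/').filter(Boolean);
  return path.join(dist, ...segments, 'index.html');
}

// Injects the pre-rendered markup into the template and writes the page.
function makePage(outPath, html, content) {
  const marker = '<div id="app"></div>';
  if (!html.includes(marker)) {
    throw new Error('The HTML template does not contain the expected #app container.');
  }
  // A replacer function keeps "$" sequences in the content from being interpreted.
  const page = html.replace(marker, () => `<div id="app">${content}</div>`);
  fs.mkdirSync(path.dirname(outPath), { recursive: true });
  fs.writeFileSync(outPath, page, 'utf8');
  return page;
}

// Demo with a temporary output directory and a tiny template.
const demoDist = fs.mkdtempSync(path.join(os.tmpdir(), 'dist-'));
const template = '<html><body><div id="app"></div></body></html>';
const demoOutPath = getOutPath(demoDist, '/first');
makePage(demoOutPath, template, '<h1>First</h1>');

console.log(fs.readFileSync(demoOutPath, 'utf8'));
```

Writing every route to its own index.html keeps the URLs identical to the ones the client-side router uses, which matters once the page gets hydrated.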

Using such a setup, we could pre-render our website and get a significant performance boost, as confirmed by Lighthouse.

As a nice side effect, our own website now also works — to some degree — for users without JavaScript. The full example application can be found on GitHub.

Using React for creating great static pages is actually not a big deal. There is no need to fall back to bloated and complicated frameworks. This way, we can control very well what goes in and what needs to stay out.

Additionally, we learn quite a bit about the internals of Node.js, React, and, potentially, some of the dependencies we use. Knowing what we actually use is more than just an academic exercise, but vital in case of bugs or other issues.

The performance gain of pre-rendering an SPA with this technique can be nice. More importantly, our page becomes more accessible and gets ranked higher for SEO purposes.

Where do you see pre-rendering shine? Is this only a meaningless exercise, or can React also be a good development model for writing reusable components without interactivity?

2 Replies to "Static site generation with React from scratch"

Awesome content Florian, I’m researching on which approach is better on building static web page for SaaS app project. And yes I was think of using Gatsby but I’m afraid it will become hard to maintain if my app scale up more bigger. And I think back to native way using pure ReactJs with lil bit of efforts, like you’ve written in this article, are might be the best approach, thank you

Thanks for nice comment! Yes indeed – this was why we made this in the first place. We tried Gatsby several times already and it always failed to deliver. It was bloated, required custom configuration, and did not work with our setup (which, honestly, was not so “exotic” – just standard TypeScript without es module interop…). In the end for what we tried to do this simple approach worked. Granted, Gatsby does a lot more (and really well if its working), but again – for our purposes that’s good enough.