We’ve all read “The Lean Startup.” We get the theory. But when it’s time to ship, somehow we mess it up anyway.

The number one mistake? Treating the MVP like a mini version of the final product — smaller, scrappier, but still held to the same success metrics as a full launch.

So we ship it (maybe calling it a “beta”) and hope for activation, engagement, and retention. If the numbers are good, we high-five. If they’re bad, we panic.

Either way, we often forget to ask why.

And if you can’t answer why, your MVP has failed. Because learning, not launching, is the entire reason why your MVP exists.

Let’s rewind.

Your MVP exists to test your riskiest assumptions, not to become a feature-lite version of your dream product.

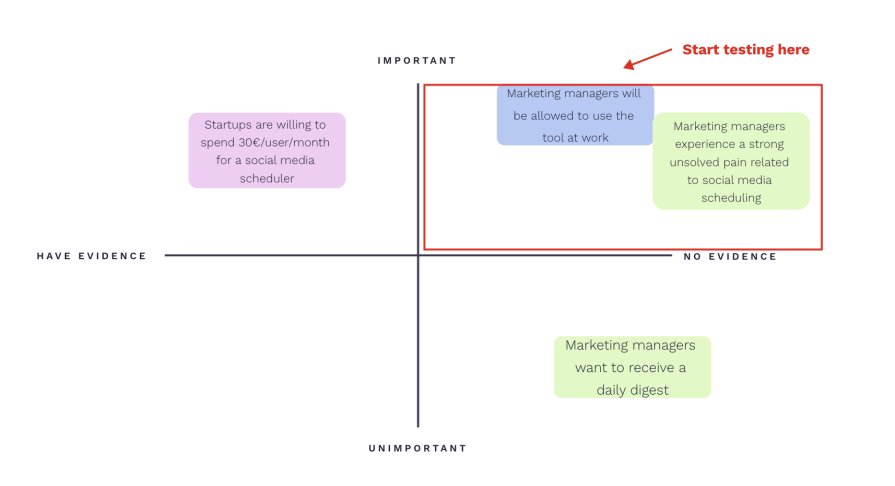

I advise every team I work with to use David Bland’s assumptions mapping framework. It forces you to get honest about what really needs to be true for your idea to work.

With assumptions mapping, session participants generate assumptions alone rather than as a group, to avoid groupthink and capture as much diversity of thought as possible.

Each person writes down as many assumptions as possible for the proposed solution.

For example, the prompt might be: “What needs to be true for this idea to succeed?”

Then you bucket those assumptions into five categories:

Tip: Use color-coding to keep things balanced. I’ve seen technical teams generate 90 percent of their assumptions in the “feasibility” category, casually ignoring whether it’s a problem worth solving in the first place.

Once you’ve got your list, map the assumptions:

Your riskiest assumptions live in the top-left quadrant: important and no evidence. That’s where your test strategy should focus first:
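To make the mapping step concrete, here is a minimal Python sketch. It is an illustration under simplifying assumptions, not David Bland's own tooling. Each assumption gets two yes/no judgments: is it important, and do we already have evidence for it? The category labels and example assumptions are made up. The `lopsided` helper and its cutoff are a hypothetical way to automate the balance check from the color-coding tip.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass
class Assumption:
    text: str
    category: str       # illustrative labels, e.g. "desirability", "feasibility"
    important: bool     # would the idea fail if this assumption were false?
    has_evidence: bool  # do we already have evidence that it holds?


def riskiest(assumptions: list[Assumption]) -> list[Assumption]:
    """The 'important, no evidence' quadrant: where testing should start."""
    return [a for a in assumptions if a.important and not a.has_evidence]


def category_shares(assumptions: list[Assumption]) -> dict[str, float]:
    """Fraction of all assumptions that falls into each category."""
    counts = Counter(a.category for a in assumptions)
    total = len(assumptions)
    return {category: n / total for category, n in counts.items()}


def lopsided(assumptions: list[Assumption], threshold: float = 0.5) -> list[str]:
    """Categories holding more than `threshold` of the list (hypothetical cutoff)."""
    return [c for c, share in category_shares(assumptions).items() if share > threshold]


# Hypothetical assumptions collected in an individual brainstorming round.
board = [
    Assumption("Ops leads lose hours reconciling reports by hand", "desirability", True, False),
    Assumption("We can parse the customer's CSV export", "feasibility", True, True),
    Assumption("Sync can run in under a minute", "feasibility", False, False),
    Assumption("Teams will pay per seat for this", "viability", True, False),
]

for a in riskiest(board):
    print("Test first:", a.text)
print(category_shares(board))
print(lopsided(board, threshold=0.4))
```

With a 0.4 cutoff, the example board flags feasibility, which holds half of the assumptions. The default 0.5 cutoff does not flag it. Binary judgments keep the sketch small. A team that scores importance and evidence on a scale could sort by those scores instead.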

When generating assumptions, some of the biggest mistakes teams make include:

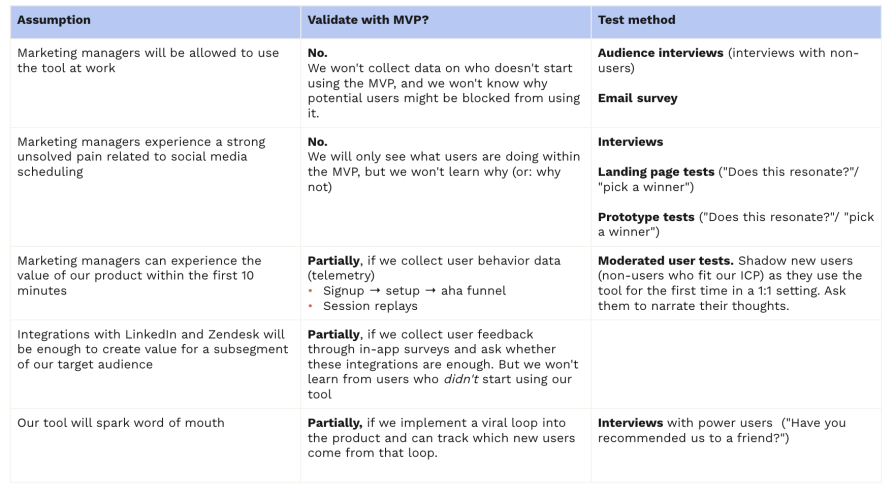

Let’s assume you’ve identified the following assumptions as the riskiest ones:

Is an MVP the best way to validate these, or should you use another test method?

Another big MVP mistake? Skipping the assumptions-mapping step altogether and trying to test the idea as a whole instead. When your MVP misses its goal, you’re left staring at a number with no idea why.

Try these two tips to maximize your learning:

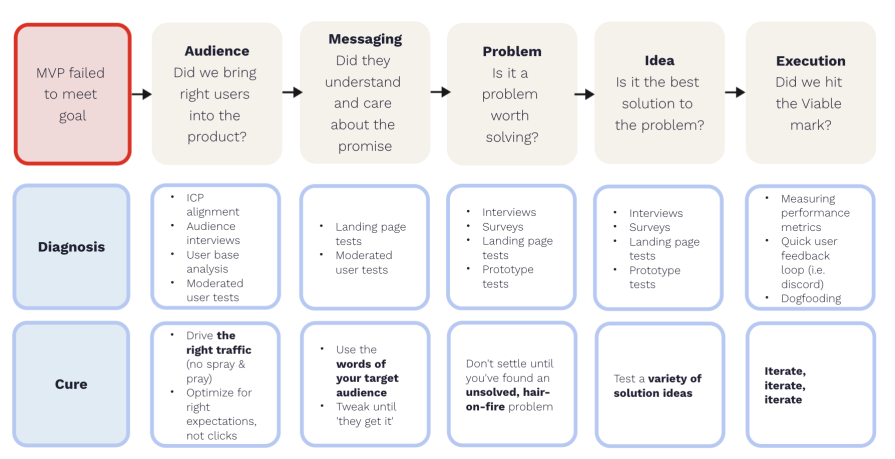

In my 15 years of working with early-stage startups, I’ve seen MVPs fail for five reasons, which I sort using the AMPIE framework (audience, messaging, problem, idea, execution):

In my experience, great products are built to solve the specific pain point of a narrow target audience. Trying to serve everyone at once leads to mediocrity (at best).

One of the biggest ways an MVP can fail is when the users who come into your product aren’t the users you built it for. They arrive with a different set of needs and were never going to succeed with your product.

If you follow a spray-and-pray user acquisition strategy, optimized for clicks rather than for the truth, you’ll likely see a spike in sign-up rates but kill your retention rates.

This is also why I push back on strategies like “let’s give it away for free and see what people do.” If you’re watching the wrong people, the data is garbage.

What to do instead:

Defining and continuously refining your ideal customer profile (ICP) is step zero. It sets the entire MVP up for signal-rich learning instead of misleading noise.
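One way to see why watching the wrong people produces garbage data is to split a single retention number by ICP fit. The sketch below is hypothetical throughout. The `matches_icp` flag, the week-4 retention definition, and the cohort numbers are all invented for illustration. In this made-up cohort, a broad acquisition push brings in 30 non-ICP users alongside 10 ICP users.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class User:
    user_id: str
    matches_icp: bool      # hypothetical flag: does this user fit your ICP?
    retained_week_4: bool  # hypothetical retention definition


def retention(users: list[User]) -> float:
    """Share of users retained; 0.0 for an empty group."""
    if not users:
        return 0.0
    return sum(u.retained_week_4 for u in users) / len(users)


def retention_by_segment(users: list[User]) -> dict[str, float]:
    """Blended retention next to retention for ICP and non-ICP users."""
    return {
        "all": retention(users),
        "icp": retention([u for u in users if u.matches_icp]),
        "non_icp": retention([u for u in users if not u.matches_icp]),
    }


# Invented cohort: 10 ICP users (6 retained), 30 non-ICP users (3 retained).
cohort = [User(f"icp-{i}", True, i < 6) for i in range(10)] + [
    User(f"broad-{i}", False, i < 3) for i in range(30)
]
print(retention_by_segment(cohort))
```

In this invented example, the blended rate is 22.5 percent. The ICP segment retains at 60 percent and everyone else at 10 percent. Reading only the blended number could make the product look like it isn't working, when the split shows who it works for.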

Let’s assume that users can self-serve their way into your product via your landing page. Bad messaging can kill you in two ways:

You can test this with landing page tests (borrowed from Matt Lerner’s company Systm):

This test gives you brutal clarity on two questions:

Assuming you have the right users and the landing page worked… maybe the problem isn’t a hair-on-fire one. Maybe they’re fine with existing alternatives. More audience or user interviews, fake-door marketing tests, and expert conversations can sniff this out.

Maybe the problem is real, but your take on solving it isn’t resonating.

I’m a big fan of user labs to gauge excitement levels about a prototype:

Did you actually hit the “viable” in minimum viable product?

Execution matters. If your MVP is too buggy, slow, or confusing, you didn’t test your idea; you tested your team’s ability to ship under pressure. Some target audiences tolerate paper cuts better than others. If your MVP’s quality isn’t high enough to give you a real signal from your target audience, improve it.

You don’t need a polished product, but it needs to be good enough to learn from:

Most MVPs don’t get it right the first time. That’s not the problem. The problem is when you don’t know why it didn’t work. That’s where teams get stuck.

If you can isolate the “why” and validate your riskiest assumptions, then your MVP did its job.

Remember, success isn’t 5,000 active users — success is knowing what to do next.

Featured image source: IconScout

