The optimal sprint length has always been a discussion-provoking topic.

Although there seems to be a general consensus that two-week sprints are the way to go — or, at least, every company or client I’ve worked for has adopted a biweekly cadence — there’s no guarantee this is the most appropriate length for a given context.

Your sprint cadence has a tremendous impact on your day-to-day work, and not only through meetings. For some reason, I’ve always found the ideal sprint length a fascinating topic, so I’ve experimented with it heavily throughout my career.

In this article, I’ll summarize my various sprint-length experiments, what they taught me, and the key takeaways. I hope they’ll inspire you to experiment with your own process.

I’ve experimented with changing sprint length roughly 15 times in my career. I ran these experiments in various contexts:

These experiments enabled me to understand the various tradeoffs and consequences of different sprint cadences and helped me develop a framework for choosing the right length.

Without any further ado, let’s dig in.

I call two-week sprints “standard” sprints because, in my experience, most companies operate on a biweekly cadence.

The point of the standard two-week sprint is to balance the overhead of regular scrum ceremonies against giving the team enough focused time, all without compromising planning flexibility. This often works, but it’s far from a silver bullet. While running biweekly sprints in various setups, I’ve noticed a few recurring patterns:

The core premise of biweekly sprints, that they keep the number of meetings in balance, seems to hold true in most cases. Organized well, this cadence gives you a whole week free of scrum meetings other than dailies, which is a blessing for both the team and the product manager.

Two weeks is longer than you think. New opportunities appear every week, and while we shouldn’t chase every shiny new object, these opportunities are often worth pivoting toward.

Whether we learned something new or finally got approval for an initiative the day after sprint planning, parking the idea for two weeks might not make sense. In my experience, this often leads to re-planning a few days into the sprint, adding more overhead and distraction.

Another pattern, especially common for larger teams, is reaching the sprint goal too early. The idea behind the sprint goal is to give the team a shared objective for the sprint. But what if that objective can be achieved within a week or so?

We often ended up reaching our goal mid-sprint and switching to another goal, which killed the idea of a “single objective.”

I’ve noticed that team members often resort to a “let’s cover it in the retro” mindset when issues arise. It’s tempting to write that off as a culture problem, but let’s be realistic: with busy calendars and goals, it’s easy to say, “I don’t have time for that today. I’ll cover it in our retro in four days.” By then, these topics are often outdated, or we simply forget about them.

Weekly sprints have always been tempting to me. They come with a promise of better predictability and faster learning and iteration.

Today, weekly sprints are usually my go-to format for brand new teams. The idea is that, if we plan and inspect our work every week, we can learn faster and go through the forming and storming phases of team building quickly.

But as you might expect, the world is rarely black and white. Here’s what I learned by experimenting with one-week sprints:

Although weekly retros do give more formal opportunities to inspect and adapt, the shorter timebox and higher frequency are sometimes counterproductive.

On the one hand, weekly retrospectives made us work on more atomic, up-to-date problems, and fewer things slipped through the cracks. On the other hand, they always felt shallow, as if problems never had time to become painful enough for team members to act on them.

Shorter timeboxes also reduced our ability to go deep. Some challenges were too big to solve within a week, so we were left with many open loops and kept revisiting the same topics.

Overall, the pros and cons of weekly retros usually balanced each other out.

The most significant advantage of weekly sprints was their planning accuracy. Estimating our capacity for a week is just less problematic and more manageable. Keeping weekly commitments is easier, and it provides welcome short-term predictability.

The flip side is fragility: one team member calling out sick, one unplanned workshop, or one partner outage, and your whole sprint goal is kaput.

With weekly sprints, just a single day off reduces a team member’s capacity by 20 percent. Unexpected events simply hurt more the shorter the sprint.
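To make the capacity arithmetic concrete, here is a small Python sketch that computes the share of one person’s sprint capacity lost to a single day off. It assumes a full-time, five-day working week with no holidays; the names `capacity_lost` and `WORKING_DAYS_PER_WEEK` are purely illustrative.

```python
# Hypothetical sketch: share of one person's sprint capacity lost to days off.
# Assumes a full-time, five-day working week and no holidays.
WORKING_DAYS_PER_WEEK = 5


def capacity_lost(days_off: float, sprint_weeks: int) -> float:
    """Return the fraction of one person's sprint capacity lost to `days_off`."""
    sprint_days = WORKING_DAYS_PER_WEEK * sprint_weeks
    return days_off / sprint_days


for weeks in (1, 2, 3):
    # Format as a whole-number percentage, e.g. 0.2 -> "20%"
    print(f"{weeks}-week sprint: one day off costs {capacity_lost(1, weeks):.0%}")
# Prints:
# 1-week sprint: one day off costs 20%
# 2-week sprint: one day off costs 10%
# 3-week sprint: one day off costs 7%
```

Under these assumptions, the same day off costs twice as large a share of a weekly sprint as of a two-week one, which is why unexpected events hit short sprints harder.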

In theory, halving all timeboxes should keep the total overhead the same. In practice, the overhead grows. One 90-minute meeting is often less disruptive than two 45-minute meetings.

You probably know the feeling: you have five minutes before the next meeting, so you don’t pick up anything new and just brew yourself another coffee. In my experiments, weekly sprints almost doubled the number of these moments.

This might be a personal thing, but the weekly cadence put more stress on me than standard two-week sprints did. Every week, I had to worry about how the next retro would turn out, how to nail the next review, and which sprint goal would be best. There are no “chill weeks” when every week is a sprint opening and closing week.

Daily sprints were a wild experiment. I actually tried single-day sprints twice. Maybe I’m a masochist.

I tried this approach at a time when our direction was in flux. It was impossible to plan three days ahead, let alone a week or two.

In both cases, the approach was the same: one “scrum festival” meeting every day. We tried to pack the following daily, 25-minute sessions:

These experiments were super fun, and I encourage you to try them out sometime. It’s an eye-opening exercise, if nothing else. Here’s what I learned:

We quickly started skipping retros because there was nothing to discuss, and reviews turned into irregular, ad hoc sessions. Sometimes we had nothing new to show after one day, and sometimes it was hard to get time with key stakeholders.

Covering the retrospective part almost daily was a double-edged sword. On the one hand, the improvements we introduced and the challenges we solved were impressive. On the other hand, we never got to root cause analysis or the bigger, fundamental issues.

Ultimately, I believe daily retrospectives are a good exercise to run every now and then with any team to grab the low-hanging fruit, but they don’t address the fundamentals.

Developing, code reviewing, and testing tickets within a single day was challenging. We ended every “sprint” with tickets in QA because we simply didn’t have enough time to test them.

The best part of daily sprints was how they forced us to improve our processes. There was no longer time to wait two days for code review or a week for the designer’s review.

Although there’s a limit to how short cycle time can get, daily sprints did help us eliminate a lot of inefficiencies and build a more disciplined culture as a team. The pressure was somewhat unhealthy, but also very beneficial.

Both experiments ended similarly. At some point, we decided we wanted to hold deep-dive retros every now and then, so we added them to the schedule as an extra meeting.

Then, we realized stakeholders needed external reviews at a more sustainable cadence, so we added yet another meeting. We also needed a long-term vision to make our refinements more effective.

Ultimately, our scrum festival naturally evolved back into daily meetings supported by additional meetings every week or two. Without realizing it, we had gone back to biweekly sprints.

I once worked in a corporate setup driven by a yearly roadmap, where we had a clear scope almost half a year ahead. Assuming that longer sprints would lower overhead without adding much risk (our plans rarely changed), we tried out three-week sprints.

Here are my takeaways from that experience:

I felt like we found the sweet spot for our retros. With a formal retrospective every three weeks, we were less likely to fall into the “let’s wait until retro to bring it up” mentality and had more ad-hoc mini-retros and problem-solving sessions.

Surprisingly, the frequency of our inspect-and-adapt cycle increased.

Having two weeks in a row without any ceremonies was promising, and to an extent, it worked. But these weren’t fully focused weeks.

When planning and refining three weeks ahead, it was easy to miss details or leave refinement of the third week’s scope for later.

So, instead of happening in planned, structured sessions, work reviews and detailed planning happened ad hoc. But we also learned that we don’t always need half of the team to run a proper refinement session; sometimes a huddle between two developers is enough.

While three-week sprints worked fine with a stable roadmap, they stopped working when our roadmap started shifting. Sometimes it was a business change; sometimes it was a third-party delay. Mid-sprint replanning became so frequent that it killed the idea of longer sprints.

Each experiment gave us a unique set of lessons. If I were to summarize them into two general buckets, they would be:

From a time perspective, the two things that had the biggest impact on the success of the various cadences were cycle time and predictability.

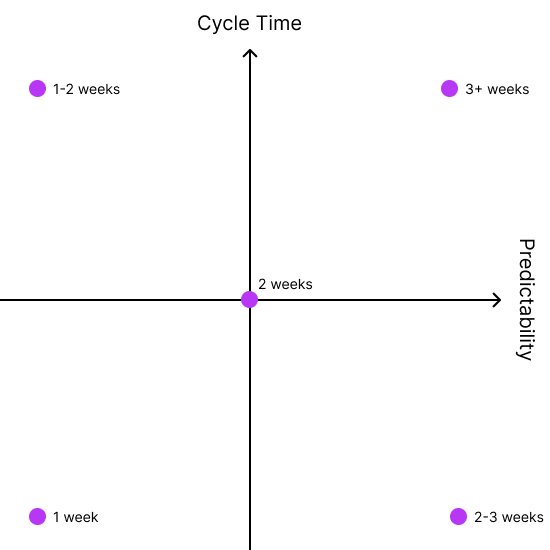

Let’s visualize it on an XY axis:

Cycle time measures how fast you can move a work item from “to do” to “done.” The shorter your cycle time, the shorter the sprints you can afford.

For example, if it currently takes you five days to develop, review, and test one Jira ticket properly, then weekly sprints likely don’t make sense, because nothing you start on day two or later will be done by the end of the sprint.
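The same reasoning can be written as a quick back-of-the-envelope check. This hypothetical Python sketch assumes working days are numbered from 1 and that cycle time is a fixed number of working days; real cycle times vary from ticket to ticket, and the function names are illustrative only.

```python
# Hypothetical sketch: which start days still let a work item finish within the sprint?
# Assumes working days are numbered from 1 and a fixed cycle time in working days;
# real cycle times vary from ticket to ticket.


def finishes_in_sprint(start_day: int, cycle_time_days: int, sprint_days: int) -> bool:
    """True if an item started on `start_day` is done by the sprint's last day."""
    # An item started on day d with cycle time c is worked on days d .. d + c - 1.
    last_day_worked = start_day + cycle_time_days - 1
    return last_day_worked <= sprint_days


def latest_start_day(cycle_time_days: int, sprint_days: int) -> int:
    """Latest start day that still finishes in the sprint (0 means nothing fits)."""
    return max(sprint_days - cycle_time_days + 1, 0)


# A five-day cycle time in a one-week (5-day) and a two-week (10-day) sprint
for sprint_days in (5, 10):
    print(f"{sprint_days}-day sprint: latest start is day {latest_start_day(5, sprint_days)}")
# Prints:
# 5-day sprint: latest start is day 1
# 10-day sprint: latest start is day 6
```

With a five-day cycle time, a weekly sprint only works for items started on the very first day, while a two-week sprint leaves the first six days as viable start days.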

Predictability is also a key factor. Longer sprints worked like magic as long as our scope and roadmap were predictable. If you can confidently plan work a month ahead, why not do so? But if you struggle to secure a stable scope even a week ahead, two-week sprints are doomed from the start.

The chart is just an overall guide. There are many other factors influencing the optimal sprint length, but I believe that predictability and cycle time are the most essential ones.

The Scrum Guide imposes artificial rules for sprint ceremonies, including that they should happen every sprint. Yet, the more we experimented, the more we learned that these rules only limit us. Working on daily, weekly, or three-week sprints often forced us to bend the rules, and we rarely regretted it.

Regardless of your sprint length, if a rule or principle annoys you, just break it.

Are you running monthly sprints that you love, but feel that retrospectives are too rare? Don’t adjust the sprint length. Hold mid-sprint retrospectives. I promise, Ken Schwaber won’t visit you in your sleep if you do.

One of my teams currently runs weekly sprints but holds biweekly retros. It simply works better for us.

On another occasion, we had weekly sprints but held biweekly planning. We planned the upcoming sprint in detail and pre-planned the one after it to give the team a sense of direction and help them refine ahead of time. After a week, we revisited the plan for the upcoming sprint while pre-planning the next one.

Pre-planning might not be part of classic scrum, but we focus on what works best for us, not what’s in the books. I would encourage you to experiment and find a rhythm that works for you.

Sprint length is more than just the frequency of the ceremonies. Each experiment tremendously impacted our way of thinking, team dynamics, and approach to various issues.

Most importantly, each experiment led to learnings that were applicable regardless of length. With one team, I started with biweekly sprints, moved to weekly, then daily, then back to biweekly. Although we eventually stuck to biweekly sprints, previous experiments forced us to change our approach and helped us uncover many insights about our work, and these insights now help us work better in a biweekly cadence.

We never regretted any experiment, even though we reverted most of them. So, don’t be afraid to experiment and break the rules if needed.

Observe your cycle time and predictability to assess whether you are in the right spot or need a change. I believe you should adapt your sprint length to the current circumstances, which tend to change.

Do you have any dream or nightmare stories of running sprint experiments?
