You’ve run countless interviews, launched prototypes, and maybe even shipped an MVP. As a well-trained, passionate PM, you’ve also conducted multiple tests for each of your riskiest assumptions. You’ve been collecting data, data, and more data. Now, your CEO strolls in and asks a valid question:

What have we learned, and what should we do next?

Product teams often drown in a sea of qualitative and quantitative data, struggling to make sense of months of assumption tests. This blocks clear decision-making and wastes R&D resources. That’s where evidence maps come in.

Evidence maps are commonly used in academic research and public policy, but not (yet) in product management. This is an oversight, since they’re the logical next step after running tests and/or collecting data from various sources. Instead of keeping test results and data siloed, an evidence map brings everything together in one place.

For example, instead of separate documents that say, “The prototype test passed, and success metrics were met” or “We identified pain points X, Y, and Z in customer support tickets,” an evidence map consolidates all this information.

Already convinced that you need this? This article dives into how to use an evidence map to make sense of your riskiest assumptions. We’ll walk through a real example of creating, populating, and using an evidence map, with this Miro template I created as a base.

In the best-case scenario, your team meticulously identified your number one customer opportunity, generated solution ideas, and pinpointed the riskiest assumptions underpinning those solutions. Your tests are neatly associated with these assumptions, and you’ve run multiple tests per assumption.

In the less ideal case, you skipped all that, built and launched your solution right away, and are now trying to reverse-engineer why it’s not gaining traction. You’re left with vague hypotheses like, “We didn’t achieve the user growth we wanted because:

For each of your riskiest assumptions, create a dedicated space on your map. Divide this space into a left section for “soft evidence” and a right section for “hard evidence.”

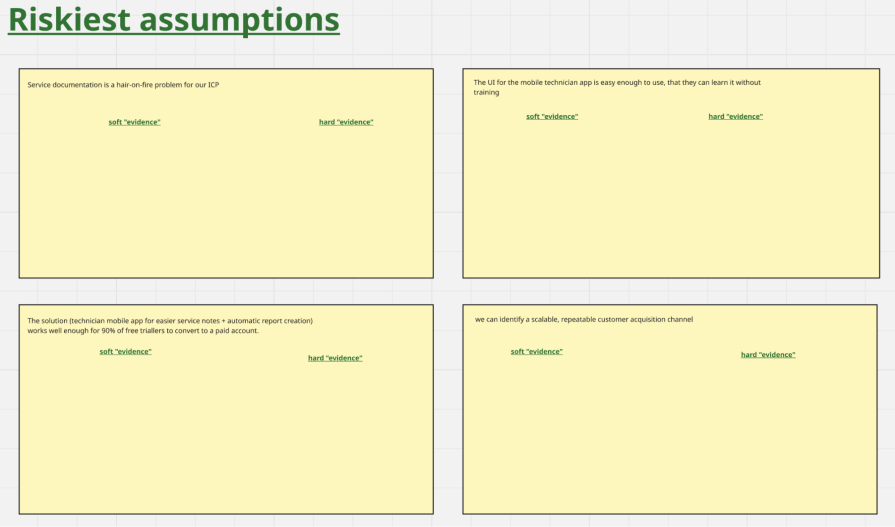

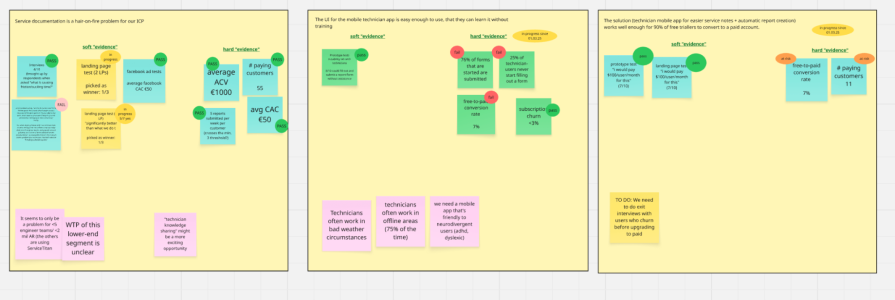
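The map itself lives on a whiteboard, but if you also track evidence in a spreadsheet or script, the same layout translates into a simple data structure. This Python sketch is a minimal illustration under our own assumptions: the `Evidence` and `Assumption` classes and the `print_space` helper are hypothetical names, not part of the Miro template. Each assumption keeps one list of evidence, split into a soft (left) and a hard (right) section by a `kind` field.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical model of one evidence map "space": a riskiest assumption with
# soft evidence (left section) and hard evidence (right section).


@dataclass
class Evidence:
    source: str   # where it came from, e.g. "Moderated prototype test (n=10)"
    finding: str  # one-line summary of what you learned
    kind: str     # "soft" = what people said, "hard" = what people did


@dataclass
class Assumption:
    statement: str
    evidence: List[Evidence] = field(default_factory=list)

    def add(self, item: Evidence) -> None:
        # Every piece of evidence must land in exactly one of the two sections
        if item.kind not in ("soft", "hard"):
            raise ValueError(f"kind must be 'soft' or 'hard', got {item.kind!r}")
        self.evidence.append(item)

    @property
    def soft(self) -> List[Evidence]:
        return [e for e in self.evidence if e.kind == "soft"]

    @property
    def hard(self) -> List[Evidence]:
        return [e for e in self.evidence if e.kind == "hard"]


def print_space(a: Assumption) -> None:
    """Print one assumption's space as its two sections."""
    print(a.statement)
    for label, items in (("Soft evidence", a.soft), ("Hard evidence", a.hard)):
        print(f"  {label}:")
        for e in items:
            print(f"    - {e.source}: {e.finding}")


ui = Assumption("Technicians can learn the mobile app without training")
ui.add(Evidence("Moderated prototype test (n=10)",
                "8 of 10 submitted a form without guidance", "soft"))
print_space(ui)
```

An empty "Hard evidence" section in the printout is itself a signal: the assumption rests only on what people said.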

Here’s an example map for an early-stage startup that’s building a mobile app for field service technicians (electricians, HVAC installers, etc.) to capture service notes and photos on the job. Its key assumptions to validate are:

Three ingredients make this step a success:

Soft evidence comes from interviews or qualitative tests like landing page tests or prototype tests. When “evidence” is based on what a potential user has said, but not on what you’ve seen them do, it’s considered soft. Self-reported data is inherently unreliable; people often don’t do what they say they will.

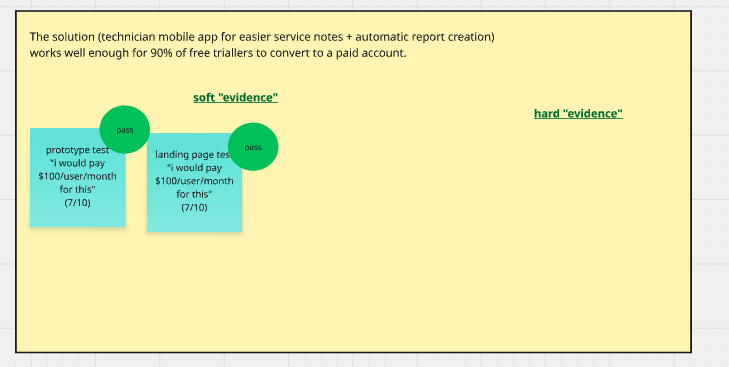

Here’s an example of soft evidence for the assumption “The solution (technician mobile app for easier service notes plus automatic report creation) works well enough for 90 percent of free trial users to convert to a paid account.”

Assume you ran a moderated prototype test with 10 participants. You decided upfront that the test would pass if at least seven participants answered “Yes” to “Would you be willing to pay €100/user/month for this solution?”

The test passed. A similar landing page test also passed. Very promising, right?

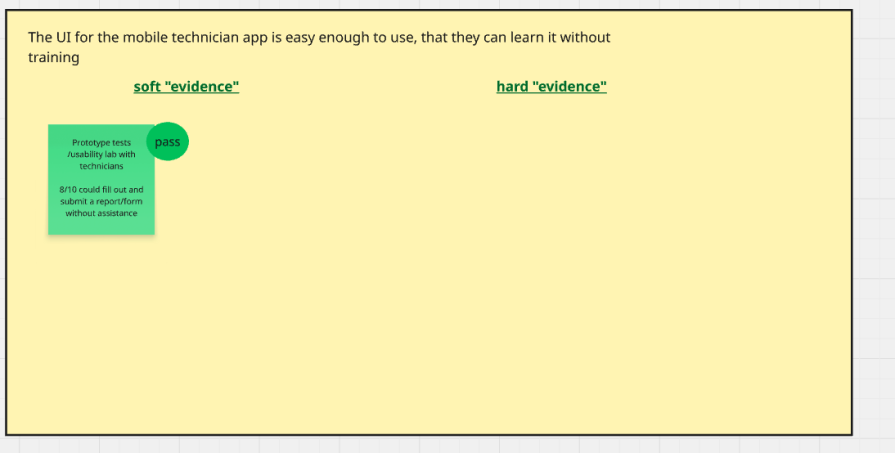

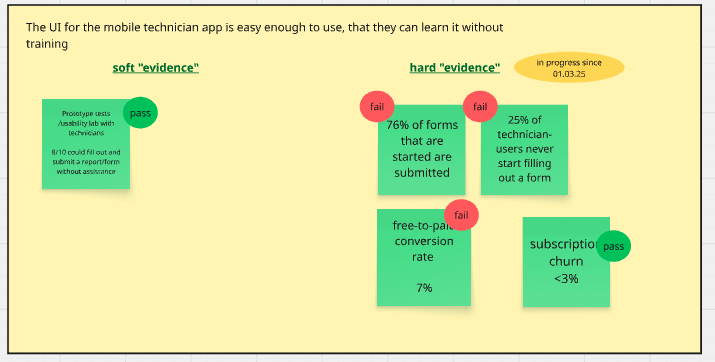

Now take the assumption “The UI for the mobile technician app is easy enough to use that technicians can learn it without training.”

You ran a moderated prototype test with 10 participants, and eight of them completed the task “fill out and submit a form” without guidance. Another pass!

Hard evidence is what you’ve seen people do under real-world circumstances, without being led by you. Think statistically significant A/B test results, a large-scale MVP, or launch data.

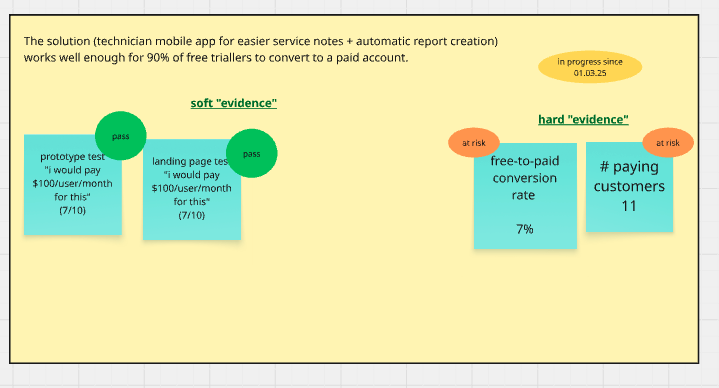

Now let’s add real product data measured since launch for the assumption “The solution (technician mobile app for easier service notes plus automatic report creation) works well enough for 90 percent of free trial users to convert to a paid account.”

Things are starting to look less rosy.

Your prototype test users said they would be willing to pay €100/user/month for the solution, but in reality, fewer than 10 percent of free trial users convert to a paid account.
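One way to keep this comparison honest is to fix each test’s pass criterion before running it, then check soft and hard results side by side. This minimal Python sketch does that for the payment assumption, using the figures from this example: a pre-set bar of seven “Yes” answers out of 10 for the prototype test, and the assumption’s 90 percent conversion target for launch data. Neither exact result is given here, so the sketch assumes the prototype test hit its minimum passing score of 7/10 and enters “fewer than 10 percent” as an upper bound of 0.10. The `Criterion` class is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """A pass criterion fixed before the test runs (hypothetical helper)."""
    name: str
    kind: str         # "soft" (stated intent) or "hard" (observed behavior)
    threshold: float  # minimum rate required to pass

    def passes(self, observed_rate: float) -> bool:
        return observed_rate >= self.threshold


# Soft evidence: prototype test passes if at least 7 of 10 say "Yes".
prototype = Criterion("Prototype test: willing to pay", "soft", 7 / 10)
# Assumption: only "the test passed" is known, so use the minimum passing rate.
stated_rate = 7 / 10

# Hard evidence: launch data checked against the 90 percent target.
conversion = Criterion("Launch data: trial-to-paid conversion", "hard", 0.90)
# Assumption: "fewer than 10 percent" entered as its upper bound.
observed_upper_bound = 0.10

soft_ok = prototype.passes(stated_rate)
hard_ok = conversion.passes(observed_upper_bound)

for criterion, ok in ((prototype, soft_ok), (conversion, hard_ok)):
    print(f"[{criterion.kind}] {criterion.name}: {'pass' if ok else 'fail'}")

if soft_ok and not hard_ok:
    print("Soft evidence passes but hard evidence fails: revisit the assumption.")
```

Because even the 0.10 upper bound falls far short of the 0.90 target, the hard evidence fails whatever the exact conversion rate is, while the soft evidence passes.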

So what should you do next? Some example options:

And for the assumption “The UI for the mobile technician app is easy enough to use that technicians can learn it without training”:

In response, you could:

Even with meticulous documentation, pulling out the real insights can feel like finding a needle in a haystack. To help with this, block dedicated thinking time to review all the evidence you’ve collected, whether it’s from learning cards, experiment docs, interview snapshots, or sales call transcripts.

Large language models (LLMs) can help here. Feed all your test results and learnings into the LLM (remember: garbage in, garbage out, so clean up your notes first). Give it rich background context and a clear structure, then ask it questions to pull out insights, always double-checking that it’s drawing on the correct input.
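As an illustration of giving the model structure, here is a minimal Python sketch that assembles cleaned-up notes into a single prompt, grouped by assumption and by soft versus hard evidence. It only builds the text and does not call any LLM API; `build_prompt` and the notes layout are hypothetical. The closing instruction to cite evidence items makes it easier to check which input each insight came from.

```python
from typing import Dict, List

# Hypothetical notes layout: assumption -> {"soft": [...], "hard": [...]}
EvidenceNotes = Dict[str, Dict[str, List[str]]]


def build_prompt(background: str, notes: EvidenceNotes, questions: List[str]) -> str:
    """Assemble cleaned-up evidence notes into one structured prompt string."""
    lines = ["## Background", background.strip(), "", "## Evidence by assumption"]
    for assumption, columns in notes.items():
        lines.append(f"### Assumption: {assumption}")
        for kind in ("soft", "hard"):
            lines.append(f"{kind.capitalize()} evidence:")
            items = columns.get(kind, [])
            if not items:
                lines.append("- (none yet)")  # make gaps visible to the model
            for item in items:
                lines.append(f"- {item}")
        lines.append("")
    lines.append("## Questions")
    lines.extend(f"{i}. {q}" for i, q in enumerate(questions, start=1))
    # Asking for citations makes it easier to check which input an insight used
    lines.append("Cite the evidence item behind every insight.")
    return "\n".join(lines)


prompt = build_prompt(
    "Early-stage startup building a mobile app for field service technicians.",
    {
        "90 percent of free trial users convert to a paid account": {
            "soft": ["Moderated prototype test passed", "Landing page test passed"],
            "hard": ["Fewer than 10 percent of free trial users convert to paid"],
        }
    },
    ["Where does the hard evidence contradict the soft evidence?"],
)
print(prompt)
```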

After that, plot the most important insights on your evidence map:

Your assumptions and insights might be something like:

Evidence mapping helps you make sense of the wealth of qualitative and quantitative data you collected to inform the decisions you set out to make.

At the end of the day, it’s about honesty: Have you been spreading yourself too thin? Does your hard evidence paint a different picture from your soft evidence?

The map ultimately helps you see “what you know” versus “what’s still a gamble,” so that you can decide what steps to take next. Ready to create your own evidence map? Get started with this Miro template — and feel free to reach out if you have any questions!

Featured image source: IconScout
