Customer discovery is foundational to good product management — but it’s often messy, slow, and hard to scale. So for the past few months, I’ve been going full force on using large language models (LLMs) to improve and accelerate my product discovery work.

From automating interview analysis to uncovering patterns in qualitative feedback, AI-powered tools can help PMs move faster and uncover deeper insights. But let me tell you, it’s been a hell of a ride, from “Wow, this is amazing” to “Why can’t it get this simple thing right?”

My biggest unlock? A better fundamental understanding of what LLMs actually are. To get started, I highly recommend reading “Advances and Challenges in Foundation Agents” by B. Liu et al., which compares LLM agents to the human brain across four dimensions:

Despite the paper’s focus on agents, many of its insights apply directly to LLMs in general. As I learned more about LLMs, I came to understand how to use advances in AI to streamline my PM duties.

In this article, I first break down the key factors that determine AI’s effectiveness, and then turn to the most frequently asked questions about how to use it during your customer discovery process.

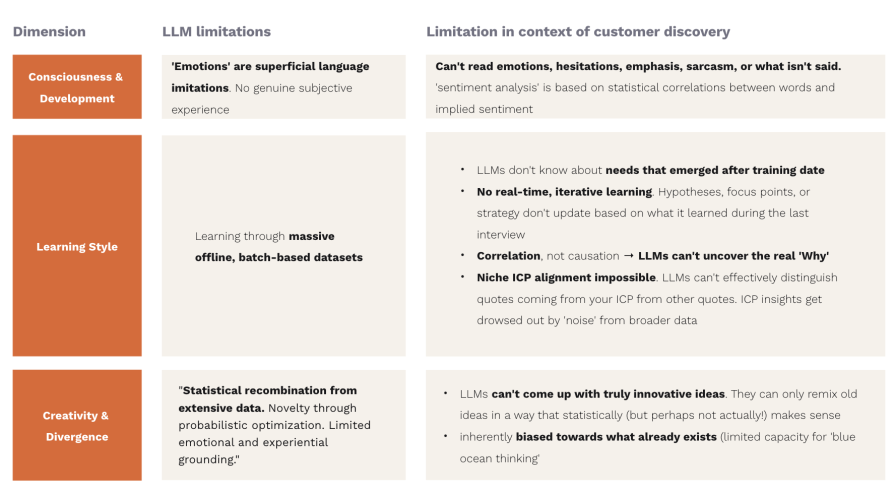

Let’s start by diving into the dimensions that have the most impact on LLMs’ limitations in terms of product discovery:

LLMs, in general (not just agents), have “no genuine subjective experience or self-awareness. Emotions are superficial language imitations.”

What does that mean for customer discovery?

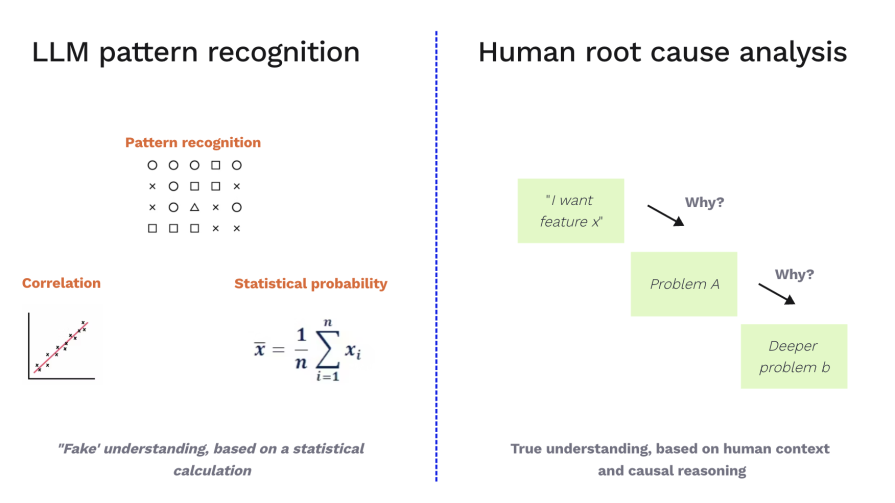

LLMs can’t read emotions the way humans can (even though “sentiment analysis” sounds great). They’re trained on vast amounts of text data, learning statistical patterns and correlations between words and implied sentiment.

For example, they’ll pick up that “love” or “fantastic” correlates with positive feelings, while “hate” or “frustrating” appears with negative ones, but this is a statistical echo, not genuine understanding. LLMs inherently lack the nuance and contextual depth that humans bring to the table. They can’t read emotional cues, hesitations, emphasis, sarcasm, or what isn’t said.
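To make the “statistical echo” idea concrete, here is a deliberately crude sketch. It is not how an LLM works internally. The word lists and the `naive_sentiment` function are made up for illustration. It only shows how a scorer that relies on word-to-sentiment correlations can rate a sarcastic complaint as more positive than a sincere compliment.

```python
# Toy word lists (assumptions for illustration only). A real model learns far
# richer correlations, but it is still working from patterns in text.
POSITIVE = {"love", "fantastic", "great"}
NEGATIVE = {"hate", "frustrating", "broken"}


def naive_sentiment(text: str) -> int:
    """Return (number of positive words) minus (number of negative words)."""
    words = [w.strip(".,!?\"'").lower() for w in text.split()]
    positives = sum(w in POSITIVE for w in words)
    negatives = sum(w in NEGATIVE for w in words)
    return positives - negatives


literal = "I love the new dashboard."
sarcastic = "Oh great, I just love re-entering my password every five minutes."

print(naive_sentiment(literal))    # 1
print(naive_sentiment(sarcastic))  # 2
```

The sarcastic sentence scores higher because it contains two “positive” words, even though any human listener would hear frustration.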

LLMs learn through massive offline datasets, with limited opportunities for online fine-tuning and adaptation. Learning tends to come in batches to catch them up, as opposed to continuously staying up to date.

What does that mean for customer discovery?

An LLM’s creativity stems from its ability to statistically recombine patterns from its training data. It’s not even remotely close to actual, human creativity.

What does that mean for product discovery?

And let’s not gloss over the hallucinations…

These stem from a complex interplay of the model’s learning style, its lack of genuine self-awareness, and its “creative” probabilistic generation.

The crucial takeaway for PMs: Hallucinations remain a persistent problem and haven’t been getting better:

Despite all the fear mongering about LLMs taking over every job, I don’t believe they will. If you’re leveraging your powerful human brain to deeply understand your specific target customer and their real-world problems, LLMs can’t replace you. And that remains the undeniable core of the job.

However, if you lean on AI to cut corners or mask your weak spots, this strategy will likely backfire.

The magic happens when you leverage generative AI to enhance your strengths.

Now that we’ve unpacked where LLMs fall short, let’s dive into how you can (and can’t) use AI as an effective, strategic partner.

Synthetic interview participants are AI-generated personas designed to simulate real people for the purpose of customer interviews or feedback collection. Instead of talking to a human, you’re interviewing an AI that was created to represent a specific type of user.

You feed the LLM a dataset that defines the persona’s characteristics, which can include behaviors, goals, and pain points. You can use Custom GPTs (ChatGPT) or Gems (Gemini) for this.

The issue is clear: It’s not a real person, but a representation of what you believe (which may or may not be true).

If your primary purpose for customer discovery is uncovering genuine, unsolved pain points or goals, then “interviewing” an AI that you’ve literally fed the answers is self-referential and largely pointless.

The verdict:

Anyone who’s manually sifted through endless forum posts and community comments gets excited about this: LLMs can drastically speed up scraping real user quotes from specified URLs and then categorizing them into pain points or needs.

Always make sure to ask the LLM to provide exact customer quotes and source URLs, so you can check for hallucinations.
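One way to run that hallucination check is to compare each returned quote against the text of the page it supposedly came from. The sketch below assumes you have already fetched the page text yourself and that the LLM returned a list of quote/URL pairs. The data shape, the `unverified_quotes` helper, and the example URL are all hypothetical.

```python
import re


def normalize(text: str) -> str:
    """Lowercase, unify curly quotes, and collapse whitespace."""
    text = text.replace("\u2019", "'").replace("\u2018", "'")
    text = text.replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip().lower()


def unverified_quotes(quotes, pages):
    """Return the quotes that do not appear verbatim on their cited page.

    quotes: list of {"quote": str, "url": str} dicts (assumed LLM output shape).
    pages:  dict mapping URL -> page text you fetched yourself.
    """
    flagged = []
    for item in quotes:
        page_text = normalize(pages.get(item["url"], ""))
        quote = normalize(item["quote"])
        # Empty quotes and quotes missing from the page both need a manual look.
        if not quote or quote not in page_text:
            flagged.append(item)
    return flagged


pages = {
    "https://example.com/thread/1":
        "Honestly, exporting reports takes me   an hour every week.",
}
llm_output = [
    {"quote": "exporting reports takes me an hour every week",
     "url": "https://example.com/thread/1"},
    {"quote": "I would pay double for a faster export",
     "url": "https://example.com/thread/1"},
]

for item in unverified_quotes(llm_output, pages):
    print("Check manually:", item["quote"])
```

An exact-match check like this only catches invented or paraphrased quotes. It says nothing about whether the LLM categorized a real quote correctly, so you still need to read the flagged and unflagged quotes in context.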

Issues:

The verdict: Limited.

If quotes on forums are an important evidence source for you, AI drastically speeds up the scraping, summarizing, and synthesizing process, but interviews will give you far richer insights.

Yes. Here are some examples of how:

Recruiting non-users (audience) — cold outreach

There are two obvious cases where you need to recruit non-users for interviews or tests:

There are plenty of AI-powered tools that can help automate outreach. For example, if you’re doing outbound via LinkedIn, players like Lagrowthmachine or LinkedRadar can automate connection requests or InMail sending.

The big downside: LinkedIn Sales Navigator only gives you limited credits and connection requests, which easily get used up when you let an AI-powered tool reach out to anyone in a search result list. It’s likely that you’ll reach out to people who aren’t a great fit.

The verdict: AI can drastically speed things up, but in some cases, a more manual process beats automation.

No. The actual human connection with your customers is one of your key assets. An AI won’t do as good a job as you because it can’t dynamically adjust its course or truly grasp a participant’s full context and subtext.

You’ll lose the opportunity to collect the most interesting insights and opportunities, and you risk making the participant feel their time was wasted.

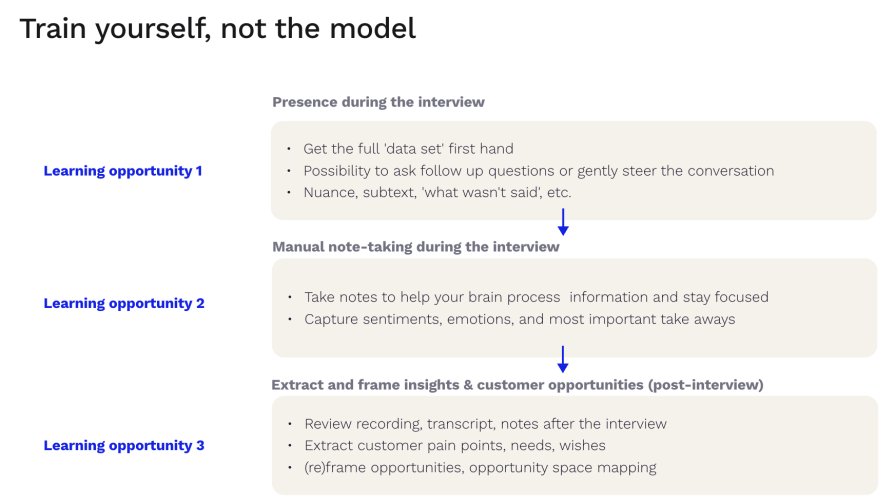

Being physically present in a customer discovery call is the only way to deeply understand the participant’s responses, context, and subtext. Reading someone else’s notes or a summary isn’t even close to being there in person.

The verdict: No.

Yes, but they won’t be as good as your own.

While AI note-takers (like Fathom AI and Granola) are useful as an extra pair of ears, filling in gaps you might miss, they can’t replace your subjective understanding. LLMs identify what’s statistically frequent or grammatically emphasized, not what’s truly important based on genuine subjective experience or your strategic context.

The verdict: Yes, but yours are better.

I’ve tried teaching conversational AI interfaces (Gemini, Claude, ChatGPT, etc.) to pull out insights and opportunities, after having fed them detailed information about what “customer opportunities” are (following Teresa Torres’ theory in “Continuous Discovery Habits”).

But even with precise instructions, these models still occasionally snuck in solution requests and business opportunities, or even mixed up opportunities with solutions.

More importantly, taking the time to extract insights and customer opportunities myself is where my deepest learning happens.

The verdict: AI can probably learn, but it’s not as simple as prompting once. More importantly, if you outsource this work, you stunt your own learning.

Sure, AI could do these things (albeit nowhere near as well as a human). But outsourcing these key discovery tasks means robbing yourself of important learning opportunities.

LLMs and generative AI are amazing. They offer incredible speed and scale for product discovery — from scraping data to hyper-personalized outreach — and are mind-blowingly good at processing vast information and pattern recognition.

However, LLMs and generative AI are also deeply disappointing. They pick out seemingly random key takeaways, they totally miss emotional nuance, they can’t come up with anything innovative or uncover “blue ocean” needs, and they miss basic requirements even if you spell them out.

I don’t believe AI can replace you if you’re dedicated to using your amazing, powerful, human brain to deeply understand your customer. But it can amplify your strengths, within boundaries.

Do the important work yourself, with AI as a backup or sparring partner.

Featured image source: IconScout
