I had to rewrite this article three times. Originally, it was about how only product thinking could lead OpenAI from research toward its artificial general intelligence (AGI) goals, and now it’s much more. The first draft of this text was finished shortly after OpenAI DevDay, but two weeks later, the wildest weekend in tech happened: Sam Altman was fired as CEO of OpenAI, and the company now looks practically doomed.

This is not a piece on the chaos that ensued, though. There are already plenty of resources written on this news. For a synthetic version of the facts and speculations surrounding Altman’s firing and the fallout, I highly recommend you watch this video.

This article is about how only product thinking can lead you far towards innovation, as well as about ethical risk assessment, stakeholder management, and how politics plays a heavy part in product outcomes. To go over all the lessons we can take from this convoluted case, we must understand how it all started.

In December 2015, Sam Altman, Elon Musk, and many other AI scientists and venture capitalists joined forces to found OpenAI. Neither the first AI company ever created nor the last one to be born, OpenAI had a mission to “advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return.”

Even though the founding members were way above the average tech founder level, this company was not interested in financial return. Even so, OpenAI was expected to reach $1.3 billion in revenue by the end of 2023. This amount would surpass the individual revenues of 4,325 other publicly listed companies worldwide, all of which are very interested in generating profits.

When you watch OpenAI’s evolution from a purely research-based organization to a revenue-generating leader, it looks as if the virtuous move towards product-led thinking was a natural process. In fact, there was a rift among OpenAI’s leadership: while the board feared what profit could do to their mission of benefiting humanity, Altman saw commercial success as the only way to achieve said mission.

The road to hell is paved with good intentions, as the saying goes, and recent OpenAI developments go to great lengths to show this is true. After Altman left, top brass left with him. A whopping 650 out of 770 total employees are formally considering doing the same. By resisting growth and fighting against the race for time to market, the board inadvertently eliminated all means of reaching their own goals.

Their sin was to believe that tech alone would save their product. Little did we know they were not the first to think so.

History is full of launches that over-promised and under-delivered. In the 1960s, AT&T introduced the Picturephone, the first device capable of connecting people through voice and video in real time. It was a bitter market failure. In 1996, WebTV (later bought by Microsoft and rebranded as MSN TV) promised to let people browse the web on their televisions. Sales were suspended in 2009 and subscriptions terminated in 2013.

ChatGPT is the first piece of technology I can recall that shook the market and is nonetheless poised to fail.

When you look back on why most of those early techs failed, there are usually three commonplace occurrences: they were too expensive, had limited applicability, and suffered from technical issues.

Even though Picturephone, WebTV, and others were ahead of their time, they didn’t cause any real disruption in society. They failed because they neglected the essential risks at the heart of the product operating model as we know it today: whether we can build it, whether we can make money from it, and whether the user wants it.

OpenAI almost followed the same path with GPT-3. They managed to turn around in time, though, and assert themselves as a dominant player in the AI race with GPT-4 Turbo. That’s why it’s so shocking that they literally turned their back on it! Generative artificial intelligence is so divisive that not even the leading parties in this brand-new market are very aligned on what they should or should not be doing.

By late 2022 and early 2023, the world was in awe at the groundbreaking reveal of the first AI model capable of systematically impersonating humans. The large language model (LLM) GPT-3 took the news by storm, and everywhere you looked, you’d see people praising its brilliance or cursing the dark future it could bring.

When ChatGPT crossed the line of 100 million active users in just two months, it was clear that OpenAI had made it to the ranks of tech giants. But, as economists say, “After the boom, comes the bust.”

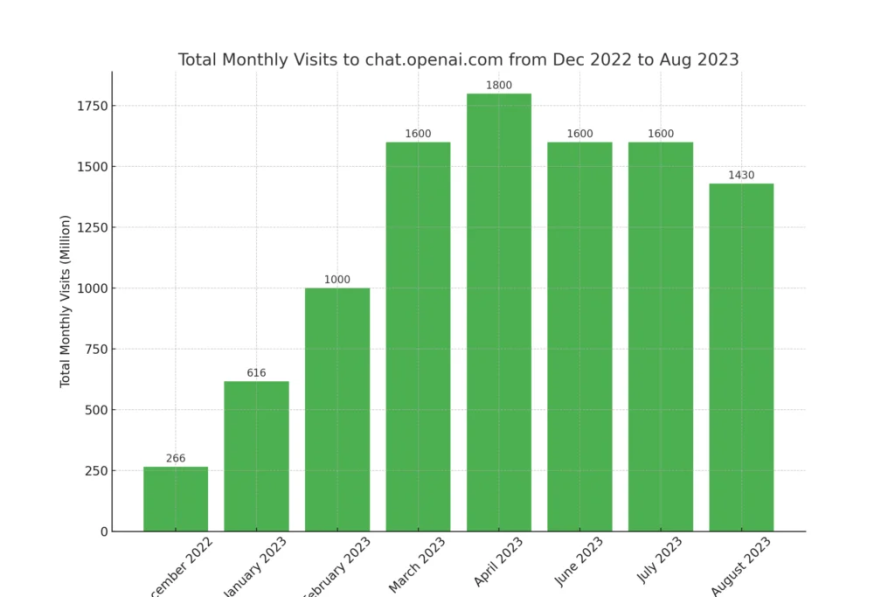

After peaking at 1.8 billion visits in April, ChatGPT numbers began to dwindle. The most up-to-date visitor count we have access to comes from August 2023 at 1.43 billion visits, a roughly 20-percent decrease compared to the previous peak in just three months.
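The roughly 20 percent figure is easy to check from the two visit counts quoted above. Here is a minimal Python sketch; the function name `percent_decrease` is my own, and the two inputs are simply the peak and August numbers expressed in the same unit.

```python
def percent_decrease(peak: float, current: float) -> float:
    """Return the drop from peak to current as a percentage of peak."""
    if peak <= 0:
        raise ValueError("peak must be positive")
    return (peak - current) / peak * 100


# Visit counts quoted in the text, both in the same unit.
PEAK_VISITS = 1.8
AUGUST_VISITS = 1.43

drop = percent_decrease(PEAK_VISITS, AUGUST_VISITS)
print(f"Decrease from peak: {drop:.1f}%")  # about 20.6%
```

The result lands a little above 20 percent, which is consistent with the rounded number in the paragraph.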

As with all things new and exciting, people tend to engage out of curiosity instead of necessity. After having fun with some basic prompts, ChatGPT’s novelty quickly became mundane, and an overall lack of practical use cases meant that people moved on to the next shiny thing. That could have been the sad story of OpenAI — a brick laid down in the road that would lead to true massive AI adoption.

Much like the previous market failures I mentioned, ChatGPT checked all the boxes of a “too far ahead” checklist.

Although GPT-3 was free to use, it was always a beast when it came to resource consumption. Estimates say that OpenAI spends between $100,000 and $700,000 a day to run their applications. If their burn rate remains as is, they could run out of money sometime between the end of 2024 and 2025.
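To get a feel for what that daily estimate means over a year, here is a back-of-the-envelope sketch in Python. It assumes the daily cost stays flat for 365 days, which is my simplification and not something the estimates claim; the helper name `annual_cost` is hypothetical.

```python
DAYS_PER_YEAR = 365  # assumption: costs are incurred every day at a flat rate


def annual_cost(daily_cost_usd: int) -> int:
    """Scale a flat daily running cost to a full year."""
    return daily_cost_usd * DAYS_PER_YEAR


# Low and high ends of the daily estimate quoted in the text.
low = annual_cost(100_000)
high = annual_cost(700_000)

print(f"Estimated yearly running cost: ${low:,} to ${high:,}")
```

Under that assumption the range works out to between $36.5 million and $255.5 million a year, and that covers only running the applications, not research or salaries.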

ChatGPT was just that: a chat. It had no means to do anything else other than read short messages and provide equally short responses. The technology behind it, though, was capable of much more.

Competition quickly spread with the release of AIs that could handle image generation, audio generation, PDF reading and understanding, text embedding, code copiloting, and more. GPT-3 quickly looked limited in the face of such stiff competition.

Part of ChatGPT’s initial success came from a pretty straightforward user experience: type in a message and get a response. Despite that, actually extracting value from ChatGPT demands some understanding of prompt engineering and how LLMs work. That knowledge is not commonplace for your average user. Combine that with frequent outages and a serious input and output character limitation for conversations, and it was clear that ChatGPT was not ready for mass adoption.

Sam Altman said more than once that they revealed GPT-3 too early in favor of advancing the AI field. Under the pretext of being able to steer AI development in the right direction, the first public iteration of OpenAI’s LLM was targeted at research: it had no APIs, poor performance, limited applicability, and hard-to-use prompting.

It was powerful, but it was plagued by constant hallucinations (when an LLM provides wrong information as if it were true) and outdated knowledge. It was nice for testing the limits of the technology, but not good enough to sell.

Meanwhile, a plethora of companies, scientists, and AI enthusiasts were launching their own LLMs, many of them open source. Some of the most famous ones are Llama from Meta, Falcon from the UAE’s Technology Innovation Institute, and Claude from the US company Anthropic, although Claude itself is not open source. Those were followed by an ecosystem explosion that led to the LangChain framework and HuggingFace community, all focused on democratizing access to large language models.

An open source community is like a squad composed of thousands of engineers, all working toward the goal of finding how that technology can better serve the community. AI has been mostly an open source environment so far because of its recency, and the problem with that is that open source technology remains a niche inside the community: too complex to be used by an average user and too limited to be adopted en masse.

Instead of hiding development behind closed doors, outsourcing technical discovery to the community is a genius way of gaining scale. It was a risky move, though, because that same community discovered how to build the same tech with way less money.

The allegedly leaked report from Google saying that big tech had “no moat” against open source was a wake-up call for the industry. Having the money to host AI infrastructure alone was not enough to build a business on top of the technology. Outpacing open source would only be possible if even more money and brain power were poured into generative AI, and one could only do it with mass adoption and mass revenue.

For OpenAI to “advance digital intelligence in the way that is most likely to benefit humanity as a whole,” they had to start making a product out of their research. Mass adoption that goes beyond mere curiosity lies in addressing the real needs of real people, and that’s how the divide started within the company.

OpenAI has positioned itself as the harbinger of artificial general intelligence (AGI). It advocates that AGI must “benefit all of humanity,” but if humanity is not interested in benefiting from it, what’s the point?

There is a huge gap between OpenAI’s ambition and the average user’s expectations. What the open source experience showed is that there was a real demand for AI-powered software, but that users were less interested in big questions such as curing cancer or colonizing Mars than in gaining productivity or getting rid of boring, laborious tasks.

With that in mind, the faction that believed that only a giant business could bring AGI pushed the research- and safety-oriented sectors of the company further and further away from core development. Addressing the limitations of ChatGPT as a product (it’s expensive, has limited applicability, and has technical issues) is not incompatible with addressing the ethical risks of doing so. If the board’s mistake was a lack of foresight, Altman’s error was to downplay the board’s ethical concerns.

The last major update in ChatGPT’s reasoning power was its fourth iteration, with further improvements introduced in the following months. The current iteration of the model, GPT-4 Turbo, is capable of seeing, hearing, coding, outputting voice, and generating images. The real deal-breaker for the board, as it now looks, was DevDay itself. Although the writing was on the wall, announcing GPTs (custom versions of ChatGPT) was a major break in trust from the board’s perspective when it came to introducing more AI power without proper risk assessment.

The future will tell us if we are moving too fast or not, but from a “should we build it” point of view, Altman clearly lost sight of the political factor involved in pushing for more and more results from a company that was structured around research. The technology didn’t fail; we’ll still have AI as powerful as GPT-4 Turbo. But the product we have available right now is most probably dead once most OpenAI employees leave.

That’s why there are four product risks to be assessed by product managers instead of just three: can we build it, can we make money from it, does the user want it, and, last but not least, should we build it?

The final section of this article’s original draft was about how OpenAI is changing tactics without changing strategy. It was a nice collection of evidence pointing to the investment in autonomous agents replacing the investment in bigger models in the pursuit of AGI. After DevDay and, most importantly, the weekend of 17 November, it lost all relevance given the shattering fact we were faced with.

Although it was not wrong (there was indeed a tactical shift while the strategy stayed the same), a frequently overlooked lesson in product management speaks louder: the role of politics in product success.

Usually limited to “stakeholder management,” politics is hard to frame within a KPI or an OKR. For that reason, it’s usually swept under the rug in product literature. The fact is that the higher you are in a business hierarchy, the bigger the part politics plays compared to traditional product concepts.

If you think about OpenAI as a cohesive, uniform entity, their product plan was perfect! They were iterating their product towards better assessing user needs and how to make money from it, while not losing sight of ethical concerns. They deprioritized addressing ethical risks in favor of addressing business risks, which is totally fine. Prioritization is what is expected from a good product plan.

Despite all that, in a single weekend, the company went from “Google’s rival” to “We don’t know if they’ll exist next week.” No product anti-pattern did it; it was all bad politics. Altman took the board for granted, and the board got hurt and thought their mission was greater than their CEO. What baffles me the most in this whole story is how blindsided each party was concerning the other’s actions.

Politics is often considered a rotten practice, but it’s just communication manifested. When it’s said that a good product manager has to be a good communicator, it’s exactly because of situations like this. You’re most probably not a high executive in a groundbreaking AI company, but you have surely faced similar challenges.

Communication is not the same as building consensus, and that applies to politics. I’m not advocating that one party should have done this or that to more effectively undermine the other. Instead, if both parties had kept their ground but communicated constantly and effectively with each other, this chaos could have been averted, and OpenAI would still be poised for a great future with great products.

OpenAI’s history will surely make it into books, movies, and university lectures. My job here was just to scratch the surface of all that happened and try to shed some light on what we can take away from the good and the bad.

Starting small and focusing on disrupting the fields of scientists and enthusiasts was definitely a winning call from OpenAI’s earlier days. Pivoting towards a customer-oriented strategy, as well as building on top of previously learned lessons to avoid dying out too soon as just another funny trinket, was masterful from the company.

Unfortunately, they made mistakes in the same way they made great decisions. Downplaying the need for ethical discussion on product development, specifically on such a convoluted subject as super artificial intelligence, and being unable to establish proper and effective internal communication were the factors that led what could be an amazing product down to the hangman’s row.

None of us could say we would have done things differently, but that doesn’t mean we can’t learn from others’ mistakes.

Featured image source: IconScout
