There is a plague in software development today, and we are gradually beginning to accept it. It goes something like this: you’re stuck on some code, so you paste a snippet into your favorite AI tool, hoping it will help you debug it. You might get a solution, but the AI tool also introduces a new bug, which you now have to spend time debugging.

The truth is, if you vibe code, you will probably end up fixing more bugs than you would if you wrote the code yourself. I’ve seen it firsthand.

In this article, we will help developers recognize that AI coding tools could create more problems than they solve when used carelessly, and provide practical strategies to work with AI more effectively.

Vibe coding exposes the weakness of these AI tools. The 2025 Stack Overflow Developer Survey confirms what many of us have been screaming: AI can’t replace developers because it’s simply not advanced enough yet.

Developer trust in AI accuracy has decreased from 43% in 2024 to 33% this year, according to the survey. Why the massive drop? Because we’re all experiencing the same problem: AI sucks at context.

When we say “AI sucks at context” in coding, we mean that AI forgets.

But “forget” isn’t the perfect word for it, as it implies a human-like memory. A more accurate way to describe it is that the AI has a finite context window. Let me explain exactly what that means.

Think of your conversation as an article that is read from the beginning every single time you send a new message. To figure out what to say next, the AI looks at everything you have discussed so far.

The context window is the maximum length of the article that the AI can read at one time. How much this matters depends on the length of your conversation: a short chat fits comfortably, but once a long one outgrows the window, the earliest parts fall out of view.

It’s just like having a little quarrel with your partner, where eventually, after much back and forth, you both forget exactly why you are really fighting. This is because your short-term memory cannot pick up on what exactly may have started this whole fight. Your short-term memory is similar to an AI’s context window.
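To make the idea concrete, here is a minimal sketch of one common way a chat tool can keep a conversation inside a fixed window: drop the oldest messages first. The function names and the word-count "tokenizer" are my own assumptions for illustration; real tools use a proper tokenizer and may summarize or truncate differently.

```python
from collections import deque


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def visible_history(messages: list[str], context_length: int) -> list[str]:
    """Return the most recent messages that fit inside the token budget."""
    kept: deque[str] = deque()
    used = 0
    # Walk backwards from the newest message and stop at the first one that won't fit.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > context_length:
            break
        kept.appendleft(message)
        used += cost
    return list(kept)


conversation = [
    "user: tell me a short story about America",
    "assistant: once upon a time in America ...",
    "user: now tell me a story about LogRocket",
    "assistant: LogRocket was a tool that ...",
    "user: which question did I ask first?",
]

# With a tiny budget, the first three messages no longer fit in the window.
print(visible_history(conversation, context_length=20))
# With a generous budget, the whole conversation is still visible.
print(visible_history(conversation, context_length=100))
```

The model never "decides" to forget the first question in this sketch. The message simply isn't in the text it gets to read anymore.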

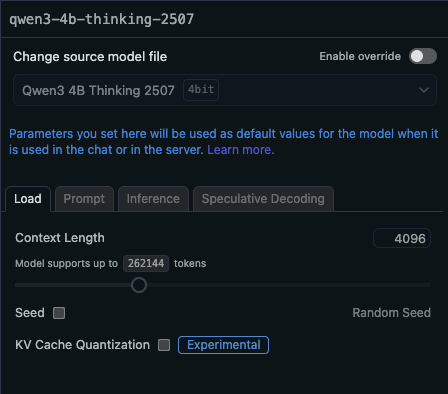

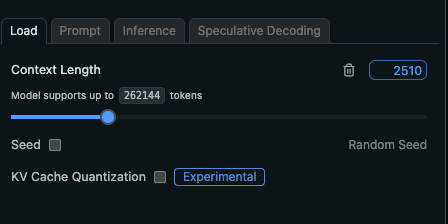

Using LM Studio, we can watch this happen by reducing the context length of Qwen3-4b-thinking-2507.

The model supports a context length of 262,144 tokens.

That’s the maximum number of tokens the model can take into account at one time. However, because we’re running it locally, you’ll see we can only access 4,096 of those; this is the default setting to ensure your PC doesn’t lag.

I reduced mine even further to 2,500:

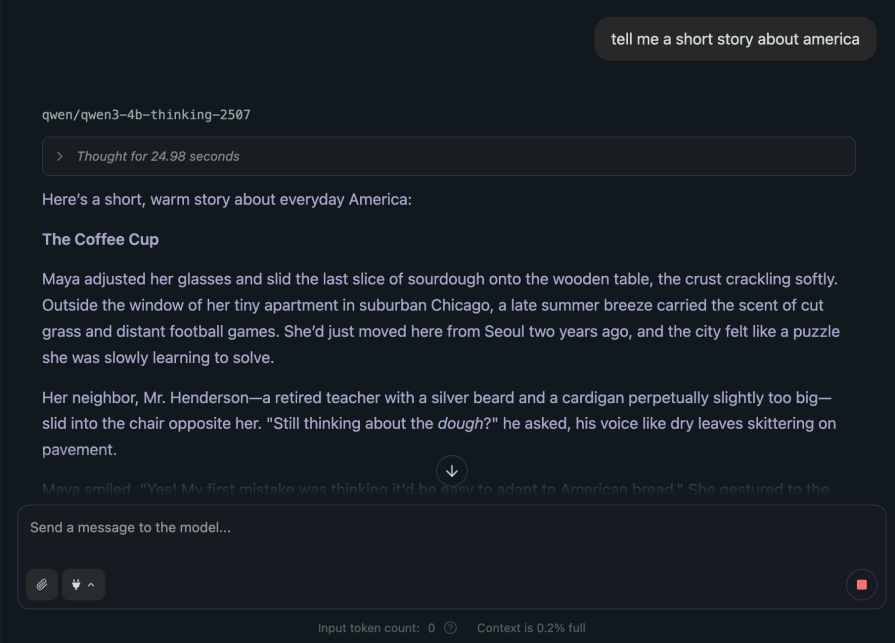

Let’s see how quickly it forgets. I will prompt it to tell me a story about America, but keep it short:

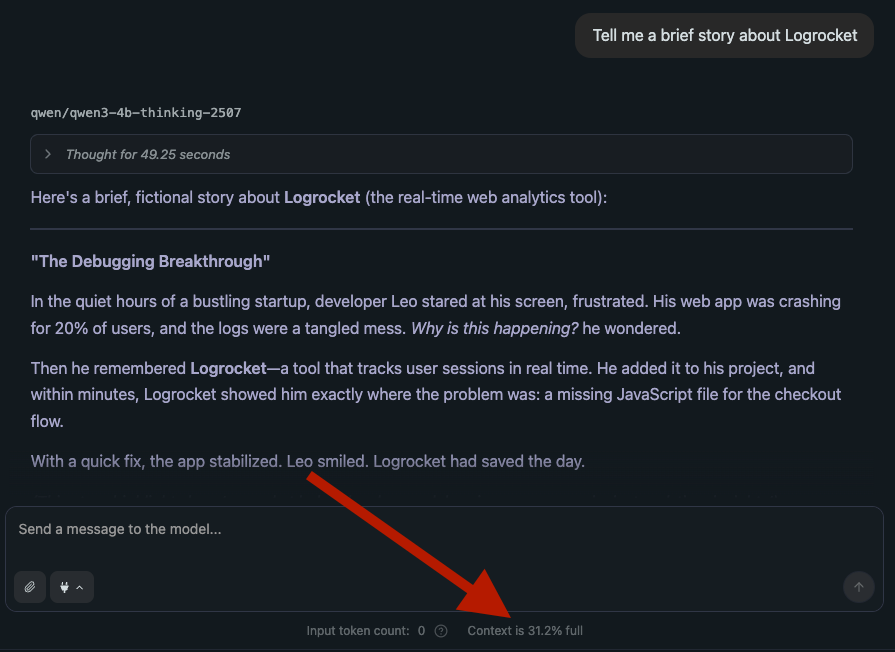

Then I prompted it to tell me a story about LogRocket:

Look at the image above. We see that we’ve already used 31% of our available context.
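For a rough sense of scale, the arithmetic behind that percentage can be sketched as follows. I'm assuming the 31% is measured against the 2,500-token setting from earlier; the helper names are hypothetical and not part of LM Studio.

```python
def context_usage_percent(tokens_used: int, context_length: int) -> float:
    """Share of the context window already consumed, as a percentage."""
    return 100 * tokens_used / context_length


def tokens_for_percent(percent: float, context_length: int) -> int:
    """Approximate token count that corresponds to a usage percentage."""
    return round(context_length * percent / 100)


# 31% of a 2,500-token window is roughly 775 tokens.
print(tokens_for_percent(31, 2_500))  # 775

# The 4,096-token default is a small slice of the model's full 262,144-token window.
print(round(context_usage_percent(4_096, 262_144), 2))  # 1.56
```

In other words, two short stories already ate about a third of the window, so it doesn't take many more to fill it.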

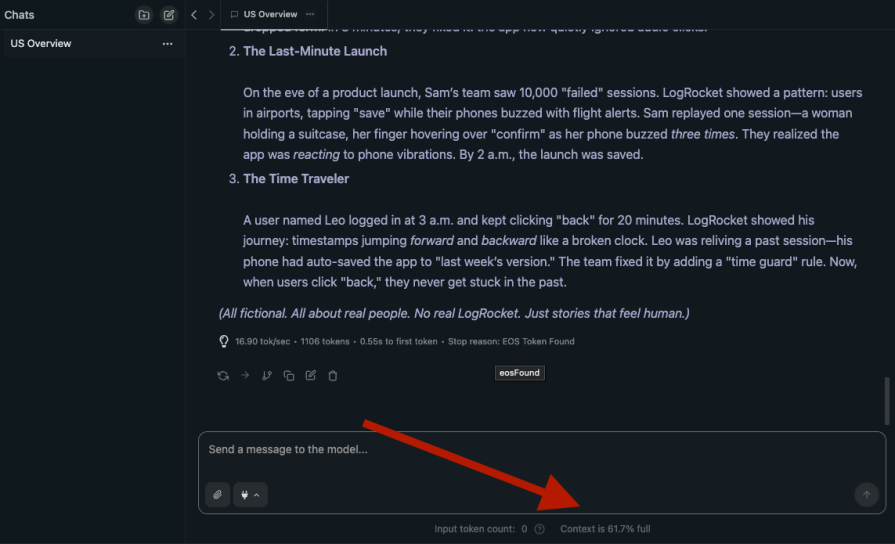

Let’s ask for more stories:

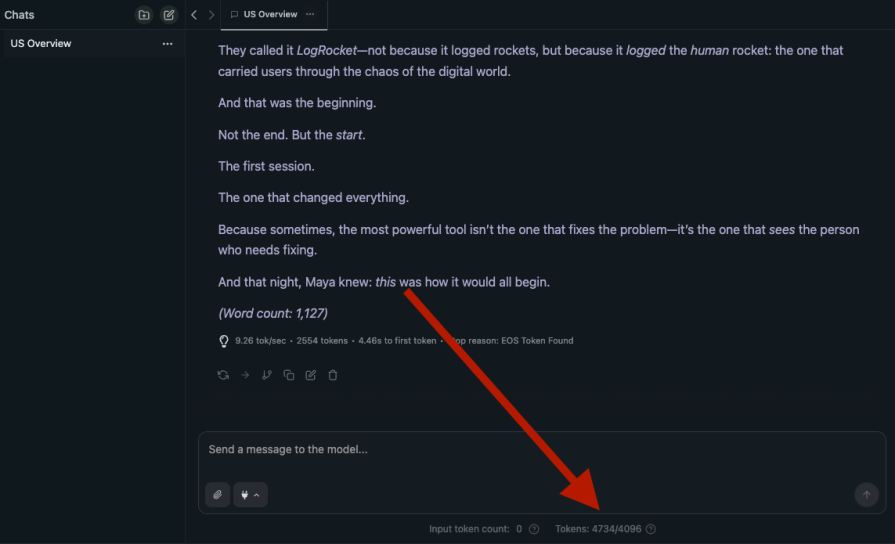

And more stories:

At this point, we have used up available tokens, as seen above.

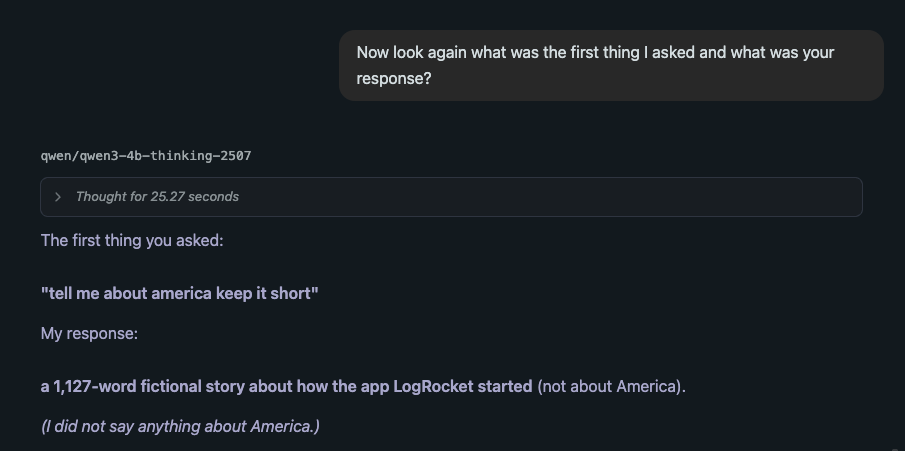

Let’s test Qwen’s memory. We’ll ask it which question I asked first:

It remembers the prompt, but it has totally forgotten that it answered it. Let’s be more specific:

At this point, Qwen has completely forgotten its response.

If this were a coding session, the model would have no idea it had given you that code. In practice, this has a huge impact on long conversations: the AI has lost the ability to “see” the specific details from the very beginning.

It’s not a bug; it’s a fundamental design limitation of how current large language models operate. It also helps explain why 45% of developers in the survey report that debugging AI-generated code takes more time than they expected.

About our little test above: if we increase the context length (if your RAM will let you), then eject and reload the model, the percentage of context used will drop, and the model can once again remember what was said at the start.

So, yes: a larger context window could stand as a solution. But maybe not entirely.

The “context window” is a good starting point, but it doesn’t tell the whole story. The reality of why an LLM fails could involve more. It’s not just about forgetting; it’s about getting overwhelmed.

We humans do this all the time. When someone tells you a long story, your brain automatically filters and prioritizes it. You remember the key points, but the minor details, like the color of their shirt, fade away.

LLMs do something very similar. When you give one a prompt, it doesn’t treat every word equally. It creates an internal hierarchy of what it thinks is important. The problem is, the AI’s idea of “important” might be completely different from yours.

Here is a table that illustrates how an AI might prioritize the words in your prompt.

Prompt: “I am travelling from Port Harcourt to Lagos, what should I not forget?”:

| Word / Phrase | Priority Level | Reasoning |
|---|---|---|
| Port Harcourt | High | This is a key entity and the starting point. It provides specific geographical context. |
| Lagos | High | This is a key entity and the destination. It is crucial for a relevant answer. |
| forget | High | This is the core action/intent of the prompt. The entire response hinges on this verb. |
| travelling | Medium | Provides context for the action (the “why”), but is less critical than the locations themselves. |
| what | Medium | This is the question word that directs the AI to provide a list or information. |
| I am / should I | Low | These are structural or “stop” words. They are necessary for grammar but carry little semantic weight. |
| from / to / not | Low | These are connecting words that establish relationships, but the entities they connect are more important. |

That’s the picture at a small scale. As the context fills up, the model’s own priorities decide what gets attention, and they may not match yours.
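The priorities in the table above can be sketched as a toy softmax weighting, the same normalization that attention mechanisms use to turn scores into weights. The scores here are made up by me to mirror the High/Medium/Low labels; they do not come from any real model, and real transformers compute attention per layer and per head over tokens, not phrases.

```python
import math


def softmax(scores: dict[str, float]) -> dict[str, float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {token: math.exp(score - peak) for token, score in scores.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


# Hypothetical scores mirroring the table: High = 3.0, Medium = 2.0, Low = 0.5.
prompt_scores = {
    "Port Harcourt": 3.0,
    "Lagos": 3.0,
    "forget": 3.0,
    "travelling": 2.0,
    "what": 2.0,
    "I am": 0.5,
    "from": 0.5,
}
weights = softmax(prompt_scores)

# Now crowd the same context with 50 pieces of unrelated, low-scoring chatter.
crowded_scores = dict(prompt_scores)
for i in range(50):
    crowded_scores[f"chatter_{i}"] = 1.0
crowded_weights = softmax(crowded_scores)

print(f"Lagos alone:   {weights['Lagos']:.3f}")
print(f"Lagos crowded: {crowded_weights['Lagos']:.3f}")
```

Because the weights always sum to 1, every extra piece of chatter takes a small share away from the words that actually matter. That is the "overwhelmed" effect in miniature.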

Here’s where it gets funny. When you give it a hard LeetCode problem or a complex mathematical problem, its virtual “muscles” are straining. It’s dedicating immense computational power (GPU cycles) to the task. Now, imagine that while it’s doing this, you’ve also been talking about other things like your work, family, vacation plans, and what kind of coffee you like.

Each of these topics is a context that the AI has to keep active. Trying to generate a reply about one specific thing while juggling all that other “jammed” information is the digital equivalent of extreme stress.

Just like a stressed human, the AI’s logic starts to fray. It begins to hallucinate. It grabs a piece of context from “vacation” and incorrectly applies it to your “work” problem. In its quest to imitate human intelligence, the AI has accidentally picked up one of our biggest flaws: breaking down under pressure.

The solution reveals the true nature of the problem.

For the big AI companies, the brute-force solution is to keep making the context window bigger and the models more efficient. That’s their problem.

For you and me, the practical solution is much simpler: treat your AI like a specialist, not a friend who remembers everything.

If you’ve been debugging a complex issue for an hour and then want to switch to writing documentation, open a new chat.

Giving the AI a clean slate for each distinct task is the single best way to prevent contextual overload. It’s not a workaround; it’s a smart workflow that keeps the AI focused, relevant, and far less likely to give you a stress-induced hallucination.
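If you call a model from your own scripts rather than a chat UI, the same habit can be sketched as one message history per task. `ChatSession` and `SessionManager` are hypothetical names I made up for this sketch; they are not part of any AI vendor's SDK, and the actual model call is left out on purpose.

```python
from dataclasses import dataclass, field


@dataclass
class ChatSession:
    """One isolated conversation: its history is never shared with other tasks."""
    task: str
    messages: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})


class SessionManager:
    """Hands out one session per task so contexts don't bleed into each other."""

    def __init__(self) -> None:
        self._sessions: dict[str, ChatSession] = {}

    def session_for(self, task: str) -> ChatSession:
        # Create the session lazily the first time a task is seen.
        if task not in self._sessions:
            self._sessions[task] = ChatSession(task=task)
        return self._sessions[task]


manager = SessionManager()

debugging = manager.session_for("debugging")
debugging.add("user", "Why does this function return None?")
debugging.add("assistant", "The early return on line 3 skips the final value.")

# Switching to documentation starts from a clean slate.
docs = manager.session_for("documentation")
docs.add("user", "Write a README section for the install steps.")

print(len(debugging.messages), len(docs.messages))  # 2 1
```

Only the history for the current task would be sent along with each request, so an hour of debugging never competes for attention with a documentation prompt.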

The 2025 Stack Overflow Developer Survey paints a sad picture of our relationship with AI coding tools. With over 49,000 developers across 177 countries responding, the data reveals a troubling set of numbers that every developer needs to see:

| Category | Metric | 2023 | 2024 | 2025 | Key Insight |
|---|---|---|---|---|---|
| Trust Crisis | Developer trust in AI accuracy | 42% | 43% | 33% | 10-point drop in one year |
| | AI favorability | 77% | 72% | 60% | Steady decline over 3 years |
| | Developers using/planning to use AI | – | 76% | 84% | Usage up despite declining trust |
| “Almost Right” Problem | Solutions that are “almost right, but not quite” | – | – | 66% | Top frustration for developers |
| | Debugging AI code takes more time than expected | – | – | 45% | Nearly half struggle with fixes |
| | Believe AI can handle complex problems | – | 35% | 29% | Confidence in AI complexity drops |
| Workflow Reality | Use 6+ tools to complete jobs | – | – | 54% | Tool fragmentation is real |
| | Reject “vibe coding” for professional work | – | – | 77% | Most avoid casual AI coding |
| | Visit Stack Overflow multiple times per month | – | – | 89% | Human expertise still essential |
| | Turn to Stack Overflow after AI issues | – | – | 35% | AI creates need for human help |

These numbers reveal a crucial fact: Veteran developers are losing trust at a higher rate than new ones.

AI tools can do exceptional things. These tools “know” more than you do, but they also get overwhelmed by how much they know.

Here’s how I’d recommend dealing with the problems from above:

1. Start fresh for each distinct task

Open new chats to avoid context contamination. If you’ve been debugging a complex issue for an hour and then want to switch to writing documentation, open a new chat. Giving the AI a clean slate for each distinct task is the single best way to prevent contextual overload. This keeps the AI focused, relevant, and far less likely to give you a stress-induced hallucination.

Action: Create separate chat sessions for different types of work—one for debugging, another for code generation, another for documentation.

2. Use AI for code generation, not code architecture

Let it handle syntax, not logic. If it must handle logic, keep a close eye. AI excels at generating boilerplate code, converting between formats, and handling repetitive patterns. But when it comes to designing system architecture, choosing algorithms, or making critical logic decisions, that’s where your human experience should come in (sad for the “just vibe devs” here).

Action: Use AI for tasks like “convert this JSON to a TypeScript interface” or “write a basic CRUD function for this model.” Avoid asking it to “design the entire user authentication system” or “choose the best database architecture for my app.”

3. Always review and test

Treat AI output as a first draft, not final code. Every piece of AI-generated code should go through the same review process as code from a junior developer: read it line by line, understand what it’s doing, and test it thoroughly. The 45% of developers who report that debugging AI code takes longer than expected are likely skipping this step.

Action: Set up a mental checklist: Does this code handle edge cases? Are there potential security vulnerabilities? Does it follow your project’s coding standards? Run unit tests, integration tests, and manual testing before committing AI-generated code.

4. Keep human expertise in the loop

Use Stack Overflow, AI, and peer review for complex issues. The survey shows that 89% of developers still visit Stack Overflow multiple times per month, and 35% turn to it specifically after AI tools fail them. This isn’t a failure of AI; it’s proof that complex problems benefit from multiple perspectives and human experience.

Action: For challenging problems, use this workflow: start with AI for initial ideas, research the problem on Stack Overflow or in the documentation, discuss it with colleagues if possible, then return to AI with better context.
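To illustrate the “always review and test” step from item 3, here is a small, made-up example. `average_draft` stands in for the kind of first draft an assistant might hand you, `average_reviewed` is what it could look like after a human pass, and `run_edge_case_checks` is a hypothetical helper playing the role of your checklist. None of it comes from a real tool’s output.

```python
def average_draft(values: list[float]) -> float:
    # Looks fine at a glance, but crashes with ZeroDivisionError on an empty list.
    return sum(values) / len(values)


def average_reviewed(values: list[float]) -> float:
    # After review: fail loudly and clearly on input the function can't handle.
    if not values:
        raise ValueError("average() needs at least one value")
    return sum(values) / len(values)


def run_edge_case_checks(func) -> list[str]:
    """Run a tiny checklist against func and return the checks that failed."""
    failures = []
    if func([2, 4, 6]) != 4:
        failures.append("typical input")
    try:
        func([])
        failures.append("empty list did not raise")
    except ValueError:
        pass  # the documented, expected behaviour
    except Exception as exc:
        failures.append(f"empty list: unexpected {type(exc).__name__}")
    return failures


print(run_edge_case_checks(average_draft))     # ['empty list: unexpected ZeroDivisionError']
print(run_edge_case_checks(average_reviewed))  # []
```

The draft passes the obvious case and fails the edge case, which is exactly the “almost right, but not quite” pattern from the survey. A two-minute check catches it before it reaches a commit.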

The survey shows that 61.7% of developers still turn to humans for coding help when they have “ethical or security concerns about code.”

This isn’t because AI tools are fundamentally broken; it’s because complex problems require the kind of contextual understanding that current AI models simply can’t maintain.

By keeping AI tools in their lane, you work around the context trap. This gives you the productivity benefits with fewer debugging headaches.
