As a frontend developer, I use AI generously in my daily workflow. Not only does it increase my efficiency at work, but it also frees up more time for improving the features I’ve built.

AI is able to build things in minutes or even seconds that would take humans hours to do. But its biggest strength is also its biggest flaw. AI is, at its core, a tool used to imitate human knowledge in an advanced capacity. So while it’s able to produce work faster, it also inherits all our biases.

It’s important to address these biases when building products to ensure that what we’re developing can be used by everyone.

A common mistake developers make is assuming that “everyone” refers to people like them. But this isn’t the case.

There are over 1 billion people in the world with some form of disability, many of whom need accommodations to use certain products. This is why accessibility should be front and center in our development process, and also why it’s dangerous to remove the focus from humans and rely solely on artificial intelligence.

It’s simply too easy to slip into a “works for me” mentality and ignore those who are unable to use these products. There are also legal ramifications for inaccessible products, so it’s actually imperative that businesses make a deliberate effort to include accessibility in their development process to avoid lawsuits or fines.

In this article, we’ll take a look at areas where AI falls short on accessibility and how we can best harness AI for an optimized workflow — while still building products that everyone can use.

Note: This article assumes basic knowledge of HTML, JavaScript, and web accessibility practices.

When working with AI agents, it’s easier to avoid accessibility pitfalls once we’re aware of them. These are some of the glaring faults in the field of accessibility:

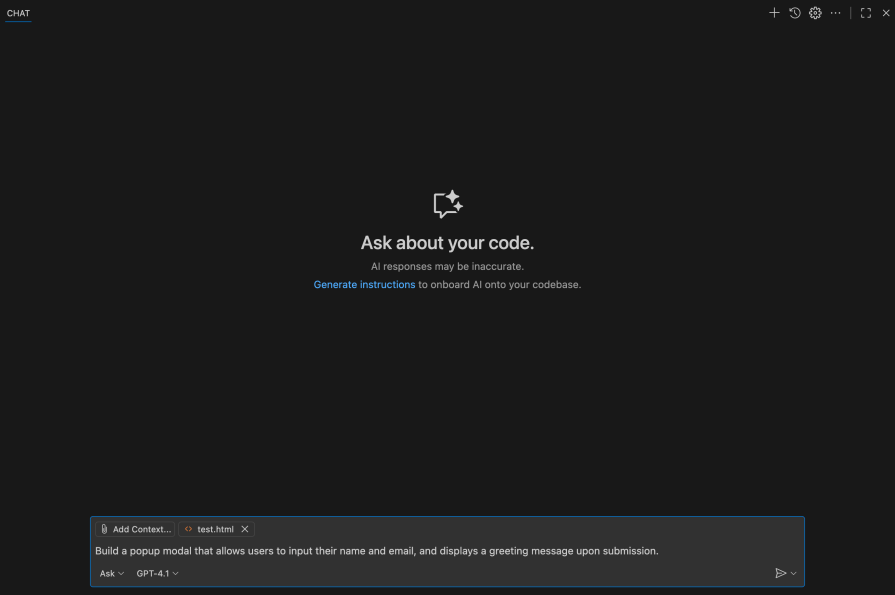

Let’s take a look at a simple prompt. I’m asking the AI agent to build a pop-up modal for a landing page. The prompt is, “Build a pop-up modal that allows users to input their name and email, and displays a greeting message upon submission”:

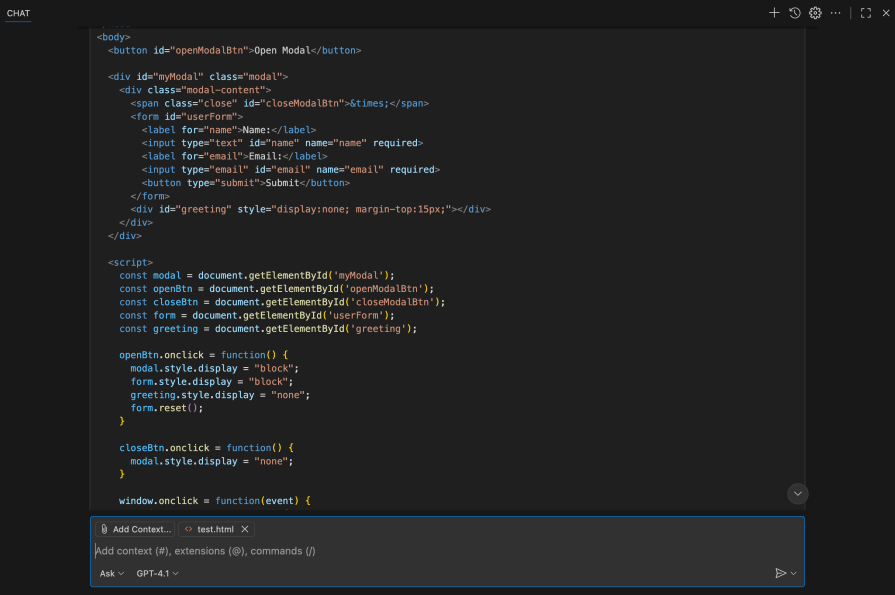

And this is the generated code:

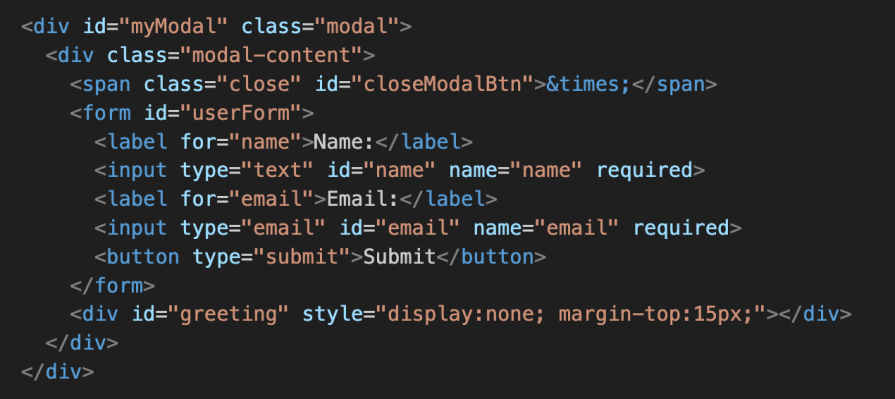

If we extract the generated HTML for the modal, this is what we have:

```html
<button id="openModalBtn">Open Modal</button>

<div id="myModal" class="modal">
  <div class="modal-content">
    <span class="close" id="closeModalBtn">×</span>
    <form id="userForm">
      <label for="name">Name: </label>
      <input type="text" id="name" name="name" required>
      <label for="email">Email:</label>
      <input type="email" id="email" name="email" required>
      <button type="submit">Submit</button>
    </form>
    <div id="greeting" style="display:none; margin-top:15px;"></div>
  </div>
</div>
```

While the generated code does create a functional modal, this modal is completely inaccessible. For starters, users who rely on keyboards are unable to close it, as the close button is a `<span>` element that can’t receive focus and has no keyboard event handler.

Furthermore, there’s no way for non-visual users to know that a modal is visible on the screen, as it has neither a dialog `role` nor an `aria-modal` attribute.

(There are several other accessibility issues in this implementation. See how many you can spot and leave them in a comment 🙂.)

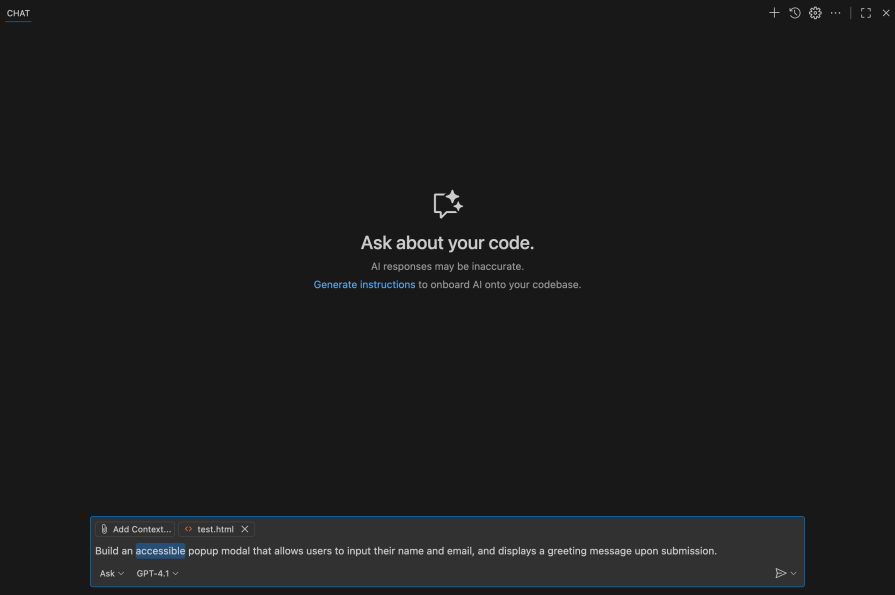

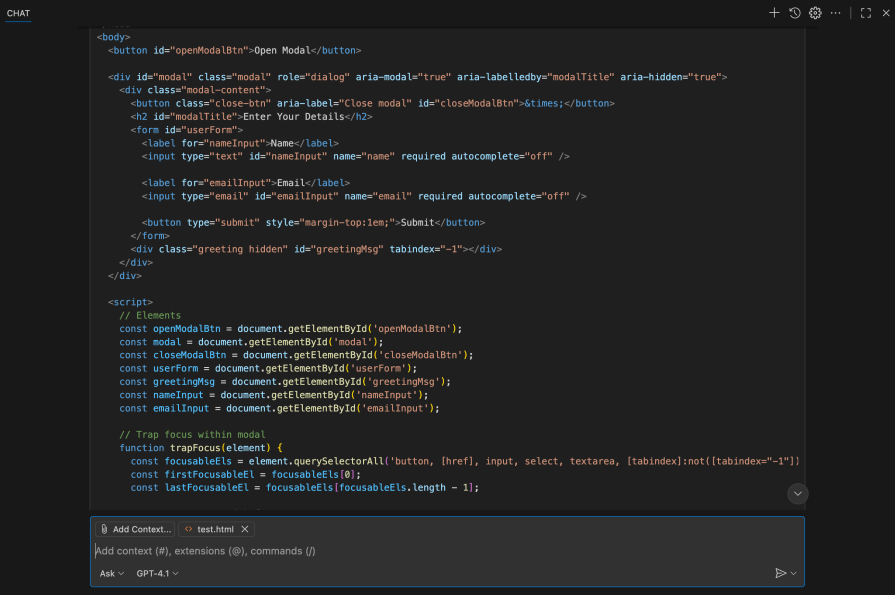

Now, let’s update our prompt to the following: “Build an accessible pop-up modal that allows users to input their name and email, and displays a greeting message upon submission.”

Taking a look at the generated code, we’ll notice that several updates have been made:

```html
<button id="openModalBtn">Open Modal</button>

<div id="modal" class="modal" role="dialog" aria-modal="true" aria-labelledby="modalTitle" aria-hidden="true">
  <div class="modal-content">
    <button class="close-btn" aria-label="Close modal" id="closeModalBtn">
      ×
    </button>
    <h2 id="modalTitle">Enter Your Details</h2>
    <form id="userForm">
      <label for="nameInput">Name</label>
      <input type="text" id="nameInput" name="name" required autocomplete="off" />
      <label for="emailInput">Email</label>
      <input type="email" id="emailInput" name="email" required autocomplete="off" />
      <button type="submit" style="margin-top:1em;">Submit</button>
    </form>
    <div class="greeting hidden" id="greetingMsg" tabindex="-1"></div>
  </div>
</div>
```

The correct attributes have been added to the modal element, and the close button now uses an actual <button> element with a clear label.
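Markup is only part of the work for a custom modal like this one. `aria-modal="true"` tells assistive technology to treat the rest of the page as inert, but it does not trap keyboard focus or close the modal on Escape; script has to do both. Below is a minimal sketch of that extra keyboard handling. It is not the agent’s actual output: `getNextFocusIndex`, `createModalKeyHandler`, `FOCUSABLE_SELECTOR`, and the `hideModal` callback in the usage comment are hypothetical names introduced for illustration.

```javascript
// Hypothetical helper: index of the element that should receive focus next,
// wrapping around so that Tab and Shift+Tab never leave the modal.
function getNextFocusIndex(currentIndex, total, shiftKey) {
  if (total === 0) return -1;
  if (shiftKey) return currentIndex <= 0 ? total - 1 : currentIndex - 1;
  return currentIndex >= total - 1 ? 0 : currentIndex + 1;
}

// Selector for elements that can normally receive keyboard focus.
// (Simplified assumption: it ignores cases such as visually hidden elements.)
const FOCUSABLE_SELECTOR =
  'a[href], button:not([disabled]), input:not([disabled]), ' +
  'select:not([disabled]), textarea:not([disabled]), ' +
  '[tabindex]:not([tabindex="-1"])';

// Hypothetical factory: returns a keydown handler for a custom modal.
// `closeModal` is whatever function hides the modal in your own code.
function createModalKeyHandler(modal, closeModal) {
  return function onKeydown(event) {
    if (event.key === "Escape") {
      closeModal();
      return;
    }
    if (event.key !== "Tab") return;

    const focusable = Array.from(modal.querySelectorAll(FOCUSABLE_SELECTOR));
    if (focusable.length === 0) return;

    const current = focusable.indexOf(modal.ownerDocument.activeElement);
    const next = getNextFocusIndex(current, focusable.length, event.shiftKey);
    event.preventDefault(); // take over Tab so focus stays inside the modal
    focusable[next].focus();
  };
}

// Browser-only usage, assuming the ids from the markup above and a
// hypothetical hideModal() function defined elsewhere:
// const modal = document.getElementById("modal");
// modal.addEventListener("keydown", createModalKeyHandler(modal, hideModal));
```

A complete implementation would also move focus into the modal when it opens and return it to the trigger button when it closes, which adds yet more code to maintain.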

This exercise shows one of the prominent issues with AI when it comes to accessibility: unless explicitly prompted, AI-generated code is not accessible by default.

And this is very much a human fault, as well. AI provides the most popular solutions to a problem. So if we as humans don’t care enough about accessibility to include it as a default in our code, why should machines?

Let’s go back to our code example. Even with the accessibility update, this implementation has another problem: it’s outdated.

Thanks to the steady release of new web features, modern HTML now offers improved built-in alternatives to many custom JavaScript implementations.

The `<dialog>` element, for example, allows us to create accessible HTML modals with minimal JavaScript, and it handles most of the accessibility requirements of a modal by default. However, the solution generated by the AI agent ignores this feature in favor of a custom JavaScript implementation, one that requires more code to meet the same accessibility requirements already built into the semantic HTML element.
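For comparison, here is a minimal sketch of the same modal built on `<dialog>`. The ids and the `wireDialog` helper are hypothetical names chosen for illustration. In browsers that support it, opening a dialog with `showModal()` makes the rest of the page inert, moves focus into the dialog, closes it on Escape, and returns focus to the previously focused element afterwards, so none of that needs custom script.

```javascript
// Markup assumed by this sketch (all ids are hypothetical):
//
// <button id="openDialogBtn">Open dialog</button>
// <dialog id="userDialog" aria-labelledby="dialogTitle">
//   <button id="closeDialogBtn" aria-label="Close dialog">×</button>
//   <h2 id="dialogTitle">Enter Your Details</h2>
//   <!-- the same name/email form as in the generated code -->
// </dialog>

// Hypothetical helper: connects the open and close buttons to the dialog.
// showModal() and close() are standard HTMLDialogElement methods.
function wireDialog(dialog, openButton, closeButton) {
  openButton.addEventListener("click", () => dialog.showModal());
  closeButton.addEventListener("click", () => dialog.close());
}

// Only runs in a browser, where `document` exists.
if (typeof document !== "undefined") {
  wireDialog(
    document.getElementById("userDialog"),
    document.getElementById("openDialogBtn"),
    document.getElementById("closeDialogBtn")
  );
}
```

Everything else a modal needs from the keyboard is left to the browser, which is the main reason this version is so much shorter than a custom implementation.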

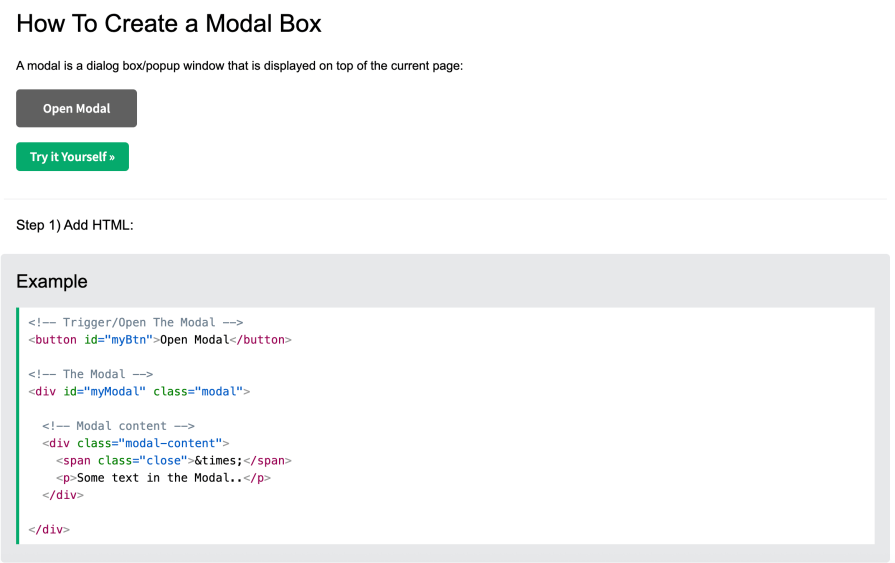

Why? AI is trained largely on public code and popular patterns, many of which come from tutorials and articles published years ago. As a matter of fact, the modal initially generated by the AI agent is a word-for-word copy of a snippet from a W3Schools tutorial.

To be fair, I did ask the agent why it didn’t use the dialog element as an accessible alternative to the initial inaccessible modal, and this is what it had to say:

“Why didn’t you use a dialog element instead of a custom modal implementation?”

> Great question! The `<dialog>` element is a semantic, native way to create modals in HTML and provides built-in accessibility features like focus management and keyboard handling. In many modern browsers, it’s the preferred approach for modal dialogs.
>
> Reasons I used a custom modal instead of `<dialog>`:
>
> - Browser support: While `<dialog>` is widely supported in modern browsers, some older browsers or certain environments may not support it fully.
> - Styling and behavior: Custom modals allow for more control over appearance and advanced behaviors, though most needs can be met with `<dialog>`.
> - Legacy code or frameworks: Some projects require custom solutions for consistency or integration with existing code.
>
> However, using `<dialog>` is recommended when possible.

And, after further prompting:

“Is it possible to use dialog and fix the errors of browser support and styling? Or do you think it’s better to use a custom JavaScript modal?”

> Yes, it is possible to use the `<dialog>` element and address browser support and styling issues:
>
> 1. Browser Support
>
> - Most modern browsers support `<dialog>`.
> - For older browsers, you can use a polyfill like dialog-polyfill, which makes `<dialog>` work everywhere.
>
> 2. Styling
>
> - You can fully style `<dialog>` with CSS, just like any other element.
> - You may need to reset some default styles for consistency across browsers.
>
> 3. Accessibility
>
> - `<dialog>` provides built-in accessibility features, such as focus trapping and keyboard navigation.
>
> Conclusion:
>
> Using `<dialog>` with a polyfill for unsupported browsers is generally recommended. It’s more semantic, accessible, and easier to maintain than a fully custom modal. Only use a custom modal if you have very specific requirements that `<dialog>` cannot meet.

So the agent arrived at the conclusion that the `<dialog>` element is the recommended approach for accessibility. However, this took more prompting on my part. (Of course, it’s also possible that the agent was just saying that to agree with me, as AI agents are known to be sycophantic, but I tried to keep the prompts as neutral-sounding as possible.)

Defaulting to the popular pattern can lead us into a vicious cycle where AI keeps regurgitating the same bad code we used in the past, which is then included in today’s codebases. That code is in turn used to train future AI agents, and the cycle continues. This leads us to our next problem.

We’re getting to a stage where most developers are using AI tools in some form for their workflow. While this is great for improving speed and efficiency, the issue that arises from this is: what happens when we stop training AI and AI starts training us?

For the new batch of junior developers joining the tech world, it’s getting harder to focus on learning basics. After all, look at all the shiny new agents that can solve every LeetCode and HackerRank problem for you without needing to understand how we arrive at the solution.

It’s similar to using calculators in math class. Obviously, we’ve very much moved past the point of using abacuses. While we can use calculators to solve very complex mathematical problems, it’s still important to be taught the basics of mathematical formulas because it encourages us to think.

So what’s the cost of relying on AI to solve problems without taking the time to learn how to approach those problems in the first place? It’s that we end up with code that we don’t understand and solutions that we didn’t think of. Much like a calculator, AI needs to be used as a tool and not a replacement for our brains.

So how can we achieve a more efficient approach to using AI in a way that focuses on human needs?

AI agents aren’t inherently bad at accessibility; they’re just trained that way. So it stands to reason that the fix lies in the training. Here are some practical ways to ensure accessible-first development in AI-generated code:

Most AI agents allow users to provide a fixed set of instructions that determine how the agents generate responses. This is a great way to combat the default nature of inaccessible generated code, as it ensures that the AI agent is always generating code that meets accessibility guidelines.

These instructions can either be included in the general configuration of the agent so it uses the same set of instructions every time, or they can be added on a repository or project basis so it’s limited to a specific codebase.

This is an example of a prompt that you could include in custom instructions for your AI agent:

```
// Generate code using accessible defaults.
// - Use semantic HTML elements where possible
// - Include keyboard navigation and focus management
// - Add ARIA only when needed.
// - Maintain accessible design standards according to WCAG guidelines (https://www.w3.org/WAI/WCAG21/Understanding/intro#understanding-the-four-principles-of-accessibility)
```

It’s also important to provide clear and precise prompts and helpful context, like “Take the role of a frontend developer”.

Different agents have different ways of adding these custom instructions. These are links for some of the more popular coding agents:

Developers aren’t able to prompt AI for better results if they don’t know what better results should look like. So, a crucial step in achieving more accessible AI-generated code is training developers.

As developers, we should constantly strive to remain up-to-date with the newest innovations in technology and not just rely on artificial intelligence. Whether this is keeping up with the latest releases in frontend features or constantly testing with assistive technologies to manually identify accessibility issues, it’s our responsibility to be aware of best practices for our codebase.

This knowledge also helps us fact-check the AI-generated code to avoid hallucinations and incorrect responses.

To learn more about accessibility, I can recommend the following resources to start with:

Much like unit tests that ensure predictability in the codebase, accessibility checks should be carried out at every stage of the development process to ensure the codebase meets the basic standards for accessibility.

Incorporating accessibility checks into every stage of development, from planning and design to development and testing, will help ensure that inaccessible code is detected early on and resolved quickly. If the design and planning stages follow the accessibility guidelines for content and UI, it makes it easier to maintain the accessibility requirements in the development stage.

Automated accessibility tests can also be included in a codebase as part of the integration tests or as linting rules to catch easily fixable accessibility issues in the code. This can include issues like:

- Skipped heading levels (for example, an `h4` appearing after an `h2` element in the same section)

This is especially necessary when working with a large codebase, as it becomes harder to manually audit AI-generated code, and automated accessibility tests detect these issues faster. Developers can also use in-code libraries like axe-core, automated tools like Lighthouse, or other auditing tools to test for these accessibility issues.
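As a rough illustration of what such an automated check does, the sketch below flags skipped heading levels like the `h2` to `h4` jump mentioned above. `findSkippedHeadings` is a hypothetical helper written for this example and is not part of axe-core or Lighthouse, which ship their own, more thorough rules.

```javascript
// Hypothetical helper: given heading levels in document order (1 for <h1>,
// 2 for <h2>, ...), report every place where a level is skipped going deeper.
function findSkippedHeadings(levels) {
  const problems = [];
  for (let i = 1; i < levels.length; i++) {
    const previous = levels[i - 1];
    const current = levels[i];
    if (current > previous + 1) {
      problems.push({ index: i, from: previous, to: current });
    }
  }
  return problems;
}

// Plain-data usage: the h2 -> h4 jump at position 2 is flagged.
const report = findSkippedHeadings([1, 2, 4, 3, 2, 3]);
console.log(report); // one entry: { index: 2, from: 2, to: 4 }

// Browser-only usage: collect the levels from the live page.
if (typeof document !== "undefined") {
  const headings = document.querySelectorAll("h1, h2, h3, h4, h5, h6");
  const pageLevels = Array.from(headings, (h) => Number(h.tagName[1]));
  console.log(findSkippedHeadings(pageLevels));
}
```

Because the check is a pure function over a list of numbers, it can run in an integration test against rendered markup without a screen reader or a person in the loop. That also shows its limit: it knows nothing about whether the headings make sense to a human.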

Another important part of accessibility testing is manual tests, as automated tests can only detect so much. Implementing an accessibility checklist helps developers remember the areas they need to manually test for accessibility when implementing a new feature.

A checklist can look like this:

It also helps to utilize screen readers and keyboard-only navigation when carrying out these manual tests. This helps to avoid only testing for flaws in ways that we would normally use a product, or providing solutions for only the bugs that affect us.

With the onset of AI, we’ve seen a lot of accessibility improvements. These include accessible colour palette generators, automated image descriptions and language captioning, and even brand new innovations like an AI-powered visual description tool for people with visual disabilities.

Based on these advancements, one field of accessibility that I think would greatly benefit from artificial intelligence is product testing.

Even with all the methods listed above, accessibility testing is by no means straightforward. There’s still no one-size-fits-all process, and it requires a lot of manpower and time.

So it would be particularly useful if AI agents were able to combine all the necessary methods for accessibility testing in an automated way that greatly speeds up the process, especially if the agent is trained on the specific accessibility data for testing.

Instead of simply generating the code, the agent could also:

Of course, even this has its own in-built problem. There are some accessibility requirements that just can’t be automated, especially with regard to competing accessibility needs, but it would be a good first step to building a fully accessible web.

AI has transformed how we work, but it has also inherited our mindset on the way we build. Accessibility should not be an afterthought in our development process; it should be ingrained in every aspect of how we develop products.

This shift towards accessible-first development needs to be taught to the AI agents developing our products, too. At the end of the day, whether built by humans or machines, these products are built for everyone, so everyone should be able to use them.
