Alex Guernon is a data-driven product management leader. She was most recently Senior Director, Product Management at Raptive, a creator media company. Alex began her career as an engineer at Electric Boat and MITRE before moving to product management at Wayfair. Alex served as a Senior PM at TripAdvisor and a Product Management Group Leader at Homesite Insurance before joining Raptive.

In our conversation, Alex talks about how she promotes a data-centric culture, including leading by example and giving each PM an effective measurement system for reporting. She discusses her “value divided by effort” approach to prioritizing product enhancements and shares how she helps her team effectively manage ambiguity.

To me, a data-driven culture means using data to inform decisions instead of relying on intuition or opinions. My goal is always to leverage quantitative and qualitative data to guide each product development phase. This matters because data enables PMs and organizations to generate objective insights that can validate assumptions, reduce risk, and ultimately optimize decisions.

This approach can help tech organizations stay competitive and deliver customer-centric solutions by continuously learning from customer behaviors, product performance, and market trends.

One important piece is leading by example. Typically, a lot of product leaders are charged with coming up with goals for their product areas. It’s important to take that opportunity to think critically about KPIs for those product areas. Generating measurable goals for your team is critical to ensuring that they work on helpful things that are aligned with organizational and company goals.

It’s also important to come up with a measurement system for PMs that can be used for reporting purposes. How are they tracking progress and working toward achieving goals? Another way to promote a data-centric culture is to incorporate data into every stage of the product development process, including discovery, prioritization, execution, and post-launch measurement.

The discovery process is a huge opportunity to gather quantitative and qualitative data to help uncover customer pain points from various sources, such as interviews, surveys, events, and conferences where you can talk directly with customers.

Product usage data is another critical source of data for PMs. This is where you can dive into things like acquisition funnel data, churn data, active user stats, and user session durations. Another key source is internal stakeholders. This group typically holds a wealth of information — think customer service folks, account management, and sales. They talk to customers every day and hear about pain points and opportunities that can be helpful to the product.

Prioritization should always be a data-driven exercise, whether you’re doing annual, quarterly, or even sprint planning. Using quantifiable business impact and level of effort data to inform this planning process can be hugely helpful. At Raptive, when we decided that an initiative was important, we defined how to measure its impact right at the beginning of the project. That way, we could build in any product tracking or survey development before the initiative was tested. Then, by the time it was ready for official launch, we had everything set up and ready to go.

We found that this approach teed us up very well for post-launch measurement and results evaluation. Of course, we hoped the results would be successful, but regardless, it helped us make next-step decisions.

Selecting KPIs is important, though sometimes it can be counterintuitive, especially if you manage non-revenue generating products. Thinking through different options can be very helpful in ultimately landing on the right one. At Raptive, one of the work streams that I managed was the customer dashboard. For context, Raptive operates on a rev share business model. As enterprise publishers and content creators generate revenue through their ads, Raptive takes a cut of that.

The dashboard is the primary touch point for customers to see how much they earn through ads and where their traffic is coming from. This dashboard was not a revenue-generating product, so I needed to think about how I could measure the success of the work stream, which was very important to the customer.

At first, I thought, “Well, product usage makes the most sense here. If customers are in the product longer, they’re engaging with the reports and using them to make decisions. The longer they spend in the dashboard, the more valuable it is.” But then I started thinking about some of the feedback we were getting from customers — that they didn’t always know where to find things or that it took a lot of time to get the information they needed and analyze it effectively. I realized we had the opportunity to increase the value of the dashboard by making improvements that reduced time in the product.

I ended up selecting CSAT as the primary KPI for that work stream. However, we did continuously monitor product usage to gauge things like how many new reports people were viewing, what features users were engaging with, and average time spent in the dashboard. But for the reasons I just explained, we did not select product usage as the primary KPI.

My go-to approach is value divided by effort. This means thinking about what the business value would be if you could address this pain point or implement a solution, and what the level of effort is to actually implement it. Typically, you get that by working with your engineering counterpart to provide estimates in that area. Those two pieces of data can really be helpful in stack ranking different product opportunities.

With those two values, you can quickly identify quick wins — the high-value, low-effort projects. You want to get those out as soon as possible because they’re going to be impactful. On the opposite end, any low-value, high-effort projects should fall to the bottom. I’ve never been in a situation where I’ve had enough resources to work on those projects.

There will also be some high-value, high-effort projects. These are longer-term, strategic initiatives that you don’t want to put off. You have to balance them against the quick wins. Make time to ultimately get to them, but realize that they are going to take a little longer. Thinking about ways to run these projects in parallel when you’re building your roadmap is really important.

Lastly, there are additional factors like dependencies. Sometimes, you need the data team to work on something before you can implement a certain piece. You have to think about how that weighs in with your timelines. If you’re in a situation where there is a very strict deadline to consider, that might move things up or down. In general, I find the value divided by effort plus some other considerations to be a very valuable framework.
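As a rough illustration of the value-divided-by-effort idea described above, here is a minimal Python sketch. The initiative names, the scoring scale, and the effort unit are hypothetical and chosen only for the example. In practice, the value and effort estimates would come from the PM and their engineering counterpart, and the ranking would still be adjusted for dependencies and deadlines.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    value: float   # estimated business value (hypothetical 1-10 scale)
    effort: float  # estimated effort (hypothetical unit, e.g. engineer-weeks)

    def __post_init__(self):
        # Effort must be positive, otherwise the ratio is undefined.
        if self.effort <= 0:
            raise ValueError("effort must be greater than zero")

    @property
    def score(self) -> float:
        # Higher score means more value per unit of effort.
        return self.value / self.effort


def stack_rank(initiatives):
    """Return initiatives sorted from highest to lowest value/effort score."""
    return sorted(initiatives, key=lambda i: i.score, reverse=True)


# Hypothetical backlog covering the quadrants discussed above.
backlog = [
    Initiative("Strategic bet (high value, high effort)", value=9, effort=8),
    Initiative("Low priority (low value, high effort)", value=2, effort=8),
    Initiative("Quick win (high value, low effort)", value=8, effort=2),
]

ranked = stack_rank(backlog)
for item in ranked:
    print(f"{item.name}: {item.score:.2f}")
```

A pure ratio pushes high-value, high-effort work below the quick wins, which is why the ranking is a starting point for the roadmap conversation rather than the final order.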

Fostering a data-driven culture seems very black and white when you’re talking about it, but actually doing that is harder than it seems. Newer PMs, or PMs who haven’t worked in these kinds of environments before, can find navigating this type of environment to be a little stressful and confusing. As a product leader, it’s important to understand where PMs are coming from. Mentoring and coaching people to navigate some of the inherent ambiguity can be really helpful.

Another pitfall can be the impression or assumption that, when you’re coming up with business value estimates to help determine priority, everything needs to be 100 percent accurate. Most of the time, that’s impossible. You can never have a crystal ball into the future. Using the information and data that’s available to generate assumptions and move forward can be tricky, but it’s a skill PMs can learn with help. You need to convey that nobody is expecting 100 percent accuracy. That goes a long way.

So many companies and organizations value speed. Having the time or luxury to get more data is great, but to your point, sometimes you don’t have that. Quickly coming to a decision is a valuable skill. Going back to those assumptions can be very helpful. Think about what type of data you have. Maybe a feature similar to the one you want to launch has already been implemented somewhere else. Can you use its data as a proxy for how your feature will perform?

Another valuable framework that I’ve leveraged when weighing options is listing out the possibilities, along with the pros, cons, risks, and mitigations so that you can have a holistic and better evaluation of trade-offs. What are you trying to optimize for? Having it all in one place allows you to make a proposal — it’s also helpful for having productive conversations with stakeholders and leadership. Overall, these techniques will help you get buy-in and alignment quickly so your team can execute and figure out the next steps from there.

It depends on the situation. When I worked at TripAdvisor on sponsored placements (the ad product for hotels), I had a hypothesis that if we changed the UI of the ad itself, we might be able to generate more bookings via the ad and increase the return on investment for customers purchasing those ads. There were a lot of permutations and different options. By A/B testing several different options, we were able to use data from the test results to inform which one to move forward with.
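To make the A/B testing step concrete, here is a minimal Python sketch of one common way to compare a variant against a control: a two-proportion z-test on conversion rates. This is an illustrative assumption, not a description of how the TripAdvisor tests were actually analyzed, and the booking counts below are made up. With several variants, each comparison would also need a correction for multiple testing.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of control (a) and variant (b).

    Returns the z statistic and a two-sided p-value based on the
    normal approximation, which assumes reasonably large samples.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both rates are equal.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are degenerate")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical data: bookings out of ad impressions for each UI option.
z, p_value = two_proportion_z_test(conv_a=200, n_a=10_000,
                                   conv_b=250, n_b=10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```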

Another example from my time at Raptive is when data showed a drop-off in the acquisition funnel. For context, customers (i.e., content creators and enterprise publishers) need to apply to work with Raptive. If they meet the requirements, they get accepted, but they still need to complete an onboarding flow before ads are installed on their site and they (and Raptive) start generating revenue. We could see that more customers were accepted than went on to install their ads, but we didn’t have granular data to understand where and why that drop-off occurred.

We didn’t want to take a blind approach and just start trying different things to see what worked. Instead, we prioritized tracking to get that granular data. Once we had that, we knew we could come up with a plan for improving that drop-off within the acquisition funnel. Sometimes, just dedicating the time to getting the data you need is very valuable.
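Once step-level tracking exists, locating the drop-off is mostly arithmetic. The Python sketch below shows the idea, assuming a funnel represented as an ordered list of step names and user counts. The intermediate step names and all of the counts are hypothetical, not Raptive data.

```python
def step_conversion(funnel):
    """Compute the conversion rate between each pair of consecutive steps.

    funnel: ordered list of (step_name, user_count) tuples.
    Returns a list of (transition_label, rate) tuples.
    """
    rates = []
    for (prev_name, prev_count), (name, count) in zip(funnel, funnel[1:]):
        # Guard against an empty step to avoid division by zero.
        rate = count / prev_count if prev_count else 0.0
        rates.append((f"{prev_name} -> {name}", rate))
    return rates


# Hypothetical counts for an onboarding flow between acceptance and install.
funnel = [
    ("accepted", 1000),
    ("started onboarding", 800),
    ("completed setup", 400),
    ("ads installed", 360),
]

rates = step_conversion(funnel)
for label, rate in rates:
    print(f"{label}: {rate:.0%}")

# The transition with the lowest conversion rate is where to look first.
worst = min(rates, key=lambda r: r[1])
print(f"Largest drop-off: {worst[0]}")
```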

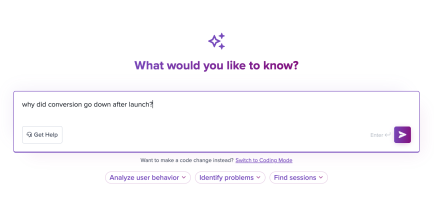
