If you paid attention to product releases in 2025, you probably came away with one of two reactions. Either everything changed forever, or nothing really changed at all and big tech is once again trying to push overhyped tools on users who don’t know better. However, both miss what’s actually happening.

2025 didn’t just deliver another cohort of shiny tools with an AI label. It began to fundamentally rewire how products are built, shipped, and learned from. In doing so, it exposed the limits of decades-old product logic about decision-making, engineering velocity, and user segmentation. As we move into 2026, PMs who continue to operate with pre-AI ways of thinking will find themselves increasingly out of step with how modern products evolve.

So what actually changed, and what does it mean for your career? This article breaks down three distinct AI shockwaves that have already started to reshape the foundations of product work. Each one forces you to rethink what it means to be effective as a product manager today. Let’s start at the top.

In 2025, even the most advanced AI companies struggled with what should have been basic product discipline. Launches failed, capabilities were overstated, and companies made unverified assumptions about safety and governance.

These weren’t edge-case mistakes or growing pains. They were headline-making failures from organizations with some of the brightest minds and deepest pockets, who had every reason to know better:

For example, several high-profile ChatGPT updates were released with powerful new capabilities that lacked clear behavioral guarantees. Features like browsing, memory, advanced voice, and tool use were introduced quickly, then partially rolled back, limited, or changed in ways users didn’t expect.

From a pure AI perspective, the progress might've seemed impressive. From a product perspective, however, the cracks were obvious. Those rushed updates looked designed to keep OpenAI ahead on storytelling rather than on a clearly superior, reliable product.

Classic product principles, such as user research, hypothesis testing, risk management, and iterative validation, exist to ensure that you deliver a quality product instead of a questionable PR stunt. These principles are even more important in the AI era of product management.

An AI model without clear outcomes, steering metrics, clear user value improvements, and safety checks is a liability disguised as an innovation.
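One way to make that concrete is a release gate that holds an AI feature until its outcome and safety checks pass. The sketch below is a minimal illustration, not a prescribed process: the metric names, values, and thresholds are all hypothetical, and a real team would choose its own.

```python
from dataclasses import dataclass


@dataclass
class ReleaseCheck:
    """One steering metric with the threshold it must meet before launch."""
    name: str
    value: float
    threshold: float
    higher_is_better: bool = True

    def passed(self) -> bool:
        if self.higher_is_better:
            return self.value >= self.threshold
        return self.value <= self.threshold


def failing_checks(checks: list[ReleaseCheck]) -> list[str]:
    """Return the names of every check that blocks the release."""
    return [c.name for c in checks if not c.passed()]


# Hypothetical numbers for illustration only.
checks = [
    ReleaseCheck("task_success_rate", 0.91, 0.90),
    ReleaseCheck("unsafe_output_rate", 0.004, 0.001, higher_is_better=False),
    ReleaseCheck("user_rated_helpfulness", 4.3, 4.0),
]

blockers = failing_checks(checks)
print("ship" if not blockers else f"hold: {blockers}")
```

With these made-up numbers, the feature clears its outcome and user value checks but is held back by the safety check, which is exactly the situation a launch driven by storytelling tends to skip past.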

Here’s what those traditional principles look like when you apply them to AI products:

Don’t get me wrong, you don’t need AI to ignore those principles and build updates with no rhyme or reason. However, the missteps of 2025 taught a harsh lesson.

AI alone doesn’t replace product rigor; it magnifies gaps in it. PMs who double down on fundamentals will ship safer, more valuable products.

We've reached a point where AI can write meaningful code, thanks to Cursor-scale funding, breakthroughs in model precision, and better tooling. That doesn't mean it can create perfect code or that you no longer need oversight. But you can now generate code that surfaces logic, data flows, and prototypes you can ship or iterate on.

Lovable went a little further, allowing non-technical people to build products they imagined. You can now ask Gemini to build you copies of well-known 8-bit games from the NES era.

It’s no longer enough to know “what to build” and trust engineers to fill in the rest. PMs who can frame technical problems precisely, validate AI outputs, and iterate prototypes move from coordinators to product drivers.

This also changed the recruitment game. As a PM, you can walk into an interview with a product showcase ready, even before you're given a take-home assignment. Imagine how impressive it looks to an interviewer if you've already identified a major product pain point and arrive with a viable, working solution ready to be picked up in the next sprint.

This gave rise to the so-called “Full Stack PM” in 2025.

Here’s how to think about it in practice:

This forces a recalibration of skills: analytical, systems-level thinking and a working comfort with code outputs are now real sources of leverage.

Over the last decade, our user models were always human. Yes, we aggregated behaviors, segmented demographics, and studied personas, but the actor on the other side of the interface was always a person.

Now imagine this: AI agents acting autonomously on behalf of users. They consume APIs, trigger flows, make optimization decisions, negotiate pricing, and choose content, not because they’re scripted bots, but because they’ve been delegated authority.

In that world, humans aren’t the only users anymore.

This shift breaks a lot of assumptions that underpinned user experience design, segmentation, and feature prioritization:

This isn’t hypothetical. Every automation, every integration, every flow that’s triggered by intelligent agents changes who, or what, interacts with your product.

It works both ways: You may want to design your product so it's more efficient for AI agents, or you may want to prevent agents from interacting with it at all. That could be to protect privacy or copyright, or simply to wait until the legal framework catches up with the technology.
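If you do want to keep agents away from part of your product, one common starting point is a `robots.txt` policy. The sketch below uses Python's standard `urllib.robotparser` to check what a given policy would allow. The agent name `ExampleAgent` and the paths are hypothetical, chosen only to illustrate the idea.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy: keep one named agent out of checkout,
# and leave everything open to all other clients.
ROBOTS_TXT = """\
User-agent: ExampleAgent
Disallow: /checkout/

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def agent_allowed(user_agent: str, path: str) -> bool:
    """Return True if the policy above lets this user agent fetch the path."""
    return parser.can_fetch(user_agent, path)


print(agent_allowed("ExampleAgent", "/checkout/cart"))
print(agent_allowed("ExampleAgent", "/pricing"))
print(agent_allowed("Mozilla/5.0", "/checkout/cart"))
```

Keep in mind that `robots.txt` is a voluntary convention. It states your policy to well-behaved agents, but actually enforcing it takes authentication, rate limiting, or similar controls.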

For example, can you say with absolute certainty which party is legally responsible if a user's AI agent misbehaves and runs up gigantic costs for the user? Or does the AI traffic on your website (which generates no potential profit) make upkeep so expensive that your business can no longer support it?

Those challenges mean that the classic PM mindset of “design for humans first” needs expansion, not abandonment.

2026 is the first year when product management must consciously evolve beyond the classical skill set. You can no longer be a PM who’s good in spite of AI. You have to be a PM who’s good because of it.

Teams that don’t adjust will ship products optimized for a world that no longer exists. There’s no longer a world where humans are the only actors that matter, where engineering velocity is measured by sprints alone, and where product rigor is a nice-to-have luxury.

The PM role has become a hybrid of strategist, systems thinker, and AI-literate builder. And that’s a good thing. Because with this shift comes an opportunity: products that are safer, more adaptive, more valuable, and more intimately tied to the real world, whether that world is human, agentic, or somewhere in between.

Whichever of those is closer to your heart, be sure to monitor this blog for the next product management piece. See you soon in the next blog post!

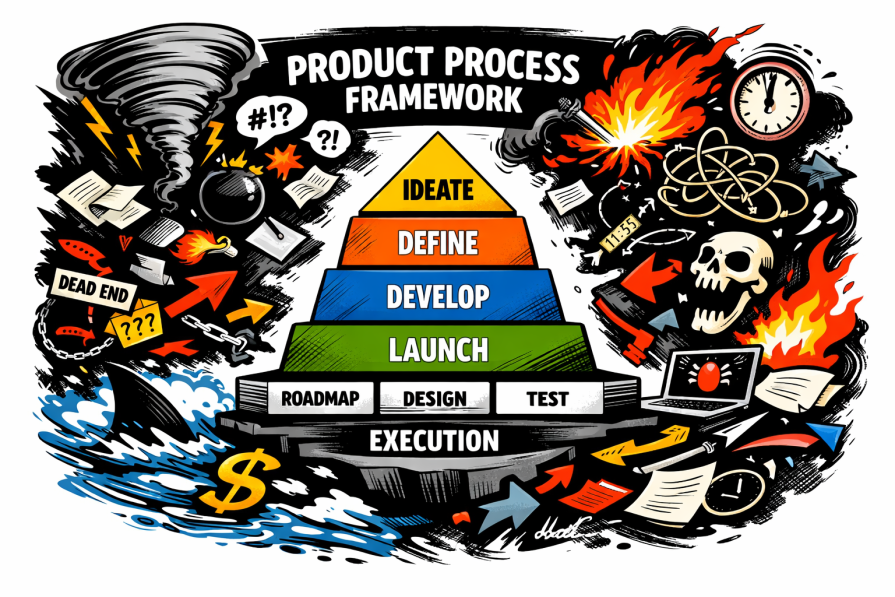

Featured image source: IconScout
