Keeping your application performant is critical to meeting its operational objectives. Caching and memoization help you avoid redundant computations, keeping your applications running smoothly. Cloud-native architectures emphasize fault tolerance and often keep shared state in distributed caching services such as Redis and Memcached.

This tutorial will look at how to improve Node.js application performance using TypeDI and the strategy pattern. More specifically, we’ll build an API service to support switching between Redis and Memcached and use the strategy pattern to keep the service provider design flexible. We will also use dependency injection and rely on the TypeDI package to provide an Inversion of Control (IoC) container. You can read the complete code in the GitHub repository.



The strategy pattern is a software design pattern that groups related implementations (strategies) together and allows other modules to interact using a central class called the Context. Using this pattern, we can support multiple payment and messaging providers within a single application.
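To make the shape of the pattern concrete, here is a minimal sketch of a messaging Context that delegates to interchangeable strategies. The `MessagingStrategy` interface, the `EmailStrategy` and `SmsStrategy` classes, and the strings they return are hypothetical placeholders; a real implementation would call a provider SDK instead.

```typescript
// Every strategy exposes the same method, so the Context can call any of them.
interface MessagingStrategy {
  send(recipient: string, body: string): string;
}

// Hypothetical strategies: each returns a description instead of calling a real provider.
class EmailStrategy implements MessagingStrategy {
  send(recipient: string, body: string): string {
    return `email to ${recipient}: ${body}`;
  }
}

class SmsStrategy implements MessagingStrategy {
  send(recipient: string, body: string): string {
    return `sms to ${recipient}: ${body}`;
  }
}

// The Context knows every registered strategy and delegates to the selected one.
class MessagingContext {
  private selected: MessagingStrategy;

  constructor(
    private readonly strategies: Record<string, MessagingStrategy>,
    initialKey: string
  ) {
    this.selected = this.lookup(initialKey);
  }

  setStrategy(key: string): void {
    this.selected = this.lookup(key);
  }

  send(recipient: string, body: string): string {
    return this.selected.send(recipient, body);
  }

  // Fails fast on an unknown key instead of delegating to undefined.
  private lookup(key: string): MessagingStrategy {
    const strategy = this.strategies[key];
    if (!strategy) throw new Error(`Unknown strategy: ${key}`);
    return strategy;
  }
}

const messaging = new MessagingContext(
  { email: new EmailStrategy(), sms: new SmsStrategy() },
  "email"
);
console.log(messaging.send("ada", "hi")); // email to ada: hi
messaging.setStrategy("sms");
console.log(messaging.send("ada", "hi")); // sms to ada: hi
```

Callers only ever talk to `MessagingContext`, so adding a third provider means registering one more strategy rather than touching the calling code.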

Dependency injection is a software design pattern in which a class or function receives its dependencies from the outside, for example as constructor parameters, instead of creating them itself. This avoids tightly coupled designs and makes it easy to swap one implementation for another, such as replacing a real service with a test double.
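A small sketch of constructor injection: `ReportService` receives its `Clock` dependency as a constructor parameter instead of creating it, so a test can pass in a fixed clock. `Clock`, `SystemClock`, `FixedClock`, and `ReportService` are hypothetical names used only for illustration.

```typescript
// The dependency is described by an interface, not a concrete class.
interface Clock {
  now(): Date;
}

class SystemClock implements Clock {
  now(): Date {
    return new Date();
  }
}

// A deterministic clock, handy in tests.
class FixedClock implements Clock {
  constructor(private readonly instant: Date) {}

  now(): Date {
    return this.instant;
  }
}

class ReportService {
  // The clock is injected; ReportService never calls `new SystemClock()` itself.
  constructor(private readonly clock: Clock) {}

  header(title: string): string {
    return `${title} (generated ${this.clock.now().toISOString()})`;
  }
}

// Production wiring uses the real clock; tests can inject a fixed one.
const live = new ReportService(new SystemClock());
const testable = new ReportService(new FixedClock(new Date("2024-01-01T00:00:00Z")));
console.log(live.header("Live"));
console.log(testable.header("Daily")); // Daily (generated 2024-01-01T00:00:00.000Z)
```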

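Proper IoC requires dependency resolution: the container has to work out which objects each service needs and create them in the right order. The dependency resolution process models dependencies as a directed graph, with services as nodes and edges pointing to their dependencies. Getting this right matters because a cycle in the graph (A needs B, and B needs A) leaves no valid creation order, so cyclical dependencies must not be introduced inadvertently.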
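To illustrate the idea, here is a small sketch that models each service's dependencies as graph edges and uses a depth-first search to produce a construction order, failing when it finds a cycle. This is the general technique (a topological sort with cycle detection), not how TypeDI is implemented internally, and the service names in the example graph are only illustrative.

```typescript
// Maps each service name to the names of the services it depends on (its outgoing edges).
type DependencyGraph = Record<string, string[]>;

// Returns service names in an order where every dependency appears before its dependents.
// Throws if the graph contains a cycle, since no valid construction order exists then.
function resolveOrder(graph: DependencyGraph): string[] {
  const order: string[] = [];
  const done = new Set<string>(); // fully resolved nodes
  const inProgress = new Set<string>(); // nodes on the current DFS path

  function visit(node: string, path: string[]): void {
    if (done.has(node)) return;
    if (inProgress.has(node)) {
      throw new Error(`Cyclic dependency: ${[...path, node].join(" -> ")}`);
    }
    inProgress.add(node);
    for (const dep of graph[node] ?? []) {
      visit(dep, [...path, node]);
    }
    inProgress.delete(node);
    done.add(node);
    order.push(node); // post-order: dependencies were pushed first
  }

  for (const node of Object.keys(graph)) visit(node, []);
  return order;
}

// Illustrative graph mirroring the services built later in this tutorial.
const services: DependencyGraph = {
  TransactionController: ["TransactionService"],
  TransactionService: ["CacheContext"],
  CacheContext: [],
};
console.log(resolveOrder(services).join(" -> "));
// CacheContext -> TransactionService -> TransactionController
```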

TypeDI handles this resolution process for us and injects dependencies into classes through their constructors. It also lets us define singleton services, which are created once and shared, and transient services, which are created anew each time they are requested; the latter is helpful when building software that follows the request-response model.
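The difference between the two lifetimes is easiest to see in a toy container. The sketch below is a hypothetical, hand-rolled `TinyContainer`, not TypeDI's API: a singleton factory runs once and its instance is cached, while a transient factory runs on every lookup.

```typescript
type Lifetime = "singleton" | "transient";

interface Registration {
  factory: () => unknown;
  lifetime: Lifetime;
  instance?: unknown; // populated lazily for singletons
}

// A deliberately tiny container keyed by string tokens (hypothetical, for illustration only).
class TinyContainer {
  private registrations = new Map<string, Registration>();

  register(token: string, factory: () => unknown, lifetime: Lifetime = "singleton"): void {
    this.registrations.set(token, { factory, lifetime });
  }

  get<T>(token: string): T {
    const reg = this.registrations.get(token);
    if (!reg) throw new Error(`No provider registered for ${token}`);
    if (reg.lifetime === "transient") return reg.factory() as T; // new instance every time
    if (reg.instance === undefined) reg.instance = reg.factory(); // created once, then reused
    return reg.instance as T;
  }
}

let requestCounter = 0;
const container = new TinyContainer();
container.register("config", () => ({ provider: "redis" })); // singleton
container.register("requestId", () => ++requestCounter, "transient"); // transient

console.log(container.get("config") === container.get("config")); // true
console.log(container.get<number>("requestId"), container.get<number>("requestId")); // 1 2
```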

In this article, we’ll create an Express.js-powered Node.js application to store and read transaction records, similar to those within a finance application.

We’ll write and read transaction records from in-memory caches, which keep data in the machine’s RAM and avoid the slower, less predictable I/O involved in reading data from disk.

We’ll support both Redis and Memcached as high-performance caches, using Redis as the default cache provider and letting an environment variable override that choice.

Our application has the following external dependencies:

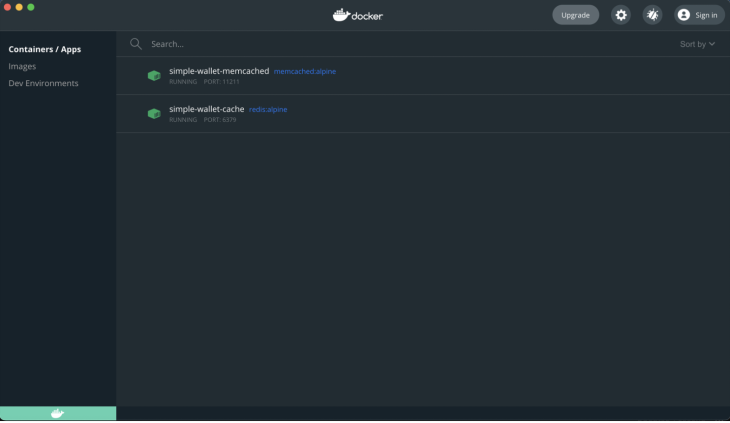

I prefer to run Memcached and Redis as Docker containers, which we’ll set up shortly. First, create the project directory and install the npm dependencies:

```shell
mkdir simple-wallet
cd simple-wallet
npm init -y
npm i typescript concurrently @types/dotenv @types/memcached @types/express @types/node nodemon --save-dev
npm i redis memcached memcache-plus typedi reflect-metadata express body-parser dotenv --save
```

Next, we need to initialize a TypeScript project within our working directory. If you have TypeScript installed globally, run the following:

```shell
tsc --init
```

If you wish to keep TypeScript as a local installation, run the following:

```shell
./node_modules/.bin/tsc --init
```

Then, edit tsconfig.json to enable the TypeScript features TypeDI relies on, namely decorators and emitted decorator metadata:

```json
{
  "compilerOptions": {
    "outDir": "./dist",
    "baseUrl": "./",
    "experimentalDecorators": true,
    "emitDecoratorMetadata": true,
    "skipLibCheck": true,
    "strict": true,
    "esModuleInterop": true,
    "strictPropertyInitialization": true
  }
}
```

Now, we’ll set up the Docker containers for Redis and Memcached. I prefer to use the minimal alpine images below:

```shell
# Create an isolated bridge network and attach both containers to it
docker network create --driver bridge simple-wallet-network
docker pull redis:alpine
docker pull memcached:alpine
docker run --name simple-wallet-redis --network simple-wallet-network -d -p 6379:6379 redis:alpine
docker run --name simple-wallet-memcached --network simple-wallet-network -d -p 11211:11211 memcached:alpine
```

With our Redis and Memcached instances running, connect to them with the commands below:

```shell
npx redis-cli -p 6379
npx memcached-cli localhost:11211
```

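Then, add a declarations.d.ts file to declare types for third-party packages that don’t ship their own, such as memcache-plus (a shorthand ambient declaration like `declare module "memcache-plus";` is enough). Next, add the following variables to a .env file in the project root: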

```
PORT=3050
CACHE_PROVIDER=redis
REDIS_PORT=6379
MEMCACHED_PORT=11211
```

We need to create a config singleton object to export values used in other modules. Create src/config.ts and paste the following code:

```typescript
import dotenv from "dotenv";

dotenv.config();

function getEnvVariable(name: string, fallback: string = ""): string {
  const envVariable = process.env[name];
  const fallbackProvided = fallback.length;

  if (!envVariable && !fallbackProvided) {
    throw new Error(`Environment variable ${name} has not been set.`);
  }

  return envVariable || fallback;
}

const config = {
  server: {
    port: getEnvVariable("PORT"),
  },
  cache: {
    provider: getEnvVariable("CACHE_PROVIDER", "redis"),
  },
  redis: {
    port: getEnvVariable("REDIS_PORT"),
  },
  memcached: {
    port: getEnvVariable("MEMCACHED_PORT"),
  },
};

export default config;
```
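`config.cache.provider` is an arbitrary string, so a typo such as `CACHE_PROVIDER=redsi` would only surface later as an undefined strategy. One option, sketched below as a hypothetical helper (`parseCacheProvider` is not part of the original code), is to narrow the value to the supported providers when the config is built and fail fast otherwise.

```typescript
// The cache providers this tutorial supports.
const CACHE_PROVIDERS = ["redis", "memcached"] as const;
type CacheProviderName = (typeof CACHE_PROVIDERS)[number];

// Narrows a raw environment value to a known provider, defaulting to Redis when unset or empty.
function parseCacheProvider(raw: string | undefined): CacheProviderName {
  const value = (raw?.trim() || "redis").toLowerCase();
  const match = CACHE_PROVIDERS.find((name) => name === value);
  if (!match) {
    throw new Error(
      `Unsupported CACHE_PROVIDER "${raw}". Expected one of: ${CACHE_PROVIDERS.join(", ")}`
    );
  }
  return match;
}

console.log(parseCacheProvider(undefined)); // redis
console.log(parseCacheProvider("Memcached")); // memcached
```

In config.ts, `provider: parseCacheProvider(process.env.CACHE_PROVIDER)` could then stand in for the `getEnvVariable("CACHE_PROVIDER", "redis")` call, and the narrower type documents which keys the strategy map must contain.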

Then, we need to establish persistent TCP connections to our Redis and Memcached services in the application startup and expose these connections to the rest of our application.

We’ll use memcache-plus rather than the callback-based memcached package because memcache-plus offers a promise-based API that works with async/await. Add the following to src/connections.ts:

```typescript
import MemcachePlus from "memcache-plus";
import { createClient } from "redis";
import config from "./config";

// The client is created synchronously so it can be exported below;
// only connect() is asynchronous, so it runs inside an async closure
const redisClient = createClient({
  url: `redis://localhost:${config.redis.port}`,
});

redisClient.on("error", (err) =>
  console.log("🐕🦺[Redis] Redis Client Error: ", err)
);

(async () => {
  await redisClient.connect();
  console.log("🐕🦺[Redis]: Successfully connected to the Redis server");
})();

const createMemcachedClient = (location: string) => {
  const handleNetworkError = function () {
    console.error(
      `🎖️[Memcached]: Unable to connect to server due to network error`
    );
  };

  const memcache = new MemcachePlus({
    hosts: [location],
    onNetError: handleNetworkError,
  });

  // Creating the client does not prove the server is reachable;
  // network failures are reported through onNetError
  console.log(`🎖️[Memcached]: Client created for ${location}`);

  return memcache;
};

export default {
  redis: redisClient,
  memcached: createMemcachedClient(`localhost:${config.memcached.port}`),
};
```
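To see what a promise-based client buys you, here is a sketch of wrapping a callback-style `get` in a Promise so it can be awaited. `legacyGet` is a hypothetical stand-in for a callback API, backed by an in-memory `Map`; it is not the actual API of the memcached package.

```typescript
// Node-style callback: error first, then the result.
type Callback<T> = (err: Error | null, value?: T) => void;

// Hypothetical callback-based client, backed by a Map for the example.
const store = new Map<string, string>([["greeting", "hello"]]);

function legacyGet(key: string, cb: Callback<string | undefined>): void {
  // Defer the callback to mimic an asynchronous network call
  Promise.resolve().then(() => {
    if (key.length === 0) cb(new Error("Key must not be empty"));
    else cb(null, store.get(key));
  });
}

// Wraps the callback API in a Promise so callers can use async/await.
function getAsync(key: string): Promise<string | undefined> {
  return new Promise((resolve, reject) => {
    legacyGet(key, (err, value) => (err ? reject(err) : resolve(value)));
  });
}

async function main(): Promise<void> {
  console.log(await getAsync("greeting")); // hello
  console.log(await getAsync("missing")); // undefined
}

main();
```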

Next, we’ll bootstrap our Express application in src/index.ts. Because TypeDI reads the constructor type metadata that TypeScript emits for decorated classes through the reflect-metadata polyfill, we’ll import reflect-metadata first, before anything else. Add the code below to src/index.ts:

```typescript
import "reflect-metadata";
import express, { Express, Request, Response } from "express";
import config from "./config";
import transactionsRouter from "./modules/transaction/transaction.routes";
import bodyParser from "body-parser";

const app: Express = express();
const { server } = config;

app.use(bodyParser.json());
app.use("/transactions", transactionsRouter);

app.get("/", (_req: Request, res: Response) => {
  res.send("Hello! Welcome to the simple wallet app");
});

app.listen(server.port, () => {
  console.log(`☁️ Server is running at http://localhost:${server.port}`);
});
```

With our bootstrapping done, we need to set up the transactions module. Before doing this, we must build an abstraction for caching to define the caching providers as strategies to switch between.

To build the cache provider, we’ll start with the Context class (CacheContext in our code). You can think of Context as the brain of the cache provider: in the strategy pattern, it knows every available strategy and exposes methods that let consumers switch between them.

We’ll define the Strategy interface and the types used within the cache provider at src/providers/cache/cache.definitions.ts:

```typescript
export interface Strategy {
  // Resolves to null when the key is not in the cache
  get(key: string): Promise<string | null>;
  has(key: string): Promise<boolean>;
  set(key: string, value: string): Promise<void>;
}

export type strategiesList = {
  [key: string]: Strategy;
};
```
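Because CacheContext only depends on the `Strategy` interface, any class with the same three methods can be plugged in. As an example, here is a sketch of a hypothetical `InMemoryStrategy` (not part of the original project) backed by a `Map`, which can be handy in unit tests where running Redis or Memcached is inconvenient. The interface is repeated so the snippet is self-contained, typed here so that `get` resolves to `null` on a cache miss.

```typescript
// Same shape as the tutorial's Strategy interface, with misses returned as null.
interface Strategy {
  get(key: string): Promise<string | null>;
  has(key: string): Promise<boolean>;
  set(key: string, value: string): Promise<void>;
}

// Hypothetical strategy that keeps values in process memory (not shared between processes).
class InMemoryStrategy implements Strategy {
  private readonly store = new Map<string, string>();

  async get(key: string): Promise<string | null> {
    return this.store.get(key) ?? null;
  }

  async has(key: string): Promise<boolean> {
    return this.store.has(key);
  }

  async set(key: string, value: string): Promise<void> {
    this.store.set(key, value);
  }
}

async function demo(): Promise<void> {
  const cache: Strategy = new InMemoryStrategy();
  await cache.set("transactions", "[]");
  console.log(await cache.has("transactions")); // true
  console.log(await cache.get("missing")); // null
}

demo();
```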

Next, we need to define a list of strategies that Context can choose from, and we’ll make concrete implementation classes for the Redis and Memcached strategies.

Create src/providers/cache/cache.context.ts and add the following imports:

```typescript
import { Service } from "typedi";
import connections from "../../connections";
import config from "../../config";
import { Strategy, strategiesList } from "./cache.definitions";
import RedisStrategy from "./strategies/redis.strategy";
import MemcachedStrategy from "./strategies/memcached.strategy";
import { RedisClientType } from "@redis/client";
```

Our strategy class implementations will receive a connection instance to keep our strategies as flexible as possible:

```typescript
const strategies: strategiesList = {
  redis: new RedisStrategy(connections.redis as RedisClientType),
  memcached: new MemcachedStrategy(connections.memcached),
};
```

In CacheContext, inject the default cache provider value from the environment into the constructor, and perform method delegation or proxying.

Although we manually delegate to the selected strategy class, this can also be achieved dynamically by using ES6 proxies. We add the rest of CacheContext within the block below:

```typescript
@Service()
class CacheContext {
  private selectedStrategy: Strategy;
  private strategyKey: keyof strategiesList;

  constructor(strategyKey: keyof strategiesList = config.cache.provider) {
    this.strategyKey = strategyKey;
    this.selectedStrategy = strategies[strategyKey];
  }

  public setSelectedStrategy(strategy: keyof strategiesList) {
    // Keep the key in sync so the log in get() reports the active provider
    this.strategyKey = strategy;
    this.selectedStrategy = strategies[strategy];
  }

  public async has(key: string) {
    const hasKey = await this.selectedStrategy.has(key);
    return hasKey;
  }

  public async get(key: string) {
    console.log(`Request for cache key: ${key} was served by ${this.strategyKey}`);
    const value = await this.selectedStrategy.get(key);
    return value;
  }

  public async set(key: string, value: string) {
    await this.selectedStrategy.set(key, value);
  }
}

export default CacheContext;
```
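As a rough illustration of the ES6 proxy alternative mentioned above, the sketch below forwards every method call on a proxy to whichever strategy is currently selected, so the delegating methods don’t have to be written by hand. `createStrategyProxy`, `StrategyHandle`, and the Map-backed `mapStrategy` helper are hypothetical; the tutorial’s CacheContext delegates manually.

```typescript
interface Strategy {
  get(key: string): Promise<string | null>;
  has(key: string): Promise<boolean>;
  set(key: string, value: string): Promise<void>;
}

// Map-backed strategy so the example runs without Redis or Memcached.
function mapStrategy(): Strategy {
  const store = new Map<string, string>();
  return {
    get: async (key) => store.get(key) ?? null,
    has: async (key) => store.has(key),
    set: async (key, value) => {
      store.set(key, value);
    },
  };
}

// The Strategy methods plus a way to switch the active strategy.
type StrategyHandle = Strategy & { use(key: string): void };

function createStrategyProxy(
  strategies: Record<string, Strategy>,
  initialKey: string
): StrategyHandle {
  const pick = (key: string): Strategy => {
    const strategy = strategies[key];
    if (!strategy) throw new Error(`Unknown strategy: ${key}`);
    return strategy;
  };
  let selected = pick(initialKey);

  // The proxy target only knows how to switch strategies...
  const switcher = {
    use(key: string): void {
      selected = pick(key);
    },
  };

  // ...every other property access is forwarded to the currently selected strategy.
  return new Proxy(switcher, {
    get(target, prop, receiver) {
      if (prop === "use") return Reflect.get(target, prop, receiver);
      const member = Reflect.get(selected, prop);
      return typeof member === "function" ? member.bind(selected) : member;
    },
  }) as StrategyHandle;
}

async function demo(): Promise<void> {
  const cache = createStrategyProxy({ redis: mapStrategy(), memcached: mapStrategy() }, "redis");
  await cache.set("k", "from redis");
  cache.use("memcached");
  console.log(await cache.get("k")); // null (the memcached store is separate)
  cache.use("redis");
  console.log(await cache.get("k")); // from redis
}

demo();
```

The trade-off is that the delegation becomes implicit: readers and type-checking tools see a cast rather than explicit methods, which is one reason the hand-written delegation in CacheContext is a reasonable default.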

Next, we’ll create a minimal implementation for RedisStrategy by adding the code below to src/providers/cache/strategies/redis.strategy.ts:

```typescript
import { RedisClientType } from "@redis/client";
import { Strategy } from "../cache.definitions";

class RedisStrategy implements Strategy {
  private connection: RedisClientType;

  constructor(connection: RedisClientType) {
    this.connection = connection;
  }

  public async get(key: string): Promise<string | null> {
    const result = await this.connection.get(key);
    return result;
  }

  public async has(key: string): Promise<boolean> {
    const result = await this.connection.get(key);
    return result !== undefined && result !== null;
  }

  public async set(key: string, value: string): Promise<void> {
    await this.connection.set(key, value);
  }
}

export default RedisStrategy;
```

Because MemcachedStrategy is similar to RedisStrategy, we’ll skip covering its implementation. You can view the implementation here if you want to review the MemcachedStrategy.
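For readers who want something to compare against, here is a rough sketch of how a Memcached strategy could mirror RedisStrategy. It is written against `MemcacheLikeClient`, a small structural interface that is an assumption standing in for the memcache-plus client (check the memcache-plus documentation for its exact method signatures and miss values), and it is exercised with a hypothetical in-memory `FakeMemcache`.

```typescript
interface Strategy {
  get(key: string): Promise<string | null>;
  has(key: string): Promise<boolean>;
  set(key: string, value: string): Promise<void>;
}

// Assumed minimal client surface; a real memcache-plus instance would be passed in its place.
interface MemcacheLikeClient {
  get(key: string): Promise<string | null | undefined>;
  set(key: string, value: string): Promise<unknown>;
}

class MemcachedStrategy implements Strategy {
  constructor(private readonly connection: MemcacheLikeClient) {}

  async get(key: string): Promise<string | null> {
    const result = await this.connection.get(key);
    return result ?? null; // normalise a miss to null, like RedisStrategy
  }

  async has(key: string): Promise<boolean> {
    return (await this.get(key)) !== null;
  }

  async set(key: string, value: string): Promise<void> {
    await this.connection.set(key, value);
  }
}

// Hypothetical in-memory fake for exercising the strategy without a server.
class FakeMemcache implements MemcacheLikeClient {
  private readonly data = new Map<string, string>();

  async get(key: string): Promise<string | undefined> {
    return this.data.get(key);
  }

  async set(key: string, value: string): Promise<boolean> {
    this.data.set(key, value);
    return true;
  }
}

async function demo(): Promise<void> {
  const strategy = new MemcachedStrategy(new FakeMemcache());
  await strategy.set("transactions", "[]");
  console.log(await strategy.has("transactions")); // true
}

demo();
```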

Next, we need to expose API endpoints to save and retrieve transactions. To do this, we’ll import TransactionController and TypeDI’s Container.

Because we are dynamically injecting TransactionService inside TransactionController, we have to get a TransactionController instance from the TypeDI container.

Create src/modules/transaction/transaction.routes.ts and add the code below:

```typescript
import { Request, Response, NextFunction, Router } from "express";
import { Container } from "typedi";
import TransactionController from "./transaction.controller";

const transactionsRouter = Router();

// Resolve the controller, and transitively its dependencies, from the TypeDI container
const transactionController = Container.get(TransactionController);

transactionsRouter.post("/", (req: Request, res: Response, next: NextFunction) =>
  transactionController.saveTransaction(req, res, next)
);

transactionsRouter.get("/", (req: Request, res: Response, next: NextFunction) =>
  transactionController.getRecentTransactions(req, res, next)
);

export default transactionsRouter;
```

Next, we have to define TransactionController. When we inject TransactionService into its constructor, TypeDI resolves the dependency automatically. To make this work, we must mark the class as a TypeDI service with the Service decorator.

Create src/modules/transaction/transaction.controller.ts and add the code below:

```typescript
import { NextFunction, Request, Response } from "express";
import { Service } from "typedi";
import TransactionService from "./transaction.service";

@Service()
class TransactionController {
  constructor(private readonly transactionService: TransactionService) {}

  async getRecentTransactions(
    _req: Request,
    res: Response,
    next: NextFunction
  ) {
    try {
      const results = await this.transactionService.getTransactions();
      return res
        .status(200)
        .json({ message: `Found ${results.length} transaction(s)`, results });
    } catch (e) {
      next(e);
    }
  }

  async saveTransaction(req: Request, res: Response, next: NextFunction) {
    try {
      const transaction = req.body;
      await this.transactionService.saveTransaction(transaction);
      return res.send("Transaction was saved successfully");
    } catch (e) {
      next(e);
    }
  }
}

export default TransactionController;
```

Finally, we need to create the TransactionService and inject the CacheProvider.

Then, in the saveTransaction method, deserialize any existing transaction records from the cache, update the list of transactions, and serialize the updated list.

Create src/modules/transaction/transaction.service.ts and add the code below:

```typescript
import { Service } from "typedi";
import CacheProvider from "../../providers/cache/cache.context";
import Transaction from "./transaction.model";

@Service()
class TransactionService {
  constructor(private readonly cacheProvider: CacheProvider) {}

  async saveTransaction(transaction: Transaction) {
    const cachedTransactions = await this.cacheProvider.get("transactions");

    // Start from the cached list if there is one, otherwise from an empty list,
    // so that the very first transaction is saved too
    const existingTransactions: Transaction[] = cachedTransactions
      ? JSON.parse(cachedTransactions)
      : [];
    const updatedTransactions = [...existingTransactions, transaction];

    await this.cacheProvider.set(
      "transactions",
      JSON.stringify(updatedTransactions)
    );
  }

  async getTransactions(): Promise<Transaction[]> {
    const cachedTransactions = await this.cacheProvider.get("transactions");
    if (!cachedTransactions) return [];
    return JSON.parse(cachedTransactions);
  }
}

export default TransactionService;
```
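The tutorial doesn’t show transaction.model.ts. Judging from the curl payloads used below, a transaction carries an amount, a recipient, and a sender, so the sketch assumes that shape and exercises the same read-modify-write steps as saveTransaction against a Map-backed stand-in for CacheContext. `Transaction`, `KeyValueCache`, `appendTransaction`, and `createMapCache` are illustrative names, not code from the project.

```typescript
// Assumed shape, inferred from the JSON bodies sent with curl.
interface Transaction {
  amount: number;
  recipient: string;
  sender: string;
}

// Minimal cache surface the service needs; CacheContext exposes compatible get/set methods.
interface KeyValueCache {
  get(key: string): Promise<string | null>;
  set(key: string, value: string): Promise<void>;
}

const TRANSACTIONS_KEY = "transactions";

// Read-modify-write: deserialize the cached list (or start empty), append, then serialize.
async function appendTransaction(
  cache: KeyValueCache,
  transaction: Transaction
): Promise<Transaction[]> {
  const cached = await cache.get(TRANSACTIONS_KEY);
  const existing: Transaction[] = cached ? JSON.parse(cached) : [];
  const updated = [...existing, transaction];
  await cache.set(TRANSACTIONS_KEY, JSON.stringify(updated));
  return updated;
}

// Map-backed stand-in for CacheContext, for running the example without a server.
function createMapCache(): KeyValueCache {
  const store = new Map<string, string>();
  return {
    get: async (key) => store.get(key) ?? null,
    set: async (key, value) => {
      store.set(key, value);
    },
  };
}

async function demo(): Promise<void> {
  const cache = createMapCache();
  await appendTransaction(cache, { amount: 10, recipient: "recipient@example.com", sender: "sender@example.com" });
  const all = await appendTransaction(cache, { amount: 499, recipient: "recipient@example.com", sender: "sender@example.com" });
  console.log(all.length); // 2
}

demo();
```

Note that this read-modify-write sequence is not atomic: if two requests read the list at the same time, one of the appends can be lost. That is acceptable for a demo but worth keeping in mind before reusing the approach under concurrent load.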

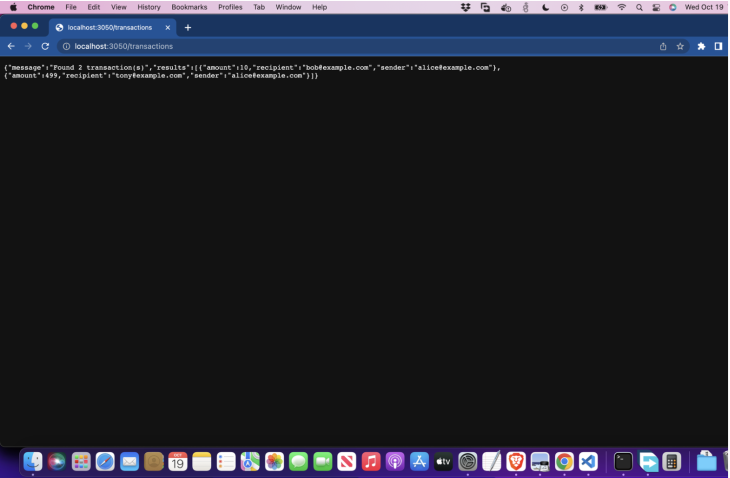

Now, we are ready to test the application manually. Go ahead and fire up the terminal and execute some HTTP requests with curl:

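```shell
curl -X POST -H "Content-Type: application/json" --data '{"amount":10,"recipient":"recipient@example.com","sender":"sender@example.com"}' http://localhost:3050/transactions
```

Then, add a second transaction:

```shell
curl -X POST -H "Content-Type: application/json" --data '{"amount":499,"recipient":"recipient@example.com","sender":"sender@example.com"}' http://localhost:3050/transactions
```

The Content-Type header matters: curl’s `--data` sends `application/x-www-form-urlencoded` by default, and `bodyParser.json()` only parses JSON request bodies.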

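If we visit localhost:3050/transactions in the browser, we should get a JSON response whose message reads "Found 2 transaction(s)" (assuming the cache started empty), followed by a results array containing both transactions.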

Design patterns, like the strategy pattern, are handy tools for solving problems that require multiple implementations. Dependency injection is a valuable technique to help keep modules maintainable over time, and distributed caches with fallbacks help improve performance when building cloud applications.

I highly recommend exploring other structural design patterns like the Proxy and Adapter patterns. Feel free to clone the project from this tutorial and give it a spin.
