GraphQL and caching: two words that don’t go very well together.

The reason is that GraphQL operates via POST by executing all queries against a single endpoint and passing parameters through the body of the request. That single endpoint’s URL will produce different responses, which means it cannot be cached — at least not using the URL as the identifier.

“But wait a second,” you say. “GraphQL surely has caching, right?”

Yes, by doing it in the client through Apollo Client and similar libraries, which cache the returned objects independently of each other, identifying them by their unique global ID.

But this is a hack. This solution exists only because GraphQL cannot handle caching in the server, for which we normally use the URL as the identifier and cache the data for all entities in the response all together.

Caching in the client has a few disadvantages:

So what’s the solution then?

It is, simply put, to use the standards. In this case, the standard is HTTP caching.

“Yeah, but that’s the whole point — we can’t use HTTP caching! Or what are we talking about?”

Right. But knowing that we want to use HTTP caching, we can then approach the problem from a different angle. Instead of asking, “How can we cache GraphQL?” we can ask, “In order to use HTTP caching, how should we use GraphQL?”

In this article, we’ll answer this question.

Using HTTP caching means that we will cache the GraphQL response using the URL as the identifier. This has two implications: we must access GraphQL's single endpoint via GET, and we must pass the query and its variables as URL parameters.

Then, if the single endpoint is /graphql, the GET operation can be executed against URL /graphql?query=...&variables=....

This applies to retrieving data from the server (via the query operation). For mutating data (via the mutation operation), we must still use POST. There is no problem here since mutations are always executed fresh; we can’t cache the results of a mutation, so we wouldn’t use HTTP caching with it anyway.
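
As a rough sketch of this split, the following PHP helper describes the request for each kind of operation: queries go via GET with URL parameters, mutations via POST with a body. The function name `buildGraphQLRequest`, the shape of the returned array, and the JSON encoding of `variables` are assumptions made for illustration; only the `/graphql` endpoint and the `query` and `variables` parameter names come from the text above.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: describes the HTTP request for a GraphQL operation.
 * Queries travel in the URL (cacheable GET); mutations travel in the body (POST).
 */
function buildGraphQLRequest(string $operationType, string $query, array $variables = []): array
{
    if ($operationType === 'mutation') {
        // Mutations are never cached, so they keep using POST
        return [
            'method' => 'POST',
            'url' => '/graphql',
            'body' => json_encode(
                ['query' => $query, 'variables' => (object) $variables],
                JSON_THROW_ON_ERROR
            ),
        ];
    }

    $params = ['query' => $query];
    if ($variables !== []) {
        // Assumption: variables are passed as a JSON-encoded string
        $params['variables'] = json_encode($variables, JSON_THROW_ON_ERROR);
    }

    // PHP_QUERY_RFC3986 encodes spaces as %20 rather than "+"
    $queryString = http_build_query($params, '', '&', PHP_QUERY_RFC3986);

    return [
        'method' => 'GET',
        'url' => '/graphql?' . $queryString,
        'body' => null,
    ];
}

$request = buildGraphQLRequest(
    'query',
    'query ($limit: Int) { posts(limit: $limit) { id title } }',
    ['limit' => 5]
);
echo $request['method'], ' ', $request['url'], PHP_EOL;
```

Because the full query and its variables end up in the URL, two requests with different queries or variables get different cache entries.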

This approach works (and it’s even suggested on the official site), but there are certain considerations we must keep in mind.

A GraphQL query will normally span multiple lines. For instance:

    {
      posts {
        id
        title
      }
    }

However, we can’t input this multi-line string directly in the URL param.

The solution is to encode it. For instance, the GraphiQL client will encode the query above like this:

%7B%0A%20%20posts%20%7B%0A%20%20%20%20id%0A%20%20%20%20title%0A%20%20%7D%0A%7D

Alright, this works. But it doesn’t look very good, right? Who can make sense of that query?

One of the virtues of GraphQL is that its queries are so easy to grasp. With some practice, once we see the query, we understand it immediately. But once it’s been encoded, all that is gone, and only machines can comprehend it; the human is out of the equation.
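
For reference, this encoding is not specific to GraphiQL. A minimal sketch in PHP, assuming the query is indented with two spaces and uses `\n` line endings, reproduces the encoded string with the built-in `rawurlencode` function:

```php
<?php
declare(strict_types=1);

// The multi-line query from above (two-space indentation, "\n" newlines)
$query = "{\n  posts {\n    id\n    title\n  }\n}";

// rawurlencode() percent-encodes per RFC 3986:
// "{" => %7B, newline => %0A, space => %20, "}" => %7D
$encoded = rawurlencode($query);

echo '/graphql?query=' . $encoded, PHP_EOL;

// Decoding restores the original, human-readable query
$decoded = rawurldecode($encoded);
```

The round trip is lossless, so the server sees exactly the query the client wrote; only the URL itself becomes unreadable.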

Another solution could be to replace all the newlines in the query with a space, which works because newlines add no semantic meaning to the query. Then, the query above can be represented as:

?query={ posts { id title } }
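
A minimal sketch of that conversion in PHP follows. The helper name `collapseQuery` is hypothetical, and the approach is deliberately naive: it does not parse the query, so it is only safe when no string argument contains whitespace that must be preserved.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical helper: collapses every run of whitespace into one space.
 * Caveat: whitespace inside string arguments (e.g. value:"a   b")
 * would be collapsed as well, because the query is not parsed.
 */
function collapseQuery(string $query): string
{
    $collapsed = preg_replace('/\s+/', ' ', trim($query));
    if ($collapsed === null) {
        throw new RuntimeException('Could not collapse the query');
    }
    return $collapsed;
}

$multiLineQuery = "{\n  posts {\n    id\n    title\n  }\n}";

echo '?query=' . collapseQuery($multiLineQuery), PHP_EOL;
```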

This works well for simple queries. But if you have a really long query, opening and closing many curly brackets and adding field arguments and directives, then it becomes increasingly difficult to understand.

For instance, this query:

    {
      posts(limit:5) {
        id
        title @titleCase
        excerpt @default(
          value:"No title",
          condition:IS_EMPTY
        )
        author {
          name
        }
        tags {
          id
          name
        }
        comments(
          limit:3,
          order:"date|DESC"
        ) {
          id
          date(format:"d/m/Y")
          author {
            name
          }
          content
        }
      }
    }

Would become this single-line query:

{ posts(limit:5) { id title @titleCase excerpt @default(value:"No title", condition:IS_EMPTY) author { name } tags { id name } comments(limit:3, order:"date|DESC") { id date(format:"d/m/Y") author { name } content } } }

Once again, it works, but we won’t know what it is we are executing. And if the query also contains fragments, then absolutely forget it — there’s no way we can make sense of it.

So, what can we do about it?

First, the good news: stakeholders from the GraphQL community have identified this problem and have begun work on the GraphQL over HTTP specification, which will standardize how everyone (GraphQL servers, clients, libraries, etc.) will communicate their GraphQL queries via URL param.

Second, the not-so-good news: progress on this endeavor seems to be slow, and the specification so far is not comprehensive enough to be usable. Thus, we either wait for an uncertain amount of time, or we look for another solution.

If passing the query in the URL is not satisfactory, what other option do we have? Well, to not pass the query in the URL!

This approach is called a “persisted query.” We store the query in the server and use an identifier (such as a numeric ID or a unique string produced by applying a hashing algorithm with the query as input) to retrieve it. Finally, we pass this identifier as the URL parameter instead of the query.

For instance, the query could be identified with ID 2908 (or a hash such as "50ac3e81"), and then we execute the GET operation against URL /graphql?id=2908. The GraphQL server will then retrieve the query corresponding to this ID, execute it, and return the results.

Using persisted queries, implementing HTTP caching becomes a nonissue.

Problem solved! If you want to use HTTP caching in your GraphQL server, find a GraphQL server that supports persisted queries, either natively or through some library.
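
To make the mechanism concrete, here is a minimal sketch of a persisted query store in PHP. The class `PersistedQueryStore`, its method names, the in-memory array, and the choice of SHA-256 as the hashing algorithm are all assumptions for illustration; a real server would keep the queries in a database or in files and may generate IDs differently.

```php
<?php
declare(strict_types=1);

/**
 * Hypothetical in-memory store for persisted queries.
 */
final class PersistedQueryStore
{
    /** @var array<string, string> Maps query ID => GraphQL query */
    private array $queries = [];

    /** Stores the query and returns its ID (a SHA-256 hash of the query). */
    public function persist(string $query): string
    {
        $id = hash('sha256', $query);
        $this->queries[$id] = $query;
        return $id;
    }

    /** Returns the stored query, or null if the ID is unknown. */
    public function find(string $id): ?string
    {
        return $this->queries[$id] ?? null;
    }
}

$store = new PersistedQueryStore();
$id = $store->persist('{ posts { id title } }');

// The client requests this URL instead of sending the query itself
$url = '/graphql?id=' . $id;

// On the server: look up the query for the requested ID before executing it
$query = $store->find($id);
if ($query === null) {
    // Unknown ID: a server would typically answer with an error here
    echo 'Unknown persisted query', PHP_EOL;
} else {
    echo 'Executing: ', $query, PHP_EOL;
}
```

Since the ID is derived from the query, the same query always maps to the same URL, which is what lets HTTP caching use the URL as the identifier.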

On to the next challenge!

HTTP caching works by sending the Cache-Control header in the response, with a max-age value indicating the number of seconds the response may be cached, or no-store indicating that it must not be cached.

How will the GraphQL server calculate the max-age value for the query, considering that different fields can have different max-age values?

The answer is to get the max-age value for all fields requested in the query and find out which is the lowest one. That will be the response’s max-age.

For instance, let’s say we have an entity of type User. Following the behavior assigned to this entity, we can assign how long the corresponding field should be cached:

🛠 Its ID will never change ⇒ We give field id a max-age of 1 year

🛠 Its URL will be updated very rarely (if ever) ⇒ We give field url a max-age of 1 day

🛠 The person’s name may change every now and then (e.g., to add a status, or to say “Milton (wears a mask)”) ⇒ We give field name a max-age of 1 hour

🛠 The user’s karma on the site can change at all times (e.g., after somebody upvotes their comment) ⇒ We give field karma a max-age of 1 minute

🛠 If querying the data from the logged-in user, then the response can’t be cached at all (independently of whichever field we’re fetching) ⇒ The max-age must be no-store

As a result, the response to the following GraphQL queries will have the following max-age values (for this example, we ignore the max-age for field Root.users, but in practice, it will also be taken into account):

| Query | max-age value |
|---|---|
| `{ users { id } }` | 1 year |
| `{ users { id url } }` | 1 day |
| `{ users { id url name } }` | 1 hour |
| `{ users { id url name karma } }` | 1 minute |
| `{ me { id url name karma } }` | no-store (don’t cache) |
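
The "lowest value wins" rule behind these results can be sketched in a few lines of PHP. The constant `USER_FIELD_MAX_AGES` and the function `responseMaxAge` are hypothetical names, the durations are converted to seconds (taking 1 year as 365.25 days), and the `no-store` case for the logged-in user is left out for brevity.

```php
<?php
declare(strict_types=1);

// max-age per field of type User, in seconds (durations from the list above)
const USER_FIELD_MAX_AGES = [
    'id'    => 31557600, // 1 year (365.25 days)
    'url'   => 86400,    // 1 day
    'name'  => 3600,     // 1 hour
    'karma' => 60,       // 1 minute
];

/**
 * Hypothetical helper: the response's max-age is the lowest max-age
 * among the requested fields. Expects at least one known field name.
 *
 * @param string[] $requestedFields
 */
function responseMaxAge(array $requestedFields): int
{
    $maxAges = array_map(
        fn (string $field): int => USER_FIELD_MAX_AGES[$field],
        $requestedFields
    );
    return min($maxAges);
}

// { users { id url name } } => limited by "name"
echo responseMaxAge(['id', 'url', 'name']), PHP_EOL; // 3600
```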

How can the GraphQL server calculate the response’s max-age value? Because this value will depend on all the fields present in the query, there’s an obvious candidate to do it: directives.

A schema-type directive can be assigned to a field, and we can customize its configuration via directive arguments.

Hence, we can create a directive @cacheControl with argument maxAge of type Int (measuring seconds). Specifying maxAge with value 0 is equivalent to no-store. If not provided (the argument has been defined as non-mandatory), a predefined default max-age is used.

We can now configure our schema to satisfy the max-age defined for all fields earlier on. Using the Schema Definition Language (SDL), it will look like this:

    directive @cacheControl(maxAge: Int) on FIELD_DEFINITION

    type User {
      id: ID @cacheControl(maxAge: 31557600)
      url: URL @cacheControl(maxAge: 86400)
      name: String @cacheControl(maxAge: 3600)
      karma: Int @cacheControl(maxAge: 60)
    }

    type Root {
      me: User @cacheControl(maxAge: 0)
    }

I will demonstrate my implementation of the @cacheControl directive for the GraphQL API for WordPress server, which is coded in PHP. (This server has both native persisted queries and HTTP caching.)

The resolution for the directive is very simple: it just takes the maxAge value from the directive argument and injects it into a service called CacheControlEngine:

    public function resolveDirective(): void
    {
        // maxAge is optional, so fall back to null when it was not provided
        $maxAge = $this->directiveArgsForSchema['maxAge'] ?? null;
        if (!is_null($maxAge)) {
            $this->cacheControlEngine->addMaxAge($maxAge);
        }
    }

Whenever a new max-age value is injected, the CacheControlEngine service compares it with the value it holds and keeps the lower of the two in its state:

    class CacheControlEngine
    {
        protected ?int $minimumMaxAge = null;

        public function addMaxAge(int $maxAge): void
        {
            if (is_null($this->minimumMaxAge) || $maxAge < $this->minimumMaxAge) {
                $this->minimumMaxAge = $maxAge;
            }
        }
    }

The service can then generate the Cache-Control header, with the max-age value for the response:

    class CacheControlEngine
    {
        public function getCacheControlHeader(): ?string
        {
            if (!is_null($this->minimumMaxAge)) {
                // Minimum max-age = 0 => `no-store`
                if ($this->minimumMaxAge === 0) {
                    return 'Cache-Control: no-store';
                }
                return sprintf(
                    'Cache-Control: max-age=%s',
                    $this->minimumMaxAge
                );
            }
            return null;
        }
    }

Finally, the GraphQL server will get the Cache-Control header from the service and add it to the response.
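
The following sketch shows how these pieces could fit together at the end of a request. It repeats a condensed copy of the service so that it runs on its own; the helper `cacheControlHeaderFor` and the way the header is sent are assumptions for illustration, not the server's actual code.

```php
<?php
declare(strict_types=1);

// Condensed copy of the service shown above, so this sketch runs on its own
class CacheControlEngine
{
    protected ?int $minimumMaxAge = null;

    public function addMaxAge(int $maxAge): void
    {
        if ($this->minimumMaxAge === null || $maxAge < $this->minimumMaxAge) {
            $this->minimumMaxAge = $maxAge;
        }
    }

    public function getCacheControlHeader(): ?string
    {
        if ($this->minimumMaxAge === null) {
            return null;
        }
        return $this->minimumMaxAge === 0
            ? 'Cache-Control: no-store'
            : sprintf('Cache-Control: max-age=%d', $this->minimumMaxAge);
    }
}

/**
 * Hypothetical helper: feeds the maxAge of every resolved field into a
 * fresh engine and returns the resulting header (null if no field set one).
 *
 * @param int[] $fieldMaxAges
 */
function cacheControlHeaderFor(array $fieldMaxAges): ?string
{
    $engine = new CacheControlEngine();
    foreach ($fieldMaxAges as $maxAge) {
        $engine->addMaxAge($maxAge);
    }
    return $engine->getCacheControlHeader();
}

// { users { id url name } } => id (1 year), url (1 day), name (1 hour)
$header = cacheControlHeaderFor([31557600, 86400, 3600]);
echo $header, PHP_EOL; // Cache-Control: max-age=3600

// In a web context, the server would add the header to the response
if ($header !== null && PHP_SAPI !== 'cli' && !headers_sent()) {
    header($header);
}
```

Any field with `maxAge: 0`, such as `Root.me` in the schema above, brings the minimum down to 0 and therefore turns the whole response into `no-store`.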

In the never-ending argument of whether GraphQL is better than REST (and vice versa), REST always had an ace up its sleeve: server-side caching.

But we can also have GraphQL support HTTP caching. All it takes is storing the query in the server and then accessing this “persisted query” via GET, providing the ID for the query as a URL parameter. It is a trade-off that is more than justified and more than worth it.

GraphQL and caching: two words that go very well together.

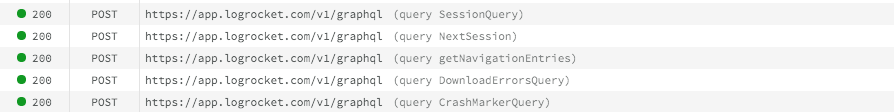

While GraphQL has some features for debugging requests and responses, making sure GraphQL reliably serves resources to your production app is where things get tougher. If you’re interested in ensuring network requests to the backend or third party services are successful, try LogRocket.

LogRocket lets you replay user sessions, eliminating guesswork around why bugs happen by showing exactly what users experienced. It captures console logs, errors, network requests, and pixel-perfect DOM recordings — compatible with all frameworks.

LogRocket's Galileo AI watches sessions for you, instantly aggregating and reporting on problematic GraphQL requests to quickly understand the root cause. In addition, you can track Apollo client state and inspect GraphQL queries' key-value pairs.

React Server Components and the Next.js App Router enable streaming and smaller client bundles, but only when used correctly. This article explores six common mistakes that block streaming, bloat hydration, and create stale UI in production.

Gil Fink (SparXis CEO) joins PodRocket to break down today’s most common web rendering patterns: SSR, CSR, static rednering, and islands/resumability.

@container scroll-state: Replace JS scroll listeners nowCSS @container scroll-state lets you build sticky headers, snapping carousels, and scroll indicators without JavaScript. Here’s how to replace scroll listeners with clean, declarative state queries.

Explore 10 Web APIs that replace common JavaScript libraries and reduce npm dependencies, bundle size, and performance overhead.

Would you be interested in joining LogRocket's developer community?

Join LogRocket’s Content Advisory Board. You’ll help inform the type of content we create and get access to exclusive meetups, social accreditation, and swag.

Sign up now