HttpInterceptor is one of the most powerful features in Angular. You can use it to mock a backend so you can easily test Angular apps without the hassle of setting up a server.

In this HttpInterceptor tutorial, we’ll demonstrate how to use HttpInterceptors to cache HTTP requests.


Caching HTTP requests helps to optimize your application. Imagine fetching a piece of data from the server on every request, even though the data has not changed. This hurts your app’s performance, because the server spends time processing and sending data that is identical to what it sent before.

To remove the time delay in processing the data in the server when it hasn’t changed, we need to check whether the data in the server has changed. If the data has changed, we process new data from the server. If not, we skip the processing in the server and send the previous data. This is called caching.

In HTTP, not all requests are cached. POST, PUT, and DELETE requests are not cached because they change the data in the server.

POST and PUT add or update data on the server, while DELETE removes data from the server. So we need to process them every time they’re requested without caching them.

GET requests can be cached. They just get data from the server without changing it. If no POST, PUT, or DELETE request occurs before the next GET request, the data from the last GET request does not change. We simply return the previous data or response without hitting the server.
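As a rough, framework-free illustration of this rule, the sketch below serves repeated GET requests from memory and discards what it has stored whenever a POST, PUT, or DELETE goes through. The names `MethodAwareCache`, `request`, and `fetchFromServer` are hypothetical and are not part of Angular. The sketch also clears everything on any write, which is cruder than a real cache would be.

```typescript
type Method = "GET" | "POST" | "PUT" | "DELETE";

class MethodAwareCache {
  private store = new Map<string, string>();

  // fetchFromServer stands in for the real network call (an assumption of this sketch).
  request(method: Method, url: string, fetchFromServer: () => string): string {
    if (method !== "GET") {
      // Writes may change server data, so drop everything cached so far.
      this.store.clear();
      return fetchFromServer();
    }
    const cached = this.store.get(url);
    if (cached !== undefined) {
      return cached; // Served from memory, no server round trip.
    }
    const fresh = fetchFromServer();
    this.store.set(url, fresh);
    return fresh;
  }
}

// Usage: count how often the "server" is actually hit.
let serverHits = 0;
const fakeServer = () => `dogs v${++serverHits}`;
const demoCache = new MethodAwareCache();

const first = demoCache.request("GET", "/api/dogs", fakeServer);  // hits the server
const second = demoCache.request("GET", "/api/dogs", fakeServer); // served from the cache
demoCache.request("POST", "/api/dogs", fakeServer);               // clears the cache
const third = demoCache.request("GET", "/api/dogs", fakeServer);  // hits the server again
```

The second GET returns the same value as the first without touching the server, while the GET after the POST has to fetch fresh data.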

Modern browsers have a built-in mechanism for caching HTTP requests. We’ll show you how to implement caching at the application level in Angular.

HttpInterceptors are special services in Angular. HTTP requests are passed through them in the chain before the actual request is made to the server.

Put simply, HttpInterceptors intercept and handle HTTP requests. Typically, an HttpInterceptor transforms the outgoing request and then calls next.handle(transformedReq) to pass it to the next interceptor in the chain. In rare cases, interceptors handle requests themselves instead of delegating to the remainder of the chain.

We’ll create our HttpInterceptor so that whenever we place a GET request, the request will pass through the interceptors in the chain. Our interceptor will check the request to determine whether it has been cached. If yes, it will return the cached response. If not, it will pass the request along to the remainder of the chain to eventually make an actual server request. The interceptor will then watch for the response and cache it when it arrives, so that any later request for the same data will return the cached response.

We’ll also provide a way to reset the cache. This matters because if the data served by the GET API has been changed by a POST, PUT, or DELETE since the last request and we’re still returning the cached data, we’ll be dealing with stale data and our app will display wrong results.

All HttpInterceptors implement the HttpInterceptor interface:

    export interface HttpInterceptor {
      intercept(req: HttpRequest<any>, next: HttpHandler): Observable<HttpEvent<any>>;
    }

The intercept method is called with the request req: HttpRequest<any> and the next HttpHandler.

HttpHandlers are responsible for calling the intercept method in the next HttpInterceptor in the chain and also passing in the next HttpHandler that will call the next interceptor.
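To make the chaining idea concrete, here is a simplified, synchronous model of it in plain TypeScript. It is only a sketch: Angular's real chain works with Observables, and the names `Req`, `Handler`, `Interceptor`, `buildChain`, `addAuthHeader`, and `shortCircuit` are hypothetical stand-ins, not Angular APIs.

```typescript
type Req = { url: string; headers: Record<string, string> };
type Handler = (req: Req) => string;
type Interceptor = (req: Req, next: Handler) => string;

// Fold from right to left so the first interceptor in the array runs first
// and the backend sits at the very end of the chain.
function buildChain(interceptors: Interceptor[], backend: Handler): Handler {
  return interceptors.reduceRight<Handler>(
    (next, interceptor) => (req) => interceptor(req, next),
    backend
  );
}

// Transforms the request, then delegates to the rest of the chain.
const addAuthHeader: Interceptor = (req, next) =>
  next({ ...req, headers: { ...req.headers, Authorization: "token" } });

// Handles one URL itself and never calls next for it.
const shortCircuit: Interceptor = (req, next) =>
  req.url === "/ping" ? "pong (no server call)" : next(req);

const backend: Handler = (req) =>
  `server saw ${req.url} with auth=${req.headers["Authorization"] ?? "none"}`;

const chain = buildChain([addAuthHeader, shortCircuit], backend);
const normalResult = chain({ url: "/api/dogs", headers: {} });
const pingResult = chain({ url: "/ping", headers: {} });
```

Each interceptor receives a `next` handler that wraps everything after it, so it can either pass a (possibly modified) request along or answer on its own. A caching interceptor relies on that second possibility.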

To set up our interceptor, CacheInterceptor:

    import { Injectable } from "@angular/core";
    import { HttpEvent, HttpHandler, HttpInterceptor, HttpRequest, HttpResponse } from "@angular/common/http";
    import { Observable, of } from "rxjs";
    import { share, tap } from "rxjs/operators";

    @Injectable()
    export class CacheInterceptor implements HttpInterceptor {
      // Keyed by URL plus query params: every call creates a new HttpRequest object,
      // so using the request itself as the Map key would never produce a hit.
      private cache = new Map<string, HttpResponse<any>>();

      intercept(req: HttpRequest<any>, next: HttpHandler): Observable<HttpEvent<any>> {
        // Only GET requests are cached; everything else goes straight down the chain.
        if (req.method !== "GET") {
          return next.handle(req);
        }

        const cachedResponse = this.cache.get(req.urlWithParams);
        if (cachedResponse) {
          return of(cachedResponse.clone());
        }

        return next.handle(req).pipe(
          // Store the response once it arrives from the server.
          tap(stateEvent => {
            if (stateEvent instanceof HttpResponse) {
              this.cache.set(req.urlWithParams, stateEvent.clone());
            }
          }),
          share()
        );
      }
    }

If the request method is not GET, we pass it along the chain with no caching.

If it is a GET, we look up the cached response in the cache map using the Map#get method, passing req.urlWithParams as the key. We store the request URL, including its query parameters, as the key and the HttpResponse as the value. The HttpRequest object itself would not work as a key, because every call creates a new HttpRequest instance and a Map compares object keys by identity.

The map will be structured like this:

| Key | Value |
| --- | --- |
| "/api/dogs" | HttpResponse {body: ["alsatians"],…} |
| "/api/dogs/name='bingo'" | HttpResponse {body: [{name:"bingo",…}],…} |
| "/api/cats" | HttpResponse {body: ["serval"],…} |

A key is the URL of a request and its corresponding value is an HttpResponse instance. We use the map’s get and set methods to retrieve and store the responses. So when we call get on cache, we know we’ll get either an HttpResponse or undefined.

The response is stored in cachedResponse. We check whether it is defined (i.e., we got a response). If yes, we clone the response and return it.

If we don’t get a response from the cache, we know the request hasn’t been cached before, so we let it pass and listen for the response. If we see one, we cache it using the Map#set method. The request URL becomes the key and the response becomes the value.
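One detail worth checking when choosing the map key: a Map compares object keys by identity, not by content. Two requests to the same URL are two different objects, so a lookup by request object misses even though the requests look identical. The sketch below illustrates this with a hypothetical `FakeRequest` class standing in for Angular's HttpRequest (which does expose a `urlWithParams` string).

```typescript
// FakeRequest is a stand-in for HttpRequest, used only for this illustration.
class FakeRequest {
  readonly urlWithParams: string;
  constructor(urlWithParams: string) {
    this.urlWithParams = urlWithParams;
  }
}

const byObject = new Map<FakeRequest, string>();
const byUrl = new Map<string, string>();

const firstCall = new FakeRequest("/api/dogs?name=bingo");
byObject.set(firstCall, "cached dogs");
byUrl.set(firstCall.urlWithParams, "cached dogs");

// A later call to the same endpoint produces a brand-new request object.
const secondCall = new FakeRequest("/api/dogs?name=bingo");

const objectLookup = byObject.get(secondCall);         // undefined: different object identity
const urlLookup = byUrl.get(secondCall.urlWithParams); // "cached dogs": equal strings match
```

Keying the cache by a string built from the request, such as the full URL with its parameters, is one simple way to make repeated requests hit the same entry.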

We need to watch for stale data when caching. We need to know when the data has changed and make a server request to update the cache.

We can use different methods to achieve this. We can use the If-Modified-Since header, we can set our own expiry date in an HttpRequest header, or we can set a flag in the header to detect when to make a full server request.
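For comparison, the expiry-based option could look roughly like the sketch below. It is a minimal, framework-free illustration: `ExpiringCache`, `CacheEntry`, `ttlMs`, and the injectable `now` clock are hypothetical names introduced here, and the sketch keeps the expiry time next to the cached value rather than in a request header.

```typescript
interface CacheEntry<T> {
  value: T;
  expiresAt: number; // epoch milliseconds
}

class ExpiringCache<T> {
  private entries = new Map<string, CacheEntry<T>>();
  private ttlMs: number;
  private now: () => number;

  // now is injectable so the behavior can be exercised without waiting.
  constructor(ttlMs: number, now: () => number = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  set(key: string, value: T): void {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  get(key: string): T | undefined {
    const entry = this.entries.get(key);
    if (!entry) {
      return undefined;
    }
    if (this.now() >= entry.expiresAt) {
      // Stale: forget the entry so the caller falls through to the server.
      this.entries.delete(key);
      return undefined;
    }
    return entry.value;
  }
}

// Usage with a fake clock.
let clock = 0;
const ttlCache = new ExpiringCache<string>(1000, () => clock);
ttlCache.set("/api/dogs", "alsatians");
clock = 999;
const beforeExpiry = ttlCache.get("/api/dogs"); // still fresh
clock = 1000;
const afterExpiry = ttlCache.get("/api/dogs");  // expired, so undefined
```

With this approach nobody has to ask for a reset explicitly, at the cost of possibly serving data that is up to one TTL old.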

Let’s go with the third option.

Note: There are many ways to reset the cache; the options listed above are just a few that come to mind.

The trick here is to add a header to the request when the user is making it, so we can test for the header in our CacheInterceptor and know when to pass the request along to the server.

    public fetchDogs(reset: boolean = false) {
      // Header values must be strings, so the boolean is converted before it is sent.
      const headers = new HttpHeaders({ reset: String(reset) });
      return this.httpClient.get("api/dogs", { headers });
    }

The method fetchDogs has a reset boolean param. If the reset param is set to true, the CacheInterceptor has to make a server request. The method sets the reset value in the header before making the request. The header holds the reset value as a string, like this:

| Header | Value |
| --- | --- |
| reset | "true" or "false" |

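The CacheInterceptor has to check for the reset param in the header to determine when to reset the cache. Let’s add it to our CacheInterceptor implementation:

    import { Injectable } from "@angular/core";
    import { HttpEvent, HttpHandler, HttpInterceptor, HttpRequest, HttpResponse } from "@angular/common/http";
    import { Observable, of } from "rxjs";
    import { share, tap } from "rxjs/operators";

    @Injectable()
    export class CacheInterceptor implements HttpInterceptor {
      // Keyed by URL plus query params: every call creates a new HttpRequest object,
      // so using the request itself as the Map key would never produce a hit.
      private cache = new Map<string, HttpResponse<any>>();

      intercept(req: HttpRequest<any>, next: HttpHandler): Observable<HttpEvent<any>> {
        if (req.method !== "GET") {
          return next.handle(req);
        }

        // Header values are strings, so compare against "true" explicitly.
        if (req.headers.get("reset") === "true") {
          this.cache.delete(req.urlWithParams);
        }

        const cachedResponse = this.cache.get(req.urlWithParams);
        if (cachedResponse) {
          return of(cachedResponse.clone());
        }

        return next.handle(req).pipe(
          tap(stateEvent => {
            if (stateEvent instanceof HttpResponse) {
              this.cache.set(req.urlWithParams, stateEvent.clone());
            }
          }),
          share()
        );
      }
    }

We read the reset value from the req headers. If it is "true", we delete the cached entry for this request using the Map#delete method. With the entry gone, the lookup misses, a server request is made, and the fresh response is cached again.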
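One thing to keep in mind with a header-based flag is that header values arrive as strings (or null when the header is absent), never as booleans. The framework-free sketch below shows why an explicit comparison is safer than a truthiness check. The helper names `wantsReset` and `lookup` are hypothetical and exist only for this illustration.

```typescript
// Header values arrive as strings, or null when the header is absent.
function wantsReset(headerValue: string | null): boolean {
  return headerValue === "true";
}

function lookup(
  cache: Map<string, string>,
  url: string,
  resetHeader: string | null
): string | undefined {
  if (wantsReset(resetHeader)) {
    cache.delete(url); // Forget the stale entry so the caller goes to the server.
  }
  return cache.get(url);
}

const resetDemo = new Map<string, string>([["/api/dogs", "old dogs"]]);
const withoutFlag = lookup(resetDemo, "/api/dogs", null);  // "old dogs"
const withFalse = lookup(resetDemo, "/api/dogs", "false"); // "old dogs"
const withTrue = lookup(resetDemo, "/api/dogs", "true");   // undefined: entry was deleted
```

A plain truthiness check would treat the non-empty string "false" as a request to reset, which is why the sketch compares against "true".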

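Lastly, we need to register our CacheInterceptor under the HTTP_INTERCEPTORS multi-provider token. Without it, our interceptor won’t be in the interceptors chain and we can’t cache requests.

    import { HTTP_INTERCEPTORS } from "@angular/common/http";

    @NgModule({
      ...
      // providers is an array; multi: true adds this interceptor to the existing chain.
      providers: [
        {
          provide: HTTP_INTERCEPTORS,
          useClass: CacheInterceptor,
          multi: true
        }
      ]
    })
    ...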

With this, our CacheInterceptor will pick up all HTTP requests made through HttpClient in our Angular app.

Interceptors are very helpful, mainly because they drastically reduce the huge amount of code required to implement HTTP caching.

For this reason, I urge you to adopt Angular. React, Vue.js, and Svelte do not ship a built-in HTTP client with an interceptor chain, so achieving HTTP caching and intercepting in those frameworks typically means reaching for a third-party library or writing your own wrapper.

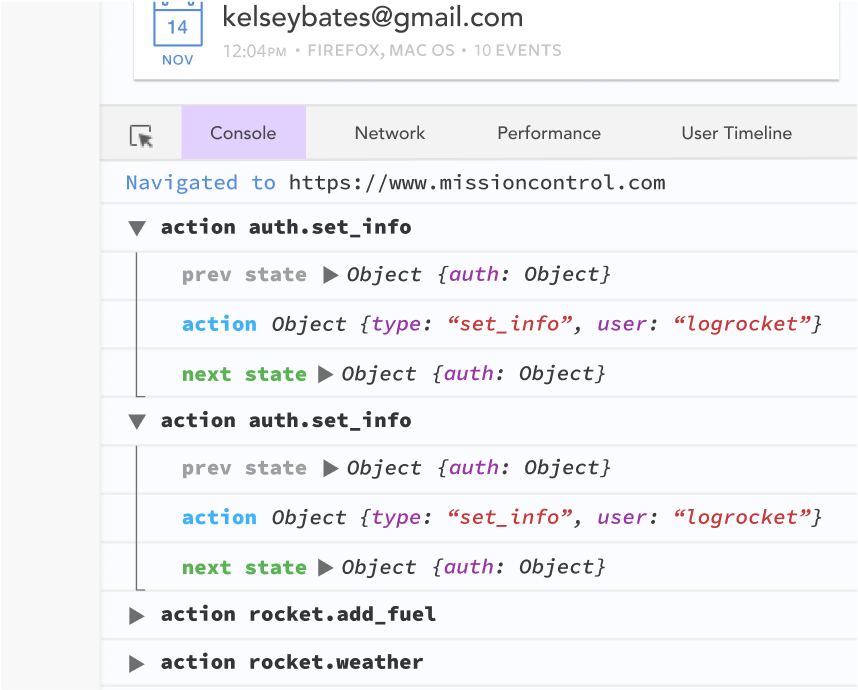

Debugging Angular applications can be difficult, especially when users experience issues that are difficult to reproduce. If you’re interested in monitoring and tracking Angular state and actions for all of your users in production, try LogRocket.

LogRocket lets you replay user sessions, eliminating guesswork by showing exactly what users experienced. It captures console logs, errors, network requests, and pixel-perfect DOM recordings—compatible with all frameworks.

With Galileo AI, you can instantly identify and explain user struggles with automated monitoring of your entire product experience.

The LogRocket NgRx plugin logs Angular state and actions to the LogRocket console, giving you context around what led to an error, and what state the application was in when an issue occurred.

Modernize how you debug your Angular apps — start monitoring for free.

Would you be interested in joining LogRocket's developer community?

Join LogRocket’s Content Advisory Board. You’ll help inform the type of content we create and get access to exclusive meetups, social accreditation, and swag.

Sign up now

Learn how OpenAPI can automate API client generation to save time, reduce bugs, and streamline how your frontend app talks to backend APIs.

Discover how the Interface Segregation Principle (ISP) keeps your code lean, modular, and maintainable using real-world analogies and practical examples.

<selectedcontent> element improves dropdowns

Learn how to implement an advanced caching layer in a Node.js app using Valkey, a high-performance, Redis-compatible in-memory datastore.
