Progressive Web Apps (PWAs) have recently gained a lot more attention. One reason is the set of APIs now available in browsers (especially on mobile). Another is the persistent desire to scale a web app into a mobile app with all of the benefits and none of the drawbacks. One of the drawbacks of building a mobile app is platform lock-in, i.e., the requirement to be listed in the platform vendor’s store.

In this article we will explore what it takes to build a PWA these days and what disadvantages or blockers we face on the way. We will explore the most useful APIs and take a minimal example from zero to PWA.

Let’s start with a little bit of historical background. The main idea of a PWA is to use as many of the native capabilities as possible. That includes the various sensors such as GPS, accelerometer, gyroscope, etc. Most importantly, however, a PWA should also work offline, i.e., no more “page not found” or similar errors when offline.

The ability to go offline was already included in browsers years ago: it was the app cache or app manifest. This specification requires a special file (usually called something like app.cache or app.manifest) that describes which resources should be available for offline use, which only for online use, and which should be replaced when going from one mode to the other. The approach, however, faced many issues (especially in updating the manifest itself) and was not adopted well.

While the manifest approach was failing, Mozilla came up with a lot of new APIs, mostly for its Firefox OS mobile system. In this endeavor Mozilla explored the possibility of using web apps like mobile apps, including a web store that could theoretically also be adopted by other platforms such as Android or iOS. Unfortunately, Firefox OS never gained traction and most of these standards never reached critical mass. As a result the dream of “an open store” or “a store specification” failed. Nevertheless, some of the APIs continued to be specified and were adopted well.

One of the APIs that gained a lot from the Firefox OS experiment was the service worker. Like the web worker it represents an isolated JS execution environment that is started and stopped by the underlying platform. The only way to communicate with it is via message passing. The service worker provided a vast set of possibilities that were partially missing from web apps (as compared to mobile apps) and allowed more flexibility for handling caching in offline scenarios.

With all the APIs in place, a couple of influential people coined a new term, “Progressive Web App”, for web apps that can be used like native apps but are actually delivered like any other web app: no separate bundling, distribution, or anything else needed.

According to Wikipedia[^1] the following characteristics exist:

In the following we will go over each characteristic to see what an implementation would look like. To freshen things up a bit we will explain every part in the context of an example app.

The order of the previous bullet points has been adjusted to follow a more natural implementation flow.

As we come from a web background we assume that all these points are implicitly handled. Hence we will leave out the obvious points, e.g., the progressive part, the safe part, and the linkable part. The safe part indicates only that our web app originates from a secure origin. It is served over TLS and displays a green padlock (no active mixed content).

Our example starts out with three files in a simple rudimentary state:

The current logic of our sample application looks as follows:

(function () {
  const app = document.querySelector('#app');
  const container = app.querySelector('.entry-container');
  const loadMore = app.querySelector('.load-more');

  // fetch one page of posts from the sample API
  async function getPosts(page = 1) {
    const result = await fetch('https://jsonplaceholder.typicode.com/posts?_page=' + page);
    return await result.json();
  }

  // fetch all users (needed to resolve the author of a post)
  async function getUsers() {
    const result = await fetch('https://jsonplaceholder.typicode.com/users');
    return await result.json();
  }

  // load users and posts in parallel and render the posts as HTML
  async function loadEntries(page = 1) {
    const [users, posts] = await Promise.all([getUsers(), getPosts(page)]);
    return posts.map(post => {
      const user = users.find(u => u.id === post.userId);
      return `<section class="entry"><h2 class="entry-title">${post.title}</h2><article class="entry-body">${post.body}</article><div class="entry-author"><a href="mailto:${user.email}">${user.name}</a></div></section>`;
    }).join('');
  }

  // replace the trailing <output> placeholder with the new entries and a fresh placeholder
  function appendEntries(entries) {
    const output = container.querySelector('output') || container.appendChild(document.createElement('output'));
    output.outerHTML = entries + '<output></output>';
  }

  (async function () {
    let page = 1;

    async function loadMoreEntries() {
      loadMore.disabled = true;
      try {
        const entries = await loadEntries(page++);
        appendEntries(entries);
      } finally {
        // re-enable the button even if loading failed
        loadMore.disabled = false;
      }
    }

    loadMore.addEventListener('click', loadMoreEntries, false);
    loadMoreEntries();
  })();
})();

No React, no Angular, no Vue. Just direct DOM manipulation with some more recent APIs (e.g., fetch) and JS specs (e.g., using async / await). For the sake of simplicity we will not bundle (e.g., optimize, polyfill, and minify) this application.

The logic simply loads some initial entries and offers “load more” functionality via a button. For the example we use the jsonplaceholder service, which gives us some sample data.

Without further ado, let’s go right into details.

Our web app can be identified as an “application” thanks to the W3C manifest and service worker registration scope. As a consequence, search engines can easily find (read “discover”) it.

Reference a web app manifest with at least the four key properties: name, short_name, start_url, and display (value being either “standalone” or “fullscreen”).

To reference a web app manifest we only need two things: a valid web app manifest (e.g., a file called manifest.json in the root folder of our web app) and a link in our HTML page, e.g.,

<link href="manifest.json" rel="manifest">

The content may be as simple as

{
  "name": "Example App",
  "short_name": "ExApp",
  "theme_color": "#2196f3",
  "background_color": "#2196f3",
  "display": "standalone",
  "scope": "/",
  "start_url": "/"
}

A couple of nice manifest generators exist; either in form of a dedicated web app or as part of our build pipeline. The latter is quite convenient, e.g., when using a Webpack build to auto-generate the manifest with consistent content.

One example for a nice web app to generate a valid manifest is the Web App Manifest Generator.
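The four key properties can also be checked programmatically, for instance as a small step in a build script. The following is only a sketch under that assumption; the function name checkManifest and the wording of the messages are made up for illustration and are not part of any tool mentioned here.

```javascript
// Properties listed above as the minimum for a usable manifest.
const REQUIRED_KEYS = ['name', 'short_name', 'start_url', 'display'];
// Display modes that launch the app without the usual browser UI.
const APP_LIKE_DISPLAY = ['standalone', 'fullscreen'];

// Returns a list of human-readable problems; an empty list means "looks fine".
function checkManifest(manifest) {
  const problems = REQUIRED_KEYS
    .filter(key => typeof manifest[key] !== 'string' || manifest[key] === '')
    .map(key => `missing property "${key}"`);

  if (typeof manifest.display === 'string' && !APP_LIKE_DISPLAY.includes(manifest.display)) {
    problems.push(`display is "${manifest.display}", expected one of: ${APP_LIKE_DISPLAY.join(', ')}`);
  }

  return problems;
}
```

In the browser the same check could be run against the deployed file, e.g., via fetch('manifest.json').then(res => res.json()).then(checkManifest).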

To detect that our manifest was valid and picked up correctly we can use the debugging tools of our browser of choice. In Chrome this currently looks as follows:

Allow users to “keep” apps they find most useful on their home screen without the hassle of an app store.

Include at least one 144×144 icon in PNG format in the manifest, e.g., by writing:

"icons": [

{

"src": "/images/icon-144.png",

"sizes": "144x144",

"type": "image/png"

}

]

The great thing about the previously mentioned generators is that most of them will already create the icon array for us. Even better, we only need to supply one (larger) base image from which all other icons are derived.

Mostly, installable refers to installing a service worker (more on that later) and being launchable from the homescreen, which makes the application also app-like.

Feel like an app to the user with app-style interactions and navigation. While we will never be a true native app we should embrace touch gestures and mobile-friendly usage patterns.

Most importantly, as already discussed, we want to be launchable from the homescreen. Some browsers allow us to show an “add to home screen” prompt. This only requires listening to the beforeinstallprompt event. In addition, the already mentioned manifest needs to include some specific icons (e.g., a 192×192 icon).

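In a nutshell, we listen for the event and keep a reference to its args. Calling prompt() on the stored event later shows the browser’s install dialog. Chrome only accepts this call in response to a user gesture, such as a click in a custom UI, so calling it directly inside the event handler is not reliable. How the custom UI looks is all up to us. A simple implementation may therefore look like:

let deferredPrompt;

window.addEventListener('beforeinstallprompt', e => {
  // do not show the browser's own prompt right away
  e.preventDefault();
  // keep the event; call deferredPrompt.prompt() later from a click handler
  deferredPrompt = e;
});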

More information on this feature can be found in Google’s documentation.
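To use the captured event args in a custom UI, one option is to reveal an install button once the event has fired and to trigger the prompt from its click handler. The sketch below assumes such a button exists; the function name setupInstallButton and the element id in the usage comment are hypothetical.

```javascript
// Wires a deferred install prompt to a custom button.
// `target` is usually `window`; `button` is a (hypothetical) "Install" button.
function setupInstallButton(target, button) {
  let deferredPrompt = null;

  target.addEventListener('beforeinstallprompt', e => {
    e.preventDefault();     // suppress the browser's own prompt for now
    deferredPrompt = e;     // keep the event for later
    button.hidden = false;  // reveal our custom install UI
  });

  button.addEventListener('click', async () => {
    if (!deferredPrompt) {
      return;
    }

    const e = deferredPrompt;
    deferredPrompt = null;  // a stored event is only good for one prompt
    button.hidden = true;
    e.prompt();             // called inside a click handler, i.e., with a user gesture

    // userChoice resolves once the user has accepted or dismissed the dialog
    const choice = await e.userChoice;
    console.log('Install prompt outcome:', choice.outcome);
  });
}

// In the page, assuming a button with the id "install":
// setupInstallButton(window, document.querySelector('#install'));
```

Passing in the event target and the button (instead of reaching for globals) keeps the wiring easy to try out with simple stand-in objects.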

Fit any form factor: desktop, mobile, tablet, or forms yet to emerge. Keep in mind that responsive design is not constrained to reacting to different screen sizes; it also implies reacting to different forms of input and / or output.

Of course, targeting everything that is out there (smart speakers, smart watches, phones, tablets, …) may not be possible or even wanted. Hence it makes sense to first look at the desired target platforms before making any kind of responsive design efforts.

Creating a responsive design can be achieved in many different ways:

There are pros and cons for every point, but it mostly depends on our problem description (e.g., what the desired design looks like and whether we need to support legacy browsers).

Our example already follows a fluid design. We could still make some improvements in this space, but as the focus in this article is more on the connectivity features we’ll skip explicit steps in this area.

Service workers allow the app to work offline or on low-quality networks. Introducing a service worker is quite simple; maintaining and using it correctly is usually much harder.

Load while offline (even if only a custom offline page). By implication, this means that progressive web apps require service workers.

A service worker requires two things:

The latter should look similar to the following:

// check for support

if ('serviceWorker' in navigator) {

try {

// calls navigator.serviceWorker.register('sw.js');

registerServiceWorker();

} catch (e) {

console.error(e);

}

}

where sw.js refers to the service worker. Ideally, sw.js is placed in the root of our application; otherwise it cannot handle all content, because a service worker by default only controls URLs at or below its own location.
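Why the location of sw.js matters can be illustrated with two small helpers. They are not browser APIs, just a sketch of the default rule (the scope is the directory containing the script, and a page is handled if its URL starts with that scope); the names defaultScope and isInScope are made up for this example.

```javascript
// Default scope of a service worker: the directory that contains the script.
function defaultScope(scriptUrl) {
  return new URL('./', scriptUrl).href;
}

// A page is handled by the service worker only if its URL starts with the scope.
function isInScope(scope, pageUrl) {
  return new URL(pageUrl).href.startsWith(scope);
}

// sw.js in the root covers the whole origin ...
const rootScope = defaultScope('https://example.com/sw.js');
// ... while a script in a sub folder only covers that folder.
const nestedScope = defaultScope('https://example.com/js/sw.js');

console.log(rootScope, isInScope(rootScope, 'https://example.com/index.html'));
console.log(nestedScope, isInScope(nestedScope, 'https://example.com/index.html'));
```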

Service workers can only react to events and are unable to access the DOM. The main events we care about are

- install to find out if a service worker was registered
- fetch to detect / react properly to network requests

The following diagram illustrates the role of these two events in our web app.

Also, we may want to follow up on a successful service worker installation.

async function registerServiceWorker() {

try {

const registration = await navigator.serviceWorker.register('sw.js');

// do something with registration, e.g., registration.scope

} catch (e) {

console.error('ServiceWorker failed', e);

}

}

With respect to the content of the service worker – it can be as simple as listening for an installation and fetch event.

self.addEventListener('install', e => {

// Perform install steps

});

self.addEventListener('fetch', e => {

// Empty for now

});

At this stage our application can already run standalone and be added to the homescreen (e.g., the desktop on Windows) like an app.

Now it’s time to make the application a little bit more interesting.

Make re-engagement easy through features like push notifications. Push notifications are similar to their native counterparts. They may arrive when the user is not using our web app, and they require explicit permission. They are also limited in size (max. 4 kB) and must be encrypted.

While the permission request is shown automatically once needed, we can also trigger the request manually (recommended):

Notification.requestPermission(result => {

if (result !== 'granted') {

//handle permissions deny

}

});

From our page we can subscribe to new push notifications. For this we use the ability to follow up on a successful service worker registration:

async function subscribeToPushNotifications(registration) {

const options = {

userVisibleOnly: true,

applicationServerKey: btoa('...'),

};

const subscription = await registration.pushManager.subscribe(options);

//Received subscription

}

The application server key is the public key that the push service uses to verify that messages really come from us. An implementation to enable web push notifications for Node.js is, e.g., Web Push.
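Once the server sends a message, the service worker receives a push event and can turn it into a visible notification. The following sketch for sw.js assumes that the server sends a JSON payload with title and body fields; that payload shape, the default title, and the helper name toNotification are assumptions for illustration only.

```javascript
// Turns the payload text into the arguments for showNotification().
// Assumed payload shape: { "title": "...", "body": "..." }
function toNotification(payloadText) {
  let data;

  try {
    data = JSON.parse(payloadText);
  } catch (e) {
    data = null;
  }

  // not JSON (or not an object): show the raw text as the body
  if (data === null || typeof data !== 'object') {
    data = { body: payloadText };
  }

  return {
    title: String(data.title || 'Example App'),
    options: { body: String(data.body || '') },
  };
}

// Only register the handler when running inside a service worker.
if (typeof self !== 'undefined' && self.registration) {
  self.addEventListener('push', e => {
    const { title, options } = toNotification(e.data ? e.data.text() : '');
    // keep the worker alive until the notification is shown
    e.waitUntil(self.registration.showNotification(title, options));
  });
}
```

Since the subscription uses userVisibleOnly: true, every push message is expected to result in a notification, which is why the handler always calls showNotification.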

Regarding the right value for the applicationServerKey we find the following information in the specification:

A Base64-encoded DOMString or ArrayBuffer containing an ECDSA P-256 public key that the push server will use to authenticate your application server. If specified, all messages from your application server must use the VAPID authentication scheme, and include a JWT signed with the corresponding private key.

Hence in order for this to work we need to supply a base64 value matching the public key of our push server. In reality, however, some older browser implementations require an ArrayBuffer. Therefore, the only safe choice is to do the conversion from base64 strings ourselves (the gist to look for is called urlBase64ToUint8Array).
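A commonly circulated version of this helper looks roughly like the sketch below. It restores the padding, maps the URL-safe alphabet back to regular base64, and copies the decoded bytes into a Uint8Array. The variable name publicKey in the usage note is a placeholder for our own key.

```javascript
// Converts a URL-safe base64 string (as used for VAPID keys) to a Uint8Array.
function urlBase64ToUint8Array(base64String) {
  // restore the padding that URL-safe base64 usually omits
  const padding = '='.repeat((4 - (base64String.length % 4)) % 4);
  // map the URL-safe alphabet back to the regular base64 alphabet
  const base64 = (base64String + padding).replace(/-/g, '+').replace(/_/g, '/');
  // atob yields a "binary string" with one character per byte
  const rawData = atob(base64);
  const outputArray = new Uint8Array(rawData.length);

  for (let i = 0; i < rawData.length; ++i) {
    outputArray[i] = rawData.charCodeAt(i);
  }

  return outputArray;
}
```

In the subscription options we would then pass applicationServerKey: urlBase64ToUint8Array(publicKey).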

Always up-to-date thanks to the service worker update process. To see the state of a service worker we should use the debugging tools of the browser (e.g., in Chrome via the Application tab). Make sure to check “Update on reload” in development mode; otherwise we need to manually update the registered service worker (avoid “skip waiting” confirmation messages). The underlying reason is that browsers only allow a single active service worker per scope, so a new version has to wait until the old one is no longer in use.

Alright, without further ado let’s step right into what’s needed to make this happen. First we need to provide some implementation for the install and fetch events. In the simplest form we only add the static files to a cache within the install event.

const files = [

'./',

'./app.js',

'./style.css',

];

self.addEventListener('install', e => {
  // keep the worker in the installing phase until all files are cached
  e.waitUntil(
    caches.open('files').then(cache => cache.addAll(files))
  );
});

The caches object gives us an API to create named caches (very useful for debugging and eviction strategies), resolve requests, and explicitly cache files. In this implementation we essentially tell the browser to fetch the URLs from the array and put them into the cache. The service worker itself is implicitly cached.
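Named caches also make a simple eviction strategy possible: change the cache name whenever the static files change and delete every cache that is no longer in use once the new service worker activates. The sketch below assumes such a naming scheme; the name files-v2 and the helper staleCaches are hypothetical.

```javascript
// Hypothetical naming scheme: bump the suffix whenever the static files change.
const CURRENT_CACHES = ['files-v2', 'data'];

// Pure helper: which of the existing caches are no longer needed?
function staleCaches(existingNames, currentNames = CURRENT_CACHES) {
  return existingNames.filter(name => !currentNames.includes(name));
}

// Only register the handler when running inside a service worker.
if (typeof self !== 'undefined' && typeof caches !== 'undefined' && self.registration) {
  self.addEventListener('activate', e => {
    // delay activation until all outdated caches are removed
    e.waitUntil(
      caches.keys().then(names =>
        Promise.all(staleCaches(names).map(name => caches.delete(name)))
      )
    );
  });
}
```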

The fetch event may become really sophisticated. A quite simple implementation for our example project may look as follows:

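self.addEventListener('fetch', e => {
  const req = e.request;
  // network first for API calls, cache first for (static) files
  const res = isApiCall(req) ? getFromNetwork(req) : getFromCache(req);
  // respondWith must be called synchronously and accepts a promise
  e.respondWith(res);
});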

Nice, so essentially we just determine if we currently fetch a (static) file or make an API call. In the former case we go directly to the cache, in the latter case we try the network first. Finally, we respond with the given response (which either comes from the cache or the network). The only difference lies in the caching strategy.
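The two helpers isApiCall and getFromCache are not shown in the listing. One possible way to write them is sketched below, assuming that every request to the jsonplaceholder host counts as an API call and that a cache miss for a static file should fall back to the network. The optional cacheStorage parameter is an addition for this example that makes it possible to pass in a stand-in for the global caches object.

```javascript
// Assumption: everything served from the sample data host is an API call.
const API_HOST = 'jsonplaceholder.typicode.com';

function isApiCall(req) {
  return new URL(req.url).hostname === API_HOST;
}

// Cache first: answer from the cache, fall back to the network on a miss.
// `cacheStorage` defaults to the global `caches` object of the service worker.
async function getFromCache(req, cacheStorage = caches) {
  const cached = await cacheStorage.match(req);
  return cached || fetch(req);
}
```

Checking the hostname (instead of, e.g., the file extension) keeps the decision independent of how the individual API endpoints are named.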

The network-first strategy uses another named cache (“data”). The solution is pretty straightforward. The only important points are that fetch rejects when offline (which surfaces as an exception when awaited) and that responses need to be cloned before they can be put into the cache. The reason is that the body of a response can only be read once.

async function getFromNetwork(req) {

const cache = await caches.open('data');

try {

const res = await fetch(req);

cache.put(req, res.clone());

return res;

} catch (e) {

const res = await cache.match(req);

return res || getFallback(req);

}

}

The getFallback function uses a cache-only strategy, where fallback data that has initially been added to the static files cache is used.
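The getFallback function itself is not shown. A minimal sketch could look as follows, assuming one fallback file per API resource. The file names below are hypothetical and would have to be listed in the files array so that the install step caches them. The synthetic 503 response for a missing fallback is likewise just one possible choice.

```javascript
// Hypothetical fallback files, one per API resource.
const FALLBACKS = {
  '/posts': './fallback/posts.json',
  '/users': './fallback/users.json',
};

// Maps an API request to the fallback file for its resource (or undefined).
function fallbackUrlFor(req) {
  return FALLBACKS[new URL(req.url).pathname];
}

// Cache only: never touches the network.
async function getFallback(req, cacheStorage = caches) {
  const url = fallbackUrlFor(req);
  const cache = await cacheStorage.open('files');
  const res = url ? await cache.match(url) : undefined;

  // respondWith() needs a Response, so answer with an empty list
  // if no fallback data is available
  return res || new Response('[]', {
    status: 503,
    headers: { 'Content-Type': 'application/json' },
  });
}
```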

The given solution is not free of problems. If the problem domain is very simple it may work. However, if we have, e.g., a growing set of files to handle, we need a good cache expiration strategy. Multiple API requests and external static files entering our problem domain may also bring new challenges.

A nice solution to these potential challenges is workbox-sw from Google[^2]. It takes care of the whole updating process and provides a nice abstraction layer over the standard fetch event.

PWAs are nothing more than our standard web apps enhanced by using some of the recently introduced APIs to improve general UX. The name progressive indicates that the technology does not require a hard cut. Actually, we can decide what makes sense and should be included.

If you want to follow the full example with steps feel free to clone and play around with the repository available at GitHub – PWA Example. The README will guide you through the branches.

Have you already enhanced your web apps? Which parts did you like and what is currently missing? What are your favorite libraries when dealing with PWAs? Let us know in the comments.

[^1]: Wikipedia
[^2]: Workbox Documentation

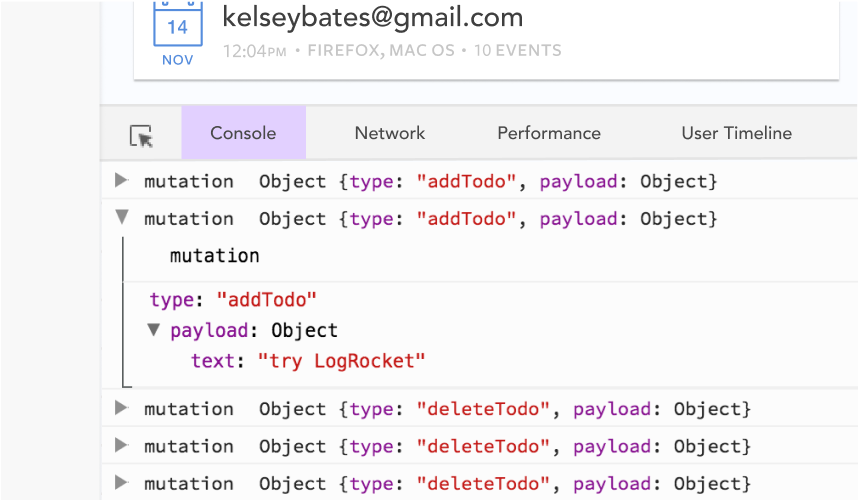

Debugging Vue.js applications can be difficult, especially when users experience issues that are difficult to reproduce. If you’re interested in monitoring and tracking Vue mutations and actions for all of your users in production, try LogRocket.

LogRocket lets you replay user sessions, eliminating guesswork by showing exactly what users experienced. It captures console logs, errors, network requests, and pixel-perfect DOM recordings — compatible with all frameworks.

With Galileo AI, you can instantly identify and explain user struggles with automated monitoring of your entire product experience.

Modernize how you debug your Vue apps — start monitoring for free.

Install LogRocket via npm or script tag. LogRocket.init() must be called client-side, not

server-side

$ npm i --save logrocket

// Code:

import LogRocket from 'logrocket';

LogRocket.init('app/id');

// Add to your HTML:

<script src="https://cdn.lr-ingest.com/LogRocket.min.js"></script>

<script>window.LogRocket && window.LogRocket.init('app/id');</script>

Solve coordination problems in Islands architecture using event-driven patterns instead of localStorage polling.

Signal Forms in Angular 21 replace FormGroup pain and ControlValueAccessor complexity with a cleaner, reactive model built on signals.

Discover what’s new in The Replay, LogRocket’s newsletter for dev and engineering leaders, in the February 25th issue.

Explore how the Universal Commerce Protocol (UCP) allows AI agents to connect with merchants, handle checkout sessions, and securely process payments in real-world e-commerce flows.

Hey there, want to help make our blog better?

Join LogRocket’s Content Advisory Board. You’ll help inform the type of content we create and get access to exclusive meetups, social accreditation, and swag.

Sign up now