In the near future, AI agents will handle most computer-based tasks for us, such as browsing websites, filling out forms, booking tickets, and more. Basically, anything you’d do with a browser and a keyboard, they’ll handle. That future isn’t far off, and this article is a step in that direction.

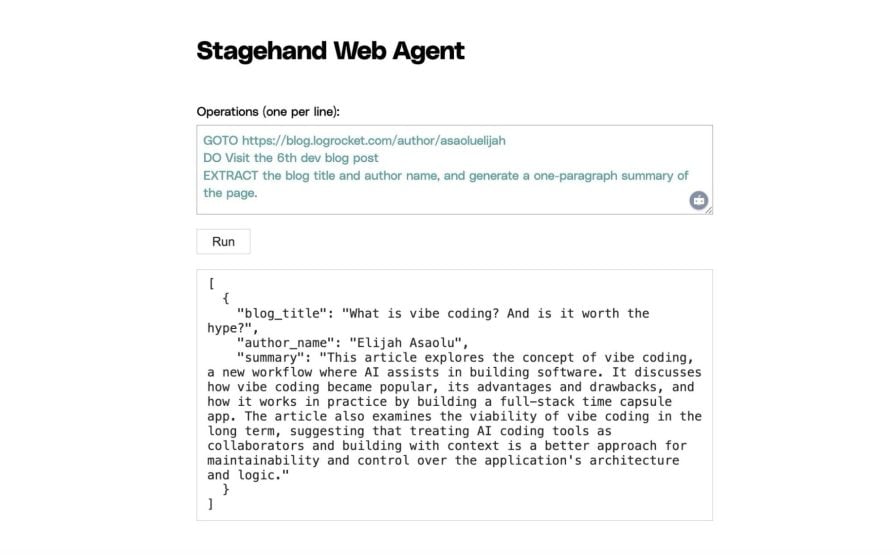

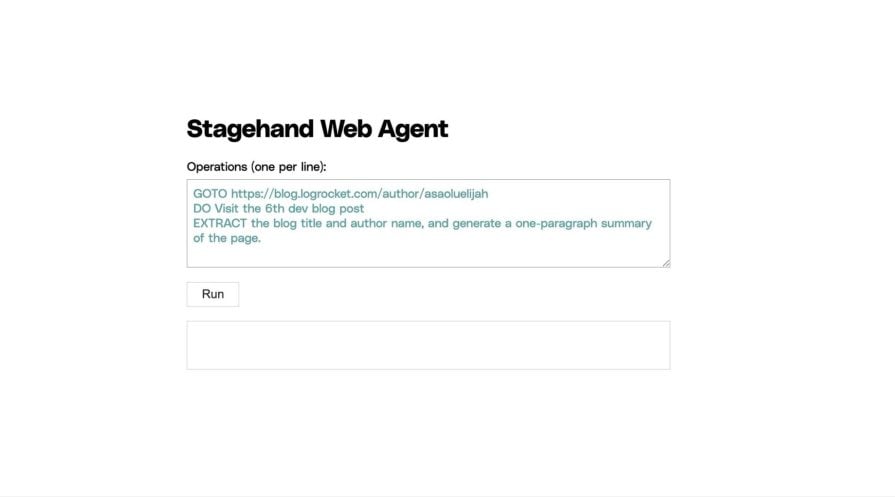

This tutorial explores how to build a web-based AI agent with Stagehand and Gemini. The agent will visit a given URL, follow natural-language instructions, and autonomously perform tasks like clicking buttons or extracting content.


Stagehand is a headless browser/web automation tool with built-in AI capabilities. It works with automation libraries like Puppeteer and Playwright, letting you perform AI-driven interactions or extract data from web pages.

In traditional Playwright/Puppeteer workflows, automating a button click usually means inspecting the page, identifying the DOM selector or XPath, and writing something like:

```typescript
await page.click('.long-unreadable-btn-selector');
```

With Stagehand, you can simply describe your intent in plain English:

```typescript
await page.act("Click the submit button");
```

Stagehand takes care of the rest, so there's no need to manually dig through the page's markup.

Under the hood, Stagehand processes the page’s HTML (and sometimes screenshots or extracted metadata) by passing it to an AI model. The model interprets your natural language instructions, identifies the right elements to interact with, and generates automation code on the fly.
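To make the last step of that pipeline concrete, here is a hypothetical sketch: once a model has picked an element, its answer can be represented as a small structured action (a selector, a method, and arguments) and dispatched to a Playwright-style page. The `ObservedAction` and `MinimalPage` types and the `dispatch` function are illustrative assumptions, not Stagehand's internal API; Stagehand's real implementation is more involved.

```typescript
// Hypothetical shape of a model's decision: which element, which action, which arguments.
interface ObservedAction {
  selector: string;
  method: "click" | "fill" | "press";
  args: string[];
}

// A minimal subset of a Playwright-like page (a real Playwright Page has these methods).
interface MinimalPage {
  click(selector: string): Promise<void>;
  fill(selector: string, value: string): Promise<void>;
  press(selector: string, key: string): Promise<void>;
}

// Turn the structured decision into a concrete browser call.
async function dispatch(page: MinimalPage, action: ObservedAction): Promise<void> {
  switch (action.method) {
    case "click":
      return page.click(action.selector);
    case "fill":
      return page.fill(action.selector, action.args[0] ?? "");
    case "press":
      return page.press(action.selector, action.args[0] ?? "Enter");
  }
}
```

Keeping the model's output as data rather than free-form code means the set of things it can do to the page is limited to the methods the dispatcher knows about.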

Stagehand supports a wide range of models, including OpenAI, Gemini, Claude, DeepSeek, and even local ones via Ollama. In this tutorial, we’ll use Gemini since it’s easy to get started with and offers a generous free tier.

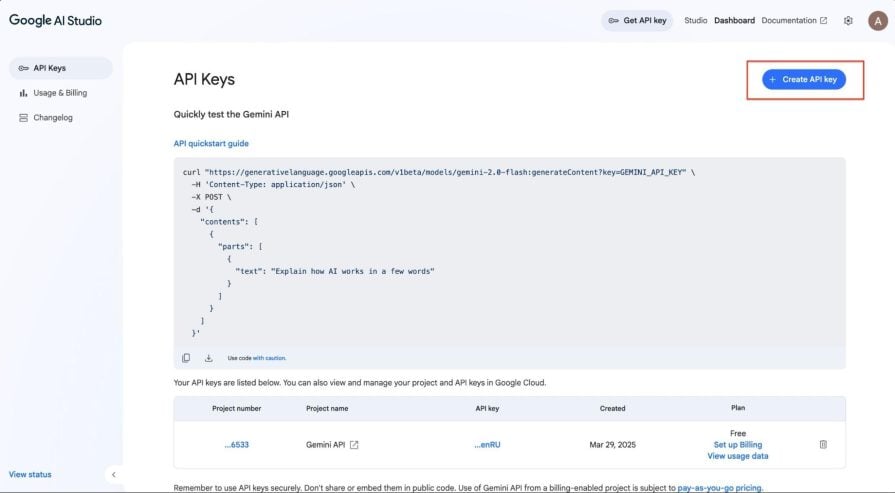

To create a new Gemini API key, head to Google AI Studio and click Create API Key, as shown below:

You’ll then be prompted to create a new Google Cloud project or select an existing one. Once completed, your API key will be displayed. Copy it and store it in a secure location.

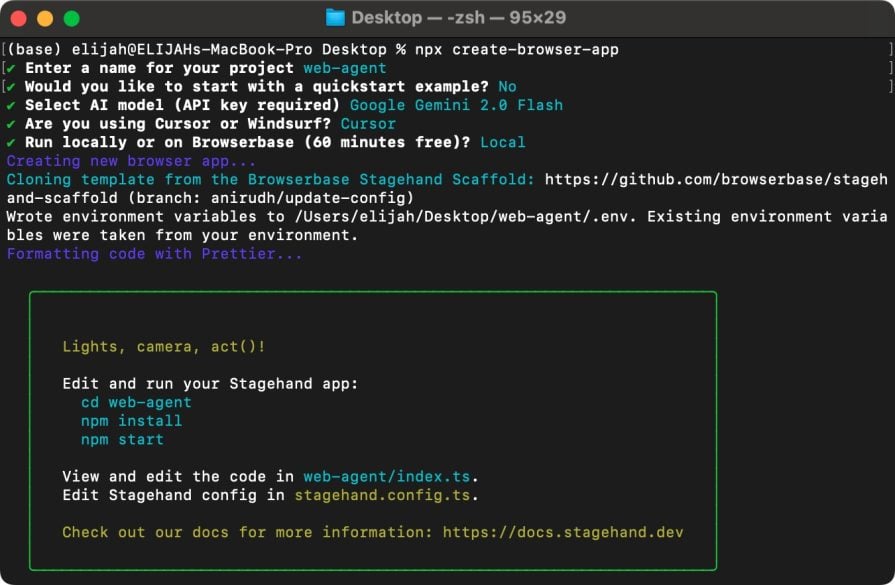

With your Gemini API key ready, let’s create a new Stagehand application by running the following command:

```bash
npx create-browser-app
```

You’ll be prompted to select options like your project name, preferred AI model, code editor, and more. Match your selections to those in the screenshot below:

After setup, move into your project directory and install dependencies:

```bash
cd web-agent
npm install
```

Once that’s done, create a new .env file in the root of the project and add the following line, replacing the API key with your actual Gemini key:

```bash
GOOGLE_API_KEY="PASTE_YOUR_GEMINI_KEY_HERE"
```
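If the key is missing or still set to the placeholder, the failure can surface later as a less obvious error from the model provider. A small startup guard makes the problem explicit. The `requireEnv` helper below is a hypothetical addition, not part of the generated project; it takes the environment as a parameter so it can be called as `requireEnv(process.env, "GOOGLE_API_KEY")`.

```typescript
// Hypothetical startup guard: return the variable's value or fail with a clear message.
function requireEnv(env: Record<string, string | undefined>, name: string): string {
  const value = env[name]?.trim();
  // Treat an empty value or the tutorial's placeholder as "not configured"
  if (!value || value === "PASTE_YOUR_GEMINI_KEY_HERE") {
    throw new Error(`Missing ${name}. Add it to your .env file.`);
  }
  return value;
}
```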

Once setup is complete, your project directory should look like:

```
web-agent
├─ 📁llm_clients
│  ├─ 📄aisdk_client.ts
│  └─ 📄customOpenAI_client.ts
├─ 📄.cursorrules
├─ 📄.env.example
├─ 📄.gitignore
├─ 📄README.md
├─ 📄index.ts
├─ 📄package.json
├─ 📄stagehand.config.ts
├─ 📄tsconfig.json
└─ 📄utils.ts
```

Here’s a quick overview of what each file does:

To test things out, open the index.ts file and replace its contents with the following:

```typescript
import { Stagehand, Page, BrowserContext } from "@browserbasehq/stagehand";
import StagehandConfig from "./stagehand.config.js";
import { z } from "zod";

async function main({
  page,
}: {
  page: Page;
  context: BrowserContext;
  stagehand: Stagehand;
}) {
  await page.goto("https://blog.logrocket.com/author/asaoluelijah/");

  await page.act("Visit the first blog post");

  const { title, summary } = await page.extract({
    instruction:
      "Extract the post title and generate a high level summary of the post.",
    schema: z.object({
      title: z.string().describe("The title of the article"),
      summary: z.string().describe("A summary of the article"),
    }),
  });

  console.log(`Title: ${title}`);
  console.log(`Summary: ${summary}`);
}

async function run() {
  const stagehand = new Stagehand({
    ...StagehandConfig,
  });
  await stagehand.init();

  const page = stagehand.page;
  const context = stagehand.context;

  await main({
    page,
    context,
    stagehand,
  });

  await stagehand.close();
}

run();
```

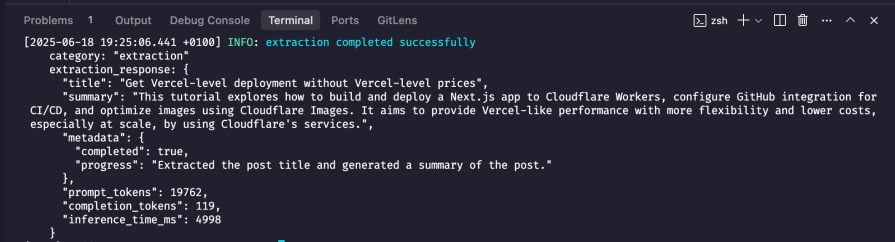

This script instructs Stagehand to navigate to the LogRocket author page using page.goto(), click the first blog post using a natural-language instruction via page.act(), and then extract the article title and generate a high-level summary using page.extract().

Run the app using the command below:

```bash
npm run start
```

Stagehand will open a new browser window, navigate to the page, click on the first blog post, extract the requested data, and log the output in your console, as shown below:

With the basics covered, let’s move on to building our agent logic.

There are multiple ways to design AI agent logic. A common approach involves setting a high-level goal, using an LLM to break it into sub-tasks, and looping through those tasks until completion. This method comes in many forms. One prominent example is computer-using agents (covered in the next section), which often depend on large, resource-heavy models.
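The goal-decomposition pattern can be outlined in a few lines. This is a hypothetical sketch, not Stagehand code: `plan` stands in for an LLM call that splits a goal into sub-tasks, and `runStep` stands in for whatever executes one sub-task in the browser. The step budget (`maxSteps`) is an assumption added because an LLM-driven loop has no natural stopping point if the planner keeps producing work.

```typescript
// Hypothetical signatures: in a real agent, `plan` would prompt an LLM and `runStep` would drive the browser.
type Planner = (goal: string) => Promise<string[]>;
type StepRunner = (task: string) => Promise<string>;

// Decompose the goal once, then work through the sub-tasks in order,
// stopping when the queue is empty or the step budget is exhausted.
async function runGoal(
  goal: string,
  plan: Planner,
  runStep: StepRunner,
  maxSteps = 10,
): Promise<string[]> {
  const queue = await plan(goal);
  const results: string[] = [];
  while (queue.length > 0 && results.length < maxSteps) {
    const task = queue.shift() as string;
    results.push(await runStep(task));
  }
  return results;
}
```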

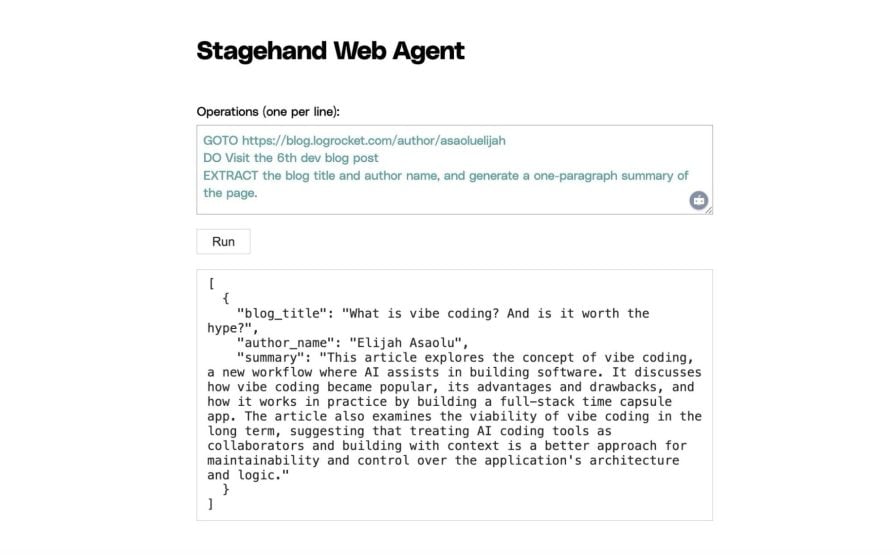

In this tutorial, we’ll use a more deterministic approach. Instead of goal decomposition, we’ll define operations like GOTO, DO, and EXTRACT that map directly to Playwright/Stagehand browser actions. Each operation is paired with a user prompt that describes what the agent should do.

In the end, the user can give instructions like:

```text
GOTO https://www.blog.com
EXTRACT the top 5 blog posts
GOTO https://www.anotherblog.com
DO Close newsletter modal
EXTRACT blogs relating to artificial intelligence
```

To get started, install the following packages:

```bash
npm install express body-parser
npm install --save-dev @types/express @types/body-parser
```

Next, create a new file named agent.ts in your project root and paste the following code into it:

```typescript
import { Stagehand, Page } from "@browserbasehq/stagehand";
import StagehandConfig from "./stagehand.config.js";
import { z } from "zod";

export type Command =
  | { type: "GOTO"; value: string }
  | { type: "DO"; value: string }
  | { type: "EXTRACT"; value: string }
  | { type: "SCROLL"; value: "down" | "up" }
  | { type: "WAIT"; value: number }
  | { type: "FINAL"; value: string };

// Run each command in order and collect whatever the EXTRACT steps return
async function main({ page, commands }: { page: Page; commands: Command[] }) {
  const extractedContent: unknown[] = [];

  for (const cmd of commands) {
    switch (cmd.type) {
      case "GOTO":
        await page.goto(cmd.value);
        break;

      case "DO": {
        // observe() returns candidate actions; act on the first one, if any
        const [action] = await page.observe(cmd.value);
        if (!action) {
          throw new Error(`No matching element found for: ${cmd.value}`);
        }
        await page.act(action);
        break;
      }

      case "EXTRACT": {
        const { results } = await page.extract({
          instruction: `Extract ${cmd.value}`,
          schema: z.object({
            results: z.any(),
          }),
        });
        extractedContent.push(results);
        break;
      }

      case "SCROLL":
        // Scroll with the mouse wheel; 800px per step is an arbitrary choice
        await page.mouse.wheel(0, cmd.value === "down" ? 800 : -800);
        break;

      case "WAIT":
        // value is a duration in milliseconds
        await page.waitForTimeout(cmd.value);
        break;

      default:
        // FINAL is parsed but not acted on yet
        break;
    }
  }

  return extractedContent;
}

// Turn plain-text input (one operation per line) into structured commands
export function parseCommands(input: string): Command[] {
  return input
    .split("\n")
    .map((line) => line.trim())
    .filter(Boolean)
    .map((line) => {
      const [type, ...rest] = line.split(" ");
      const value = rest.join(" ");

      switch (type.toUpperCase()) {
        case "GOTO":
          return { type: "GOTO", value } as Command;
        case "DO":
          return { type: "DO", value } as Command;
        case "EXTRACT":
          return { type: "EXTRACT", value } as Command;
        case "SCROLL":
          return { type: "SCROLL", value: value as "down" | "up" } as Command;
        case "WAIT":
          return { type: "WAIT", value: Number(value) } as Command;
        case "FINAL":
          return { type: "FINAL", value } as Command;
        default:
          return null;
      }
    })
    .filter(Boolean) as Command[];
}

// Start a Stagehand session, run the commands, and always close the browser
export async function runAgent({ commands }: { commands: Command[] }) {
  const stagehand = new Stagehand({ ...StagehandConfig });
  await stagehand.init();
  const page = stagehand.page;

  let result: unknown[] = [];
  try {
    result = await main({ page, commands });
  } finally {
    await stagehand.close();
  }

  return result;
}
```

This file contains the core logic for the AI agent. It defines a command type and includes a main() function that loops through each command, performing actions like navigating to a URL, interacting with UI elements, or extracting data. It also includes a parseCommands() function that turns plain-text input into structured commands and a runAgent() function to execute operations using Stagehand.
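parseCommands() casts SCROLL and WAIT values without checking them, so a line like `SCROLL sideways` or `WAIT soon` slips through as a malformed command, and unknown operations are dropped silently. A stricter parser could validate arguments and report the offending line instead. The `parseLine` and `parseProgram` functions below are a hypothetical sketch, not part of the tutorial's code; the `Command` union is redeclared so the snippet compiles without importing Stagehand.

```typescript
// Same union as in agent.ts, redeclared so this sketch compiles on its own.
type Command =
  | { type: "GOTO"; value: string }
  | { type: "DO"; value: string }
  | { type: "EXTRACT"; value: string }
  | { type: "SCROLL"; value: "down" | "up" }
  | { type: "WAIT"; value: number }
  | { type: "FINAL"; value: string };

// Parse a single line, throwing a descriptive error instead of producing a malformed command.
function parseLine(line: string, lineNo: number): Command {
  const [rawType = "", ...rest] = line.trim().split(/\s+/);
  const type = rawType.toUpperCase();
  const value = rest.join(" ");

  switch (type) {
    case "GOTO":
    case "DO":
    case "EXTRACT":
    case "FINAL":
      if (!value) throw new Error(`Line ${lineNo}: ${type} needs an argument`);
      return { type, value } as Command;
    case "SCROLL":
      if (value !== "down" && value !== "up") {
        throw new Error(`Line ${lineNo}: SCROLL expects "up" or "down"`);
      }
      return { type: "SCROLL", value: value === "up" ? "up" : "down" };
    case "WAIT": {
      // Number("") is 0, so an empty argument is rejected explicitly
      const ms = Number(value);
      if (value === "" || !Number.isFinite(ms) || ms < 0) {
        throw new Error(`Line ${lineNo}: WAIT expects a duration in milliseconds`);
      }
      return { type: "WAIT", value: ms };
    }
    default:
      throw new Error(`Line ${lineNo}: unknown operation "${rawType}"`);
  }
}

// Parse a whole program, skipping blank lines but keeping original line numbers in errors.
function parseProgram(input: string): Command[] {
  return input
    .split("\n")
    .map((line, i) => ({ line: line.trim(), lineNo: i + 1 }))
    .filter(({ line }) => line.length > 0)
    .map(({ line, lineNo }) => parseLine(line, lineNo));
}
```

Failing before the browser launches means a typo on the last line doesn't waste a full agent run, and the error message can point the user at the exact line to fix.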

To build a simple frontend where users can submit commands, create a new folder named public. Inside it, add an index.html file and paste in the following:

```html
<!DOCTYPE html>
<html>
  <head>
    <title>Stagehand Web Agent UI</title>
    <style>
      body {
        font-family: "Polysans", sans-serif;
        margin: 0;
        padding: 0;
      }
      main {
        height: 100vh;
        display: flex;
        justify-content: center;
        align-items: center;
      }
      label {
        font-size: 1rem;
      }
      textarea {
        width: 100%;
        height: 90px;
        font-size: 1rem;
        margin: 0.5em 0 1em 0;
        padding: 0.5em;
        font-family: inherit;
        background: #fff;
        color: cadetblue;
        resize: vertical;
        box-sizing: border-box;
      }
      button {
        padding: 0.4em 1.2em;
        font-size: 1rem;
        border: 1px solid #bbb;
        background: #fff;
        color: #222;
        cursor: pointer;
      }
      #result {
        margin-top: 1.2em;
        font-size: 1rem;
        background: #fff;
        border: 1px solid #bbb;
        padding: 0.7em 0.8em;
        color: #222;
        min-height: 2.5em;
        max-height: 300px;
        overflow: auto;
        white-space: pre-wrap;
        font-family: monospace;
        max-width: 40vw;
      }
      form {
        margin-bottom: 0;
      }
    </style>
  </head>
  <body>
    <main>
      <div>
        <h1>Stagehand Web Agent</h1>
        <form id="agent-form" method="POST" action="/run">
          <label for="commands">Operations (one per line):</label><br />
          <textarea name="commands" id="commands">
GOTO https://example.com
EXTRACT a summary of the page</textarea><br />
          <button type="submit">Run</button>
        </form>
        <div id="result"></div>
      </div>
    </main>
    <script>
      document
        .getElementById("agent-form")
        .addEventListener("submit", async function (e) {
          e.preventDefault();
          const form = e.target;
          const data = new FormData(form);
          const commands = data.get("commands");

          document.getElementById("result").textContent = "Running...";

          const res = await fetch("/run", {
            method: "POST",
            headers: { "Content-Type": "application/json" },
            body: JSON.stringify({ commands }),
          });

          const html = await res.text();
          document.getElementById("result").innerHTML = html;
        });
    </script>
  </body>
</html>
```

This HTML creates a basic form where users can enter a list of operations (like GOTO, DO, or EXTRACT) line by line. When submitted, the commands are sent to the server, and the results are displayed below the form.

Now, let’s tie everything together with a simple Express server. In your project root, create a file named server.ts and add the following code:

```typescript
import express, { Request, Response } from "express";
import bodyParser from "body-parser";
import path from "path";
import { fileURLToPath } from "url";
import { runAgent, parseCommands } from "./agent.js";

const app = express();
const PORT = process.env.PORT || 3000;

const __filename = fileURLToPath(import.meta.url);
const __dirname = path.dirname(__filename);

app.use(bodyParser.urlencoded({ extended: false }));
app.use(bodyParser.json());
app.use(express.static(path.join(__dirname, "public")));

app.post("/run", async (req: Request, res: Response) => {
  const input = req.body.commands || req.body.commandsText || "";
  const commands = parseCommands(input);

  let output = "";
  try {
    const results = await runAgent({ commands });
    output = `${JSON.stringify(results, null, 2)}`;
  } catch (err: any) {
    output = `<pre>❌ Error: ${err.message}</pre>`;
  }

  res.send(output);
});

app.listen(PORT, () => {
  console.log(`Web UI running at http://localhost:${PORT}`);
});
```

This server uses Express to serve the frontend and handle form submissions. When a POST request is made to /run, it parses the input commands, runs them using the agent logic, and returns either the output or an error message.
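One caveat: the frontend writes the server's response into the page with `innerHTML`, and both the extracted results and error messages can contain text copied from arbitrary websites. Escaping that text before sending it keeps it from being interpreted as markup. The `escapeHtml` helper below is a hypothetical addition, not part of the original server; it could wrap the output, for example `` `<pre>${escapeHtml(JSON.stringify(results, null, 2))}</pre>` ``, with `err.message` escaped the same way.

```typescript
// Hypothetical helper: escape the characters that are significant in HTML text and attributes.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;") // must run first so later entities aren't double-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}
```

A simpler alternative is to have the frontend use `textContent` instead of `innerHTML`, since the `#result` box already preserves whitespace with `white-space: pre-wrap`.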

Finally, update your package.json to include a new script for launching the web agent:

```json
"scripts": {
  "build": "tsc",
  "start": "tsx index.ts",
  "web": "tsx server.ts"
}
```

Then, start the agent by running:

```bash
npm run web
```

Open http://localhost:3000 in your browser to view the UI.

Try entering the following operations to test the agent:

The agent will launch a browser, navigate to the specified URL, carry out the defined actions, and return the results, as shown below:

The current setup gives you full control over each step, making it ideal for predictable, repeatable tasks. But there’s a more flexible alternative: simply tell the agent what you want, and let it figure out the rest.

For example:

“Visit starbucks.com and order a coffee.”

The agent handles the entire process (navigating the site, clicking buttons, and filling out forms) without step-by-step instructions. Stagehand supports this behavior through computer-using models. Let's take a look at how that works.

Computer-using agents (CUAs) represent a recent breakthrough in AI automation. Unlike traditional bots that depend on structured data or predefined selectors, CUAs interact with applications visually, much like a human would, by interpreting what's on the screen.

They operate in a tight feedback loop: the model issues an action (like clicking a button or entering text), receives a screenshot of the updated page, and uses that visual context to decide the next step. This cycle repeats until the task is complete.
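That loop can be sketched as follows. This is a conceptual outline only: `Capture`, `Decide`, and `Perform` are hypothetical stand-ins for taking a screenshot, calling a computer-use model, and executing the action it returns, and the `maxSteps` budget is an assumption. Stagehand's `agent()` runs its own version of this loop for you.

```typescript
// Hypothetical actions a computer-use model might return for one step.
type Action =
  | { kind: "click"; x: number; y: number }
  | { kind: "type"; text: string }
  | { kind: "done"; summary: string };

type Screenshot = string; // e.g. a base64-encoded image; kept abstract here
type Capture = () => Promise<Screenshot>;
type Decide = (task: string, screen: Screenshot, history: Action[]) => Promise<Action>;
type Perform = (action: Action) => Promise<void>;

// Look at the screen, let the model pick an action, perform it, and repeat
// until the model reports it is done or the step budget runs out.
async function computerUseLoop(
  task: string,
  capture: Capture,
  decide: Decide,
  perform: Perform,
  maxSteps = 20,
): Promise<{ summary: string; steps: number }> {
  const history: Action[] = [];
  for (let step = 1; step <= maxSteps; step++) {
    const screen = await capture();
    const action = await decide(task, screen, history);
    if (action.kind === "done") return { summary: action.summary, steps: step };
    await perform(action);
    history.push(action);
  }
  throw new Error(`Gave up after ${maxSteps} steps`);
}
```

Every iteration sends a fresh screenshot to the model, which is why the cost of this approach grows with the number of steps.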

That said, CUAs can be costly. Each step involves sending screenshots and context to a model, which can easily consume thousands of tokens per loop. Depending on the model, pricing ranges from about $0.15 to over $10 per million tokens, and a single action can use 5,000-10,000 tokens.
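To put those figures together: cost is tokens per action × price per million tokens × number of actions ÷ 1,000,000. The sketch below applies that formula to the two ends of the ranges above; the 30-step task length is an assumption for illustration, not a measured figure.

```typescript
// Estimated cost in USD for a run of `steps` actions.
function estimateRunCost(tokensPerAction: number, usdPerMillionTokens: number, steps: number): number {
  return (tokensPerAction * usdPerMillionTokens * steps) / 1_000_000;
}

// Cheapest and most expensive ends of the quoted ranges, for a hypothetical 30-step task.
const low = estimateRunCost(5_000, 0.15, 30); // 150,000 tokens at $0.15/M = $0.0225
const high = estimateRunCost(10_000, 10, 30); // 300,000 tokens at $10/M = $3.00
console.log(`~$${low.toFixed(4)} to ~$${high.toFixed(2)} per task`);
```

The spread is more than a hundredfold, so model choice and the number of steps a task takes matter more than any single action.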

Stagehand supports CUAs through integrations with OpenAI’s computer-use-preview model and Anthropic’s Claude models, including Claude 3.7 Sonnet and Claude 3.5 Sonnet.

To get started, retrieve an API key from the provider you're using. Then use stagehand.page.goto() to navigate to a starting page, create an agent with stagehand.agent(), and hand it a task with agent.execute() to let the model take control.

Here’s an example:

```typescript
import { Stagehand, Page, BrowserContext } from "@browserbasehq/stagehand";
import StagehandConfig from "./stagehand.config.js";

async function main({
  page,
  context,
  stagehand,
}: {
  page: Page;
  context: BrowserContext;
  stagehand: Stagehand;
}) {
  await stagehand.page.goto("https://www.amazon.com");

  const agent = stagehand.agent({
    provider: "openai", // or "anthropic"
    model: "computer-use-preview", // for OpenAI; use a Claude model ID if using Anthropic
    instructions: `You are a helpful assistant that can use a web browser.
      Do not ask follow-up questions. Just perform the task based on the instruction.`,
    options: {
      // With Anthropic, pass process.env.ANTHROPIC_API_KEY instead
      apiKey: process.env.OPENAI_API_KEY,
    },
  });

  await agent.execute(
    "Search for the book 'Atomic Habits' on Amazon and open the product page."
  );
}

async function run() {
  const stagehand = new Stagehand({
    ...StagehandConfig,
  });
  await stagehand.init();

  const page = stagehand.page;
  const context = stagehand.context;

  await main({
    page,
    context,
    stagehand,
  });

  await stagehand.close();
}

run();
```

In this example, the agent launches a browser, navigates to Amazon, searches for Atomic Habits, and opens the product page, all autonomously. Hopefully, Stagehand will add support for multimodal Gemini models soon, enabling this workflow across a broader range of providers.

In this tutorial, we explored how to build a web-based AI agent using Stagehand and Gemini (or any other LLM provider of your choice). We began with a deterministic setup using operations like GOTO, DO, and EXTRACT to define specific tasks. From there, we built a simple web UI to run those instructions interactively.

We also looked at more advanced computer-using agents (CUAs), where models like OpenAI’s computer-use-preview or Anthropic’s Claude can autonomously control the browser and execute high-level goals without step-by-step instructions.

These kinds of web-based AI agents are becoming increasingly important as automation shifts from static scripts to intelligent, adaptable systems. Knowing how to build them gives developers a head start in creating tools that can save valuable time.

You can also find the full source code for this project on GitHub.
