Generative AI is one of those things that will change our society at its core. We felt that with computers, the internet, and mobile phones. Previous generations might have felt the same with TVs, radios, cars, planes, and electricity…

Generative AI applications are incredibly diverse, and the user interface is astonishingly straightforward. Simply type in a sentence and watch the magic unfold; it couldn’t be more designed for the masses. Just as we don’t have “computioneers” or “internet specialists” today, generative AI will be utilized by everyone soon. In fact, its usage will be expected of everyone.

Generative AI is revolutionary in nature and has a wide range of applications. Because of this, discussing generative AI is not about questioning if a particular craft will be replaced, but rather how every craft will be transformed. Product management is no exception, and you can start reaping the rewards right now.

Before I show you how you can step up your product game right now with ChatGPT, I first need to take you on a detour: LLMs and prompt engineering.

ChatGPT doesn’t possess awareness — it does not know anything and it’s not actually talking to you. It doesn’t even know what “you” are. ChatGPT is one of several LLMs available right now, and these have existed since 2018.

LLM, or large language model, refers to a deep learning model capable of producing complex language given a complex language prompt. A lot of big words, for sure, but I won’t be explaining any of those in this article. If you want to know more, I highly recommend you watch this video after you have finished reading this. What is important here is the understanding of how LLMs work.

LLMs are different from previous text model iterations because they can engage in unsupervised learning, meaning that they can “teach themselves.” LLMs analyze vast amounts of training data and recognize patterns from their observations without the need for human intervention.

As a simple example, think of a pair of parentheses. When you see an open one, there is a high probability that a closing one will follow at some point. That’s a pattern that you can easily observe, and so can the machine.

The LLM machine is capable of observing those patterns on a very large scale. “I love…” has a higher chance of being followed by “you” compared to “trains.” The sentence “the early bird…” will probably be completed with “gets the worm” instead of “never gonna give you up.” Apply this same logic over and over again at progressively more complex arrangements and you have yourself a working LLM.
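To make the “probability calculator” idea concrete, here is a toy sketch in Python. It is only an illustration under heavy assumptions: the four-sentence corpus is made up, the function names are hypothetical, and a real LLM uses a neural network over tokens rather than simple word counts. The core idea, estimating what is likely to come next from what has been seen before, is the same.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, purely for illustration (not real training data).
corpus = [
    "i love you",
    "i love you",
    "i love trains",
    "the early bird gets the worm",
]

# Count how often each word is followed by each other word.
follow_counts = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1


def next_word_probabilities(word):
    """Return {next_word: probability} based on the observed counts."""
    counts = follow_counts[word]
    total = sum(counts.values())
    return {candidate: count / total for candidate, count in counts.items()}


def most_likely_next(word):
    """Pick the single most probable next word (word must appear in the corpus)."""
    probabilities = next_word_probabilities(word)
    return max(probabilities, key=probabilities.get)


print(next_word_probabilities("love"))
print(most_likely_next("love"))
```

In this toy corpus, “you” follows “love” twice and “trains” only once, so “you” wins. Scale the same counting idea up to far longer contexts and far more text, and you get the intuition behind an LLM.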

OpenAI’s specific LLM, ChatGPT, has an added layer of training: it’s supervised by humans. It was one of the first to do it, and that’s why it looks so different from its predecessors. More than that, ChatGPT can take thousands of words of input into account when predicting a response. That means that it’s predicting not only based on pattern but also on context.

All of that is futuristic tech, but the one thing to take away from all these explanations is a pretty mundane understanding: LLMs are just probability calculators, and by knowing that, you can play with them.

Enter prompt engineering.

Prompt engineering is one of those fields of study that is so new that the available content swings between snake oil sales pitches and academic papers. Extracting actual knowledge from it is an exercise in critical thinking, and that’s why it’s important for us to know what ChatGPT is made of as an LLM.

Context aside, prompt engineering basically just means manipulating what you say to a machine to receive the best possible answer for your inquiry. Prompt engineering is not limited to ChatGPT — you need it for basically any text-based model. Let’s focus on OpenAI’s cash cow for the purpose of this piece.

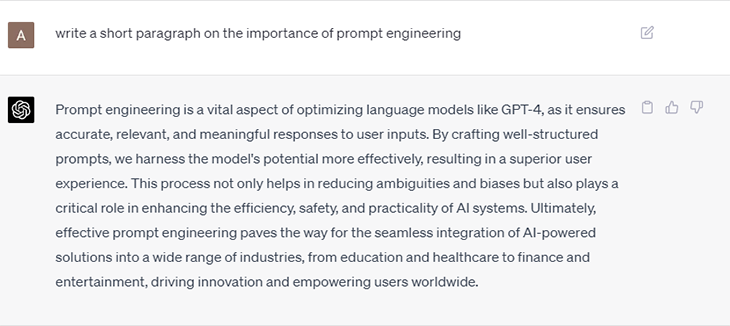

To explain how it works, take a look at the example below, where I ask GPT-4 to write a short paragraph on the importance of prompt engineering:

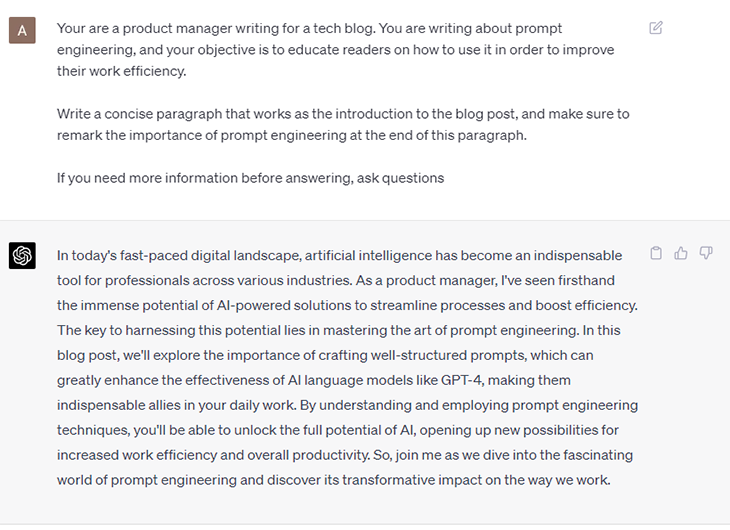

Now, let’s use some prompt engineering. I specify:

“You are a product manager writing for a tech blog and writing about prompt engineering. The objective is to educate readers on how to use it in order to improve their work efficiency.

Write a concise paragraph that works as the introduction to the blog post and make sure to remark the importance of prompt engineering at the end of this paragraph.

If you need more information before answering, ask questions:”

The results speak for themselves. The more context you provide in your prompt, the better the AI will understand what you want. Prompt engineering is the tool that broadens the application horizon of ChatGPT, and that’s how you can transform it into a powerful product manager companion.

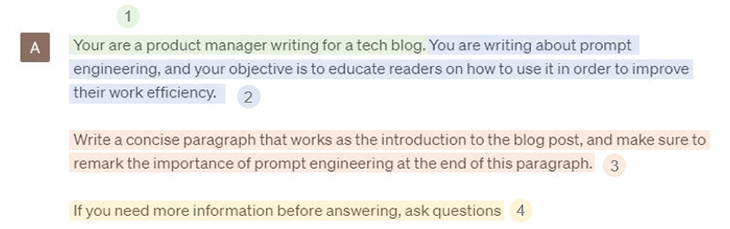

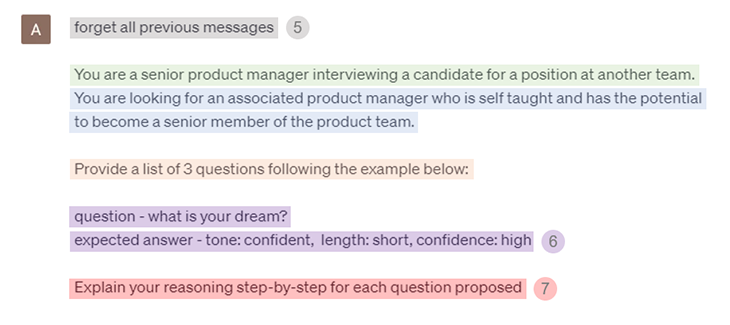

Let’s break down the fundamentals of prompt engineering. The numbers in the image below correspond to the sections that follow, each explaining one component of a prompt:

Giving the AI a role is absolutely fundamental. It’s the starting point of most prompts and it’s responsible for narrowing down the possibilities that the model will consider before providing answers. A well-defined role is half the work done.

The context you give can be as long as you need it to be, but there is a balance to be struck here. If you provide too much information, the model might lose track of what you actually want. Think about what the fundamentals of your request are and orient the machine accordingly.

The command is the actual request you make of the machine. Without it, the model is free to infer what you need and all sorts of things can come out of your prompt. The shorter your command, the more room for creativity ChatGPT will have.

Prompt engineering is not an exact science, and you might provide too little context. Asking whether the model has all the information it needs before it answers helps confirm that you’ve shared enough. Most often the machine will not ask for more, but it might need further instructions for more complex commands.
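The four components above (role, context, command, and the invitation to ask questions) can be sketched as a small Python helper. Treat this as a rough illustration rather than any official tooling: the function name `build_prompt` and its parameters are made up for this example, and the sample text is adapted from the prompt shown earlier in this article.

```python
def build_prompt(role, context, command, ask_questions=True):
    """Assemble a prompt from the four components (hypothetical helper)."""
    parts = [
        f"You are {role}.",  # 1. role: narrows down what the model considers
        context,             # 2. context: the fundamentals of the request
        command,             # 3. command: the actual request
    ]
    if ask_questions:
        # 4. questions: let the model ask for missing information first
        parts.append("If you need more information before answering, ask questions.")
    # Separate the components with blank lines, skipping any empty ones.
    return "\n\n".join(part.strip() for part in parts if part.strip())


prompt = build_prompt(
    role="a product manager writing for a tech blog about prompt engineering",
    context="The objective is to educate readers on how to use it in order to improve their work efficiency.",
    command="Write a concise paragraph that works as the introduction to the blog post.",
)
print(prompt)
```

The output is plain text that you can paste into ChatGPT. Keeping the components as separate arguments makes it easy to swap the role or tighten the command without rewriting the whole prompt.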

Those four prompt levers are enough for us to create our ChatGPT product hacks, but there are some more basic tools that can be used to maximize responses. The example below sheds some light on some of those:

Instead of opening a new chat every time, you can erase previous context from the conversation when you want answers to disregard whatever has been prompted and answered before. You can do this by telling the AI to forget all previous messages, or some variation of that.

The first prompt example I showed is a “zero-shot” prompt, meaning I didn’t give the AI any example of the response pattern to follow. Prompts such as the one in the example above, which include a single sample of the expected answer structure, are called “one-shot” prompts. Beyond zero and one, prompts can also be “few-shot”: you provide several examples to make sure that ChatGPT answers accordingly.
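The difference between zero-shot, one-shot, and few-shot prompts comes down to how many examples you attach. Here is a minimal Python sketch of that idea. The helper names `build_shot_prompt` and `shot_label` are assumptions invented for this illustration, and the user/response pairs are borrowed from the discovery prompt later in this article.

```python
def build_shot_prompt(instruction, examples=()):
    """Append zero, one, or several (user, response) examples to an instruction."""
    lines = [instruction]
    for user, response in examples:
        lines.append(f"User: {user}")
        lines.append(f"Response: {response}")
    return "\n\n".join(lines)


def shot_label(examples):
    """Name the prompt style based on how many examples it carries."""
    count = len(examples)
    if count == 0:
        return "zero-shot"
    if count == 1:
        return "one-shot"
    return "few-shot"


instruction = "Give me a one-paragraph response for each possible user following the example below:"
examples = [
    ("young unemployed female", "I like cats…"),
    ("elder retired male", "I like dogs…"),
    ("couple without kids", "we don’t have pets"),
]

print(shot_label([]), "|", shot_label(examples[:1]), "|", shot_label(examples))
print(build_shot_prompt(instruction, examples))
```

With no examples, the model is free to pick its own answer format. Each example you add pulls the answer closer to the structure you showed.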

Since LLMs are just probability machines, they can produce reasoning that appears to make sense but is actually false. For more complex tasks, or those that demand more creativity from the machine, forcing it to type out a step-by-step thought process can help you get better results.

There are a bunch of more technical tweaks you can use, such as playing with the top-p value, the temperature, or changing models. I won’t go over those because they add no real value to the intent of this article, but feel free to dig in and discover more after you’ve finished reading.

Here is the answer I got for the above prompt, by the way:

I know it was a long detour, but the examples I’ll provide below wouldn’t make sense without the lengthy introduction. Ideally, I would paste the entire conversation between me and ChatGPT here, but since I don’t want this to be a three-part article, I’ll just share the prompts and I’ll let you test those yourself to see the results. Spoiler alert: they’re pretty impressive.

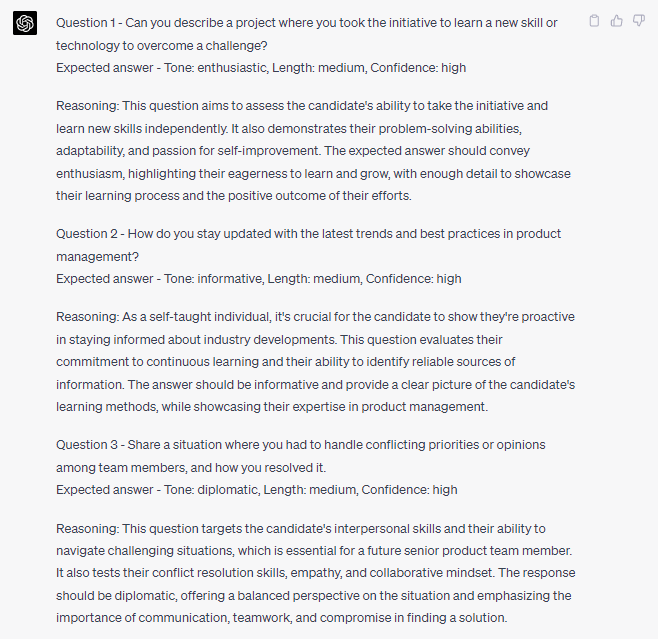

The first prompt is for user story creation:

I prompt GPT by saying:

“You are a product owner for a SAAS payment start-up based in Brazil and are responsible for the core payment platform which connects customers to acquirers.

You need to develop a new tokenization feature and your job is to create user stories for the engineering team to start development. Have in mind that you’re not responsible for the checkout experience, just communication of credit card data between buyer and acquirer. Clients should be able to manage their tokens.

Provide a brief explanation of the step-by-step rationale for each user story.

Before creating the user stories, ask me questions if you need more information to provide an answer.”

Not only did it ask questions, but it also gave me an answer five user stories long, with details about industry regulation protocols and API-specific commands that users should be able to perform. I’ve worked in the payments industry for a very long time and I can assure you that there was very little input I would need to add as a payment product manager.

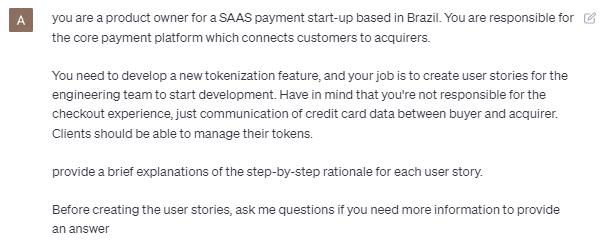

You can also use AI and ChatGPT for the discovery process:

As seen in the screenshot above, I tell the AI that:

“I’m a product manager and you are a potential user of my product.

I work at an app that connects people who can provide hosting and daycare for dogs and cats with people willing to pay for this service. We are having a challenging time meeting demand and offer within the platform. I am interviewing you to understand why you have never completed your provider form.

Give me a one-paragraph response for each possible user following the example below:

User: young unemployed female

Response: I like cats…

User: elder retired male

Response: I like dogs…

User: couple without kids

Response: we don’t have pets

Ask questions if you need more information before answering.”

Now, if you’ve tried to interview users, you know how hard it is to find people willing to give you feedback. Worse than that, there is a fine art involved in phrasing questions so that they don’t bias users’ responses.

Although ChatGPT is far from being a real user, it has basically the entire internet as a data source to infer what a person who fits the description would most likely tell you. Sue me, but I think that’s a good enough proxy for quick qualitative research without all the pain of going through the challenges I mentioned above.

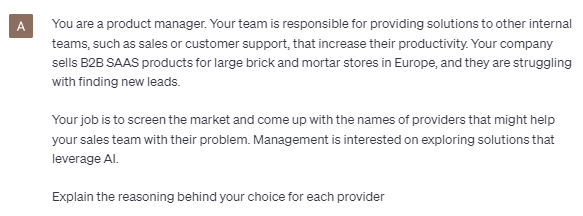

ChatGPT is a good resource for identifying third-party providers for different services. Keep in mind that the training data of the current versions of ChatGPT only goes through September 2021, so there may be newer tools it doesn’t know about:

In the prompt above, I tell ChatGPT:

“You are a product manager and your team is responsible for providing solutions to other internal teams, such as sales or customer support, that increase their productivity. The company sells B2B SAAS products for large brick-and-mortar stores in Europe, and they are struggling with finding new leads.

Your job is to screen the market and come up with the names of providers that might help the sales team with their problems. Management is interested in exploring solutions that leverage AI.

Explain the reasoning behind your choice for each provider.”

Being a product manager is not always about relying on your internal output capability to deliver results. A lot of times, you will need to leverage third-party solutions. Although ChatGPT is not a search engine, it sure has some ideas to share regarding business that can help you.

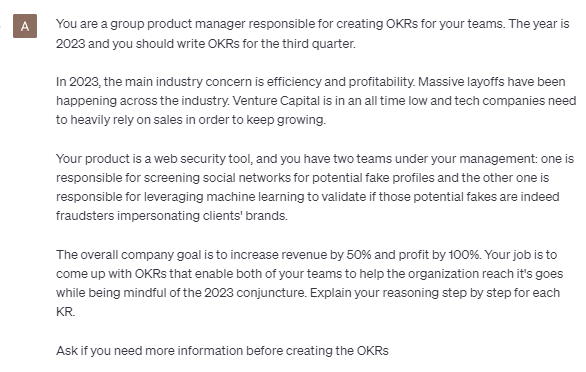

Finally, I’ve found ChatGPT helpful for defining OKRs:

This prompt leans on the heavy side, providing a lot of context and expecting a considerably complex answer:

“You are a group product manager responsible for creating OKRs for your teams. The year is 2023 and you should write OKRs for the third quarter.

In 2023, the main industry concern is efficiency and profitability. Massive layoffs have been happening across the industry. Venture Capital is at an all-time low and tech companies need to heavily rely on sales to keep growing.

Your product is a web security tool, and you have two teams under your management: one is responsible for screening social networks for potential fake profiles and the other one is responsible for leveraging machine learning to validate if those potential fakes are indeed fraudsters impersonating clients’ brands.

The overall company goal is to increase revenue by 50% and profit by 100%. Your job is to come up with OKRs that enable both of your teams to help the organization reach its goals while being mindful of the 2023 conjuncture. Explain your reasoning step by step for each KR.

Ask if you need more information before creating the OKRs.”

Since the prompt was well written, not only was ChatGPT able to provide OKRs for both teams, but it managed to consistently explain its reasoning as I further probed the proposed KRs. You might not be able to rely 100 percent on the output, but it’s definitely a good discussion starter.

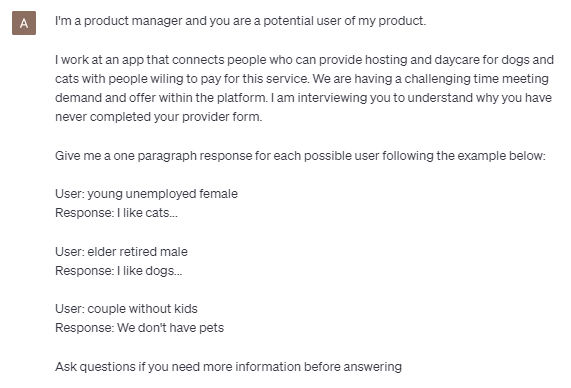

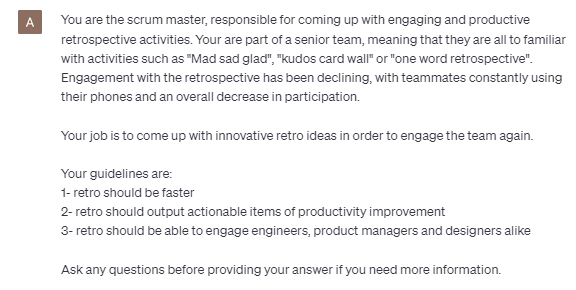

Finally, AI can be great for team activities. I’ve found it’s quite knowledgeable about scrum and agile in general, so it has a lot of context already built up:

The prompt I give is:

“You are the scrum master, responsible for coming up with engaging and productive retrospective activities. You are part of a senior team, meaning that they are all too familiar with activities such as “mad sad glad,” “kudos card wall,” or “one word retrospective.” Engagement with the retrospective has been declining, with teammates constantly using their phones and an overall decrease in participation.

Your job is to come up with innovative retro ideas in order to engage the team again.

Your guidelines are:

1- retro should be faster

2- retro should output actionable items of productivity improvement

3- retro should be able to engage engineers, product managers and designers alike

Ask any questions before providing your answer if you need more information.”

This prompt provided well-known retrospective suggestions but, most impressively, it also created a new one I’d never heard of! I searched for it on the internet and, although I found some versions of it, I wasn’t able to find exactly what ChatGPT suggested. It really took the request to be “innovative” seriously and produced something brand new!

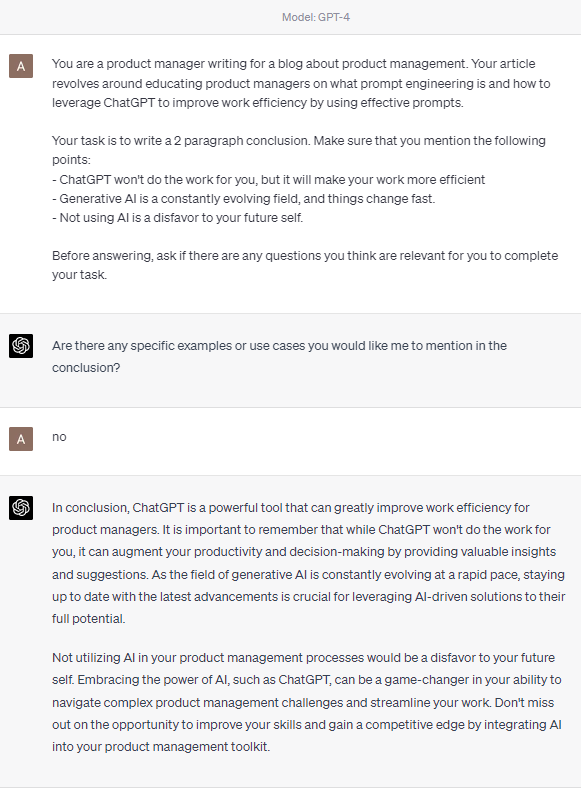

ChatGPT says:

“In conclusion, ChatGPT is a powerful tool that can greatly improve work efficiency for product managers. It is important to remember that while ChatGPT won’t do the work for you, it can augment your productivity and decision-making by providing valuable insights and suggestions. As the field of generative AI is constantly evolving at a rapid pace, staying up to date with the latest advancements is crucial for leveraging AI-driven solutions to their full potential.

Not utilizing AI in your product management processes would be a disfavor to your future self. Embracing the power of AI, such as ChatGPT, can be a game-changer in your ability to navigate complex product management challenges and streamline your work. Don’t miss out on the opportunity to improve your skills and gain a competitive edge by integrating AI into your product management toolkit.”

I rest my case…
