Chances are, you’ve already dealt with common problems such as thread starvation, callback hell, and threads being blocked for longer than they should be during your career as a programmer. The fact is, working with threads isn’t easy, especially when your systems rely heavily on asynchronous routines.

Many languages have developed simplifications for async coding, such as goroutines in Go, which are basically lightweight threads managed by the Go runtime. Similar features are provided by Clojure with its core.async facilities for async programming, by Node.js with its well-known event loop, and now by Kotlin with coroutines.

In this article, we’ll explore the emerging universe of Kotlin coroutines in an attempt to demonstrate how they can simplify your asynchronous programming within the language.

Kotlin doesn’t have the built-in async capabilities that some other languages have, such as the reserved words async and await in JavaScript. The language itself provides only the suspend modifier and a small set of low-level primitives; JetBrains ships the higher-level building blocks in the kotlinx.coroutines library, which includes coroutine builders such as launch and async, among others.

Take a look at the following example extracted from the playground environment JetBrains provides:

    import kotlinx.coroutines.*

    suspend fun main() = coroutineScope {
        launch {
            delay(1000)
            println("Kotlin Coroutines World!")
        }
        println("Hello")
    }

Which line do you think is going to be printed first? You’re right if your answer was “Hello.” The launch block starts a new coroutine that runs concurrently with the rest of the scope. That coroutine is suspended for one second by delay, while the second println runs immediately.

Conceptually, a coroutine is similar to a very lightweight thread. As with threads in Java, coroutines need to be explicitly started, which you can do via the launch coroutine builder in the context of a CoroutineScope. The scope bounds the coroutine’s lifetime: in GlobalScope, for example, a coroutine can live as long as the application does.

The coroutineScope builder creates a coroutine scope that waits for all of its child coroutines to complete before performing its own completion.

That’s a great feature for those who wish to group different coroutines under a single parent. It’s a very similar concept to runBlocking, with one key difference: runBlocking blocks the current thread while it waits, whereas coroutineScope only suspends, leaving the underlying thread free for other work.
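To make the waiting behavior concrete, here is a minimal sketch that assumes kotlinx.coroutines is on the classpath. The function name runChildren and the event labels are hypothetical and exist only for illustration.

```kotlin
import kotlinx.coroutines.*

// Hypothetical helper: records the order in which things happen inside a coroutineScope.
suspend fun runChildren(): List<String> {
    val events = mutableListOf<String>()
    coroutineScope {
        launch { delay(200); events += "child B" }
        launch { delay(100); events += "child A" }
        events += "scope body" // runs before either child has finished its delay
    }
    // coroutineScope returns only after both children have completed
    return events
}

// runBlocking bridges regular blocking code into coroutines:
// it blocks the main thread until everything inside has completed.
fun main() = runBlocking {
    println(runChildren()) // [scope body, child A, child B]
}
```

The scope body finishes first, yet runChildren does not return until both launched children are done, which is why all three entries are already in the list when it is printed.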

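In our example above, delay is a suspending function: it pauses the coroutine without blocking the thread it runs on. You can get the same output with Thread.sleep, but keep in mind that it blocks the underlying thread for the whole second: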

    launch {
        Thread.sleep(1000)
        println("Kotlin Coroutines World!")
    }

The launch function, in turn, can be replaced by the thread function from kotlin.concurrent, which starts a real thread instead of a coroutine.

Be careful when making this change because delay, being a suspend function, can only be called from a coroutine or another suspend function. Inside a plain thread block, you have to use Thread.sleep instead.

With those changes, our code example becomes the following:

    import kotlinx.coroutines.*
    import kotlin.concurrent.thread

    suspend fun main() = coroutineScope {
        thread {
            Thread.sleep(1000)
            println("Kotlin Coroutines World!")
        }
        println("Hello")
    }

A great advantage of coroutines is that they can suspend their execution, within the thread in which they’re running, as many times as they need to. While a coroutine is suspended, its thread is free to run other coroutines. This means that we save a lot in terms of resources because we no longer need large numbers of parked threads sitting idle while they wait for operations to complete.
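As a rough illustration of how cheap suspension is, the sketch below starts ten thousand coroutines that all wait at the same time. It assumes kotlinx.coroutines is available; runMany is a hypothetical name, and the count is arbitrary.

```kotlin
import kotlinx.coroutines.*
import java.util.concurrent.atomic.AtomicInteger

// Hypothetical helper: launches `count` coroutines that each suspend for a second,
// then reports how many of them finished.
suspend fun runMany(count: Int): Int = coroutineScope {
    val finished = AtomicInteger()
    val jobs = List(count) {
        launch {
            delay(1000) // suspends; the underlying thread is free to run other coroutines
            finished.incrementAndGet()
        }
    }
    jobs.joinAll() // wait for every launched coroutine
    finished.get()
}

fun main() = runBlocking {
    println("finished: ${runMany(10_000)}") // finished: 10000
}
```

Doing the same with one blocked thread per task would require ten thousand threads, while here all coroutines share the single thread that runBlocking occupies.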

If you’d like to wait for a specific coroutine to complete, though, you can do this as well:

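    import kotlinx.coroutines.*

    suspend fun main() {
        val job = GlobalScope.launch {
            delay(1000L)
            println("Coroutines!")
        }
        println("Hello,")
        job.join() // suspends main until the launched coroutine completes
    }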

The reference returned by launch is a Job: a cancellable background task with a lifecycle that culminates in its completion. The join function suspends the caller until the coroutine completes.

It’s a very useful concept to employ in instances where you’d like to have more control over the synchronous state of some coroutines’ completion. But how does Kotlin achieve that?

CPS, or continuation-passing style, is a type of programming that works by allowing the control flow to be passed explicitly in the form of a continuation — i.e., as an abstract representation of the control state of a computer program flow. It’s very similar to the famous callback function in JavaScript.

To understand it better, let’s take a look at the Continuation interface:

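    interface Continuation<in T> {
        val context: CoroutineContext
        fun resumeWith(result: Result<T>)
    }

    // resume and resumeWithException are extension functions built on resumeWith:
    //   fun <T> Continuation<T>.resume(value: T)
    //   fun <T> Continuation<T>.resumeWithException(exception: Throwable)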

It represents a continuation after a suspension point that returns a value of type T. Among its main members, we have:

- context: the coroutine context associated with that continuation
- resumeXXX: functions that resume the execution of the corresponding coroutine with different results

Great! Now, let’s move on to a more practical example. Imagine that you retrieve information from your database via a suspending function:

    suspend fun slowQueryById(id: Int): Data {
        delay(1000)
        return Data(id = id, ... )
    }

Let’s say that the delay function there emulates the slow query you have to run to get the data results.

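Behind the scenes, the Kotlin compiler rewrites each suspend function in continuation-passing style: it adds a hidden Continuation parameter and compiles the function body into a state machine, with one state per suspension point, rather than generating a separate callback function for every step.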
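The relationship between callbacks and continuations can be sketched with nothing but the standard library’s kotlin.coroutines package. This is an illustration under stated assumptions, not what the compiler literally generates: queryWithCallback, query, and runQuery are hypothetical names, and the callback fires immediately to keep the example synchronous.

```kotlin
import kotlin.coroutines.*

// Hypothetical callback-style API standing in for a slow query.
fun queryWithCallback(id: Int, onDone: (String) -> Unit) {
    onDone("row-$id")
}

// Suspending wrapper: suspendCoroutine exposes the Continuation that is passed along
// behind the scenes, and resume hands the result back to the suspended code.
suspend fun query(id: Int): String = suspendCoroutine { cont ->
    queryWithCallback(id) { row -> cont.resume(row) }
}

// Starts a coroutine without kotlinx.coroutines; the completion Continuation
// plays the role of the final callback.
fun runQuery(id: Int): String {
    var output = ""
    val block: suspend () -> String = { query(id) + " / " + query(id + 1) }
    block.startCoroutine(Continuation(EmptyCoroutineContext) { result ->
        output = result.getOrThrow()
    })
    return output
}

fun main() {
    println(runQuery(1)) // row-1 / row-2
}
```

The block reads like sequential code, but each call to query is a suspension point where the rest of the block is captured as a continuation and resumed by the callback.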

We’ve already learned how to create background jobs and how to wait until they get finished. We also saw that these jobs are cancellable structures, which means that instead of waiting for them to complete, you may want to cancel them if you’re no longer interested in their results.

In this situation, simply call the cancel function:

job.cancel()
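A slightly fuller sketch of cancellation, assuming kotlinx.coroutines is available, might look like the following. The function name countUntilCancelled and the timings are hypothetical.

```kotlin
import kotlinx.coroutines.*

// Hypothetical helper: starts a counter coroutine, cancels it after `cancelAfterMs`,
// and returns how many ticks it managed to record.
suspend fun countUntilCancelled(cancelAfterMs: Long): Int = coroutineScope {
    var ticks = 0
    val job = launch {
        while (true) {
            delay(100) // a cancellation point: throws CancellationException once cancelled
            ticks++
        }
    }
    delay(cancelAfterMs)
    job.cancel() // request cancellation
    job.join()   // wait until the coroutine has actually finished
    ticks
}

fun main() = runBlocking {
    println("ticks before cancel: ${countUntilCancelled(350)}")
}
```

Cancellation is cooperative: the loop stops only because delay checks for cancellation. A loop that never suspends would have to check isActive itself.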

However, there will also be times when you’d like to set a time limit on an operation instead of canceling it manually or waiting indefinitely for it to complete. That’s where timeouts come in handy.

If a given operation takes longer than it should, withTimeout throws an exception so that you can react accordingly:

    runBlocking {
        withTimeout(2000L) {
            repeat(100) {
                delay(500L)
            }
        }
    }

If the operation exceeds the two-second time limit we set, a TimeoutCancellationException (a subclass of CancellationException) is thrown.
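Reacting to the timeout usually means catching that exception. Here is a minimal sketch, assuming kotlinx.coroutines is available; runWithLimit and its return messages are hypothetical.

```kotlin
import kotlinx.coroutines.*

// Hypothetical helper: reports whether the work finished inside the time limit.
suspend fun runWithLimit(limitMs: Long): String =
    try {
        withTimeout(limitMs) {
            repeat(100) { delay(500L) } // about 50 seconds of simulated work
        }
        "completed"
    } catch (e: TimeoutCancellationException) {
        "timed out after $limitMs ms"
    }

fun main() = runBlocking {
    println(runWithLimit(2000L)) // timed out after 2000 ms
}
```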

Another version of this is possible via the withTimeoutOrNull block. Let’s see an example:

    import kotlinx.coroutines.*

    fun main() = runBlocking<Unit> {
        // runBlocking already bridges into coroutines, so main needs no suspend modifier
        withTimeoutOrNull(350) {
            for (i in 1..5) {
                delay(100)
                println("Current number: $i")
            }
        }
    }

Here, only numbers one through three are printed because the timeout is set to 350ms. Each iteration delays for 100ms, so only three iterations of the for loop complete before the timeout fires.

Instead of throwing, withTimeoutOrNull returns null when the timeout is exceeded, which is useful in scenarios where you don’t want exceptions to be thrown.
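Because the result is simply null on timeout, it combines well with the Elvis operator. The sketch below assumes kotlinx.coroutines is available; slowLookup, lookupOrDefault, and the fallback string are hypothetical.

```kotlin
import kotlinx.coroutines.*

// Hypothetical slow lookup used only for illustration.
suspend fun slowLookup(): String {
    delay(300)
    return "fresh value"
}

// Falls back to a default when the lookup does not finish within `limitMs`.
suspend fun lookupOrDefault(limitMs: Long): String =
    withTimeoutOrNull(limitMs) { slowLookup() } ?: "cached value"

fun main() = runBlocking {
    println(lookupOrDefault(100))  // cached value
    println(lookupOrDefault(1000)) // fresh value
}
```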

If you’ve worked with JavaScript before, you may be used to creating async functions and making sure to await them when the results are expected in a synchronous block.

Kotlin allows us to do the same via the async coroutine builder. Let’s say you want to start two different heavy computations and wait for both results in the main coroutine. Below is an example that shows how Kotlin does it with Deferred, its own take on the future concept known from Java’s Future:

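    import kotlinx.coroutines.*

    fun main() = runBlocking<Unit> {
        // async must be called on a scope; Dispatchers.Default replaces the removed CommonPool
        val deferred1 = async(Dispatchers.Default) {
            // hard processing 1
        }
        val deferred2 = async(Dispatchers.Default) {
            // hard processing 2
        }
        deferred1.await()
        deferred2.await()
    }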

The async function creates a new coroutine and returns its future result as an implementation of Deferred. The running coroutine is canceled when the resulting Deferred is canceled.

Deferred, in turn, is a nonblocking cancellable future — i.e., it is a Job that has a result.

When the two hard-processing coroutines start, the coroutine inside runBlocking reaches the await calls, each of which suspends it until the corresponding result becomes available. This way, we gain in performance since the two coroutines run concurrently instead of one after the other.
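To see the gain, the following sketch times two concurrent computations. It assumes kotlinx.coroutines is available; computeA, computeB, and computeSum are hypothetical stand-ins, and delay simulates the heavy work.

```kotlin
import kotlinx.coroutines.*
import kotlin.system.measureTimeMillis

// Hypothetical stand-ins for two expensive computations.
suspend fun computeA(): Int { delay(500); return 20 }
suspend fun computeB(): Int { delay(500); return 22 }

// Starts both computations at once and suspends until both results are ready.
suspend fun computeSum(): Int = coroutineScope {
    val a = async(Dispatchers.Default) { computeA() }
    val b = async(Dispatchers.Default) { computeB() }
    a.await() + b.await()
}

fun main() = runBlocking {
    val elapsed = measureTimeMillis {
        println("sum = ${computeSum()}") // sum = 42
    }
    // Expect roughly 500 ms rather than 1000 ms, since the two delays overlap
    println("took about $elapsed ms")
}
```

Wrapping the two async calls in coroutineScope also means that if one computation fails, the other is cancelled instead of being left running.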

Kotlin also gives us a great way to deal with async data streams. Sometimes you’ll need your streams to emit values, convert them through some external asynchronous functions, collect the results, and complete the stream successfully or with exceptions.

If that’s the case, we can make use of the Flow<T> type. Let’s take the following example that iterates over a numerical sequence and prints each of its values:

    import kotlinx.coroutines.*
    import kotlinx.coroutines.flow.*

    fun main() = runBlocking<Unit> {
        (1..3).asFlow().collect { value -> println("Current number: $value") }
    }

If you’re used to using the Java Streams API, or similar versions from other languages, this code may be very familiar to you.

Kotlin also offers intermediate operators such as map and filter, and the lambdas you pass to them can call long-running suspending functions:

    import kotlinx.coroutines.*
    import kotlinx.coroutines.flow.*

    fun main() = runBlocking<Unit> {
        (1..5).asFlow()
            .filter { number -> number % 2 == 0 } // only even numbers
            .map { number -> convertToStr(number) } // converts to string
            .collect { value -> println(value) }
    }

    suspend fun convertToStr(request: Int): String {
        delay(1000)
        return "Current number: $request"
    }
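Streams that complete with an exception can be handled inside the pipeline as well. The sketch below assumes kotlinx.coroutines is available and uses the flow builder together with the catch operator; numbers, collectSafely, and the error text are hypothetical.

```kotlin
import kotlinx.coroutines.*
import kotlinx.coroutines.flow.*

// Hypothetical flow that fails after emitting two values.
fun numbers(): Flow<Int> = flow {
    emit(1)
    emit(2)
    throw IllegalStateException("source failed")
}

// Collects the flow into a list, turning an upstream failure into a final marker value.
suspend fun collectSafely(): List<String> =
    numbers()
        .map { "Current number: $it" }
        .catch { e -> emit("Error: ${e.message}") } // handles exceptions from upstream operators
        .toList()

fun main() = runBlocking {
    collectSafely().forEach(::println)
    // Current number: 1
    // Current number: 2
    // Error: source failed
}
```

Note that catch only sees failures from the operators above it, so an exception thrown in the collector itself would still propagate to the caller.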

It’s great to see Kotlin taking a step further toward creating a more asynchronous and nonblocking world. Although Kotlin coroutines are relatively new, they already capitalize on the great potential other languages have been extracting from this paradigm for a long time.

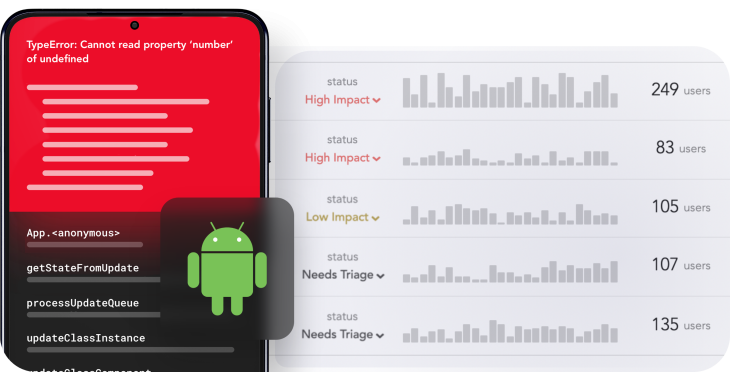

LogRocket is an Android monitoring solution that helps you reproduce issues instantly, prioritize bugs, and understand performance in your Android apps.

LogRocket's Galileo AI watches sessions for you, instantly identifying and explaining user struggles with automated monitoring of your entire product experience.

LogRocket also helps you increase conversion rates and product usage by showing you exactly how users are interacting with your app. LogRocket's product analytics features surface the reasons why users don't complete a particular flow or don't adopt a new feature.

Start proactively monitoring your Android apps — try LogRocket for free.

Solve coordination problems in Islands architecture using event-driven patterns instead of localStorage polling.

Signal Forms in Angular 21 replace FormGroup pain and ControlValueAccessor complexity with a cleaner, reactive model built on signals.

Discover what’s new in The Replay, LogRocket’s newsletter for dev and engineering leaders, in the February 25th issue.

Explore how the Universal Commerce Protocol (UCP) allows AI agents to connect with merchants, handle checkout sessions, and securely process payments in real-world e-commerce flows.

Hey there, want to help make our blog better?

Join LogRocket’s Content Advisory Board. You’ll help inform the type of content we create and get access to exclusive meetups, social accreditation, and swag.

Sign up now