If you have a Node.js GraphQL endpoint with various resolvers on your project’s backend, and it’s deployed to production, you’ll need to secure it with rate limiting and depth limiting.

Rate limiting lets you throttle a client that exceeds a set number of requests per unit of time. Depth limiting lets you limit the complexity of a GraphQL query by its depth. Together, these measures help protect your app from API spam and malicious queries. In this article, we’ll cover why and how to rate limit and depth limit your GraphQL APIs.


Rate limiting means limiting the number of API calls an app or user can make in a given amount of time. If this limit is exceeded, the user or client may be throttled, i.e., the client may be prohibited from making more similar API calls within the same time period.

Your backend server can only process so many requests within a given time frame. Malicious users often bombard API endpoints with spam, which slows down the server and may even crash it.

To protect your API endpoints and server from getting overwhelmed, you must rate limit your API endpoints, be it a REST API or a GraphQL endpoint.

There are several ways to rate limit an API:

By IP address: you throttle specific IP addresses and restrict their access to your services if they exceed the allowed number of API requests within a time frame.

By user: you throttle specific users of your app (identified by their unique ID in your database) and restrict their access to your services if they exceed the allowed number of API requests within a time frame.

By user and IP address: you throttle a user only when they exceed your rate limit from the same IP address, so the identifier combines both.

You can also decide how rate limits apply across your resolvers.

Uniform rate limiting: all GraphQL resolvers (queries, mutations, and subscriptions) on a server share the same rate limit, such as 10 requests per minute per user. For example, signInMutation, getUserQuery, and every other resolver on the server follow the same rate-limiting rule.

Per-resolver rate limiting: each GraphQL resolver gets its own rule. For instance, resolvers that consume a lot of memory and processing power get stricter rate limits than cheap, fast resolvers.

A rate limit is stricter when it allows fewer requests per time frame.
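Here is a sketch that makes the difference between uniform and per-resolver limits concrete. It uses a rule table with a server-wide default and stricter overrides for expensive resolvers. signInMutation and getUserQuery come from the example above. searchBooksQuery and all of the numbers are hypothetical. requestsPerSecond shows why a lower max per window means a stricter limit.

```javascript
// Hypothetical rate-limit rules: a server-wide default plus per-resolver overrides.
// windowMs is the length of the time window in milliseconds.
const DEFAULT_RULE = { max: 10, windowMs: 60_000 }; // 10 requests per minute

const RESOLVER_RULES = {
  // an expensive (hypothetical) resolver gets a stricter rule than the default
  searchBooksQuery: { max: 2, windowMs: 60_000 },
  // sign-in attempts are limited tightly to slow down password guessing
  signInMutation: { max: 5, windowMs: 15 * 60_000 },
};

// Uniform limiting ignores the resolver name; per-resolver limiting looks it up.
function ruleFor(resolverName, { perResolver = true } = {}) {
  if (!perResolver) return DEFAULT_RULE;
  return RESOLVER_RULES[resolverName] ?? DEFAULT_RULE;
}

// Fewer allowed requests per unit of time means a stricter limit.
function requestsPerSecond({ max, windowMs }) {
  return max / (windowMs / 1000);
}

function isStricter(a, b) {
  return requestsPerSecond(a) < requestsPerSecond(b);
}

console.log(isStricter(ruleFor("searchBooksQuery"), DEFAULT_RULE)); // true
```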

To rate limit your API or GraphQL endpoints, you need to track time along with user IDs, IP addresses, or other unique identifiers. You also need to store data about each identifier’s recent requests so you can calculate whether it has exceeded the rate limit.

An “identifier” can be any unique string that helps identify a client, such as a user ID in your database, an IP address, a string combination of both, or even device information.
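The bookkeeping described above can be sketched as a fixed-window counter. For each identifier, you remember when its current window started and how many requests it has made since then.

This is an in-memory illustration only, not how graphql-rate-limit stores its data. The Map stands in for an external store. In Redis, the same idea is commonly expressed with the INCR and PEXPIRE commands on a per-identifier key. The identifier string "ip:203.0.113.7" is made up.

```javascript
// A minimal fixed-window rate limiter kept in memory (illustration only).
// Each identifier gets a counter that resets when its window expires.
class FixedWindowLimiter {
  constructor({ max, windowMs, now = () => Date.now() }) {
    this.max = max; // requests allowed per window (at least 1)
    this.windowMs = windowMs; // window length in milliseconds
    this.now = now; // injectable clock, handy for testing
    this.windows = new Map(); // identifier -> { start, count }
  }

  // Records a request and returns true if it is allowed, false if throttled.
  hit(identifier) {
    const t = this.now();
    const entry = this.windows.get(identifier);

    // Start a fresh window for a new identifier or once its window has expired.
    if (!entry || t - entry.start >= this.windowMs) {
      this.windows.set(identifier, { start: t, count: 1 });
      return true;
    }

    entry.count += 1;
    return entry.count <= this.max;
  }
}

// Usage: two requests per second for one identifier.
let clock = 0;
const limiter = new FixedWindowLimiter({ max: 2, windowMs: 1000, now: () => clock });
console.log(limiter.hit("ip:203.0.113.7")); // true
console.log(limiter.hit("ip:203.0.113.7")); // true
console.log(limiter.hit("ip:203.0.113.7")); // false (third request in the same window)
clock = 1000;
console.log(limiter.hit("ip:203.0.113.7")); // true (a new window has started)
```

Fixed windows are cheap, needing only one counter per identifier. The trade-off is that they allow short bursts at window boundaries: a client can send max requests at the end of one window and max more at the start of the next.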

So where do you store all of this data?

Redis is well suited to this use case. It’s an in-memory key-value store where you can save small pieces of data as key-value pairs, and it’s very fast.

Let’s install Redis now. Later, you’ll be able to plug it into your Node.js GraphQL server setup to store rate-limiting related information.

If you’re following the official docs to install Redis, run these commands on the command line 👇

```shell
wget http://download.redis.io/redis-stable.tar.gz
tar xvzf redis-stable.tar.gz
cd redis-stable
make
sudo cp src/redis-server /usr/local/bin/ # copying build to proper places
sudo cp src/redis-cli /usr/local/bin/ # copying build to proper places
```

Personally, I think there are easier ways to install:

On a Mac:

```shell
brew install redis # to install redis
redis-server "$(brew --prefix)/etc/redis.conf" # to run redis
```

On Linux:

```shell
sudo apt-get install redis-server # to install redis
sudo systemctl start redis-server # to run redis (the package installs it as a service)
```

After the Redis server starts, set a password. On localhost, it’s fine to run Redis without one, but not in production, where you don’t want your Redis server open to the Internet.

Now let’s set up a Redis password. Run redis-cli to start the Redis command line. This only works if Redis is installed and running.

Then, enter this command in the CLI:

```shell
config set requirepass somerandompassword
```

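Now exit the command line. Your Redis server is now password-protected. Note that CONFIG SET only changes the running server. To keep the password after a Redis restart, add requirepass somerandompassword to your redis.conf file, or run CONFIG REWRITE in redis-cli before exiting.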

Next, we’ll use the graphql-rate-limit npm module along with ioredis, a Redis client for Node.js:

```shell
npm i graphql-rate-limit ioredis
```

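In this tutorial, I’m using a graphql-yoga (1.x) server for the backend. You can also use Apollo Server.

graphql-rate-limit works with any Node.js GraphQL setup. Here, we use it to create a @rateLimit directive for the GraphQL schema. Note that the example registers the directive through the schemaDirectives option, which newer stacks such as Apollo Server 3 no longer support. There, you’ll need to implement the directive as a schema transform instead.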

This is what a basic GraphQL server looks like:

```js
import { GraphQLServer } from 'graphql-yoga'

const typeDefs = `
  type Query {
    hello(name: String!): String!
  }
`

const resolvers = {
  Query: {
    hello: (_, { name }) => `Hello ${name}`
  }
}

const server = new GraphQLServer({ typeDefs, resolvers })

server.start(() => console.log('Server is running on localhost:4000'))
```

Now, let’s rate limit the hello query (resolver):

```js
import { GraphQLServer } from 'graphql-yoga'
import Redis from 'ioredis'
import { createRateLimitDirective, RedisStore } from 'graphql-rate-limit'

export const redisOptions = {
  host: process.env.REDIS_HOST || '127.0.0.1',
  port: parseInt(process.env.REDIS_PORT, 10) || 6379,
  password: process.env.REDIS_PASSWORD || 'somerandompassword',
  retryStrategy: times => {
    // reconnect after an increasing delay, capped at 2 seconds
    return Math.min(times * 50, 2000)
  }
}

const redisClient = new Redis(redisOptions)

const rateLimitOptions = {
  // use the client's IP address (Express's request.ip) as the identifier,
  // falling back to ctx.id when no IP address is available
  identifyContext: (ctx) => ctx?.request?.ip || ctx?.id,
  formatError: ({ fieldName }) =>
    `Woah there, you are doing way too much ${fieldName}`,
  store: new RedisStore(redisClient)
}

const rateLimitDirective = createRateLimitDirective(rateLimitOptions)

const resolvers = {
  Query: {
    hello: (_, { name }) => `Hello ${name}`
  }
}

// Schema
const typeDefs = `
  directive @rateLimit(
    max: Int
    window: String
    message: String
    identityArgs: [String]
    arrayLengthField: String
  ) on FIELD_DEFINITION

  type Query {
    hello(name: String!): String! @rateLimit(window: "1s", max: 2)
  }
`

const server = new GraphQLServer({
  typeDefs,
  resolvers,
  // put the incoming Express request on the context so identifyContext can read it
  context: ({ request }) => ({ request }),
  // this enables you to use @rateLimit directive in GraphQL schema.
  schemaDirectives: {
    rateLimit: rateLimitDirective
  }
})

server.start(() => console.log('Server is running on localhost:4000'))
```

There we go, the hello resolver is now rate limited.

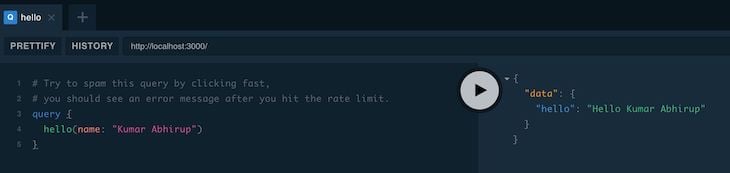

Open GraphQL Playground at http://localhost:4000 and try running the query below.

```graphql
# Try to spam this query by clicking fast;
# you should see an error message after you hit the rate limit.
query {
  hello(name: "Kumar Abhirup")
}
```

You should see the set error message after you hit the rate limit: Woah there, you are doing way too much hello.

Now let’s break down the code in depth.

```js
const rateLimitOptions = {
  identifyContext: (ctx) => ctx?.request?.ip || ctx?.id,
  formatError: ({ fieldName }) =>
    `Woah there, you are doing way too much ${fieldName}`,
  store: new RedisStore(redisClient)
}

const rateLimitDirective = createRateLimitDirective(rateLimitOptions)
```

identifyContext is a function that returns a unique string identifying the client: a device, a user in your database, or a combination of both. This is where you decide whether to rate limit by IP address, by user ID, or by some other method.

In the snippet above, we use the client’s IP address as the unique identifier and fall back to ctx.id when no IP address is available.
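Because identifyContext just maps the context to a string, switching between the strategies described earlier (IP address, user ID, or both) only changes this function. This sketch makes two assumptions. First, your context carries the Express request as ctx.request. Second, for signed-in users, a hypothetical ctx.user object is set by your own authentication code. The "ip:" and "user:" prefixes are just a convention to keep the two kinds of keys from colliding.

```javascript
// Identifier strategies for a rate limiter's identifyContext option.
// Assumes ctx.request is an Express request and ctx.user is set by your own
// (hypothetical) authentication code for signed-in users.

// Throttle by IP address. Clients without a known IP share one "unknown" bucket.
const byIp = (ctx) => `ip:${ctx?.request?.ip ?? "unknown"}`;

// Throttle by user ID, falling back to the IP address for anonymous clients.
const byUser = (ctx) =>
  ctx?.user?.id != null ? `user:${ctx.user.id}` : byIp(ctx);

// Throttle by the combination of user ID and IP address.
const byUserAndIp = (ctx) =>
  `user:${ctx?.user?.id ?? "anonymous"}|${byIp(ctx)}`;

console.log(byUserAndIp({ user: { id: 42 }, request: { ip: "203.0.113.7" } })); // user:42|ip:203.0.113.7
```

Any of these could then be passed as identifyContext in rateLimitOptions.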

formatError is a method that allows you to format the error message a user sees after they hit the rate limit.

store connects the rate limiter to your Redis instance, so the rate-limiting data is saved on your Redis server. There, it survives restarts of your GraphQL server and can be shared by several server instances. graphql-rate-limit can also keep this data in memory (its InMemoryStore), but then it lives only inside a single server process. You don’t have to use Redis as a store: other databases such as MongoDB or PostgreSQL could hold the data too, but they’re overkill for a simple rate-limiting solution.

createRateLimitDirective takes the rateLimitOptions and returns a directive implementation. Once you register it with your GraphQL server (here, through schemaDirectives), you can use the @rateLimit directive in your schema.

Here’s the schema.

```js
const typeDefs = `
  directive @rateLimit(
    max: Int
    window: String
    message: String
    identityArgs: [String]
    arrayLengthField: String
  ) on FIELD_DEFINITION

  type Query {
    hello(name: String!): String! @rateLimit(window: "1s", max: 2)
  }
`
```

The directive @rateLimit(...) declaration defines the @rateLimit directive in the schema, along with the arguments you can use to configure rate-limiting rules for each resolver.

Once the directive is set up, you can append @rateLimit(window: "1s", max: 2) to any field in your schema. window: "1s", max: 2 means that the resolver can run at most twice per second for each identifier returned by identifyContext, such as a user or an IP address. If the same query runs a third time within that second, the rate limit error appears.
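To see what window: "1s", max: 2 means in practice, here is a sketch that parses a window string and applies a sliding-window log. Each identifier keeps the timestamps of its recent calls, and a call is rejected when max calls already fall inside the window. The parseWindow helper and its supported units are assumptions for illustration. This is not graphql-rate-limit’s internal implementation.

```javascript
// Converts window strings such as "500ms", "1s", "5m", "1h", or "1d" to milliseconds.
// Hypothetical helper for illustration.
function parseWindow(window) {
  const match = /^(\d+)(ms|s|m|h|d)$/.exec(window);
  if (!match) throw new Error(`Unsupported window: ${window}`);
  const units = { ms: 1, s: 1000, m: 60_000, h: 3_600_000, d: 86_400_000 };
  return Number(match[1]) * units[match[2]];
}

// Sliding-window log: allow a call only if fewer than `max` calls by the same
// identifier were accepted within the last `window`.
function createSlidingWindowLimiter({ window, max }) {
  const windowMs = parseWindow(window);
  const log = new Map(); // identifier -> timestamps of accepted calls

  return function allow(identifier, now = Date.now()) {
    // Keep only the timestamps that are still inside the window.
    const recent = (log.get(identifier) ?? []).filter((t) => now - t < windowMs);
    if (recent.length >= max) {
      log.set(identifier, recent);
      return false; // this is where a rate limit error would be returned
    }
    recent.push(now);
    log.set(identifier, recent);
    return true;
  };
}

const allow = createSlidingWindowLimiter({ window: "1s", max: 2 });
console.log(allow("ip:203.0.113.7", 0)); // true
console.log(allow("ip:203.0.113.7", 200)); // true
console.log(allow("ip:203.0.113.7", 400)); // false: a third call within one second
console.log(allow("ip:203.0.113.7", 1000)); // true: the call at 0 ms has left the window
```

A sliding log avoids bursts at window boundaries. The cost is storing one timestamp per accepted call instead of a single counter.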

Hopefully, you now know how GraphQL resolvers can be rate-limited, which helps prevent query spam from overwhelming your servers.

Now that we’ve covered GraphQL rate limiting, let’s look at how to make your GraphQL endpoint safer with depth limiting.

Depth limiting refers to limiting the complexity of GraphQL queries by their depth. GraphQL servers often have dataloaders to load and populate data using relational database queries.

Look at the below query:

```graphql
query book {
  getBook(id: 1) {
    title
    author
    publisher
    reviews {
      title
      body
      book {
        title
        author
        publisher
        reviews {
          title
        }
      }
    }
  }
}
```

It fetches the reviews for the queried book. Every book has reviews, and every review is connected to a book, which makes this a one-to-many relationship that the dataloader resolves.

Now, look at this GraphQL query.

```graphql
query badMaliciousQuery {
  getBook(id: 1) {
    reviews {
      book {
        reviews {
          book {
            reviews {
              book {
                reviews {
                  book {
                    reviews {
                      book {
                        reviews {
                          book {
                            # and so on...
                          }
                        }
                      }
                    }
                  }
                }
              }
            }
          }
        }
      }
    }
  }
}
```

This query is many levels deep. A book fetches its reviews and each review fetches its book, so the query forms a cycle that a client can nest as deeply as it likes. The work grows with every level.

Such a query string can overwhelm your GraphQL server and may crash it. Imagine sending a query 10,000 levels deep — it would be disastrous!
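Here’s a back-of-the-envelope sketch that puts numbers on that growth. It assumes every book has fanOut reviews, every review has exactly one book, and each reviews { book { ... } } pair of levels is resolved in full. A dataloader can batch and cache the database calls, but the response still contains every nested object. The function counts the objects in the response for a query with reviewLevels such pairs under getBook.

```javascript
// Estimates how many objects a cyclic book -> reviews -> book query returns.
// Assumptions: every book has `fanOut` reviews and every review has one book.
function objectsResolved(fanOut, reviewLevels) {
  let total = 1; // the root book returned by getBook
  let perLevel = 1; // objects at the current nesting level
  for (let level = 1; level <= reviewLevels; level++) {
    perLevel *= fanOut; // each book at the previous level expands into fanOut reviews
    total += perLevel; // the reviews at this level
    total += perLevel; // one book per review, nested inside it
  }
  return total;
}

// The badMaliciousQuery above has 6 reviews/book pairs; with 10 reviews per book:
console.log(objectsResolved(10, 6)); // 2222221
```

Each extra reviews/book pair multiplies the total by roughly fanOut, which is why depth is such an effective attack lever.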

This is where depth limiting comes in. It enables the GraphQL server to detect such queries and reject them before they are executed.
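The core idea behind depth limiting can be sketched without any library. You walk the query, track how deeply selection sets are nested, and reject the query before execution if it exceeds a maximum. This naive version counts curly braces in the raw query text and treats root fields as depth 0. It skips comments, string literals, and argument lists. It does not follow fragments and is not a real GraphQL parser. That is why a library that works on the parsed query, such as graphql-depth-limit, is the safer choice in production. queryDepth and assertDepthWithin are hypothetical names.

```javascript
// Naive depth check over raw GraphQL query text (illustration only).
// Root fields are at depth 0; each nested selection set adds one level.
// Limitations: fragments are not followed, and block strings containing quotes are not handled.
function queryDepth(query) {
  let braces = 0; // current nesting of selection sets
  let parens = 0; // > 0 while inside an argument list
  let maxBraces = 0;
  for (let i = 0; i < query.length; i++) {
    const ch = query[i];
    if (ch === "#") {
      // Skip a comment up to the end of the line.
      while (i < query.length && query[i] !== "\n") i++;
    } else if (ch === '"') {
      // Skip a string literal, honoring backslash escapes.
      i++;
      while (i < query.length && query[i] !== '"') {
        if (query[i] === "\\") i++;
        i++;
      }
    } else if (ch === "(") {
      parens++;
    } else if (ch === ")") {
      parens--;
    } else if (parens === 0 && ch === "{") {
      braces++;
      maxBraces = Math.max(maxBraces, braces);
    } else if (parens === 0 && ch === "}") {
      braces--;
    }
  }
  // The outermost braces belong to the operation itself.
  return Math.max(maxBraces - 1, 0);
}

// Rejects a query before execution if it is nested deeper than maxDepth.
function assertDepthWithin(query, maxDepth) {
  const depth = queryDepth(query);
  if (depth > maxDepth) {
    throw new Error(`Query depth ${depth} exceeds the maximum of ${maxDepth}`);
  }
  return depth;
}

console.log(queryDepth("{ getBook(id: 1) { reviews { book { title } } } }")); // 3
```

By this count, the badMaliciousQuery above has a depth of 13, so a limit of 7 would reject it before any resolver runs.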

Another way to mitigate this issue is a request timeout, which stops a resolver from running a query that takes too long to resolve and returns a “Request Timed Out” error.
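A timeout can be sketched by racing a resolver against a timer. The withTimeout wrapper below is a hypothetical helper, not part of graphql-yoga or graphql-depth-limit. Note that it only stops waiting. The underlying work keeps running unless you also cancel it, for example with an AbortSignal that your data layer honors.

```javascript
// Wraps a resolver so that it rejects with "Request Timed Out" if it does not
// settle within `ms` milliseconds. Hypothetical helper for illustration.
function withTimeout(resolver, ms) {
  return (...args) => {
    let timer;
    const timeout = new Promise((_, reject) => {
      timer = setTimeout(() => reject(new Error("Request Timed Out")), ms);
    });
    // Run the resolver asynchronously so synchronous throws also become rejections.
    const result = Promise.resolve().then(() => resolver(...args));
    // Whichever settles first wins; clear the timer either way.
    return Promise.race([result, timeout]).finally(() => clearTimeout(timer));
  };
}
```

You would wrap an expensive resolver in your resolver map, for example getBook: withTimeout(getBookResolver, 2000). Here, getBookResolver stands for your existing resolver function.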

Depth limiting itself is straightforward to set up. We can use the graphql-depth-limit package (npm i graphql-depth-limit) to depth limit GraphQL queries:

```js
import { GraphQLServer } from 'graphql-yoga'
import depthLimit from 'graphql-depth-limit'

const typeDefs = `
  type Query {
    hello(name: String!): String!
  }
`

const resolvers = {
  Query: {
    hello: (_, { name }) => `Hello ${name}`
  }
}

const server = new GraphQLServer({ typeDefs, resolvers })

// In graphql-yoga 1.x, validationRules is an option of server.start(),
// not of the GraphQLServer constructor.
// Here, we set a depth limit of 7 on all incoming GraphQL queries.
server.start(
  { validationRules: [depthLimit(7)] },
  () => console.log('Server is running on localhost:4000')
)
```

We’re all set! graphql-depth-limit also accepts an options object, for example to ignore certain fields when calculating depth, so you can tune the limit to your schema.

Rate limiting and depth limiting your GraphQL endpoints is essential, especially when you deploy the server for a live app. Rate limiting keeps your GraphQL server from being overwhelmed by floods of API requests. Depth limiting protects it against malicious queries that would otherwise push your resolvers into deeply nested, cyclic fetches.

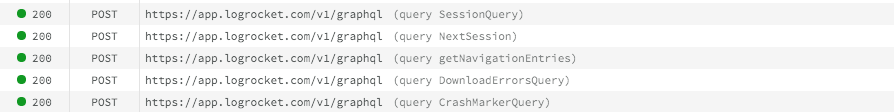

While GraphQL has some features for debugging requests and responses, making sure GraphQL reliably serves resources to your production app is where things get tougher. If you’re interested in ensuring network requests to the backend or third party services are successful, try LogRocket.

LogRocket lets you replay user sessions, eliminating guesswork around why bugs happen by showing exactly what users experienced. It captures console logs, errors, network requests, and pixel-perfect DOM recordings — compatible with all frameworks.

LogRocket's Galileo AI watches sessions for you, instantly aggregating and reporting on problematic GraphQL requests to quickly understand the root cause. In addition, you can track Apollo client state and inspect GraphQL queries' key-value pairs.

Solve coordination problems in Islands architecture using event-driven patterns instead of localStorage polling.

Signal Forms in Angular 21 replace FormGroup pain and ControlValueAccessor complexity with a cleaner, reactive model built on signals.

Discover what’s new in The Replay, LogRocket’s newsletter for dev and engineering leaders, in the February 25th issue.

Explore how the Universal Commerce Protocol (UCP) allows AI agents to connect with merchants, handle checkout sessions, and securely process payments in real-world e-commerce flows.

Would you be interested in joining LogRocket's developer community?

Join LogRocket’s Content Advisory Board. You’ll help inform the type of content we create and get access to exclusive meetups, social accreditation, and swag.

Sign up now

3 Replies to "Securing GraphQL API endpoints using rate limits and depth limits"

It’s not working with Apollo 3 and the latest graphql-tools!

Hi Walter! Kumar here.

Thank you so much for the comment, really appreciate the feedback!

May I get a little more context here? What exactly isn’t working with Apollo 3, is it the graphql-rate-limit library, or the graphql-depth-limit library?

I remember using these libraries on production for itsbeam[dot]com with Apollo 3, so if possible, I would love to take a look at your repo & perhaps help! (my email: hey[at]kumareth.com)

If we find an issue together, I’ll update this article. Thanks a lot!

Hello,

…

```js
const server = new GraphQLServer({
  typeDefs,
  resolvers,
  // this enables you to use @rateLimit directive in GraphQL schema.
  schemaDirectives: {
    rateLimit: rateLimitDirective
  }
})
```

The schemaDirectives parameter isn’t supported any longer.

I used the info here:

https://www.apollographql.com/docs/apollo-server/schema/creating-directives/

and wrote a new directive myself.