The immense frontend knowledge that AI possesses can be both intimidating and reassuring. However, AI should never be treated as a subject matter expert. It’s wise not to use AI output that you can’t test or verify.

Code generated by AI may mismanage dependencies or fail to follow security best practices. Because so many developers have adopted AI tools into their workflows, it’s important to set up technical measures and processes that audit AI-generated code.

In this article, you will learn about security vulnerabilities and design flaws that can be introduced by code components developed by AI tools. More importantly, you will learn how to set up a technical auditing process that validates code generated by AI tools.

A significant risk of using AI-generated code is the potential introduction of security vulnerabilities and architectural flaws. This section will discuss issues developers should look out for when using AI-generated code.

Security organizations such as the NSA publish secure coding guidelines. AI code generators, however, focus on writing code that accomplishes a given task and may not ensure that the generated code is secure. For example, an AI tool might hardcode secrets such as API keys or passwords directly in the source code.
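
As a minimal sketch of the alternative, the snippet below reads a secret from an environment variable instead of embedding it in source code, and fails fast if the variable is missing. The helper names requireEnv and getPaymentApiKey and the variable name PAYMENT_API_KEY are hypothetical. This pattern applies to server-side Node.js code; anything injected into a frontend bundle at build time is visible to users, so real secrets belong on the server.

```javascript
// Hypothetical helper: read a required secret from the environment
// instead of hardcoding it in the repository.
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (value === undefined || value === "") {
    // Fail fast at startup rather than making requests with a missing key
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// What AI tools sometimes generate (avoid):
// const apiKey = "hardcoded-secret-value";

// Safer: the key is supplied by the deployment environment.
// PAYMENT_API_KEY is a hypothetical variable name.
function getPaymentApiKey(env = process.env) {
  return requireEnv("PAYMENT_API_KEY", env);
}
```

Passing env as a parameter, with process.env as the default, keeps the helper easy to test without touching the real environment.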

However, the most relevant security issue that AI can fall into is using outdated concepts or technologies. AI is susceptible to this because a model’s training data is fixed at a point in time and isn’t continuously updated. If a library is deprecated, for example after a security flaw or data leak is disclosed, the AI may keep recommending it until the model is retrained on newer data.

Using AI-generated code without scanning it for outdated libraries and concepts puts your application at risk of exploitation. Below is an example of outdated code that uses SHA-1, a hashing algorithm that is now considered insecure and has been deprecated. Fast, unsalted hashes like this one are a poor way to store passwords, because attackers can test enormous numbers of guesses per second against them:

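```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class LegacyPasswordHasher
{
    // INSECURE example of outdated AI output: SHA-1 is fast and unsalted,
    // so identical passwords share a hash and brute-forcing is cheap.
    public static string HashPassword(string password)
    {
        using SHA1 sha1 = SHA1.Create();
        byte[] inputBytes = Encoding.ASCII.GetBytes(password); // non-ASCII chars become '?'
        byte[] hashBytes = sha1.ComputeHash(inputBytes);
        return Convert.ToBase64String(hashBytes);
    }
}
```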
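
The snippet above is C#. Because the other examples in this article are JavaScript, here is a minimal Node.js sketch of a safer pattern: the built-in scrypt key-derivation function with a random salt for each password. It relies on Node’s default scrypt cost settings, which you should review against current guidance such as the OWASP Password Storage Cheat Sheet. Dedicated Argon2id or bcrypt libraries are common alternatives. The function names and the salt:hash storage format are illustrative choices.

```javascript
const crypto = require("node:crypto");

// Illustrative sizes: 16-byte random salt, 64-byte derived key
const SALT_BYTES = 16;
const KEY_BYTES = 64;

function hashPassword(password) {
  // A fresh random salt per password defeats precomputed (rainbow table) attacks
  const salt = crypto.randomBytes(SALT_BYTES);
  // scrypt is deliberately slow and memory-hard, unlike SHA-1
  const hash = crypto.scryptSync(password, salt, KEY_BYTES);
  // Store the salt alongside the hash so the password can be verified later
  return `${salt.toString("base64")}:${hash.toString("base64")}`;
}

function verifyPassword(password, stored) {
  const [saltB64, hashB64] = stored.split(":");
  if (!saltB64 || !hashB64) return false; // malformed stored value
  const salt = Buffer.from(saltB64, "base64");
  const expected = Buffer.from(hashB64, "base64");
  const actual = crypto.scryptSync(password, salt, expected.length);
  // Constant-time comparison avoids leaking information through timing
  return crypto.timingSafeEqual(actual, expected);
}
```

Because each call generates a new salt, hashing the same password twice yields different stored strings. Verification works because the salt is read back from the stored value.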

AI isn’t as flexible as human developers. The quality of the code it generates is bounded by the model’s training data. When a human developer finds that the available information is poor or outdated, they can consult other sources, experiment, and gain insights that the AI doesn’t have.

Additionally, AI sometimes outputs excellent code that turns out to be reproduced from a public GitHub repository. Using licensed code in a commercial product, or reproducing licensed products, without honoring the license terms can expose you to legal action.

Many factors can lead developers to trust AI output blindly, despite its potential flaws.

Below are validation practices that you should understand and apply when using AI-generated code.

One of the best ways to anticipate when an AI code generator might hallucinate or produce biased content is to check its knowledge cutoff. The knowledge cutoff is the date up to which the model’s training data extends, so discoveries or events after that date are unknown to the model. For example, at the time of writing, the knowledge cutoff for GPT-4 Turbo is December 2023.

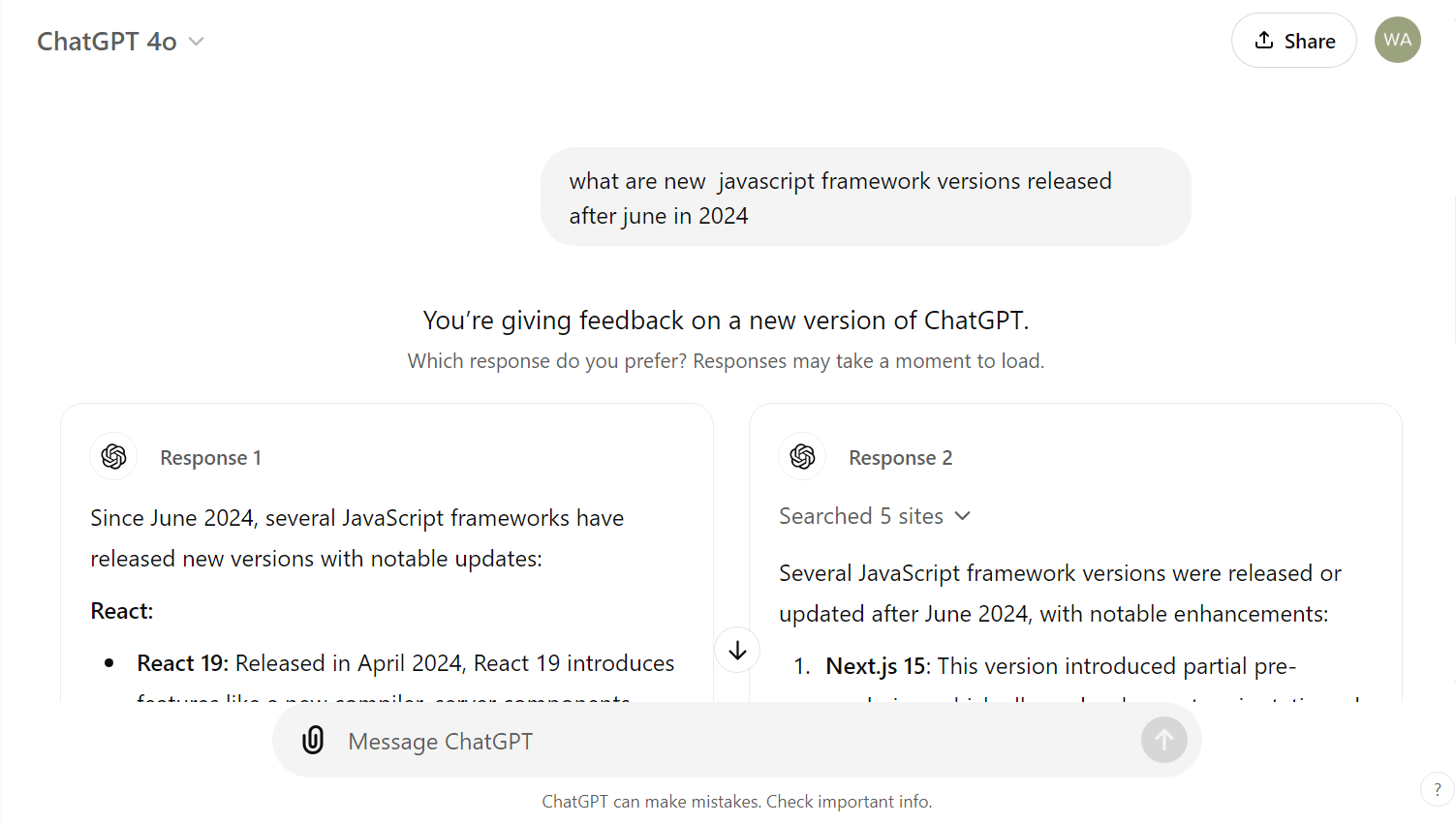

Although these AI tools can obtain new information by searching the web, this does not mean that they are in the best position to code or implement a new technological concept. The image below shows ChatGPT searching the web for information it doesn’t have after its knowledge cutoff date:

So, why does the knowledge cutoff matter to a frontend developer? It matters whenever you ask an AI coding tool to generate code or UI components that use the latest libraries or a new version of a JavaScript framework.

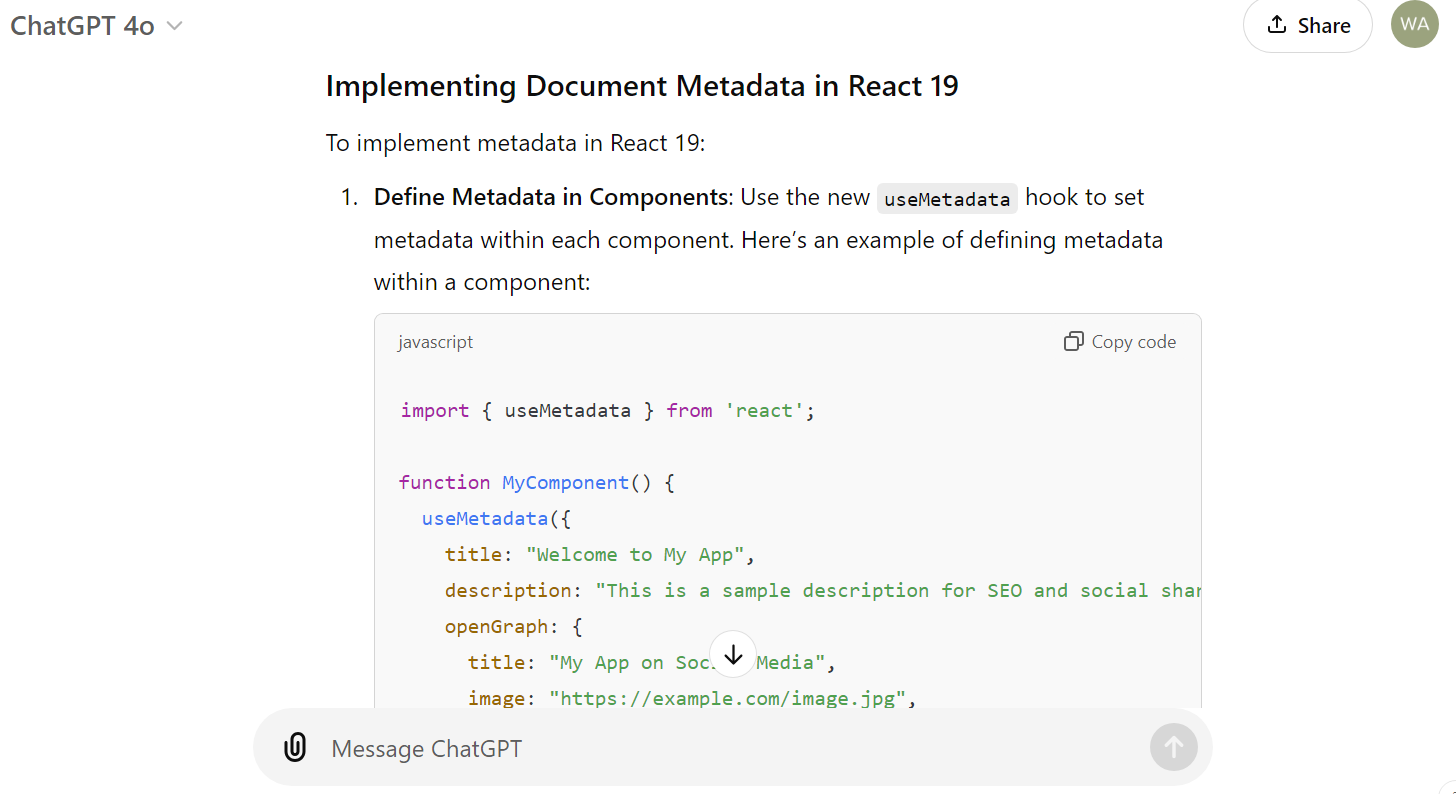

For example, I asked ChatGPT’s GPT-4o model to implement the new document metadata support in React 19, which was announced in April 2024. Because GPT-4o’s knowledge cutoff predates that announcement, the model hallucinated: it imported a useMetadata Hook, which does not exist in React 19.

Earlier, I mentioned that these AI coding tools can acquire knowledge beyond their cutoff by searching the web. In this case, it turns out that ChatGPT picked up the useMetadata Hook, which doesn’t exist in React, from the Thirdweb website. React 19 instead supports document metadata by letting you render title, meta, and link tags directly inside components, which React hoists into the document head.
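
One lightweight safeguard against hallucinated imports like this is to confirm that each suggested name is actually exported by the package installed in your project. The sketch below uses a hypothetical helper, findMissingExports. It demonstrates the check against Node’s built-in path module so it runs without any dependencies.

```javascript
// Hypothetical helper: return the names an AI-suggested import expects
// that the installed module does not actually export.
function findMissingExports(moduleExports, names) {
  return names.filter((name) => !(name in Object(moduleExports)));
}

// Demo with a Node built-in module so the snippet runs anywhere:
// "join" exists on node:path, "useMetadata" does not.
const path = require("node:path");
const missing = findMissingExports(path, ["join", "useMetadata"]);
console.log(missing); // [ 'useMetadata' ]
```

In a project with React 19 installed, running findMissingExports(require("react"), ["useMetadata"]) should flag the hallucinated Hook in the same way. A type checker such as TypeScript also reports imports of names that a package’s type definitions don’t declare.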

One possible reason why AI models don’t add sufficient comments or documentation is that they aren’t writing code with the expectation that multiple developers will review it. They generally produce documentation for a user who already understands the codebase. The AI can’t tell when a generated component is complex enough to need explanatory comments, or when the code is simple enough that comments would only add noise.

AI can learn to write better comments for your projects, but it has to be trained to do so, and not every developer has the expertise or the time to train these models.

To check that AI-generated documentation follows JSDoc conventions, use ESLint together with the eslint-plugin-jsdoc plugin. ESLint lints JavaScript code, and the plugin adds rules that flag missing or malformed JSDoc comments. Install ESLint using the following command:

```bash
npm install --save-dev eslint
```

Next, install the JSDoc linter plugin:

```bash
npm install --save-dev eslint-plugin-jsdoc
```
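
Installing the plugin isn’t enough on its own, because ESLint only applies rules that are enabled in its configuration. The minimal eslint.config.js below assumes ESLint 9’s flat config format in a CommonJS project and enables the plugin’s recommended rules. The file glob and the extra jsdoc/require-description rule are illustrative choices.

```javascript
// eslint.config.js (flat config, assumed ESLint 9+ in a CommonJS project)
const jsdoc = require("eslint-plugin-jsdoc");

module.exports = [
  // Enables eslint-plugin-jsdoc's recommended rule set
  jsdoc.configs["flat/recommended"],
  {
    files: ["**/*.js"],
    plugins: { jsdoc },
    rules: {
      // Illustrative extra rule: require a description in each JSDoc block
      "jsdoc/require-description": "warn",
    },
  },
];
```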

Then lint the file that contains the AI-generated documentation:

```bash
npx eslint project-dir/your-file.js
```
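
For reference, the sketch below shows a function documented so that rules such as jsdoc/require-param and jsdoc/require-returns are satisfied. Each parameter and the return value get a type and a description. The clamp helper itself is only an illustration.

```javascript
/**
 * Restricts a number to the inclusive range [min, max].
 *
 * @param {number} value - The number to restrict.
 * @param {number} min - The lower bound of the range.
 * @param {number} max - The upper bound of the range.
 * @returns {number} min if value is below the range, max if it is above, otherwise value.
 */
function clamp(value, min, max) {
  return Math.min(Math.max(value, min), max);
}

console.log(clamp(15, 0, 10)); // 10
```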

Frontend projects are highly dependent on built-in and third-party libraries. Every project imports multiple libraries that add functionality and customization to the application. Libraries can be very helpful but are dangerous when obsolete.

Because an AI model’s knowledge is bounded by its knowledge cutoff, it’s prone to adding outdated dependencies. It’s therefore important to run npm audit when integrating AI-generated code into your codebase. The npm audit command checks your project’s installed dependencies, including transitive ones, against known security advisories and reports the vulnerabilities it finds. After reviewing the report, run npm audit fix to upgrade affected packages to patched versions where a compatible update exists. Fixes that require a major version bump need npm audit fix --force or a manual upgrade, so review those changes carefully.
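
To turn this into an automated check, for example in CI, a minimal Node.js sketch could run npm audit --json and fail when vulnerabilities at or above a chosen severity are reported. It assumes npm 7 or later, whose JSON report includes a metadata.vulnerabilities object with per-severity counts. The function names countAtOrAbove and runNpmAudit are hypothetical.

```javascript
const { spawnSync } = require("node:child_process");

// Severity levels reported by npm audit, from least to most severe
const LEVELS = ["info", "low", "moderate", "high", "critical"];

// Count vulnerabilities at or above minLevel in a parsed `npm audit --json` report
function countAtOrAbove(report, minLevel = "high") {
  const counts = (report.metadata && report.metadata.vulnerabilities) || {};
  const start = LEVELS.indexOf(minLevel);
  if (start === -1) throw new Error(`Unknown severity level: ${minLevel}`);
  return LEVELS.slice(start).reduce((sum, level) => sum + (counts[level] || 0), 0);
}

// Run npm audit and return the parsed report. spawnSync does not throw when
// npm exits non-zero, which npm audit does whenever vulnerabilities are found.
function runNpmAudit(cwd = process.cwd()) {
  const result = spawnSync("npm", ["audit", "--json"], {
    cwd,
    encoding: "utf8",
    shell: process.platform === "win32", // npm is a .cmd shim on Windows
  });
  if (result.error) throw result.error; // e.g. npm not found on PATH
  return JSON.parse(result.stdout);
}
```

In a pipeline, you could exit with a failing status when countAtOrAbove(runNpmAudit(), "high") is greater than zero. npm also has a built-in --audit-level flag (for example, npm audit --audit-level=high) that sets the minimum severity that produces a non-zero exit code, which may be all you need.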

When using AI code generators, keep in mind that the models are limited to the patterns they were trained on. They may not fully grasp your application’s requirements, business logic, or project-specific patterns, so the code they generate may not align with your application’s needs.

AI tools can also generate code with function isolation issues. Function isolation means that the generated functions don’t use any project libraries or call any project functions. Unless the AI tool can read and understand your entire codebase, AI-generated code usually has to be refactored to make it fit the codebase.

Isolated functions offer little or no value to the application, because they can be removed without breaking any service. They often rely on hardcoded values and configurations, which makes them hard to reuse. Below is an example of isolated code alongside a version that follows the project’s architecture:

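```javascript
// `db` and `userService` are assumed to be existing project modules.

// Irrelevant: direct database access that bypasses the service layer.
// (Interpolating `id` into the SQL string is also an SQL injection risk.)
async function getUserData(id) {
  return db.query(`SELECT * FROM users WHERE id = ${id}`);
}

// Relevant: adheres to the project's architecture
async function getUserData(id) {
  return userService.getUserById(id); // Uses the service layer abstraction
}
```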
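
A minimal sketch of the service-layer side could look like the following. It assumes a database client whose query(text, params) method supports parameterized queries, as node-postgres does. createUserService and the in-memory fake client are hypothetical and exist only so the example runs on its own.

```javascript
// Hypothetical service layer: the one place that knows how users are stored.
// `db` is injected, so tests can pass a fake and production code a real client.
function createUserService(db) {
  return {
    async getUserById(id) {
      // Parameterized query: `id` is sent separately from the SQL text,
      // so it is never interpolated into the query string
      const result = await db.query("SELECT * FROM users WHERE id = $1", [id]);
      return result.rows[0] ?? null;
    },
  };
}

// In-memory fake client, for demonstration only
const fakeDb = {
  async query(text, params) {
    const users = [{ id: 1, name: "Ada" }];
    return { rows: users.filter((user) => user.id === params[0]) };
  },
};

const userService = createUserService(fakeDb);

// Application code depends on the service, not on the database
async function getUserData(id) {
  return userService.getUserById(id);
}

getUserData(1).then((user) => console.log(user)); // { id: 1, name: 'Ada' }
```

Injecting the client keeps data access in one place and makes the function reusable and testable, which addresses both the isolation and the hardcoded-configuration problems described above.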

Codebase context irrelevance can also show up as functions that don’t follow project-specific naming conventions, formatting, and comment styles. To identify and address these architectural flaws, consider using a static analysis tool. Many modern testing platforms and static analysis tools, such as SonarQube from SonarSource, now include features aimed specifically at reviewing AI-generated code.

AI has profoundly changed the developer workflow, and AI code-generation tools are becoming ever harder to work without. To use them effectively, follow the steps outlined in this tutorial to validate that the AI-generated code you’re working with is secure enough to be added to your app’s codebase.

Validating AI-generated code reduces errors and helps keep your code secure and compliant with your project’s standards. With these checks in place, you can feel more comfortable using AI to automate routine coding tasks.
