Multi-AI agent systems are systems in which multiple language models, or agents, work together to accomplish tasks that might be too complex for a single model. The advantage of this approach is that each agent can be specialized for a specific task.

The collaboration between these agents can lead to more efficient and accurate problem-solving. The invocation of each agent is coordinated by an orchestrating agent that interacts with humans and determines which agents to invoke. OpenAI has its own approach to supporting this kind of multi-AI agent system, based on a series of concepts described in the following table:

| Concept | Description |
| --- | --- |
| Assistant | An AI designed for a specific purpose that uses OpenAI’s models and calls Tools |
| Tool | An API, module, or function that an assistant can call upon to achieve specific objectives or retrieve information |
| Thread | A conversation session between an Assistant and a human. Threads store messages and automatically handle truncation to fit content into a model’s context |
| Message | A message created by an Assistant or a human. Messages can include text, images, and other files |

In this article, we’ll introduce the Experts.js framework, which offers a solution for creating and deploying OpenAI’s Assistants. It allows the assembly of an Assistant by combining tools to build sophisticated multi-AI agent systems, enhancing memory capacity and attention to detail.

This section describes a simple boilerplate for an Assistant based on Experts.js. The complete project is available on GitHub for further reference.

First, install Experts via npm:

```shell
$ npm install experts
```

The usage is straightforward, as there are only three objects to import:

```javascript
import { Assistant, Tool, Thread } from "experts";
```

These objects, which we described in the table above, are how we will interact with the library.

Before executing the code in the article, remember to set the environment variable to your OpenAI API key with the following:

```shell
export OPENAI_API_KEY="sk-proj-XXXTHIS_AN_EXAMPLE_REPLACE_WITH_REAL_KEY"
```

The Experts.js library will automatically query the OPENAI_API_KEY to interact with the OpenAI API.
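To get a clearer error message when the variable is missing, you may want to check for it at startup. The following is only a minimal sketch: the requireApiKey helper is hypothetical and is not part of Experts.js.

```javascript
// Hypothetical helper (not part of Experts.js): fail fast when the key is missing.
// The env parameter defaults to process.env and can be replaced in tests.
function requireApiKey(env = process.env) {
  const key = env.OPENAI_API_KEY;
  if (typeof key !== "string" || key.trim() === "") {
    throw new Error(
      "OPENAI_API_KEY is not set; export it before running the examples."
    );
  }
  return key;
}
```

Calling requireApiKey() once at the top of a script, before creating any Assistant, would be enough.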

The following code defines a simple Assistant with no Tools, just to check that everything works (see the file named boilerplate.js in the repository):

```javascript
import { Assistant, Tool, Thread } from "experts";
import ReadLine from "readline-sync";

class SimpleAssistant extends Assistant {
  constructor() {
    super(
      // Name
      "Conversational Travel Guide Assistant",
      // Description
      `The Travel Guide Assistant helps you effortlessly plan and enjoy your trips by
      suggesting destinations, creating personalized itineraries, and providing local tips.
      It also offers language support and travel advice to ensure a seamless and enjoyable experience.`,
      // Instructions (the prompt)
      `Please act as my Travel Guide Assistant. Your primary task is to help me
      plan and enjoy my trip. Focus on suggesting destinations, creating itineraries,
      providing local information, translating common phrases, and offering travel tips.
      Always ensure that the information you provide is accurate, relevant, and tailored to my preferences.
      Avoid off-topic responses and concentrate solely on assisting with travel-related inquiries and tasks.
      If you cannot assist just say "Sorry, I can't help you with this"`,
    );
  }
}

// The Thread represents the conversation session
const thread = await Thread.create();

// The model is chosen at the Assistant level
const assistant = await SimpleAssistant.create({
  model: "gpt-3.5-turbo",
});

// Read a prompt, ask the Assistant, print the answer, repeat
while (true) {
  let prompt = ReadLine.question(" 😊 > ");
  let output = await assistant.ask(prompt, thread.id);
  console.log(" 🤖 > " + output);
}
```

The core of the code is the definition of the SimpleAssistant class, which extends the Assistant class and passes three parameters to the constructor: the first is just a name (Conversational Travel Guide Assistant, in this case); the second is the description, which clarifies what the Assistant will do; and the third is the instructions parameter, which contains the actual prompt for the Assistant and can be up to 256K characters long.

Apart from the SimpleAssistant class, the rest of the code is simple: it creates a Thread, which represents the session of interactions with the OpenAI API, instantiates the SimpleAssistant class, and then loops, reading a prompt from the user and printing the answer.

An important aspect is the model we intend to use. This decision can be made for each Tool separately or, as we did in our case, at the Assistant level, when we instantiate the Assistant (the model option passed to SimpleAssistant.create).

At this point, we can check how the Assistant works. Let’s start with the following:

```shell
$ node boilerplate.js
```

A sample execution can look like this:

```
😊 > Hello, how are you today?
🤖 > I'm here and ready to help you plan and enjoy your trip! How can I assist you today? Do you have a specific destination in mind or any travel-related questions?
😊 > Please suggest a 1-day trip to Salerno
🤖 > Sure! Salerno is a beautiful city in the Campania region of Italy, known for its charming historic center, waterfront promenade, and proximity to the Amalfi Coast. Here's a suggested itinerary for a 1-day trip to Salerno:

### **Morning:**
1. **Salerno Cathedral (Duomo di Salerno)**
- **Time:** 1-2 hours
- Start your day by visiting the historic Salerno Cathedral, which dates back to the 11th century. The cathedral is known for its beautiful architecture and stunning bronze doors...
...
```

In the execution above, you can see that the prompt is effective. We have “convinced” the Assistant to stay focused on its task of supporting travel planning: in the first interaction, which was a simple greeting, the Assistant replies with a brief description of what it can do, while in the second interaction it replies by following the instructions we passed to the constructor.

Our next example will be more complex, and it will instantiate an Assistant using multiple, specialized Tools to accommodate specific requirements.

The general idea is that most of the Tools will just contain a particular prompt to control the output of the model – from the experiences most of us are gathering with LLMs, this is the most complex part. LLMs are designed to generate several tokens as a response to a given message and such generation may (and often does) go way beyond the intended task.

The interesting part of using Tools is that you can segment the interactions into specific domains to better control the outcome of an exchange between the human and the LLM.

An even more powerful possibility is to develop a Tool that is not related to an interaction with the LLM. This may sound counter-intuitive but it allows the development of a Tool that can be integrated into an Assistant that, for example, performs a query on a remote database, interacts with an API, or performs complex calculations with the GPU.

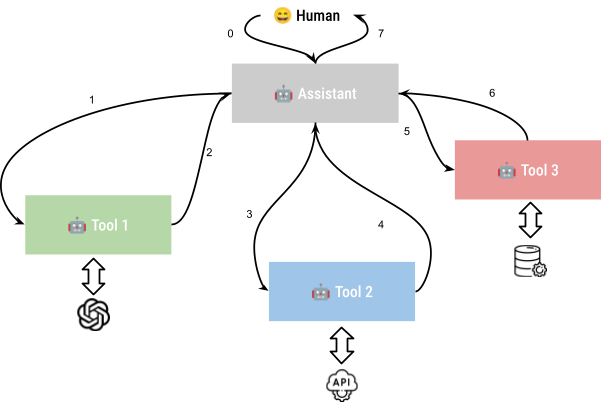

The possibility of this approach is depicted in the image below, where it is shown how the Assistant orchestrates the exchange with the Tools:

Tool 1, on the left, will rely on an LLM from OpenAI, Tool 2 will invoke an API in the cloud and, finally, Tool 3 will query a database. Note how the Assistant is in charge of interacting with the human, sending messages to each Tool, and receiving the answers.

As a result, the interaction with the Tools can be schematic: the Assistant can use JSON to send parameters to the API Tool or SQL to the database Tool, and the output from each Tool is processed by the Assistant before it is returned to the human. No matter how complex the interactions with the Tools are, you can still control the interaction with the human through the prompt of the Assistant, which decides, for example, the mood or the language.
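To make this flow more concrete, here is a library-independent sketch of the routing just described. It does not reflect how Experts.js works internally: the tools registry, the dispatch function, and both stub tools are hypothetical, and the remote API and database are replaced with in-memory stand-ins.

```javascript
// Hypothetical registry: each tool receives its parameters as a JSON string
// and returns a plain-text answer for the orchestrating Assistant.
const tools = new Map([
  [
    // Stand-in for a Tool that would call an API in the cloud.
    "weather_api",
    async (json) => {
      const { city } = JSON.parse(json);
      return `Forecast requested for ${city}`;
    },
  ],
  [
    // Stand-in for a Tool that would query a database.
    "city_db",
    async (json) => {
      const { country } = JSON.parse(json);
      const rows = { Italy: ["Salerno", "Naples"] };
      return (rows[country] ?? []).join(", ");
    },
  ],
]);

// The orchestrator picks a tool by name, forwards the parameters as JSON,
// and wraps the raw answer before it reaches the human.
async function dispatch(toolName, params) {
  const tool = tools.get(toolName);
  if (!tool) {
    return "Sorry, I can't help you with this";
  }
  const raw = await tool(JSON.stringify(params));
  return `Here is what I found: ${raw}`;
}
```

In this sketch, the tone of the final answer is decided only in dispatch, which mirrors the idea that the Assistant, and not the individual Tools, controls what the human sees.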

In the following code, we implement a more complex version of the “Conversational Travel Guide.” It will have four specialized Tools: two based on an LLM and two implementing functionality that does not rely on an LLM.

The problem we intend to solve is to develop a trip planner Assistant that will let the user collect a list of cities to visit and, for each city in the trip plan, the Assistant will provide a brief description of the city and its climate.

The first two Tools are in charge of handling the trip plan, which is a simple list of cities. The trip plan is a class named TripPlan (see the code in complex.js) with two methods: one to add a city and another to return the list of cities currently present in the trip plan. We will not comment on the TripPlan class, which is straightforward; instead, we will focus on the two non-LLM Tools that handle it:

```javascript
class AddCityTool extends Tool {
  constructor() {
    super(
      "Add City Tool",
      "Add a city to the trip plan",
      "This tool adds a city to the current trip plan",
      {
        // Non-LLM Tool: the logic lives in ask() below
        llm: false,
        // JSON Schema describing the arguments the Assistant must provide
        parameters: {
          type: "object",
          properties: {
            city: {
              type: "string",
              description: "The city, e.g. Salerno",
            },
          },
          required: ["city"],
        },
      },
    );
  }

  // currentTrip is the shared TripPlan instance (see complex.js)
  async ask(_newcity) {
    currentTrip.addCity(_newcity);
    return `${_newcity} added to the trip.`;
  }
}
```

The Tool above just adds a city to the trip plan. As you can see, it extends the Tool class, and its constructor takes the same parameters as an Assistant (name, description, and instructions), plus a fourth parameter containing an object that describes the Tool for the Assistant.

This options object first specifies that it is a non-LLM-based Tool (llm: false), then describes it as a function (see the OpenAI API documentation) with a specialized task. Because it is a function, we must specify its name, description, and instructions (the first three constructor arguments), as well as the parameters it expects (the parameters object). In this case, we simply need a string, city, that will contain a city name.

Note how we describe the parameter, even with an example. The last setting declares whether this parameter is required (the required array). Also note how we implement the ask method here: this is mandatory for non-LLM Tools, and it is where we implement the logic of the Tool. The return statement contains the Message we return to the caller, which is the Assistant, as described in the figure above.
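For reference, the OpenAI API describes a function tool as a JSON object with a name, a description, and a JSON Schema under parameters. The sketch below builds such an object for the Add City Tool and checks incoming arguments against its required list. The addCityDefinition constant, the add_city name, and the parseArgs helper are assumptions made for illustration; how Experts.js derives the function name, and what exactly it passes to ask, may differ.

```javascript
// Function-tool definition in the shape used by the OpenAI API.
// The snake_case name "add_city" is an assumption for this sketch.
const addCityDefinition = {
  type: "function",
  function: {
    name: "add_city",
    description: "Add a city to the trip plan",
    parameters: {
      type: "object",
      properties: {
        city: { type: "string", description: "The city, e.g. Salerno" },
      },
      required: ["city"],
    },
  },
};

// Hypothetical helper: parse a JSON string of arguments and make sure every
// required property is present. The type check is deliberately naive and only
// covers schema types that match typeof (string, number, boolean).
function parseArgs(definition, json) {
  const { properties, required = [] } = definition.function.parameters;
  const args = JSON.parse(json);
  for (const key of required) {
    if (!(key in args)) {
      throw new Error(`Missing required parameter: ${key}`);
    }
    if (typeof args[key] !== properties[key].type) {
      throw new Error(`Parameter ${key} must be a ${properties[key].type}`);
    }
  }
  return args;
}
```

Under these assumptions, a non-LLM Tool could call parseArgs at the beginning of its ask method and use the returned city value, instead of treating the whole incoming message as the city name.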

The second Tool is specialized to list the cities currently present in the TripPlan object:

```javascript
class ListCities extends Tool {
  constructor() {
    super(
      "List Cities",
      "List all the cities in the current trip plan",
      "This tool lists all the cities in the current trip plan",
      {
        // Non-LLM Tool with no parameters
        llm: false,
      },
    );
  }

  // Returns the current content of the trip plan to the Assistant
  async ask() {
    return `Your trip currently has the following cities: ${currentTrip.listCities()} `;
  }
}
```

As expected, this is a non-LLM Tool with an even simpler logic, so it will just return to the Assistant the content of the TripPlan class.
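Both Tools depend on a shared currentTrip object, whose class is only available in the repository. To give a rough idea of what such a class could look like, here is a minimal sketch. Only the names TripPlan, addCity, listCities, and currentTrip come from the article; everything else (the internal array, the duplicate check, and the comma-separated output) is an assumption, and the actual code in complex.js may differ.

```javascript
// Minimal sketch of a trip plan: an ordered list of unique city names.
class TripPlan {
  // Private storage for the cities, in insertion order.
  #cities = [];

  // Add a city, ignoring empty names and duplicates (an assumption of this sketch).
  addCity(city) {
    const name = String(city).trim();
    if (name !== "" && !this.#cities.includes(name)) {
      this.#cities.push(name);
    }
  }

  // Return the cities as a comma-separated string, ready to embed in a Message.
  listCities() {
    return this.#cities.join(", ");
  }
}

// Shared instance used by the non-LLM Tools.
const currentTrip = new TripPlan();
```

Keeping the state in a single module-level instance is the simplest option for a command-line demo; a real application would more likely keep one plan per user or per Thread.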

The remaining two Tools are LLM-based and equally specialized: one describes a city in a single sentence, and the other describes the climate of a city:

```javascript
class DescribeCityTool extends Tool {
  constructor() {
    super(
      "Describe City Tool",
      "Describe a city with a single sentence",
      // Instructions: keep the model's answer short
      `You are a concise assistant that describes a city with a
      single sentence: do not go into too many details,
      it is useful to grasp the "spirit" of the city`,
      {
        // No llm: false here, so the answer is generated by the model
        parameters: {
          type: "object",
          properties: {
            city: {
              type: "string",
              description: "The city, e.g. Salerno",
            },
          },
          required: ["city"],
        },
      },
    );
  }
}
```

This is an LLM-based Tool, but its definition is similar to the non-LLM Tools we described above; the only difference is that we do not implement the ask method here, because the answer is generated by the chosen model.

As you can see, there is nothing fancy going on here. We just control the amount of information we expect the Tool to return, as stated in the instructions field. The other Tool is similar (see the class DescribeClimateTool in the code); the only difference is that the instructions are about the climate of the city.

Now that we have the four Tools, we can assemble the Assistant:

```javascript
class TripAssistant extends Assistant {
  constructor() {
    super(
      "Conversational Travel Guide Assistant",
      `The Travel Guide Assistant helps you effortlessly plan and enjoy your trips by
      suggesting destinations, creating personalized itineraries, and providing local tips.
      It also offers language support and travel advice to ensure a seamless and enjoyable experience.`,
      `Please act as my Travel Guide Assistant. Your primary task is to help me
      plan and enjoy my trip. Focus on suggesting destinations, creating itineraries,
      providing local information, translating common phrases, and offering travel tips.
      Always ensure that the information you provide is accurate, relevant, and tailored to my preferences.
      Avoid off-topic responses and concentrate solely on assisting with travel-related inquiries and tasks.
      If you cannot assist just say "Sorry, I can't help you with this".
      You have a set of tools:
      - You have specialized tools to handle the trip plan
      - You have a tool that describes a city with a single sentence
      - You have a tool that describes what the weather is generally like in a city
      If asked without specifying the city, apply all the tools to each city in the trip plan`,
    );

    // Register the two LLM-based Tools and the two non-LLM Tools
    this.addAssistantTool(DescribeCityTool);
    this.addAssistantTool(DescribeClimateTool);
    this.addAssistantTool(AddCityTool);
    this.addAssistantTool(ListCities);
  }
}
```

Once again, the code is simple. We have the usual fields for the name of the Assistant, the description, and the instructions. Note how we explicitly mention the tools in the prompt. This is not always needed, because the LLM can often infer what the Tools are capable of and how to interact with them from their definitions. The most relevant part is passing the Tool definitions to the Assistant through the four addAssistantTool calls.

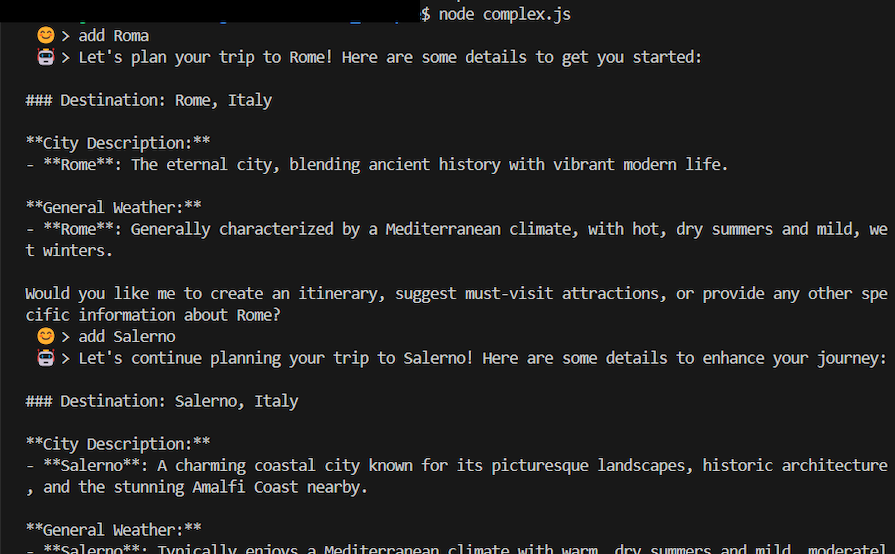

In the following image, you can see how the Assistant behaves. It will let you add cities to the trip plan, and returns a brief description with some details about the climate of each city in the plan:

In this article, we introduced multi-AI agent systems, such as those developed using the Experts.js framework, and how they enable specialized agents, or Assistants, to perform task-specific functions. Experts.js and similar frameworks simplify the process of information retrieval and analysis by providing tools and structures to manage interactions and coordinate various agents effectively.

Alternatives to Experts.js are also available for specific languages and different models, including:

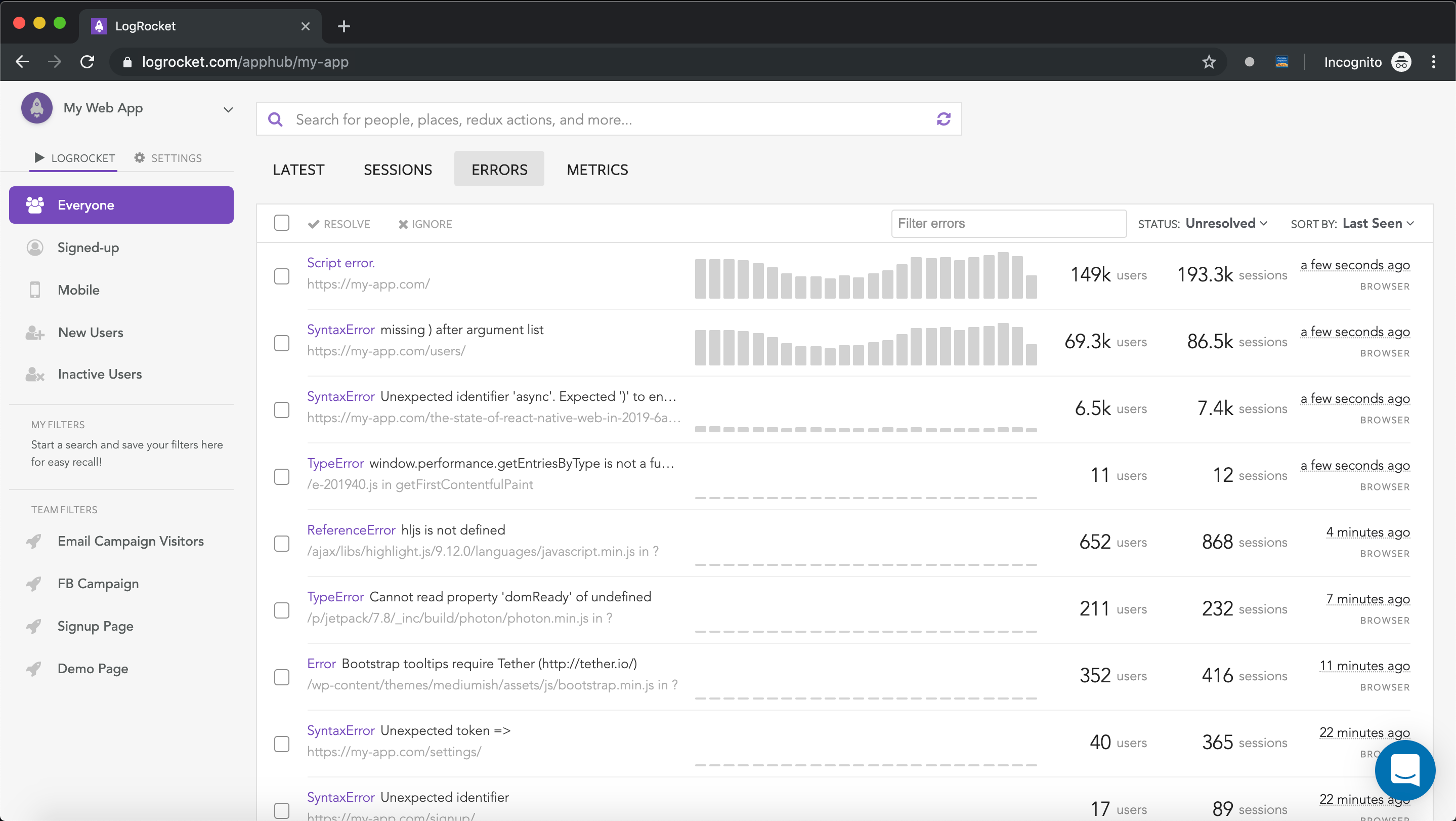
