Speed and performance is an important aspect of any application, and one of the most popular techniques for improving an app’s speed is implementing caching.

With caching, we can store an application’s data for quick access and avoid repeatedly running expensive queries or application logic.

An important aspect of caching is ensuring that the data we send from our cache is relevant and up to date, which leads us to cache validation.

Cache validation can be handled in several ways, including setting a cache expiration time or actively invalidating data at the points where the application data changes.

In this article, we will implement caching in a simple API application and handle cache validation within the application. To validate our cache data we will utilize a simple difference library called MicroDiff to help indicate the relevance of our cache.

MicroDiff is a lightweight JavaScript library that detects object and array differences and is popular for its significantly tiny size of less than 1kb, it’s speed, and its lack of dependencies.

MicroDiff is a great option for our cache validation because it does not bloat our application unnecessarily while providing clear difference detection. Using it, we can listen for data changes that indicate a need for an update to our cached data without slowing down our application.

To follow along with this article, install the following:

For our project, we will utilize a previously built simple Express.js API and extend it to implement caching and cache validation. We’ll start by cloning our API application:

$ git clone [email protected]:ibywaks/cookbook.git

Next, let’s install our cache library, node-cache, and our difference library MicroDiff:

$ yarn add microdiff node-cache

Next, we’ll start off by encapsulating our cache library with a custom cache class in our project. This way we can easily switch between cache libraries without a lot of edits in our application:

# src/lib/local-cache.ts

import NodeCache from 'node-cache'

type CacheKey = string | number

class LocalCache {

private static _instance: LocalCache

private cache: NodeCache

private constructor(ttlSeconds: number) {

this.cache = new NodeCache({

stdTTL: ttlSeconds,

checkperiod: ttlSeconds * 0.2,

})

}

public static getInstance(): LocalCache {

if (!LocalCache._instance) {

LocalCache._instance = new LocalCache(1000)

}

return LocalCache._instance

}

public get<T>(key: CacheKey): T | undefined {

return this.cache.get(key)

}

public set<T>(key: CacheKey, data: T): void {

this.cache.set(key, data)

}

public unset(key: CacheKey): void {

this.cache.del(key)

}

public hasKey(key: CacheKey): boolean {

return this.cache.has(key)

}

}

export default LocalCache.getInstance()

When implementing cache in an application to improve speed and performance, it is important to consider what data should be cached. Some important criteria for data that should be cached to improve performance are:

An important consideration when implementing caching in your application is to focus on caching data that is read more frequently than it changes.

Our cookbook API has an endpoint for listing recipes, which users are likely to read through more frequently than they would add new recipes. For this reason, the recipes list endpoint is a great candidate for caching.

Also, cookbook API users will frequently read through the list of available recipes. So, let’s add some caching to our GET recipes endpoint.

First, let’s start by updating the cache at the controller once data is retrieved:

# src/api/controllers/recipes/index.ts

...

import localCache from '../../../lib/local-cache'

...

export const getAll = async (filters: GetAllRecipesFilters): Promise<Recipe[]> => {

const recipes = await service.getAll(filters)

.then((recipes) => recipes.map(mapper.toRecipe))

if (recipes.length) {

localCache.set(primaryCacheKey, recipes)

}

return recipes

}

Next, we’ll create a middleware to check the existence of data in the cache. Where cached data exists, we return the data as a response, or else we call the controller to retrieve data and save to the cache:

# src/lib/check-cache.ts

import { Request, Response, NextFunction } from 'express'

import LocalCache from './local-cache'

export const checkCache = (req: Request, res: Response, next: NextFunction) => {

try {

const {baseUrl, method} = req

const [,,,cacheKey] = baseUrl.split('/')

if (method === 'GET' && LocalCache.hasKey(cacheKey)) {

const data = LocalCache.get(cacheKey)

return res.status(200).send(data)

}

next()

} catch (err) {

// do some logging

throw err

}

}

In the above snippet, we extract our resource name from the baseUrl, that is recipes from api/v1/recipes. This resource name is our key for checking our cache for data.

Next, we add the cache checker middleware to the appropriate route:

# src/api/routes/recipes.ts

...

import {checkCache} from '../../lib/check-cache'

const recipesRouter = Router()

recipesRouter.get('/', checkCache, async (req: Request, res: Response) => {

const filters: GetAllRecipesFilters = req.query

const results = await controller.getAll(filters)

return res.status(200).send(results)

})

Now that we’ve successfully cached our most frequently visited endpoint, /api/v1/recipes, it’s important to handle the cases where recipes are changed or added to ensure that our users always receive the most relevant data.

To do this, we will listen to the lowest level point of change to our data by listening to the change Hooks of our sequelize model. We do this by creating a Hook definition and adding it to our Sequelize initialization:

# src/db/config.ts

...

import { SequelizeHooks } from 'sequelize/types/lib/hooks'

import localCache from '../lib/local-cache'

...

const hooks: Partial<SequelizeHooks<Model<any, any>, any, any>> = {

afterUpdate: (instance: Model<any, any>) => {

const cacheKey = `${instance.constructor.name.toLowerCase()}s`

const currentData = instance.get({ plain: true })

if (!localCache.hasKey(cacheKey)) {

return

}

const listingData = localCache.get<any>(cacheKey) as any[]

const itemIndex = listingData.findIndex(

(it) => it.id === instance.getDataValue('id')

)

const oldItemData = ~itemIndex ? listingData[itemIndex] : {}

const instanceDiff = diff(oldItemData, currentData)

if (instanceDiff.length > 0) {

listingData[itemIndex] = currentData

localCache.set(cacheKey, listingData)

}

},

afterCreate: (instance: Model<any, any>) => {

const cacheKey = `${instance.constructor.name.toLowerCase()}s`

const currentData = instance.get({ plain: true })

if (!localCache.hasKey(cacheKey)) {

return

}

const listingData = localCache.get<any>(cacheKey) as any[]

listingData.push(currentData)

localCache.set(cacheKey, listingData)

},

}

const sequelizeConnection = new Sequelize(dbName, dbUser, dbPassword, {

host: dbHost,

dialect: dbDriver,

logging: false,

define: {hooks}

})

export default sequelizeConnection

Above we define Hooks on the Sequelize instance that listen to creates and updates. For creates on the models we cache, we just update the cached object with the new data instance.

For updates to models with data we are caching, we compare the old cached data to the updated model data using our microdiff library. This library highlights changes in the data and indicates the need to update the cache.

One of the most significant considerations when applying cache to an application is ensuring that users are served current and valid data, which is why we apply cache invalidation techniques to ensure our cached data is correct, particularly when the application allows users to write and update data sources.

When performing cache invalidation, it is common that cache becomes too sensitive to changes, meaning every write or update operation, whether it significantly alters data or not, translates to an update to our cache.

Avoiding cache sensitivity is an important impact of using microdiff in our cache validation so we can avoid unnecessary writes or flashes to our cache by first detecting that there is a change to be updated.

In this article we explored caching, its benefits and important considerations towards caching our data. We have also went through the validation of our cache data, particularly checking for changes in our cached data using Microdiff.

All the code from this article is available on GitHub. I would love you to share in the comments, how you handle cache validation and any cool caching tricks you’ve used in your projects!

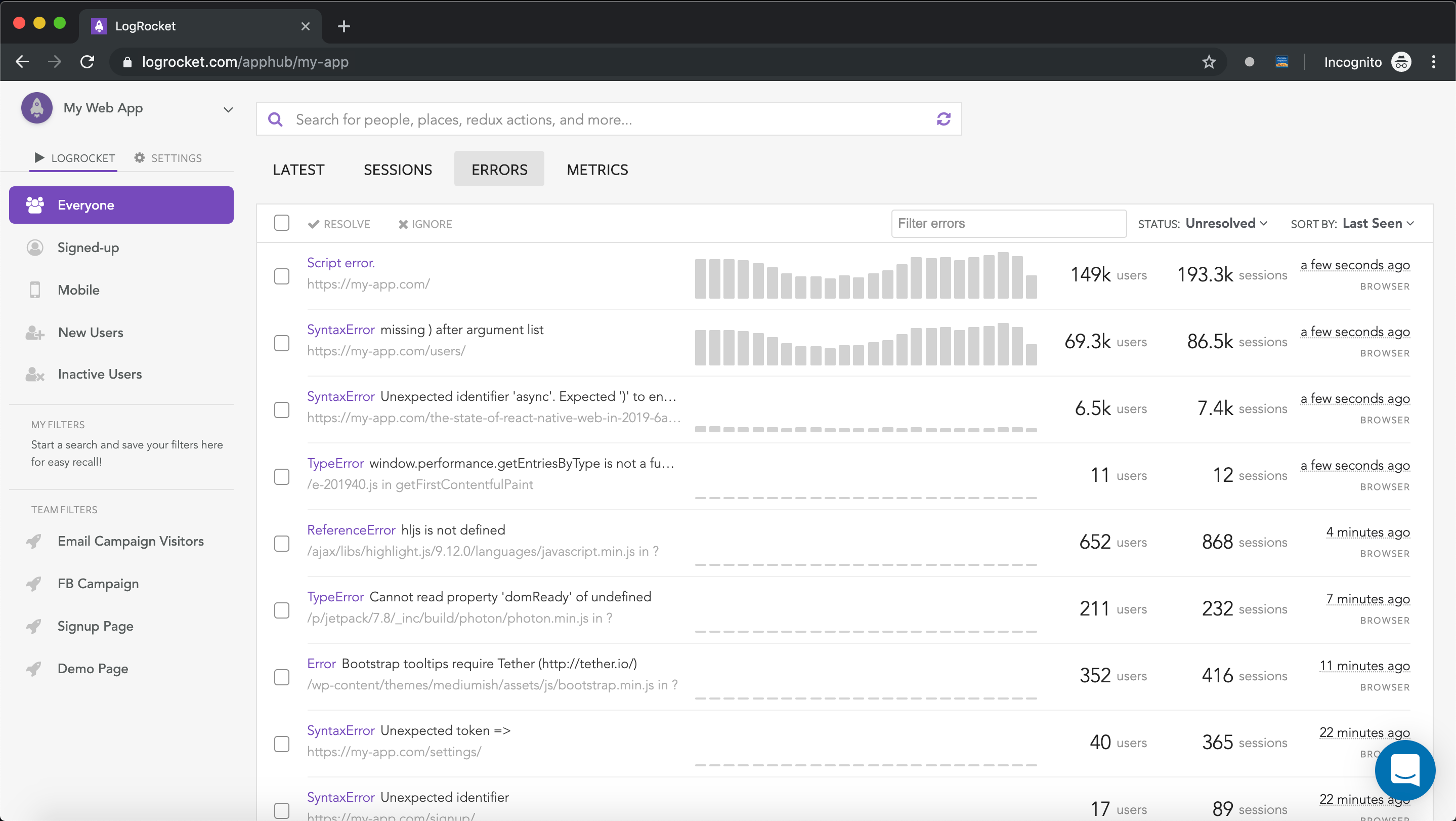

There’s no doubt that frontends are getting more complex. As you add new JavaScript libraries and other dependencies to your app, you’ll need more visibility to ensure your users don’t run into unknown issues.

LogRocket is a frontend application monitoring solution that lets you replay JavaScript errors as if they happened in your own browser so you can react to bugs more effectively.

LogRocket works perfectly with any app, regardless of framework, and has plugins to log additional context from Redux, Vuex, and @ngrx/store. Instead of guessing why problems happen, you can aggregate and report on what state your application was in when an issue occurred. LogRocket also monitors your app’s performance, reporting metrics like client CPU load, client memory usage, and more.

Build confidently — start monitoring for free.

Hey there, want to help make our blog better?

Join LogRocket’s Content Advisory Board. You’ll help inform the type of content we create and get access to exclusive meetups, social accreditation, and swag.

Sign up now

Get to know RxJS features, benefits, and more to help you understand what it is, how it works, and why you should use it.

Explore how to effectively break down a monolithic application into microservices using feature flags and Flagsmith.

Native dialog and popover elements have their own well-defined roles in modern-day frontend web development. Dialog elements are known to […]

LlamaIndex provides tools for ingesting, processing, and implementing complex query workflows that combine data access with LLM prompting.