EvaDB is a comprehensive, open source framework that developers can use to add AI-powered regression, classification, image recognition, and question answering to their applications.

EvaDB queries can be executed over data stored in existing SQL and vector database systems. The data can be manipulated by using pre-trained AI models from Hugging Face, OpenAI, YOLO, PyTorch, and other AI engines.

In this article, we’ll demonstrate how to include EvaDB in a simple project to provide AI-powered sentiment analysis. Since we’re focusing on how to use the toolkit, we’ll steer away from too much complexity in this guide. However, EvaDB may also be used with OpenAI, PyTorch, or other tools that require API keys or GPU.

The Replay is a weekly newsletter for dev and engineering leaders.

Delivered once a week, it's your curated guide to the most important conversations around frontend dev, emerging AI tools, and the state of modern software.

To easily follow along with the examples in this article, refer to this GitHub repo. For our demo, we’ll perform a simple natural language processing (NLP) task on some of strings (e.g., tweets, posts, or forum comments) in a table:

EvaDB plugs AI into traditional SQL databases, so as a first step, we’ll need to install a database. For this article, we’ll use SQLite because it’s fast enough for our tests and does not require a proper database server running somewhere. You may choose a different database, if you prefer.

To start, install EvaDB:

> pip install --upgrade evadb

TextBlob is a Python toolkit for text processing. It offers some common NLP functionalities such as part-of-speech tagging and noun phrase extraction. We’ll use TextBlob in our project to perform some quick sentiment analysis on tweets.

Once we install TextBlob, we’ll have everything we need to start experimenting:

> pip install --upgrade textblob

EvaDB offers a sophisticated, declarative language-extending SQL, designed for crafting AI queries. This language enables software developers to integrate AI-enhanced capabilities into their database applications.

The following code, from the repo’s analyze_twit.py file, is simple but contains all the concepts on which EvaDB is based:

import evadb

cursor = evadb.connect().cursor()

cursor.query("""

CREATE FUNCTION IF NOT EXISTS SentimentAnalysis

IMPL 'sentiment_analysis.py';

""").df()

cursor.query("""

CREATE TABLE IF NOT EXISTS twits (

id INTEGER UNIQUE,

twit TEXT(140));

""").df()

cursor.query("LOAD CSV 'tweets.csv' INTO twits;").df()

response = cursor.query("""

SELECT twit, SentimentAnalysis(twit) FROM twits

""").df()

print(response)

This code is a sequence of SQL statements executed by using the cursor object of EvaDB. The first statement defines a new function, SentimentAnalysis, that will execute a Python script. We’ll talk about this more in just a bit. It’s possible to specify more details about the function (e.g., the types of inputs and outputs), but this is the most basic example and it is good enough for our purposes.

The second statement creates a table to hold the strings that we’ll analyze. We load them from the tweets.csv file right into the table.

The last statement performs the analysis by calling the SentimentAnalysis function on each row of the table. Just appreciate how easily it integrates into the standard SQL:

import ...

class SentimentAnalysis(AbstractFunction):

@property

def name(self) -> str:

return "SentimentAnalysis"

@setup(cacheable=True, function_type="object_detection", batchable=True)

def setup(self):

print("Setup")

@forward(

input_signatures=[

PandasDataframe(

columns=["twit"],

column_types=[NdArrayType.STR],

column_shapes=[(1,)],

)

],

output_signatures=[

PandasDataframe(

columns=["label"],

column_types=[NdArrayType.STR],

column_shapes=[(1,)],

)

],

)

def forward(self, frames):

tb = [TextBlob(x).sentiment.polarity for x in frames['twit']]

df = pd.DataFrame(data=tb, columns=['label'])

return df

The function above is pretty simple (just two methods and a property), but it contains everything we need to see how EvaDB handles the interaction between the data from the traditional SQL engine and any additional engine you may wish to use.

To keep things simple, we’ll use the sentiment analysis provided by TextBlob; we won’t need an API key or external dependencies. EvaDB uses Python Decorators to add information to any specific methods that we want to implement.

The abstract method setup will create the running environment and can be used to initialize the parameters for executing the function. It must be implemented in our function. The following parameters must be set in the decorator:

cacheable:bool: When this parameter is True, the cache should be enabled and will be automatically invalidated when the function changes. When this parameter is False, the cache should not be enabled. This parameter is used to instruct EvaDB to cache the results of the function’s call. This will accelerate the execution of the function with the same parameters. If a function with the same set of parameters (e.g., image, string, or a generic chunk of data) could behave differently because of the non-determinism of the model, then the cacheable parameter should be Falsefunction_type:str: This function is for object detectionbatchable:bool: If this parameter is True, batching will be enabled; otherwise, the batching is disabledAny additional arguments needed to create the function can be passed as arguments to the setup function to perform some specific initialization for our environment.

Similarly, the new function must implement the forward abstract method. This function is responsible for receiving chunks of data (e.g., frames, strings, prompts) and executing the function logic.

This is where our deep learning model will execute on the provided data and where the logic for transforming the input data will run. Use of the forward decorator is optional.

Ensure that the following arguments are passed:

input_signatures: List[IOArgument]: Specifies the data types of the inputs expected by the forward function. If no constraints are provided, no validation is performed on the inputsoutput_signatures: List[IOArgument]: Specifies the data types of the outputs expected from the forward function. If no constraints are given, no validation is carried out on the outputsThe column names in the dataframe must correspond with the names specified in the decorators. At the time of writing, the following input and output arguments are available: NumpyArray, PyTorchTensor, and PandasDataframe.

In the code above, we specify the PandasDataframe package in the decorators for both the input and output data. The input consists of one column (the column_shapes parameter) named 'twit' (the column parameter) containing a string (the column_type parameter). The output has a similar shape and type, but the column is named 'label'.

Our goal is to conduct sentiment analysis on a bunch of tweets. Our function will consume and produce an evaluation of each string’s sentiment.

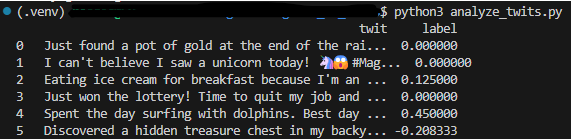

The logic of the forward function is fairly simple. The function will be invoked on every row of the twits table, returning the following result:

response = cursor.query("""

SELECT twit, SentimentAnalysis(twit) FROM twits

""").df()

On every invocation, the forward function will receive the frames parameter containing the PandasDataframe input that, in our case, will be a simple string. At this point, we just have to initialize TextBlob with each string contained in the frames parameter:

tb = [TextBlob(x).sentiment.polarity for x in frames['twit']]

The function is marked as batchable, meaning we may expect more than one row in the input dataframe. Therefore, the TextBlob sentiment analysis function will be called on each row in the dataframe.

The successive line of the forward function will just manipulate the tb array with the sentiment analysis result which will be packaged and returned to us in a dataframe.

EvaDB has the potential to convey the power of a wide range of so-called AI engines to the most traditional approach to manipulating data: SQL.

Adding functions to SQL isn’t new — the glorious store procedures have been available for decades. However, EvaDB is tailored to the specific task of accommodating a number of AI engines right in the code, saving us from handling interfaces and API keys and translating input and outputs between the engine and the SQL code. This approach is new and contains some good ideas – just consider that IBM is starting to provide similar functionalities in DB2, a mammoth enterprise-grade RDBMS.

Another benefit of EvaDB is that it allows the use of standard types for the inputs and outputs of our function. This assures expansion in the most general case of using libraries and engines not explicitly foreseen by EvaDB, like we did with TextBlob.

A similar approach is followed by Dask, a library that allows developers to execute parallel Python code within SQL statements. In some respects, Dask can be thought of as an EvaDB competitor, although EvaDB is more focused on the task of using AI Engine in SQL.

I hope you enjoyed this article and will experiment with including EvaDB in your projects.

Install LogRocket via npm or script tag. LogRocket.init() must be called client-side, not

server-side

$ npm i --save logrocket

// Code:

import LogRocket from 'logrocket';

LogRocket.init('app/id');

// Add to your HTML:

<script src="https://cdn.lr-ingest.com/LogRocket.min.js"></script>

<script>window.LogRocket && window.LogRocket.init('app/id');</script>

Solve coordination problems in Islands architecture using event-driven patterns instead of localStorage polling.

Signal Forms in Angular 21 replace FormGroup pain and ControlValueAccessor complexity with a cleaner, reactive model built on signals.

Discover what’s new in The Replay, LogRocket’s newsletter for dev and engineering leaders, in the February 25th issue.

Explore how the Universal Commerce Protocol (UCP) allows AI agents to connect with merchants, handle checkout sessions, and securely process payments in real-world e-commerce flows.

Hey there, want to help make our blog better?

Join LogRocket’s Content Advisory Board. You’ll help inform the type of content we create and get access to exclusive meetups, social accreditation, and swag.

Sign up now