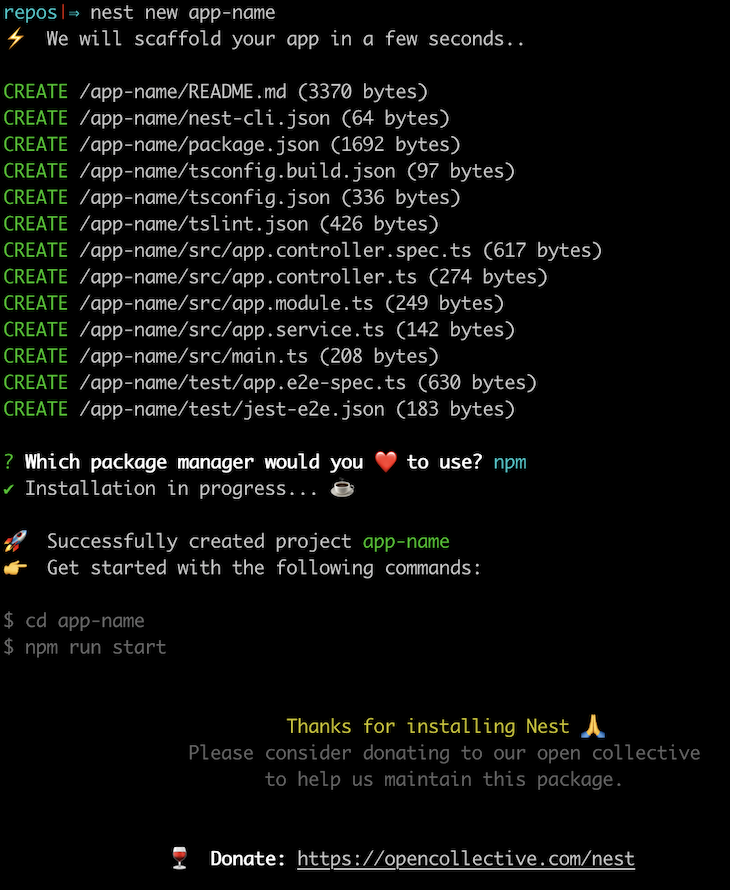

Creating a brand-new NestJS application is a breeze thanks to its awesome CLI. With a single command, nest new app-name, we have a fully functional, ready-to-go application.

The generated setup is fine for a simple application. As the application grows more complex and starts to rely on external services like Postgres or Redis, though, setting everything up can take developers quite a while. How long depends on the machines they use and on whether the necessary services are already installed.

Ideally, our application should be started with a single command that guarantees it will work as expected regardless of the developer’s choice of machine/environment. The application should also ensure everything it depends on, like the aforementioned external services, is created during the start process. Here’s where Docker containerization becomes indispensable.

Note: This article assumes basic knowledge of Docker.

We can easily create a new NestJS application with its dedicated CLI.

To install the CLI globally, run:

```bash
npm install -g @nestjs/cli
```

Now in order to create an application, we can execute:

```bash
nest new app-name
```

Naturally, app-name is replaced by the actual name of the application. Keep in mind that the application will be generated in the app-name folder in the current directory.

The CLI wizard is going to ask us to choose between npm and yarn; in this guide, we will be using npm.

Now that our application is set up, let’s move on to adding Docker.

Containerizing our applications with Docker has many advantages. For us, the two most important are that the application will behave as expected regardless of the environment, and that it is possible to install all the external dependencies (in our case, Redis and PostgreSQL) automatically when starting the application.

Also, Docker images are easily deployable on platforms such as Heroku and work well with CI solutions like CircleCI.

As a bonus, we are going to use a feature called multi-stage builds. It keeps the production image as small as possible by leaving all the development dependencies in an intermediate stage. This may, in turn, result in faster deployments.

With that said, at the root of our application, let’s create a Dockerfile that makes use of the multi-stage build feature:

```dockerfile
FROM node:12.13-alpine As development

WORKDIR /usr/src/app

COPY package*.json ./

RUN npm install --only=development

COPY . .

RUN npm run build

FROM node:12.13-alpine as production

ARG NODE_ENV=production
ENV NODE_ENV=${NODE_ENV}

WORKDIR /usr/src/app

COPY package*.json ./

RUN npm install --only=production

COPY . .

COPY --from=development /usr/src/app/dist ./dist

CMD ["node", "dist/main"]
```

And let’s go through it line by line:

```dockerfile
FROM node:12.13-alpine As development
```

First, we tell Docker to use an official Node.js image available in the public repository.

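We specify version 12.13 of Node and choose an Alpine image. Alpine images are much smaller. However, Alpine uses musl libc instead of glibc, so some packages can behave unexpectedly or fail to install, especially those with native add-ons.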

Since we are using the multi-stage build feature, we also use the AS keyword to name this build stage development. The name can be anything; it only serves to reference the stage later on.

```dockerfile
WORKDIR /usr/src/app
```

After setting WORKDIR, every subsequent instruction (such as RUN, COPY, and CMD) is executed relative to the specified directory.

```dockerfile
COPY package*.json ./

RUN npm install --only=development

COPY . .
```

First, we copy only package.json and package-lock.json (if it exists). Then we run, in the WORKDIR context, the npm install command. Once it finishes, we copy the rest of our application’s files into the Docker container.

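The idea is to install only devDependencies here, because this stage serves as a “builder.” It holds the tools needed to build the application and later hands a clean /dist folder to the production image.

Be aware, though, that --only=development installs only devDependencies, while the build also needs regular dependencies such as @nestjs/core. The build can still succeed if COPY . . happens to copy a local node_modules folder into the image, which is the case when no .dockerignore excludes it. Otherwise, npm run build can fail with missing modules. Running a plain npm install in this stage avoids the problem.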

The order of statements is very important here due to how Docker caches layers. Each statement in the Dockerfile generates a new image layer, which is cached.

If we copied all files at once and then ran npm install, each file change would cause Docker to think it should run npm install all over again.

By first copying only the package*.json files, we make sure Docker re-runs npm install only when package.json or package-lock.json change. A change to any other file invalidates the cache only from the COPY . . step onward, so the installed dependencies are reused from the cache.
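To make the caching argument concrete, here is a toy model in TypeScript. It is a simplification, not Docker’s actual algorithm. We assume each step’s cache key is derived from three things: the previous step’s key, the instruction text, and, for COPY steps, the contents of the copied files. The file names and contents are made up for illustration.

```typescript
import { createHash } from "node:crypto";

// Toy model of Docker's layer cache (not Docker's real implementation):
// a step's key combines its parent's key, its instruction text and,
// for COPY steps, the contents of the files it copies.
type Step = { instruction: string; copies?: string[] };
type Files = Record<string, string>;

function layerKeys(steps: Step[], files: Files): string[] {
  const keys: string[] = [];
  let parentKey = "";
  for (const step of steps) {
    const hash = createHash("sha256").update(parentKey).update(step.instruction);
    for (const path of step.copies ?? []) {
      hash.update(path).update(files[path] ?? "");
    }
    parentKey = hash.digest("hex");
    keys.push(parentKey);
  }
  return keys;
}

// Indices of the steps whose key changed, i.e. the steps Docker would re-run.
function rebuiltSteps(steps: Step[], before: Files, after: Files): number[] {
  const oldKeys = layerKeys(steps, before);
  const changed: number[] = [];
  layerKeys(steps, after).forEach((key, i) => {
    if (key !== oldKeys[i]) changed.push(i);
  });
  return changed;
}

const steps: Step[] = [
  { instruction: "COPY package*.json ./", copies: ["package.json", "package-lock.json"] },
  { instruction: "RUN npm install" },
  { instruction: "COPY . .", copies: ["package.json", "package-lock.json", "src/main.ts"] },
  { instruction: "RUN npm run build" },
];
const files: Files = { "package.json": "{}", "package-lock.json": "{}", "src/main.ts": "v1" };

// Editing application code re-runs only COPY . . and the build: [2, 3]
const afterSourceEdit = rebuiltSteps(steps, files, { ...files, "src/main.ts": "v2" });
// Editing package.json re-runs npm install and everything after it: [0, 1, 2, 3]
const afterDependencyEdit = rebuiltSteps(steps, files, { ...files, "package.json": '{"private":true}' });
console.log(afterSourceEdit, afterDependencyEdit);
```

Changing src/main.ts leaves the first two keys intact. That is why the expensive npm install step is served from the cache.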

```dockerfile
RUN npm run build
```

Finally, we make sure the app is built in the /dist folder. Since our application uses TypeScript and other build-time dependencies, we have to execute this command in the development image.

```dockerfile
FROM node:12.13-alpine as production
```

By using the FROM statement again, we tell Docker to start a new build stage from a fresh base image. Nothing from the previous stage carries over unless we explicitly copy it. This time we name the stage production.

```dockerfile
ARG NODE_ENV=production
ENV NODE_ENV=${NODE_ENV}
```

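Here we use the ARG statement to define a default value for NODE_ENV. A build argument exists only while the image is being built, so on its own it is not available when the application runs. Its value can be overridden at build time, for example with docker build --build-arg NODE_ENV=staging.

Then we use the ENV statement to store that value in the image’s environment, where the running application can read it. The stored value is either the default or the one passed with --build-arg.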
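Inside the running container, the value set by ENV is what the application sees in process.env. Below is a small, hypothetical TypeScript helper for reading it. The fallback to "development" when NODE_ENV is missing (for example, when running outside Docker) is our own assumption, not something the Dockerfile defines.

```typescript
// Hypothetical helpers; the "development" fallback is an assumption for runs outside the image.
type Env = Record<string, string | undefined>;

function resolveNodeEnv(env: Env): string {
  const value = env.NODE_ENV?.trim();
  return value ? value : "development";
}

function isProduction(env: Env): boolean {
  return resolveNodeEnv(env) === "production";
}

// In the production image this prints "production", unless another value was passed
// with --build-arg at build time or with -e when the container was started.
console.log(resolveNodeEnv(process.env));
```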

```dockerfile
WORKDIR /usr/src/app

COPY package*.json ./

RUN npm install --only=production

COPY . .
```

This part is the same as the one above, except for one change. With the --only=production argument, we install only the packages listed under dependencies in package.json. This way, we don’t install packages such as TypeScript that would needlessly increase the size of our final image.

```dockerfile
COPY --from=development /usr/src/app/dist ./dist
```

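Here we copy the built /dist folder from the development stage, so the final image contains the compiled application without any devDependencies.

Note that the COPY . . line above also copies the source files into this image. Running node dist/main only needs dist, node_modules, and package.json. Unless the application reads other files from disk at runtime (a .env file, for example), that line can be removed to keep the image smaller.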

```dockerfile
CMD ["node", "dist/main"]
```

Here we define the default command to execute when the image is run.

Thanks to the multi-stage build feature, we can keep our final image (here called production) as slim as possible by keeping all the unnecessary bloat in the development image.

The Dockerfile is ready to be used to run our application in a container. We can build the image by running:

```bash
docker build -t app-name .
```

(The -t option is for giving our image a name, i.e., tagging it.)

And then run it:

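```bash
docker run app-name
```

The application starts inside the container. Note that its port isn’t published to the host unless we ask for it. To reach the app from the host machine, add the -p option, for example docker run -p 3000:3000 app-name (an app generated by the Nest CLI listens on port 3000 by default).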

But this is not a development-ready solution. What about hot reloading? What if our application depended on some external tools like Postgres and Redis? We wouldn’t want to have each developer individually install them on their machine.

All these problems can be solved using docker-compose — a tool that wraps everything together for local development.

Docker Compose is well suited to local development. It ships with Docker Desktop; on Linux, it may need to be installed separately. Since our application is containerized and works the same on every machine, why should our database depend on the developer’s machine?

We are going to create a docker-compose config that will initiate and wire up three services for us. The main service will be responsible for running our application. The postgres and redis services will, as their names imply, run containerized Postgres and Redis.

In the application root directory, create a file called docker-compose.yml and fill it with the following content:

```yaml
version: '3.7'

services:
  main:
    container_name: main
    build:
      context: .
      target: development
    volumes:
      - .:/usr/src/app
      - /usr/src/app/node_modules
    ports:
      - ${SERVER_PORT}:${SERVER_PORT}
      - 9229:9229
    command: npm run start:dev
    env_file:
      - .env
    networks:
      - webnet
    depends_on:
      - redis
      - postgres
  redis:
    container_name: redis
    image: redis:5
    networks:
      - webnet
  postgres:
    container_name: postgres
    image: postgres:12
    networks:
      - webnet
    environment:
      POSTGRES_PASSWORD: ${DB_PASSWORD}
      POSTGRES_USER: ${DB_USERNAME}
      POSTGRES_DB: ${DB_DATABASE_NAME}
      PG_DATA: /var/lib/postgresql/data
    ports:
      - 5432:5432
    volumes:
      - pgdata:/var/lib/postgresql/data

networks:
  webnet:

volumes:
  pgdata:
```

First, we specify that our file uses version 3.7 of the docker-compose file format. We need at least version 3.4, since that version added the build target option we use below to select a stage of our multi-stage build.

Then we define three services: main, redis, and postgres.

The main service is responsible for running our application.

```yaml
container_name: main
build:
  context: .
  target: development
command: npm run start:dev
volumes:
  - .:/usr/src/app
  - /usr/src/app/node_modules
ports:
  - ${SERVER_PORT}:${SERVER_PORT}
  - 9229:9229
env_file:
  - .env
networks:
  - webnet
depends_on:
  - redis
  - postgres
```

Let’s go through its configuration line by line:

```yaml
container_name: main
```

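container_name sets the name of the container Docker creates for this service. This lets us refer to it as main in plain docker commands (such as docker logs main) instead of an auto-generated name. docker-compose commands themselves refer to the service by its service name, which here is also main.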

```yaml
build:
  context: .
  target: development
```

In the build config, we define the context, which tells Docker which files should be sent to the Docker daemon. In our case, that’s our whole application, and so we pass in ., which means all of the current directory.

We also define a target property and set it to development. Thanks to this property, Docker will now only build the first part of our Dockerfile and completely ignore the production part of our build (it will stop before the second FROM statement).

```yaml
command: npm run start:dev
```

In our Dockerfile, we defined the command as CMD ["node", "dist/main"], but this is not a command that we would like to be run in a development environment. Instead, we would like to run a process that watches our files and restarts the application after each change. We can do so by using the command config.

The problem with this command is that due to the way Docker works, changing a file on our host machine (our computer) won’t be reflected in the container. Once we copy the files to the container (using the COPY . . statement in the Dockerfile), they stay the same. There is, however, a trick that makes use of volumes.

```yaml
volumes:
  - .:/usr/src/app
  - /usr/src/app/node_modules
```

The volumes option mounts host directories or Docker-managed volumes into the container, and we define two of them.

The first mounts our current directory (.) inside the Docker container (/usr/src/app). This way, when we change a file on our host machine, the file will also be changed in the container. Now the process, while still running inside the container, will keep restarting the application on each file change.
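We don’t have to write the watcher ourselves: npm run start:dev runs nest start --watch, which handles it. Purely as an illustration of the idea, a minimal watch-and-restart loop in plain Node might look like the TypeScript sketch below. The names watchAndRestart and debounce are hypothetical, and the real Nest CLI also recompiles the TypeScript before restarting.

```typescript
import { spawn, type ChildProcess } from "node:child_process";
import { watch } from "node:fs";

// Collapses a burst of calls (one save can fire several file events) into a single call.
function debounce(fn: () => void, waitMs: number): () => void {
  let timer: ReturnType<typeof setTimeout> | undefined;
  return () => {
    if (timer !== undefined) clearTimeout(timer);
    timer = setTimeout(fn, waitMs);
  };
}

// Starts `command`, then kills and restarts it whenever a file under `dir` changes.
// Recursive fs.watch works on Linux only in recent Node versions (19.1+).
function watchAndRestart(dir: string, command: string, args: string[]): void {
  let child: ChildProcess | undefined;
  const restart = () => {
    child?.kill();
    child = spawn(command, args, { stdio: "inherit" });
  };
  restart();
  watch(dir, { recursive: true }, debounce(restart, 200));
}

// Hypothetical usage inside the container, where ./src is bind-mounted from the host
// (assumes ts-node is installed; this is not what the Nest CLI actually runs):
// watchAndRestart("src", "npx", ["ts-node", "src/main.ts"]);
```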

The second volume is a hack. By mounting the first volume in the container, we could accidentally also override the node_modules directory with the one we have locally. Developers usually have node_modules on their host machine due to the dev tools Visual Studio Code relies on — packages such as eslint or @types, for example.

With that in mind, we add an anonymous volume for /usr/src/app/node_modules. It prevents the node_modules directory that exists in the container from ever being overridden by the host’s copy.

```yaml
ports:
  - ${SERVER_PORT}:${SERVER_PORT}
  - 9229:9229
```

The ports config is rather self-explanatory.

Each container has its own network, so by using ports, we publish container ports on our host machine. The syntax is HOST_PORT:CONTAINER_PORT.

The ${SERVER_PORT} syntax means that the value will be retrieved from the environment variables.

We also add the 9229 port for debugging purposes, explained below.
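When docker-compose reads the file, it replaces ${SERVER_PORT} with the variable’s value. With SERVER_PORT=3000, for example, the first mapping becomes 3000:3000. The TypeScript sketch below is a toy model of that substitution plus the HOST:CONTAINER split. Real Compose supports more forms (such as ${VAR:-default}), and for an unset variable it warns and substitutes an empty string, whereas this sketch throws.

```typescript
// Toy version of Compose's ${VAR} substitution; only the plain ${VAR} form is handled.
// Unlike Compose (which warns and substitutes an empty string), it throws on unset variables.
function interpolate(template: string, env: Record<string, string | undefined>): string {
  return template.replace(/\$\{([A-Za-z_][A-Za-z0-9_]*)\}/g, (_match: string, name: string) => {
    const value = env[name];
    if (value === undefined) throw new Error(`Variable ${name} is not set`);
    return value;
  });
}

const isPort = (n: number): boolean => Number.isInteger(n) && n > 0 && n <= 65535;

// Splits a short-syntax "HOST:CONTAINER" port mapping into its two ports.
function parsePortMapping(mapping: string): { host: number; container: number } {
  const parts = mapping.split(":");
  const host = Number(parts[0]);
  const container = Number(parts[1]);
  if (parts.length !== 2 || !isPort(host) || !isPort(container)) {
    throw new Error(`Unsupported port mapping: ${mapping}`);
  }
  return { host, container };
}

const mapping = interpolate("${SERVER_PORT}:${SERVER_PORT}", { SERVER_PORT: "3000" });
console.log(mapping, parsePortMapping(mapping)); // 3000:3000 { host: 3000, container: 3000 }
```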

```yaml
env_file:
  - .env
```

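When working with Node.js applications, we normally use a .env file to keep our environment variables in one place. The env_file option loads the variables from that file into the container’s environment, where our application can read them via process.env. The ${...} substitutions in docker-compose.yml itself (like the ports above) are resolved separately: docker-compose automatically reads a .env file in the project directory for that purpose.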
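The article doesn’t show the .env file itself. Here is an assumption of what it might contain for the variables used in this setup (SERVER_PORT, DB_USERNAME, DB_PASSWORD, DB_DATABASE_NAME), together with a deliberately minimal TypeScript parser that shows how such KEY=VALUE lines are read. The values are placeholders, and the parser is not a replacement for a real library such as dotenv.

```typescript
// Deliberately minimal .env parser (not the dotenv package): KEY=VALUE lines,
// # comments, and one pair of surrounding quotes. No multi-line values or escapes.
function parseDotEnv(source: string): Record<string, string> {
  const vars: Record<string, string> = {};
  for (const rawLine of source.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue; // skip blank lines and comments
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // not a KEY=VALUE line
    const key = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    const quote = value[0];
    if (value.length >= 2 && (quote === '"' || quote === "'") && value.endsWith(quote)) {
      value = value.slice(1, -1); // strip one pair of matching quotes
    }
    vars[key] = value;
  }
  return vars;
}

// Hypothetical .env for this setup; the values are placeholders.
const dotEnv = `
# Placeholder values, change them for your own setup
SERVER_PORT=3000
DB_USERNAME=postgres
DB_PASSWORD="change-me"
DB_DATABASE_NAME=app
`;
console.log(parseDotEnv(dotEnv));
```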

```yaml
networks:
  - webnet
```

Since each service runs in its own container, we create a shared network, webnet, through which the services can communicate. On this network, each service can reach the others using the service name as a hostname, for example postgres or redis.

Note that the network is defined at the bottom of the file; here we are just telling docker-compose to use it in this particular service.
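Because Compose makes each service reachable on the shared network under its service name, the application would reach Redis at the hostname redis rather than localhost. The helper below is a hypothetical sketch. REDIS_HOST and REDIS_PORT are assumed variables that are not part of this setup’s .env, and 6379 is Redis’s default port.

```typescript
// Hypothetical helper: inside the Compose network, the default host is the service
// name "redis". REDIS_HOST / REDIS_PORT are assumed variables, not from the article.
function redisUrl(env: Record<string, string | undefined>): string {
  const host = env.REDIS_HOST ?? "redis";
  const port = Number(env.REDIS_PORT ?? "6379"); // 6379 is Redis's default port
  if (!Number.isInteger(port) || port <= 0 || port > 65535) {
    throw new Error(`Invalid REDIS_PORT: ${env.REDIS_PORT}`);
  }
  return `redis://${host}:${port}`;
}

console.log(redisUrl({})); // redis://redis:6379
```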

```yaml
depends_on:
  - redis
  - postgres
```

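Our two other services are named redis and postgres, and depends_on makes docker-compose start them before the main service. Note, however, that depends_on only waits for their containers to start. It does not wait for Postgres or Redis to be ready to accept connections, so on a cold start the application may still try to connect too early and crash unless it retries its connections.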
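Since depends_on only orders container startup, one common approach is to have the application wait until its dependencies accept TCP connections before it bootstraps. The TypeScript sketch below does that with Node’s built-in net module. The name waitForPort and its retry defaults are hypothetical. An open port also doesn’t guarantee that Postgres has finished initializing, so a retrying database client is still advisable.

```typescript
import * as net from "node:net";

// Resolves true if a TCP connection to host:port can be opened, false otherwise.
function canConnect(host: string, port: number): Promise<boolean> {
  return new Promise((resolve) => {
    const socket = net.createConnection({ host, port });
    socket.once("connect", () => {
      socket.end();
      resolve(true);
    });
    socket.once("error", () => {
      socket.destroy();
      resolve(false);
    });
  });
}

// Polls host:port until it accepts connections, giving up after `attempts` tries.
async function waitForPort(host: string, port: number, attempts = 30, delayMs = 1000): Promise<void> {
  for (let attempt = 1; attempt <= attempts; attempt++) {
    if (await canConnect(host, port)) return;
    if (attempt < attempts) await new Promise((resolve) => setTimeout(resolve, delayMs));
  }
  throw new Error(`${host}:${port} did not accept connections after ${attempts} attempts`);
}

// Hypothetical usage before bootstrapping the Nest app (service names from docker-compose.yml):
// await waitForPort("postgres", 5432);
// await waitForPort("redis", 6379);
```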

```yaml
redis:
  container_name: redis
  image: redis:5
  networks:
    - webnet
```

The redis config is very simple. First, we define its container_name. Then we specify the image name, which should be fetched from the repository. We also have to define the network that is to be used to communicate with other services.

```yaml
postgres:
  container_name: postgres
  image: postgres:12
  networks:
    - webnet
  environment:
    POSTGRES_PASSWORD: ${DB_PASSWORD}
    POSTGRES_USER: ${DB_USERNAME}
    POSTGRES_DB: ${DB_DATABASE_NAME}
    PG_DATA: /var/lib/postgresql/data
  volumes:
    - pgdata:/var/lib/postgresql/data
  ports:
    - 5432:5432
```

The postgres image makes use of a few environment variables that are described in the image’s documentation. When we define the specified variables, Postgres will use them (when starting the container) to do certain things.

Variables like POSTGRES_PASSWORD, POSTGRES_USER, and POSTGRES_DB are used to create the default database. Without them, we would have to write the SQL code ourselves and copy it into the container to create a database.
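The postgres service and the main service both take their values from the same .env file, so the application can build its connection settings from the same variables. Here is a hypothetical TypeScript helper that does so. The host defaults to postgres, the Compose service name, and 5432 is the default Postgres port.

```typescript
// Hypothetical helper: builds a Postgres connection string from the DB_* variables
// shared with the postgres service. "postgres" is the Compose service name.
function postgresUrl(env: Record<string, string | undefined>, host = "postgres", port = 5432): string {
  const user = env.DB_USERNAME;
  const password = env.DB_PASSWORD;
  const database = env.DB_DATABASE_NAME;
  if (!user || !password || !database) {
    throw new Error("DB_USERNAME, DB_PASSWORD and DB_DATABASE_NAME must all be set");
  }
  // Percent-encode the parts so characters like "@" or ":" don't break the URL.
  return `postgres://${encodeURIComponent(user)}:${encodeURIComponent(password)}@${host}:${port}/${encodeURIComponent(database)}`;
}

console.log(postgresUrl({ DB_USERNAME: "app", DB_PASSWORD: "p@ss:word", DB_DATABASE_NAME: "appdb" }));
// postgres://app:p%40ss%3Aword@postgres:5432/appdb
```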

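The PG_DATA variable is meant to tell Postgres where to keep all of its data, and we set it to /var/lib/postgresql/data. Note that the variable the official postgres image actually reads is PGDATA (without the underscore), so PG_DATA has no effect. This is harmless here because /var/lib/postgresql/data is already the image’s default data directory.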

If you take a look at the volumes config, you will see we mount the volume at the /var/lib/postgresql/data directory.

```yaml
volumes:
  - pgdata:/var/lib/postgresql/data
```

What may confuse you is that the first part of the volume is not a directory, but rather something called pgdata.

pgdata is a named volume that is defined at the bottom of our file:

```yaml
volumes:
  pgdata:
```

By using a named volume, we make sure that the data persists even when the container is removed. It stays there until we delete the volume ourselves, for example with docker-compose down -v.

Also, it’s always good to know where we keep the data instead of storing it at some random location in the container.

```yaml
ports:
  - 5432:5432
```

Finally, we expose port 5432, the default Postgres port, so that we can connect to the database from our host machine with tools such as pgAdmin.

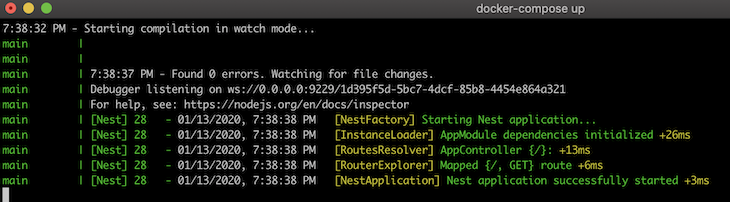

To run the application, we now have to use the following command:

```bash
docker-compose up
```

And Docker will take care of everything for us. Talk about a great developer experience.

In the main service config, we defined node_modules as an anonymous volume to prevent our host files from overriding the directory. So if we add a new npm package by running npm install on the host, the package won’t be available inside the container, and the application will crash.

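Restarting doesn’t help either. Running docker-compose down and then docker-compose up again doesn’t rebuild the image, so npm install doesn’t run again. And running docker-compose up --build on its own isn’t enough: when it recreates the main container, docker-compose carries over the anonymous volume from the previous container, which still holds the old node_modules.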

To fix this, we can run the following command:

```bash
docker-compose up --build -V
```

The --build parameter makes sure the image is rebuilt, so npm install runs again during the build. The -V argument (short for --renew-anon-volumes) recreates anonymous volumes instead of reusing the ones from the previous containers.

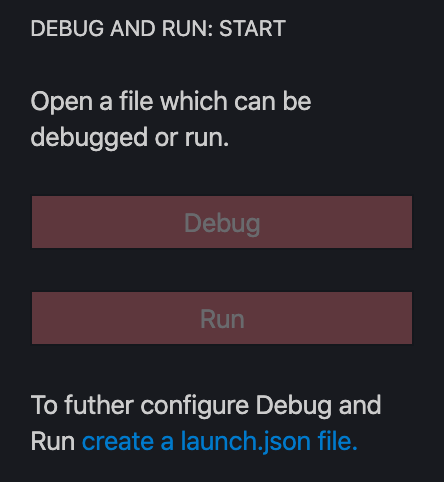

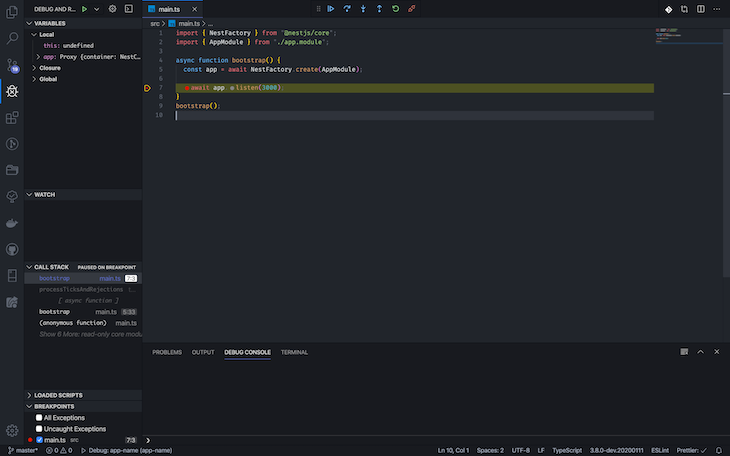

A lot of Node.js developers use console.log to debug their code. Most of the time, it is a tedious process, to say the least. Visual Studio Code has its own debugger that can be easily integrated into our application.

In VS Code, open the Run and Debug panel on the left, click create a launch.json file, and choose Node.js when prompted.

Then, replace the contents of the .vscode/launch.json file with:

```json
{
  "version": "0.2.0",
  "configurations": [
    {
      "type": "node",
      "request": "attach",
      "name": "Debug: app-name",
      "remoteRoot": "/usr/src/app",
      "localRoot": "${workspaceFolder}",
      "protocol": "inspector",
      "port": 9229,
      "restart": true,
      "address": "0.0.0.0",
      "skipFiles": ["<node_internals>/**"]
    }
  ]
}
```

We make sure that the remoteRoot is set to /usr/src/app (the path in the Docker container), port is set to 9229, and address is set to 0.0.0.0.

Next, replace the start:debug script in package.json with the following, which uses the --debug parameter of the Nest CLI:

```json
"start:debug": "nest start --debug 0.0.0.0:9229 --watch",
```

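Here we specify the address 0.0.0.0:9229 on which the debugger listens. By default, Node’s inspector binds to 127.0.0.1, which inside a container is not reachable through a published port. Binding to 0.0.0.0 makes it reachable, and that’s why we exposed port 9229 when we defined the main service in docker-compose.yml.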

In order to use the debugger, we also have to change the command of the main service in the docker-compose.yml file from:

```yaml
command: npm run start:dev
```

to

```yaml
command: npm run start:debug
```

After starting the application, there should be a log:

```
Debugger listening on ws://0.0.0.0:9229/3e948401-fe6d-4c4d-b20f-6ad45b537587
```

Here’s the debugger view in VS Code:

The debugger is going to reattach itself after each app restart.

The NestJS CLI does a great job of setting the basic boilerplate of a project for us. In order to get a fully fledged development environment, however, we must add tools such as Docker and docker-compose ourselves. To put a cherry on top, by configuring the Visual Studio Code internal debugger to work with Docker, we improve developer productivity.


34 Replies to "Containerized development with NestJS and Docker"

Thanks for the awesome article, it’s so interesting. Keep posting please.

But I misunderstand the below statement ,

“In the build config, we define the context, which tells Docker which files should be sent to the Docker daemon. In our case, that’s our whole application, and so we pass in ., which means all of the current directory.”

Are you sure about this information ?

In the official documentation it’s telling that context is “Either a path to a directory containing a Dockerfile, or a url to a git repository.”

Thanks again.

Hey Ghandri, thank you for your kind words. More Nestjs articles coming soon!

Regarding context, here’s a quote from the documentation:

“Either a path to a directory containing a Dockerfile, or a url to a git repository.

When the value supplied is a relative path, it is interpreted as relative to the location of the Compose file. This directory is also the build context that is sent to the Docker daemon.”

Hope that helps 🙂

Nice article!

Thanks for the Article. Helped me a lot.

What did not work for me was the --only=development part in the Dockerfile. The process ends up with an error that packages are missing. When I removed the --only flag, everything worked fine. Docker will only install dev dependencies, which is not enough to debug the application, right?

Also, where is the part how to run in production mode?

Thanks Ekene!

Hey Patrick, glad you liked the article.

Regarding the --only part, apps generated through the Nest CLI are using non-dev dependencies to build an application, which shouldn’t take place. Once the issue is resolved, the --only flag will work fine. I will adjust the article, thanks for catching it.

The production mode is as simple as building an image like you would normally do with Docker. While I will admit that it could have been mentioned towards the end, the article assumes a basic understanding of Docker.

https://docs.docker.com/get-started/part2/ – Here you can read about building and running Docker images. Hope that helps!

HI Maciej, great article.

So I just implemented as described above, however I’m running the production build I change both the target and the command instructions in the docker-compose.yml to production and npm run start:prod respectively but I keep getting the error, “Error: Cannot find module ‘/usr/src/app/dist/main'”. I don’t know if the same thing happened to you.

I’ve tried debugging to no avail. Could you please help with this?

Thanks.

Nice Article!

Could you please provide repo for it?

This is the clearest tutorial that I’ve ever read. Bunch of thanks to @Maciej Cieślar!

Thank you so much for your kind words!

This all works beautifully and the detailed instructions are very helpful. What is not working for me is the ‘watch’ mode from the Nest container to my local files. Even rebuilding the image with `docker-compose up --build -V -d` and starting from a new base image, which should refresh all the layer caches, is not dropping my file changes into the container. It had me confused for quite a while troubleshooting my database connection with mongo, when the real problem was unchanged files. What about this is not working?

```yaml
services:
  main:
    # image is provided by docker build of local app using Dockerfile
    container_name: nscc_nest
    restart: always
    build:
      context: .
      target: development
    volumes:
      - .:/usr/src/app
```

I have fixed the above issue by looking at the author’s Github repo for another NestJS tutorial for websockets on this same blog (https://github.com/maciejcieslar/scalable-websocket-nestjs) and found something missing in the Dockerfile displayed above, which is the second line of `ENV NODE_ENV=development`. I’m not sure if this caused my containers to rebuild with the required copying of files, or some pruning that I did in my local Docker environment, but now the NestJS container picks up my code changes and runs in debug mode (still trying to hit a breakpoint).

I have made a generic repo available for others to use as a starter on this tutorial (as some have requested), although I am using a MongoDB instance and Mongo-Express for web-based admin. It has some initial database seeding in it of users and documents. Check it out if you like. The Docker stuff works just as described here, only the databases have been changed to protect the innocent!

https://github.com/theRocket/mongo-express-nestjs

One of the complete and easy tutorial i’ve ever seen. Thank you dear Maciej.

If you encounter an error during `docker-compose up` saying that rimraf and nest are not found, add a command to the Dockerfile before `RUN npm install --only=development`:

```dockerfile
RUN npm install -g rimraf @nestjs/cli
```

Great tutorial BTW 🙂

Nice article! Very helpful. Has anyone managed to get the the debugging to work in VSC? Debugger is attached but I am unable to set any breakpoints in the TS files. VSC gives me the error: Breakpoint ignored because generated code not found (source map problem?).

This is a really great article. Thanks for taking the time to step through each piece and explain it thoroughly. I’m just getting started with Nestjs, but this article contained some great ideas that I’ll carry over to my other node projects.

Yes, I had the same problem trying to run it with docker-compose. I had to update my launch.json to look like below, because my actual node project was nested one level inside of my workspace folder, and VS Code likes to put the .vscode/launch.json at the root of the workspace by default. So my folder structure is ~/Workspace/my-project-workspace/my-nest-app. My .vscode/launch.json file is at ~/Workspace/my-project-workspace, and I’m trying to map the source to files in the ~/Workspace/my-project-workspace/my-nest-app/dist

```json
{
  "version": "0.2.0",
  "configurations": [
    {
      "type": "node",
      "request": "attach",
      "name": "Debug: task-management",
      "remoteRoot": "/usr/src/app",
      "localRoot": "${workspaceFolder}/my-nest-app",
      "protocol": "inspector",
      "port": 9229,
      "restart": true,
      "address": "0.0.0.0",
      "outFiles": ["${workspaceFolder}/my-nest-app/dist/**/*.js"],
      "skipFiles": ["/**"],
      "sourceMaps": true
    }
  ]
}
```

And my .tsconfig looks like this:

```json
{
  "compilerOptions": {
    "module": "commonjs",
    "declaration": true,
    "removeComments": true,
    "emitDecoratorMetadata": true,
    "experimentalDecorators": true,
    "target": "es2017",
    "sourceMap": true,
    "outDir": "./dist",
    "baseUrl": "./",
    "incremental": true
  },
  "exclude": ["node_modules", "dist"]
}
```

This was a great help! Can you please explain to me as a Docker newbie how you would deploy this app and use the production section of the Dockerfile? Would I have to use a different docker-compose.yml file with the build: target set as production? How else would this file change? Take out the volumes and command from main?

You might need to do a build as the debugger is actually stepping through the .js files. It is a bit of an illusion when you put a breakpoint in .ts files, and once you change the code, the illusion breaks.

Wow! This is one clear and thorough guide. Been a challenge to find clear guides such as this for devops related stuff for NestJs. Please keep it coming! 🔥

Just have a question, I currently am planning to build a web app using NestJS as the backend while also having Angular as the frontend. What is the best practice for this on Docker?

Does that mean I will only need to create 2 new services; one being for Angular Development & Build which will build the angular app and copy the dist folder, and then two being the server that will serve this built app? (probably Nginx).

Great tutorial Maciej! I’ve been trying to run npm run build with only devDependencies, and it’s not possible, because nest will build the app and therefore needs production deps. If we follow your tutorial (which is gorgeous btw) it works because you didn’t mention a .dockerignore and so it send all current directory as context to docker daemon. I’m just sharing my thoughts on this, if I’m doing something wrong or misunderstood something, please let me know.

Thank you for sharing such an amazing article

How can I access the site when I run the Docker image? It’s not exposed on any port on localhost, as far as I have tried.

Hi Maciej!! Great article, that’s all I have to say. I have some Docker knowledge and be able to follow every step of the journey and agree with each line of code was a huge satisfaction.

There’s only one line in the Dockerfile which I think is not necessary. In the second build where the “final” image is created, there’s a “COPY . .” that in my opinion is not necessary. You would be copying all the source files again into the final image, which are not needed and would bloat the final result.

So, to be precise:

```
// Dockerfile
…
RUN npm install --only=production
COPY . . # <-- I think this instruction should be removed
COPY --from=development /usr/src/app/dist ./dist
…
```

The only caveats would be that:

- The .env file won't be in the final image. This is not a problem because, as a best practice, environmental variables should be used instead or, if using docker-compose to test it, the .env file is loaded from outside the image, so it shouldn't be necessary to include it next to /dist folder.
- Any other file that should be placed to connect and authenticate to external services (any kind of secret) must be copied explicitly to the final image.

Again, thanks for the article, gratifying to find all the little pieces from some posts together in only one place.

Keep it coming!

This is a very good observation and thanks for sharing this. I was having the exact same problem until I noticed that indeed my .dockerignore file included “node_modules”. BTW, have you found a solution: removing node_modules from .dockerignore or running “npm install” without any flag?

Thank you for the post, pretty good guide.

I wonder if I’m making any mistake, because I got this error trying to run the docker container.

```
$ docker run --name api -p 3000:3000 api:1
internal/modules/cjs/loader.js:800
    throw err;
    ^

Error: Cannot find module '/usr/src/app/dist/main'
    at Function.Module._resolveFilename (internal/modules/cjs/loader.js:797:15)
    at Function.Module._load (internal/modules/cjs/loader.js:690:27)
    at Function.Module.runMain (internal/modules/cjs/loader.js:1047:10)
    at internal/main/run_main_module.js:17:11 {
  code: 'MODULE_NOT_FOUND',
  requireStack: []
```

Ohhh man. Thank you very much for the detailed explanation. I’ve been struggling with this problem for about 2 weeks.

After a short troubleshooting I was able to get my first Nest App working with Mongo using containers!

This is a really good tutorial. everything I needed to know to get started from local dev to production just had to make some changes for my use case. I have open sourced the results as a starter kit for anyone who wants to use 🙂

https://github.com/asemoon/nestjs-postgres-dockerized-starter

This worked very well, but with node.Js 15, I get problems with user rights and other problems. Any idea what’s changed?

I found what’s different and why it’s not working on my side with node-alpine >= 15.

I used in the docker-compose.yml

```yaml
command: npm run start:dev:docker
```

which starts the script located in package.json

```json
"start:dev:docker": "tsc-watch -p tsconfig.build.json --onSuccess \"node --inspect=0.0.0.0:9229 dist/src/main.js\""
```

With node:14-alpine, node is started as the root user and all works fine.

But with >=15 now node is started as USER Node and not as root ! And this leads to the permission problem I had.

Solution was now, not to call npm, instead I called direct in the docker-compose file

```yaml
command: "node /app/node_modules/tsc-watch/lib/tsc-watch -p tsconfig.build.json --onSuccess \"node --inspect=0.0.0.0:9229 dist/src/main.js\""
```

So again all is started as root user and works well.

It looks to me, that npm is starting node no more as root in node-alpine>= 15.

Hi! Thanks for the great article. It gives me a lot of info to start implementing a dockerized dev environment.

I’m confused by the following step though:

“Here we install only devDependencies due to the container being used as a ‘builder’ that takes all the necessary tools to build the application and later send a clean /dist folder to the production image.”

In my opinion it’s impossible to run a --only=development install and then run a build. It will always miss the main packages.

```dockerfile
RUN npm install --only=development
COPY . .
# this command will now miss e.g. nestjs/core package
RUN npm run build
```

I think I will need an intermediate stage in between my development and production stages to prune devDeps, like:

```
FROM node:12.13-alpine As development
…
RUN npm install
COPY . ./
RUN npm run build
…
FROM node:12.13-alpine as build
…
COPY --from=development . ./
RUN npm prune --production
…
FROM node:12.13-alpine as production
…
COPY --from=build /dist /dist
COPY --from=build /node_modules /node_modules
…
```

Am I wrong?

Hello, the `COPY . .` should not be present in the production stage of Dockerfile. Best regards, Maciej.

Awesome article! thanks a lot. This is my first time developing in a containerized project… this is great.

Do you think developing this way could be slower than simply running the application directly on a developer’s own machine?

This article is a true life-saver for making a docker-development container for your team, if this is your first time doing so. I burned an entire day and a half pulling my hair out as to why my dockerfile + compose file (with a single host-volume-mount) worked for everyone the first time they spun it up on Friday.. and then when I went to ‘docker compose up’ on Monday .. I kept getting ‘sh: nest: command not found’ (it’s a nestJS app) .. npm install .. node --version.. everything worked fine except for the exact same command that worked on Friday.. and I had no clue.. NOTHING HAD CHANGED !! … My first clue was commenting out the volumes section of the compose file which brought it back to a working state.. and then I had better context for what to search for, and found a few articles, but none as clear and concise as this one.

Thank you!!