Say you’re working in a bank where you need to input data from a customer’s form into a computer. To do this, you would have to read each field on the form and type it in by hand.

While this solution might work, consider this situation: what if your bank receives hundreds of forms every day? Entering all of them by hand would make your job tedious and stressful. So how do we solve this issue?

This is where OCR (optical character recognition), also known as text detection, comes in. It is a technology that uses algorithms to extract text from images with high accuracy. Using text recognition, you can simply take a picture of the customer’s form and let the computer fill in the data for you. As a result, your work becomes easier and less boring. In this article, we will build a text detector in React Native using Google’s Vision API.

This will be the outcome of this article:

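We’ll start by enabling Google’s Cloud Vision API and creating an API key. Then we’ll set up an Expo project and build an image picker. Finally, we’ll send the selected image to Google for text detection and display the result.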

Let’s get started!


In this section, you will learn how to activate Google’s text detection API for your project.

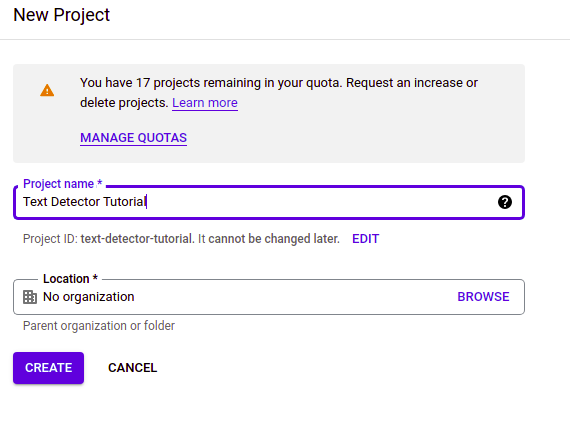

As a first step, navigate to Google Cloud Console and click on New Project:

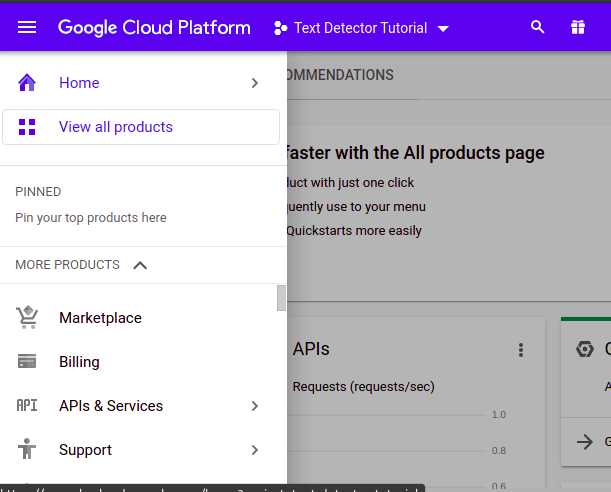

Next, we need to tell Google that we want to use the Cloud Vision API. To do so, click on Marketplace:

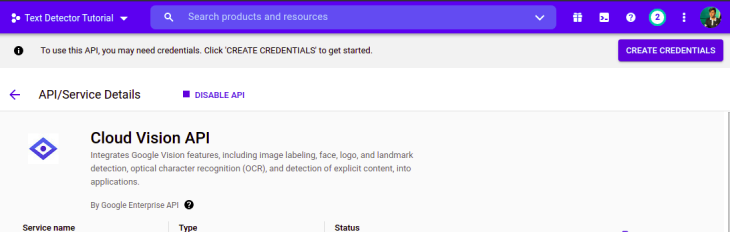

When that’s done, search for Cloud Vision API and enable it like so:

Great! We have now enabled this API. However, for authorization purposes, Google requires us to create an API key. To make this possible, click on Create Credentials:

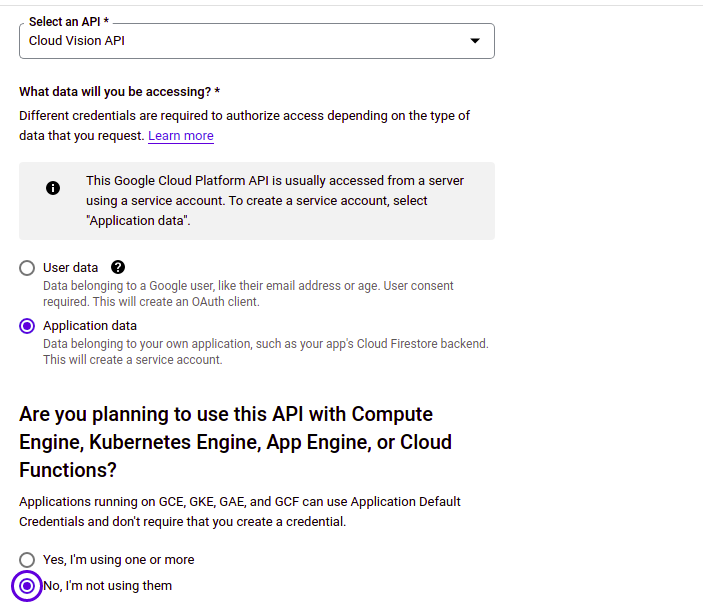

In the Credentials menu, make sure the following options are checked:

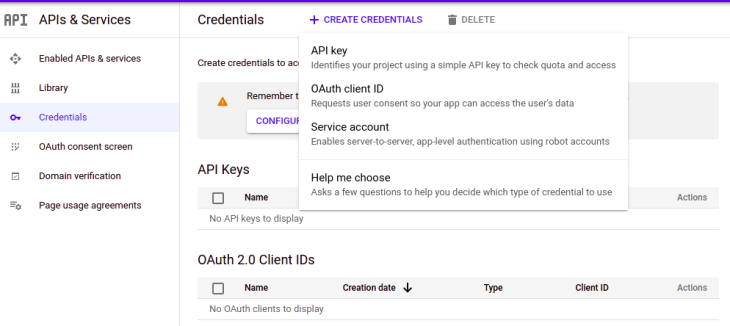

Next, click on Done. This will bring you to the dashboard page. Here, click on Create credentials, and then API key.

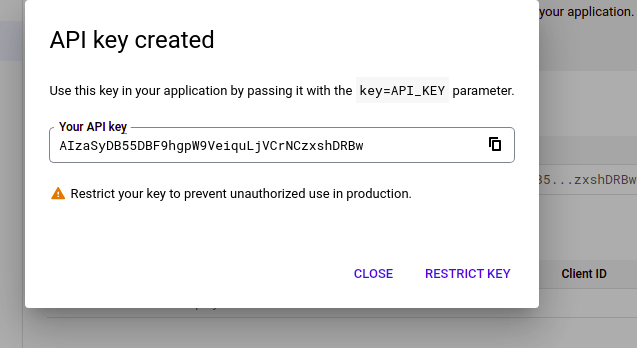

Google Cloud will now generate an API key for you. Copy it and store it somewhere safe.
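Later, helperFunctions.js appends this key to the Vision endpoint as a `key` query parameter. The tutorial hardcodes the key and starts with an `API_KEY_HERE` placeholder, so it’s easy to forget to replace it. The following is a minimal sketch of a guard. `buildVisionUrl` is a hypothetical helper that is not part of the tutorial’s code. It refuses to build the endpoint while the placeholder is still present:

```javascript
// Hypothetical helper (not part of the tutorial): builds the Vision endpoint URL
// and fails fast if the API key is missing or is still the placeholder.
const PLACEHOLDER_KEY = "API_KEY_HERE";

function buildVisionUrl(apiKey) {
  if (typeof apiKey !== "string" || apiKey.trim() === "" || apiKey === PLACEHOLDER_KEY) {
    throw new Error("Set a real Cloud Vision API key before calling the API.");
  }
  // Same endpoint and `key` query parameter as API_URL in helperFunctions.js;
  // encodeURIComponent escapes any characters that are not URL-safe.
  return `https://vision.googleapis.com/v1/images:annotate?key=${encodeURIComponent(apiKey)}`;
}
```

With this in place, a forgotten key produces a clear error message rather than a failed request to Google.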

Congratulations! We’re now done with the first step. Let’s now write some code!

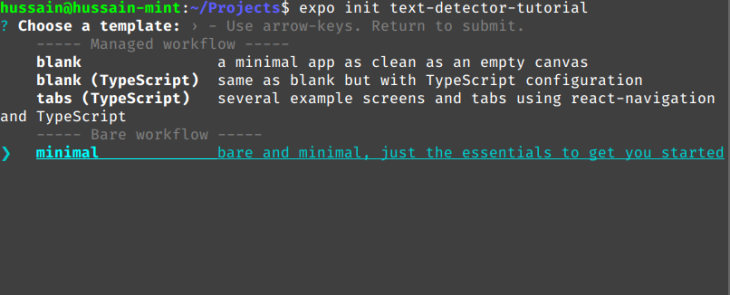

To initialize the repository using Expo CLI, run the following terminal command:

```bash
expo init text-detector-tutorial
```

Expo will now prompt you to choose a template. Here, select the option that says minimal:

For this application, we will let the user pick photos from their camera roll. To make this possible, we will use the expo-image-picker module:

```bash
npm i expo-image-picker
```

Create a file called helperFunctions.js. As the name suggests, this file will contain our utility functions that we will use throughout our project.

In helperFunctions.js, start by writing the following code:

```javascript
// file name: helperFunctions.js

const API_KEY = 'API_KEY_HERE'; // put your key here.

// This endpoint tells Google to use the Vision API. We are passing in our key as well.
const API_URL = `https://vision.googleapis.com/v1/images:annotate?key=${API_KEY}`;

function generateBody(image) {
  const body = {
    requests: [
      {
        image: {
          content: image,
        },
        features: [
          {
            type: 'TEXT_DETECTION', // we will use this API for text detection purposes.
            maxResults: 1,
          },
        ],
      },
    ],
  };
  return body;
}
```

A few key concepts from this snippet:

- The `API_KEY` constant contains your API key. Google requires this key before our app can use the Cloud Vision API
- The `generateBody` function generates the payload for our request. React Native will send this payload to Google for OCR
- The `image` parameter contains the base64-encoded data of the selected image

After this step, append the following code to helperFunctions.js:

```javascript
// file: helperFunctions.js

async function callGoogleVisionAsync(image) {
  const body = generateBody(image); // pass in our image for the payload
  const response = await fetch(API_URL, {
    method: 'POST',
    headers: {
      Accept: 'application/json',
      'Content-Type': 'application/json',
    },
    body: JSON.stringify(body),
  });
  const result = await response.json();
  console.log(result);
}

export default callGoogleVisionAsync;
```

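Let’s break down this code piece by piece:

- First, we pass the image’s base64 data to the `generateBody` function to build the payload
- Next, `fetch` performs a `POST` request to Google’s API and sends the payload as the request body
- Finally, we parse the JSON response and log it to the console

Now, create a new file called ImagePickerComponent.js. This file will be responsible for letting the user choose a photo from their gallery.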

In ImagePickerComponent.js, write the following code:

```javascript
import * as ImagePicker from 'expo-image-picker';
import React, { useState } from 'react';
import { Button, Image, View, Text } from 'react-native';

function ImagePickerComponent({ onSubmit }) {
  const [image, setImage] = useState(null);
  const [text, setText] = useState('Please add an image');

  const pickImage = async () => {
    let result = await ImagePicker.launchImageLibraryAsync({
      mediaTypes: ImagePicker.MediaTypeOptions.Images, // OCR only works on images, not videos
      base64: true, // return base64 data.
      // this will allow the Vision API to read this image.
    });

    if (!result.cancelled) { // if the user submits an image,
      setImage(result.uri);
      // run the onSubmit handler and pass in the image data.
      await onSubmit(result.base64);
    }
  };

  return (
    <View>
      <Button title="Pick an image from camera roll" onPress={pickImage} />
      {image && (
        <Image
          source={{ uri: image }}
          style={{ width: 200, height: 200, resizeMode: "contain" }}
        />
      )}
    </View>
  );
}

export default ImagePickerComponent;
```

Here’s a brief explanation:

- In `ImagePickerComponent`, we created the `pickImage` function, which prompts the user to select an image
- If the user picks an image, we run the `onSubmit` handler and pass the image’s base64 data to it

All that’s left for us is to render our custom image picker component. To do so, write the following code in App.js:

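```javascript
import React from "react";
import { View } from "react-native";
import ImagePickerComponent from "./ImagePickerComponent";

export default function App() {
  return (
    <View>
      <ImagePickerComponent onSubmit={console.log} />
    </View>
  );
}
```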

Here, we render our ImagePickerComponent and pass `console.log` as its `onSubmit` handler, so the chosen image’s base64-encoded data is logged to the console.
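Base64 encoding turns every 3 bytes into 4 characters. A photo’s encoded string can therefore be very long, and logging all of it floods the console. The following is a minimal sketch of a more readable alternative. `base64ByteLength` and `describeBase64` are hypothetical helpers that are not part of the tutorial. They estimate the decoded size from the string length and padding, and log only a short preview:

```javascript
// Hypothetical helper: number of bytes a base64 string decodes to.
// Every 4 characters encode 3 bytes; each trailing "=" marks one unused byte.
function base64ByteLength(base64) {
  const clean = base64.replace(/\s/g, ""); // ignore line breaks or spaces
  if (clean.length === 0) return 0;
  const padding = clean.endsWith("==") ? 2 : clean.endsWith("=") ? 1 : 0;
  return Math.floor((clean.length * 3) / 4) - padding;
}

// Hypothetical helper: a short, loggable summary instead of the full string.
function describeBase64(base64) {
  const kb = (base64ByteLength(base64) / 1024).toFixed(1);
  const preview = base64.length > 20 ? `${base64.slice(0, 20)}...` : base64;
  return `${preview} (~${kb} KB decoded)`;
}
```

To use it in App.js, you could pass `onSubmit={(data) => console.log(describeBase64(data))}` instead of `console.log`.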

Run the app using this Bash command:

```bash
expo start
```

Our code works! In the next section, we will use the power of Google Vision to implement OCR in our app.
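Before wiring `callGoogleVisionAsync` into the app, it can help to see exactly what `generateBody` sends. The following sketch is a variant of `generateBody` with a hypothetical `featureType` parameter that is not in the tutorial’s code. With the default value, it builds the same payload as the original. `DOCUMENT_TEXT_DETECTION` is another Cloud Vision feature type, optimized for dense text such as documents, so it may suit scanned forms:

```javascript
// Variant of generateBody with a hypothetical `featureType` parameter.
// TEXT_DETECTION and DOCUMENT_TEXT_DETECTION are both Cloud Vision feature types.
const SUPPORTED_TYPES = ["TEXT_DETECTION", "DOCUMENT_TEXT_DETECTION"];

function generateBody(image, featureType = "TEXT_DETECTION") {
  if (!SUPPORTED_TYPES.includes(featureType)) {
    throw new Error(`Unsupported feature type: ${featureType}`);
  }
  return {
    requests: [
      {
        image: { content: image }, // raw base64 data, as returned by the image picker
        features: [{ type: featureType, maxResults: 1 }],
      },
    ],
  };
}

// The exact JSON string that callGoogleVisionAsync sends as the request body:
console.log(JSON.stringify(generateBody("aGVsbG8=")));
// prints {"requests":[{"image":{"content":"aGVsbG8="},"features":[{"type":"TEXT_DETECTION","maxResults":1}]}]}
```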

Edit the following piece of code in App.js:

```javascript
import callGoogleVisionAsync from "./helperFunctions.js";

// code to find:
return (
  <View>
    {/* Replace the onSubmit handler: */}
    <ImagePickerComponent onSubmit={callGoogleVisionAsync} />
  </View>
);
```

In this snippet, we replaced our `onSubmit` handler with `callGoogleVisionAsync`. As a result, the selected image is sent to Google’s servers for OCR.

This will be the output:

Notice that the program now successfully extracts text from the image. This means that our code works!
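`callGoogleVisionAsync` parses and logs whatever comes back, including failures. When a request is rejected, for example because of an invalid API key, Google responds with a non-2xx status and a top-level `error` object in place of `responses`. The following is a minimal sketch that turns such a response into a thrown error. `callVisionWithErrors` and its `fetchImpl` parameter are hypothetical and not part of the tutorial. `fetchImpl` exists only so the function can be exercised with a fake `fetch`:

```javascript
// Sketch of callGoogleVisionAsync with error handling (not the tutorial's exact code).
// `body` is an already-built payload, e.g. from generateBody.
// `fetchImpl` is hypothetical; in the app, the global fetch is used by default.
async function callVisionWithErrors(apiUrl, body, fetchImpl = fetch) {
  const response = await fetchImpl(apiUrl, {
    method: "POST",
    headers: { Accept: "application/json", "Content-Type": "application/json" },
    body: JSON.stringify(body),
  });
  const result = await response.json();

  // Failed requests come back with a non-2xx status and a top-level `error`
  // object that carries a human-readable `message`.
  if (!response.ok || result.error) {
    const message = result.error?.message ?? `HTTP ${response.status}`;
    throw new Error(`Cloud Vision request failed: ${message}`);
  }
  return result;
}
```

Throwing here means the caller can show a meaningful message rather than failing later on a missing `responses` array.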

As the last step, attach this piece of code to the end of callGoogleVisionAsync:

```javascript
// file: helperFunctions.js
// add this code to the end of the callGoogleVisionAsync function

const detectedText = result.responses[0].fullTextAnnotation;
return detectedText
  ? detectedText
  : { text: "This image doesn't contain any text!" };
```

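This code first checks whether the first response contains a `fullTextAnnotation` object. If it does, the function returns that object, and its `text` property holds the extracted text. Otherwise, no error is thrown. Instead, the function returns a fallback object whose `text` property says that the image doesn’t contain any text.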
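The snippet above assumes that `result.responses` exists. If the request itself fails, that array is missing, and `result.responses[0]` throws a `TypeError`. The following is a minimal sketch of a more defensive version using optional chaining. `getDetectedText` is a hypothetical helper that is not part of the tutorial. It also surfaces the per-image `error` field that an individual response can carry:

```javascript
// Hypothetical helper: extracts detected text from an images:annotate response
// without crashing when `responses` is missing or empty.
function getDetectedText(result) {
  const first = result?.responses?.[0];
  // A single response entry can carry its own `error`, even if the HTTP call succeeded.
  if (first?.error) {
    return { text: `Vision API error: ${first.error.message}` };
  }
  const detectedText = first?.fullTextAnnotation;
  return detectedText ? detectedText : { text: "This image doesn't contain any text!" };
}
```

Inside `callGoogleVisionAsync`, you could then end with `return getDetectedText(result);`.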

In the end, your complete callGoogleVisionAsync function should look like this:

```javascript
// file: helperFunctions.js

async function callGoogleVisionAsync(image) {
  const body = generateBody(image);
  const response = await fetch(API_URL, {
    method: "POST",
    headers: {
      Accept: "application/json",
      "Content-Type": "application/json",
    },
    body: JSON.stringify(body),
  });
  const result = await response.json();
  console.log(result);
  const detectedText = result.responses[0].fullTextAnnotation;
  return detectedText
    ? detectedText
    : { text: "This image doesn't contain any text!" };
}
```
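`callGoogleVisionAsync` waits as long as `fetch` does. If you want the request to give up after a while, one option (not part of the tutorial) is to abort it with an `AbortController`. The following is a minimal sketch. `fetchWithTimeout`, its 15-second default, and the `fetchImpl` parameter are hypothetical choices:

```javascript
// Hypothetical wrapper: aborts the request if it takes longer than `timeoutMs`.
// `fetchImpl` defaults to the global fetch and can be swapped out for testing.
async function fetchWithTimeout(url, options = {}, timeoutMs = 15000, fetchImpl = fetch) {
  const controller = new AbortController();
  const timer = setTimeout(() => controller.abort(), timeoutMs);
  try {
    // Passing `signal` lets fetch reject as soon as the controller aborts.
    return await fetchImpl(url, { ...options, signal: controller.signal });
  } finally {
    clearTimeout(timer); // avoid a stray timer once the request settles
  }
}
```

Inside `callGoogleVisionAsync`, `fetch(API_URL, {...})` would become `fetchWithTimeout(API_URL, {...})`.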

Now that we have implemented OCR in our program, all that remains for us is to display the image’s text to the UI.

Find and edit the following code in ImagePickerComponent.js:

```javascript
// code to find:
if (!result.cancelled) {
  setImage(result.uri);
  setText("Loading.."); // set value of text Hook
  const responseData = await onSubmit(result.base64);
  setText(responseData.text); // change the value of this Hook again.
}

// extra code removed for brevity

// Finally, display the value of 'text' to the user
return (
  <View>
    <Text>{text}</Text>
    {/* Further code.. */}
  </View>
);
```

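In this snippet:

- Before sending the request, we set the `text` Hook to "Loading.." so the user knows that OCR is in progress
- Once Google responds, we set the `text` Hook to the response data
- Finally, we render the `text` variable in a `Text` component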

And we’re done!
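One gap remains. If `onSubmit` throws, for example because the API returned an error, `pickImage` never reaches the second `setText` call, and "Loading.." stays on screen. The following is a minimal sketch that factors the loading and error flow into a hypothetical `runOcr` helper. This helper is not part of the tutorial. Its `setText` and `onSubmit` parameters stand in for the component’s state setter and `callGoogleVisionAsync`:

```javascript
// Hypothetical helper: runs OCR and always leaves the UI in a final state.
async function runOcr(base64, onSubmit, setText) {
  setText("Loading..");
  try {
    const responseData = await onSubmit(base64);
    // Hypothetical fallback message in case the handler returns nothing usable.
    setText(responseData?.text ?? "No text returned.");
  } catch (error) {
    // Without this catch, a failed request would leave "Loading.." on screen.
    setText(`Something went wrong: ${error.message}`);
  }
}
```

Inside `pickImage`, the body of the `if` block would then become `setImage(result.uri); await runOcr(result.base64, onSubmit, setText);`.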

In the end, your ImagePickerComponent should look like so:

```javascript
import * as ImagePicker from "expo-image-picker";
import React, { useState } from "react";
import { Button, Image, View, Text } from "react-native";

function ImagePickerComponent({ onSubmit }) {
  const [image, setImage] = useState(null);
  const [text, setText] = useState("Please add an image");

  const pickImage = async () => {
    let result = await ImagePicker.launchImageLibraryAsync({
      mediaTypes: ImagePicker.MediaTypeOptions.Images, // OCR only works on images
      base64: true,
    });

    if (!result.cancelled) {
      setImage(result.uri);
      setText("Loading..");
      const responseData = await onSubmit(result.base64);
      setText(responseData.text);
    }
  };

  return (
    <View>
      <Button title="Pick an image from camera roll" onPress={pickImage} />
      {image && (
        <Image
          source={{ uri: image }}
          style={{ width: 400, height: 300, resizeMode: "contain" }}
        />
      )}
      <Text>{text}</Text>
    </View>
  );
}

export default ImagePickerComponent;
```
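The component above checks `result.cancelled` and reads `result.uri` and `result.base64`. That matches the expo-image-picker version this tutorial was written against. In more recent releases, `cancelled` was renamed `canceled`, and the picked files moved into an `assets` array. The following is a minimal sketch of a helper that accepts either shape. `getPickedImage` is hypothetical and not part of expo-image-picker or the tutorial:

```javascript
// Hypothetical helper: reads the picked image from either result shape.
// Older shape: { cancelled, uri, base64 }
// Newer shape: { canceled, assets: [{ uri, base64 }] }
function getPickedImage(result) {
  if (!result || result.canceled || result.cancelled) return null;
  const asset = Array.isArray(result.assets) ? result.assets[0] : result;
  if (!asset || !asset.uri) return null;
  return { uri: asset.uri, base64: asset.base64 ?? null };
}
```

In `pickImage`, you could write `const picked = getPickedImage(result); if (picked) { setImage(picked.uri); ... }` and pass `picked.base64` to `onSubmit`.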

Conclusion

Here is the source code for this article.

In this article, you learned how to use Google Cloud Vision in your project and implement text detection. Beyond data entry, our brand-new OCR app can be useful in several other situations.

If you encountered any difficulty, I encourage you to play with and deconstruct the code so that you can fully understand its inner workings.

Thank you so much for making it to the end! Happy coding!

