Artificial intelligence (AI) has opened up new creative possibilities: image generation models can now turn simple text prompts and descriptions into striking works of art. Artists, designers, and developers alike have access to a wealth of AI tools to enhance traditional workflows.

In this article, we will build a custom AI image generator application that works offline. The web app will be built with React, and the core of the project will be powered by the Hugging Face Diffusers library, a toolkit for diffusion models. Hugging Face offers tools and models to develop and deploy machine learning solutions, focusing on natural language processing (NLP). It also has a large open source community of pre-trained models, libraries, and datasets.

We’ll pair these tools with Stable Diffusion XL, one of the most advanced and flexible text-to-image generation models currently available.

A significant part of this article focuses on the practical side of running these kinds of models. Two main approaches will be compared: performing inference locally inside an application environment versus using a managed Hugging Face Inference Endpoint. With this comparison, we’ll see the trade-offs in performance, scalability, complexity, and cost.

Modern AI image creation is led by a class of models known as diffusion models. Picture an image being gradually turned into static noise, with a little more noise added at each step. Diffusion models learn to do the opposite: they start from random noise and remove it step by step, building up structure until a visually recognizable image that matches the text prompt appears. This computation can be intensive, but it allows anyone to create detailed, realistic images.
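To make the noising and denoising idea concrete, here is a toy sketch in plain Python. It assumes a DDPM-style linear noise schedule (the schedule values and function names are illustrative, not taken from SDXL) and it cheats by reusing the exact noise that was added. A real diffusion model has to predict that noise with a neural network guided by the text prompt.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule."""
    alpha_bars = []
    running = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, noise, alpha_bar):
    """Forward process: blend the clean signal with Gaussian noise."""
    return [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * n
            for x, n in zip(x0, noise)]


def remove_noise(xt, predicted_noise, alpha_bar):
    """Invert the blend, given an estimate of the noise that was added."""
    return [(x - math.sqrt(1.0 - alpha_bar) * n) / math.sqrt(alpha_bar)
            for x, n in zip(xt, predicted_noise)]


rng = random.Random(0)
pixels = [0.1, 0.5, 0.9, 0.3]  # a tiny stand-in "image"
noise = [rng.gauss(0.0, 1.0) for _ in pixels]
alpha_bars = make_alpha_bars(1000)

# Noise the pixels halfway through the schedule, then undo it.
noisy = add_noise(pixels, noise, alpha_bars[500])
restored = remove_noise(noisy, noise, alpha_bars[500])
```

Because the true noise is known here, `restored` matches `pixels` up to floating-point error. The hard part that a trained model solves is estimating that noise from the noisy input alone.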

Diffusion models can be complex and challenging to work with, but fortunately, the Hugging Face Diffusers library simplifies this process significantly. Diffusers offers pre-trained pipelines that can be used to create images using just a few lines of code, along with precise control over the diffusion process for complex use cases. It protects us from most of the complexity of the process, thus allowing us to spend more time creating our apps.

In this project, we will use stabilityai/stable-diffusion-xl-base-1.0. Stable Diffusion XL (SDXL) is a huge leap for text-to-image generation, thanks to its capability of generating higher quality, more realistic, and more visually stunning pictures than models of the same class. It also has a superior capacity for handling prompts and can generate more sophisticated outputs.
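As a rough sketch of what "a few lines of code" can look like for this model, the snippet below loads the SDXL base pipeline with Diffusers and generates a single image. It is not necessarily how the project's backend is written. The function name, the step count, and the choice of half precision on a CUDA GPU are assumptions made for illustration.

```python
def generate_image(prompt, num_inference_steps=30):
    """Generate one PIL image from a text prompt with SDXL (sketch)."""
    # Imported lazily so this module can be loaded before the heavy
    # dependencies (torch, diffusers) are installed.
    import torch
    from diffusers import DiffusionPipeline

    pipe = DiffusionPipeline.from_pretrained(
        "stabilityai/stable-diffusion-xl-base-1.0",
        torch_dtype=torch.float16,  # assumption: half precision on a GPU
        variant="fp16",
        use_safetensors=True,
    )
    pipe.to("cuda")  # assumption: an NVIDIA GPU is available
    result = pipe(prompt=prompt, num_inference_steps=num_inference_steps)
    return result.images[0]


# Example usage (the first call downloads several GB of model weights):
# image = generate_image("An astronaut riding a green horse")
# image.save("astronaut.png")
```

In a long-running server you would normally load the pipeline once at startup and reuse it, rather than reloading it on every call as this compact sketch does.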

You can check out the entire library of text-to-image models on the Text-to-Image page, under the filters section.

Our image generator app will have a full-stack structure that uses local inference. This means that, once the model files have been downloaded, the app will run offline on our machine and won’t require internet access or calls to an external API.

The frontend will be a React web app that runs in the browser, and it will make calls to our Flask backend server, which will be responsible for running the AI inference and logic locally using the Hugging Face Diffusers library.
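The backend side of this arrangement could be sketched as follows. This is a minimal illustration, not the code from the project repository: the `/generate` route name, the JSON field names, and the `create_app` factory are all hypothetical, and `generate_fn` stands in for whatever function runs the Diffusers pipeline and returns a PIL image.

```python
import base64
import io


def create_app(generate_fn):
    """Build a Flask app whose /generate route calls generate_fn(prompt)."""
    from flask import Flask, jsonify, request  # lazy import of Flask

    app = Flask(__name__)

    @app.post("/generate")  # hypothetical route name
    def generate():
        payload = request.get_json(silent=True) or {}
        prompt = str(payload.get("prompt", "")).strip()
        if not prompt:
            return jsonify({"error": "A prompt is required."}), 400
        try:
            image = generate_fn(prompt)  # expected to return a PIL image
        except Exception as exc:
            # Surface inference failures to the frontend as JSON.
            return jsonify({"error": str(exc)}), 500
        buffer = io.BytesIO()
        image.save(buffer, format="PNG")
        encoded = base64.b64encode(buffer.getvalue()).decode("ascii")
        return jsonify({"image": encoded})

    return app
```

Passing the generation function in as an argument keeps the route easy to test with a fake function. Since the React dev server and Flask usually run on different ports, a real setup would likely also need CORS handling or a dev-server proxy.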

AI inference is the process of using a trained AI model to make predictions or decisions based on new input data that it has not seen before. It comes after training, the stage in which the model learns patterns by analyzing data.

When the model can use what it has learned, this is referred to as “AI inference.” For example, a model can be trained to tell whether it’s looking at a picture of a cat or a dog. In more advanced use cases, it can be trained to perform text autocompletion, offer recommendations for a search engine, and even control self-driving cars.

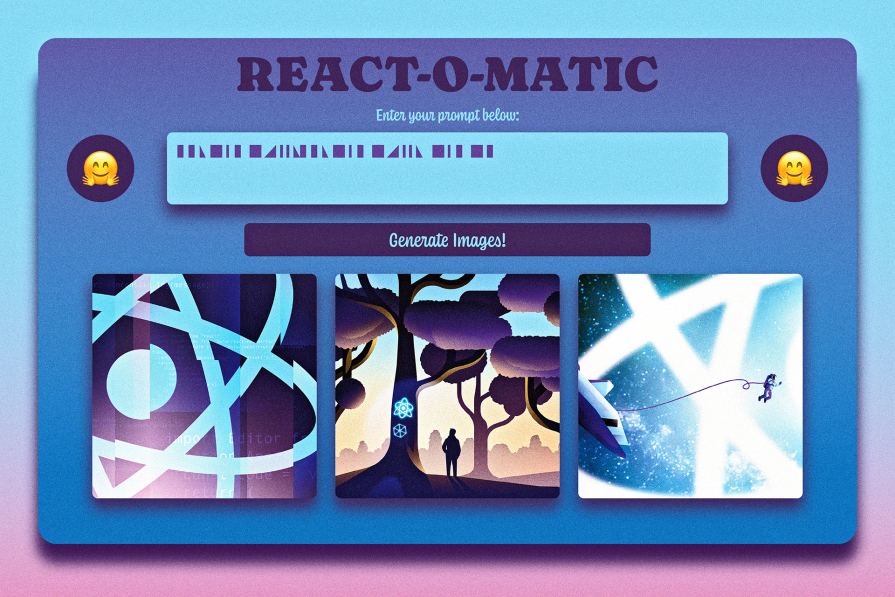

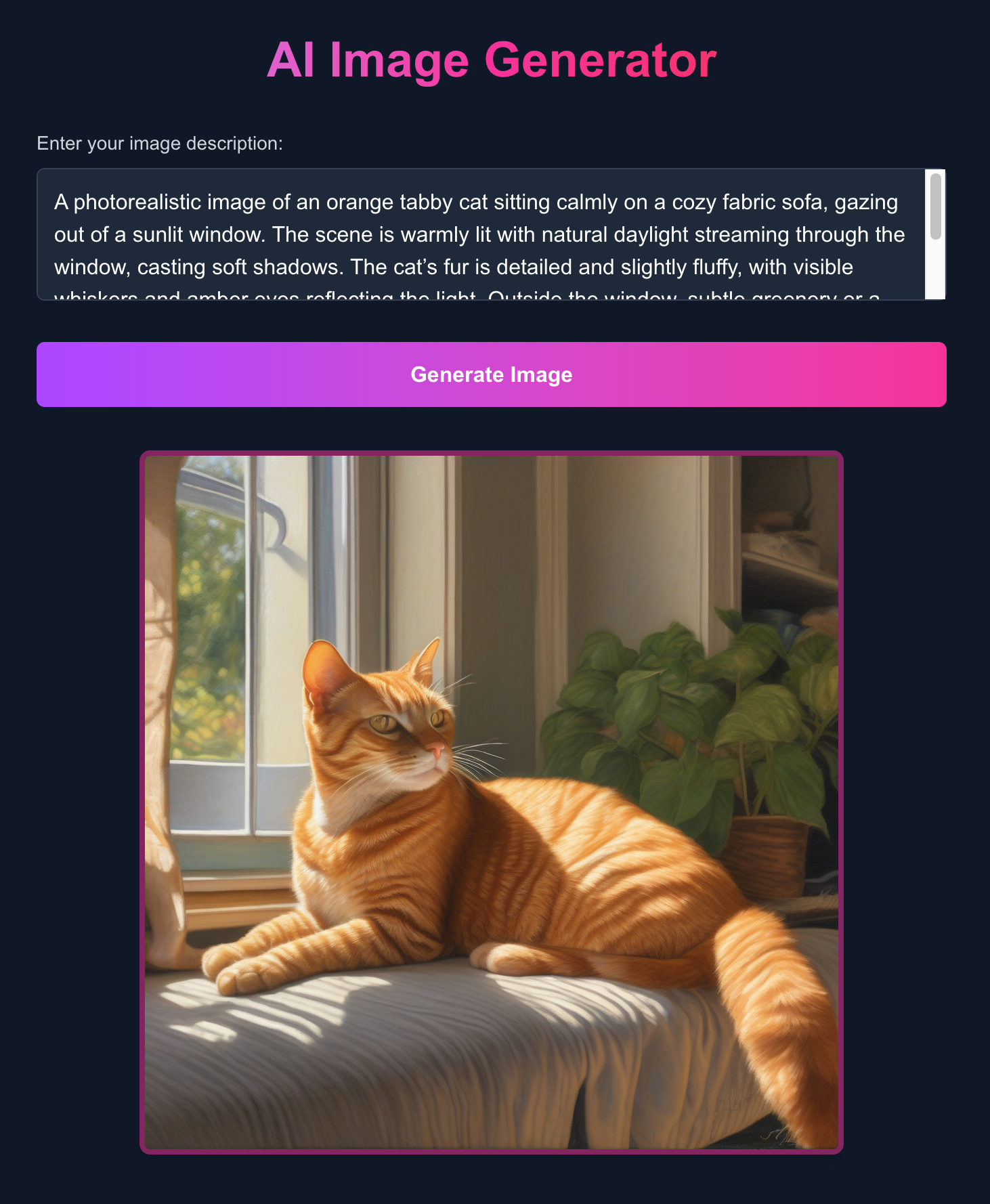

This is what the design for our AI image generator app will look like:

Our project will use the following technologies:

<img> tag to display the newly generated picture for the user

For better UX, the React frontend displays a loading indicator that remains as long as the backend is performing its inference, and error messages if anything goes wrong.

You can clone or download the application from this GitHub repository. The README file contains instructions on how to set up the Flask backend and React frontend, which are straightforward and shouldn’t take very long.

When you complete the setup, you need to run the backend and frontend servers in different tabs or windows on the command line with these commands:

    # Run the Flask backend
    python app.py

    # Run the Next.js frontend
    npm run dev

When you call the Diffusers library for the first time, it will automatically download the model files from the Hugging Face Hub. These models will then be stored in a local cache directory on your machine.

On macOS/Linux, the default location for this cache is in your home directory under ~/.cache/huggingface/hub. Inside that directory, you will find more subdirectories that are related to the models you have downloaded. For this model, the path might look something like this:

~/.cache/huggingface/hub/models--stabilityai--stable-diffusion-xl-base-1.0/.

The library will manage this cache automatically, so you don’t need to interact with it directly. If you have downloaded other models using Hugging Face libraries, they will also be stored in this cache.
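If you are curious where a given model ends up, the folder naming shown above can be reproduced with a small helper. This sketch assumes the default layout described in this section plus the `HF_HUB_CACHE` and `HF_HOME` environment variables, which can relocate the cache. The function names are made up for this example, and other overrides may apply on your system.

```python
import os
from pathlib import Path


def hub_cache_dir(env=None):
    """Return the directory where Hugging Face Hub downloads are cached."""
    env = os.environ if env is None else env
    if env.get("HF_HUB_CACHE"):
        return Path(env["HF_HUB_CACHE"]).expanduser()
    if env.get("HF_HOME"):
        return Path(env["HF_HOME"]).expanduser() / "hub"
    return Path.home() / ".cache" / "huggingface" / "hub"


def model_cache_dir(repo_id, env=None):
    """Map a repo id such as 'org/name' to its 'models--org--name' folder."""
    return hub_cache_dir(env) / ("models--" + repo_id.replace("/", "--"))


sdxl_dir = model_cache_dir("stabilityai/stable-diffusion-xl-base-1.0")
```

Checking whether `sdxl_dir` exists is a quick way to tell if the first-run download has already happened.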

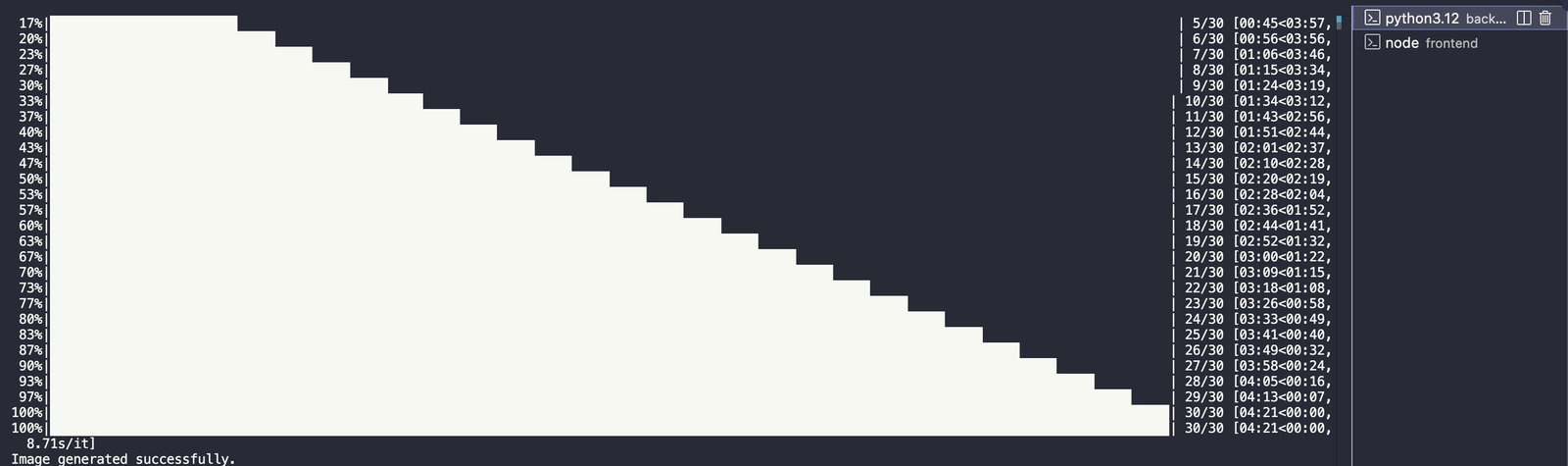

When you generate an image with the application, you can follow the progress of the generation in the terminal. For reference, an M1 MacBook Pro takes between 2 and 12 minutes to generate an image. A high-spec computer will likely complete the process in seconds, especially if it has an NVIDIA GPU with CUDA.
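Since generation time depends so heavily on the hardware, a backend will typically pick the fastest available device at startup. A minimal sketch of that choice with PyTorch is shown below. The helper names are hypothetical, and the preference order (CUDA, then Apple's MPS backend, then CPU) is an assumption.

```python
def choose_device(cuda_available, mps_available):
    """Pick the fastest backend: NVIDIA CUDA, then Apple MPS, then CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    return "cpu"


def detect_device():
    """Query PyTorch for the available backends (needs torch installed)."""
    import torch  # lazy import so choose_device can be used without torch

    return choose_device(
        torch.cuda.is_available(),
        torch.backends.mps.is_available(),
    )
```

The returned string can be passed to the pipeline's `.to(...)` call. On an M1 MacBook Pro this would select `"mps"`, and on a machine with an NVIDIA GPU it would select `"cuda"`.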

One of the most essential factors when deploying an AI model for inference is where the computation takes place. In our React app example, we had two choices:

Let’s see how both approaches compare!

When a diffusion model runs locally, the computations are performed on the user’s own machine or on a server that you control directly, rather than on infrastructure owned by a third party such as a shared hosting provider.

The pros to this approach include:

The cons to this process include:

Unlike local inference, Hugging Face Inference Endpoints are managed online on Hugging Face infrastructure. Models can be accessed through an easy-to-use API call.
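For comparison with the local approach, calling a deployed endpoint might look like the sketch below, which uses only the Python standard library. The endpoint URL and token are placeholders you would copy from your own endpoint's page. The `{"inputs": ...}` payload and the `Accept: image/png` header reflect a common text-to-image configuration but are assumptions here, so check what your endpoint actually expects.

```python
import json
import urllib.request


def build_endpoint_request(endpoint_url, token, prompt):
    """Prepare an authenticated POST request carrying the text prompt."""
    body = json.dumps({"inputs": prompt}).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            "Accept": "image/png",  # assumption: endpoint returns raw PNG
        },
        method="POST",
    )


def fetch_image_bytes(request, timeout=120):
    """Send the request and return the response body (the image bytes)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()


# Example usage with placeholder values:
# req = build_endpoint_request("https://<your-endpoint-url>", "<token>", "a red fox")
# png_bytes = fetch_image_bytes(req)
```

The generous timeout accounts for cold starts, when the endpoint may need to spin up before it can answer.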

The pros to using this approach include:

The cons to this approach include:

When it comes to setup, local inference is the more technical option, since you have to configure both the hardware and the software stack yourself. Hugging Face Inference Endpoints are much simpler to set up: the work mostly consists of selecting and configuring a model through the platform.

As for cost, local inference is expensive upfront because of the hardware, but it can have lower ongoing costs if usage is extremely high and the hardware is performant. Inference Endpoints are cheap upfront, but their costs accumulate with use, which can be easier to manage when usage is variable.
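The cost trade-off can be turned into a quick break-even estimate. The sketch below is a back-of-the-envelope calculation only: every number in it is a made-up placeholder rather than real pricing, and it ignores factors such as maintenance time and hardware resale value.

```python
def break_even_hours(hardware_cost, local_cost_per_hour, endpoint_cost_per_hour):
    """Hours of use after which buying hardware beats paying per hour.

    Returns None when the endpoint is not more expensive per hour, in
    which case local hardware never pays for itself on cost alone.
    """
    saving_per_hour = endpoint_cost_per_hour - local_cost_per_hour
    if saving_per_hour <= 0:
        return None
    return hardware_cost / saving_per_hour


# Placeholder figures for illustration only, not real prices.
hours = break_even_hours(
    hardware_cost=2000.0,         # one-off GPU workstation purchase
    local_cost_per_hour=0.25,     # electricity and upkeep while running
    endpoint_cost_per_hour=1.25,  # managed endpoint billed per hour
)
```

With these placeholder numbers, the hardware pays for itself after 2,000 hours of inference. An app that generates images only occasionally would take a very long time to reach that point.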

Local performance depends heavily on your hardware. Inference Endpoints run on optimized hardware and, once past cold starts, deliver more consistent performance; the trade-off is the added latency of network communication.

Choosing between Hugging Face Inference Endpoints and local inference mostly depends on the type of requirements and constraints of your project:

Use local inference if you:

Choose Hugging Face Inference Endpoints if you:

When an image is generated on the backend, it needs to be transmitted efficiently to the frontend React app. The biggest concern is transferring binary image data over HTTP.

Two techniques can be used to solve this:

One of the best aspects of using the Base64 encoding method is that it integrates the image directly into the API’s JSON response as it converts the binary data into a string of text. This can simplify the transfer because the image data is bundled along with other response data, so it can be embedded directly into an image tag as a data URL.

However, a big drawback to this approach is the roughly 33% increase in data size caused by the encoding process. Very large or multiple images can significantly increase the payload, slowing down network transfer and even impacting frontend speed, as the browser is forced to process much more data.
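The size overhead follows directly from how Base64 works: every 3 bytes of binary data become 4 text characters. The short sketch below builds a data URL and measures that ratio on stand-in bytes. The helper names are illustrative, and a real backend would pass in the PNG bytes of the generated image.

```python
import base64


def to_data_url(image_bytes, mime_type="image/png"):
    """Encode raw image bytes as a data URL usable in an <img> src."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def base64_overhead(image_bytes):
    """Ratio of the Base64-encoded size to the original binary size."""
    encoded_len = len(base64.b64encode(image_bytes))
    return encoded_len / len(image_bytes)


sample = bytes(range(256)) * 12  # 3,072 bytes of stand-in binary data
ratio = base64_overhead(sample)  # 4,096 encoded bytes / 3,072 raw bytes
```

Here `ratio` comes out at 4/3, which is the roughly 33% increase. For a multi-megabyte image, that extra third is added to every JSON response that embeds it.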

On the other hand, the temporary URL method has the backend save the generated image file and return a short-lived URL pointing to its location. The frontend can then use this URL to retrieve the image independently.

This approach keeps the initial API response payload small and takes advantage of the browser’s efficient image loading. Even though it adds backend overhead for handling storage and generating URLs, it generally offers better performance and scalability for larger images or larger numbers of images, as the main data channel is not filled with large image content.
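One way to implement the temporary URL method is to write each image to a dedicated folder under an unguessable name that also records its expiry time, then delete expired files periodically. The sketch below illustrates the idea with the standard library. The `/images/` URL prefix, the five-minute lifetime, and the function names are assumptions, and the folder is assumed to contain only files created by `save_temp_image`.

```python
import secrets
import time
from pathlib import Path


def save_temp_image(image_bytes, directory, ttl_seconds=300, now=None):
    """Store the image under a random name and return its URL path."""
    now = time.time() if now is None else now
    expires_at = int(now + ttl_seconds)
    # The expiry timestamp is encoded in the file name, followed by a
    # random token so that URLs cannot be guessed.
    name = f"{expires_at}-{secrets.token_urlsafe(16)}.png"
    directory = Path(directory)
    directory.mkdir(parents=True, exist_ok=True)
    (directory / name).write_bytes(image_bytes)
    return f"/images/{name}"  # hypothetical URL prefix served by the backend


def purge_expired(directory, now=None):
    """Delete images whose expiry time has passed; return how many."""
    now = time.time() if now is None else now
    removed = 0
    for path in Path(directory).glob("*.png"):
        expires_at = int(path.name.split("-", 1)[0])
        if expires_at <= now:
            path.unlink()
            removed += 1
    return removed
```

The backend would return the URL path in its JSON response and serve the folder as static files, while `purge_expired` runs on a timer or before each new save.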

Choosing between these methods mainly comes down to anticipating image sizes and balancing backend complexity with frontend responsiveness.

One of the largest issues with building an AI image generation app, especially when performing inference on a local machine or a self-hosted server, is handling the high computational demands.

For example, Stable Diffusion XL and similar models need fast, high-capacity GPUs, which can be very costly. They might also require special setup and technical knowledge, which the average non-technical person might not have.

Regardless, with any inference method, good error handling is crucial. This means using user prompt validation to limit inappropriate or unsafe requests and handling potential failures.
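A first line of defense is to validate the prompt before it ever reaches the model. The following sketch shows the general shape of such a check. The length limit and the tiny blocklist are placeholders, and real content filtering needs far more than a word list.

```python
MAX_PROMPT_LENGTH = 500           # hypothetical limit
BLOCKED_TERMS = {"gore", "nsfw"}  # placeholder blocklist


def validate_prompt(raw_prompt):
    """Return (clean_prompt, error); exactly one of the two is None."""
    if not isinstance(raw_prompt, str):
        return None, "Prompt must be text."
    # Collapse runs of whitespace and trim both ends.
    prompt = " ".join(raw_prompt.split())
    if not prompt:
        return None, "Prompt cannot be empty."
    if len(prompt) > MAX_PROMPT_LENGTH:
        return None, f"Prompt must be {MAX_PROMPT_LENGTH} characters or fewer."
    words = {word.strip(".,!?").lower() for word in prompt.split()}
    if words & BLOCKED_TERMS:
        return None, "Prompt contains blocked terms."
    return prompt, None
```

Returning an error message instead of raising lets the API route turn it straight into a 400 response that the frontend can display.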

Beyond the basic implementation, performance matters for a smooth user experience because image generation can be time-consuming. Strategies such as showing loading screens, queueing requests in the backend, optimizing the model, or caching results can help with latency problems.
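Queueing and caching can be combined in a small worker that processes one request at a time, so that simultaneous requests do not compete for the same GPU. The sketch below is one possible shape for this, with hypothetical names throughout. Note that caching by prompt means repeated prompts return the same image, which may not be what you want if users expect a fresh variation each time, and the cache here grows without bound.

```python
import queue
import threading


class GenerationQueue:
    """Run generation jobs one at a time on a background thread."""

    def __init__(self, generate_fn):
        self._generate_fn = generate_fn
        self._jobs = queue.Queue()
        self._cache = {}  # prompt -> result (unbounded in this sketch)
        worker = threading.Thread(target=self._run, daemon=True)
        worker.start()

    def submit(self, prompt):
        """Queue a prompt and return a job dict that receives the result."""
        job = {
            "prompt": prompt,
            "done": threading.Event(),
            "result": None,
            "error": None,
        }
        self._jobs.put(job)
        return job

    def _run(self):
        while True:
            job = self._jobs.get()
            try:
                if job["prompt"] not in self._cache:
                    self._cache[job["prompt"]] = self._generate_fn(job["prompt"])
                job["result"] = self._cache[job["prompt"]]
            except Exception as exc:
                job["error"] = str(exc)
            finally:
                job["done"].set()  # wake up whoever is waiting on the job
```

A request handler would call `submit(prompt)`, wait on `job["done"]` (or poll it from a status route), and then read `job["result"]` or `job["error"]`.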

Security should also be taken into account. Storing sensitive API keys securely when using managed endpoints, sanitizing user input to avoid vulnerabilities, and potentially applying content moderation to generated images are all important measures for creating a secure and responsible application.

The world of generative AI is evolving fast, and the best way to understand what it can and can’t do is by building projects to test the wide variety of tools available.

Use this guide as a reference point for building a React-based AI image generation app. The project we built is good enough to be a basic minimum viable product (MVP). You can experiment with more features using the other text-to-image models on the Hugging Face Hub. There’s no limit to what you can create with all the tools we have at our disposal today!

