In the past three years, AI tools have become a key part of many digital products. You can see them in new chat applications like Claude or ChatGPT, as well as in traditional applications like Google Sheets or Photoshop.

Because of the nature of large language models (LLMs), including their hallucinations (mistakes), their added costs, and their slow response times (reminiscent of old computers), designers have had to innovate, add new interactions, and adapt user interfaces. The goal is to make AI tools and features intuitive so the interaction between the user and the machine remains smooth.

In this article, I show different AI interactions to help you design more intuitive interfaces for your product, no matter what role you play in your organization.

To get an accurate answer from an AI model, it’s critical to give it details and context. This helps the AI model understand and respond clearly.

Because the technology is new, many users aren’t familiar with how these models work. They think that with short prompts and a few words, the AI model will give them the perfect answer.

So, they keep sending prompts until they get the results they want. But it doesn’t have to be like that, especially for applications with a specific focus.

When the user asks a question, the AI model can respond by asking specific questions before answering.

This makes the answer more accurate and also saves the company money, because each call to the AI model has a cost.

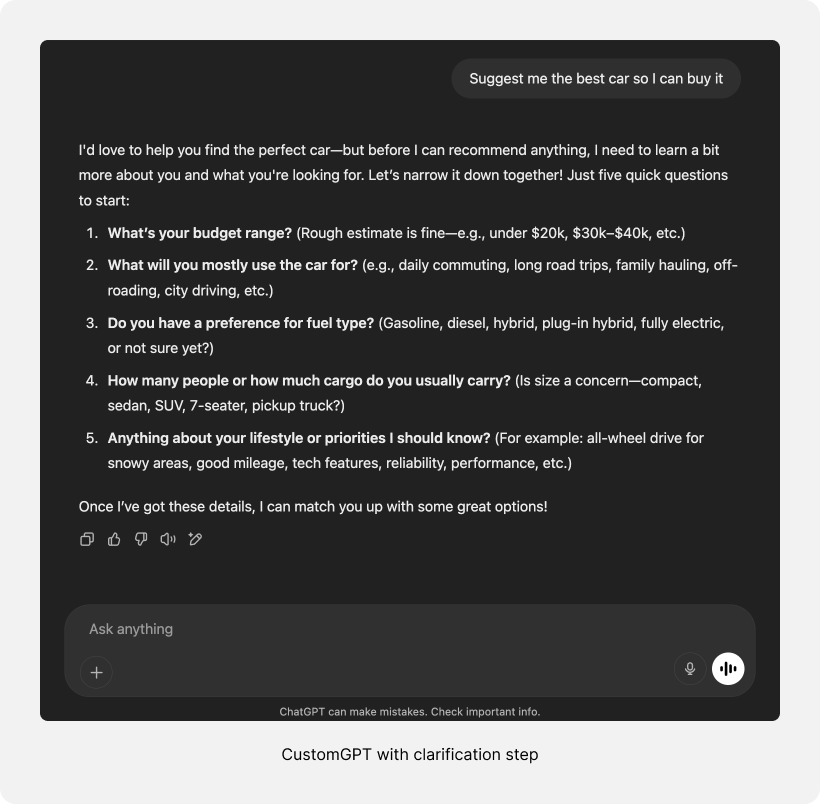

Let’s take a chatbot for buying cars as an example. The AI model needs to know the user’s needs and budget to give accurate results. If the important details are missing, the AI model can’t answer correctly.

So if a user asks, “Suggest the best car I can buy,” the LLM can respond with clarifying questions, like:

“Hey, I’m happy to help you, but before we continue, I would like to know some more details so I can accurately suggest your dream car.”

With the user’s answers, the AI model can weigh all the information and suggest the best models. It’s similar to how a car dealer asks questions when talking with a buyer:

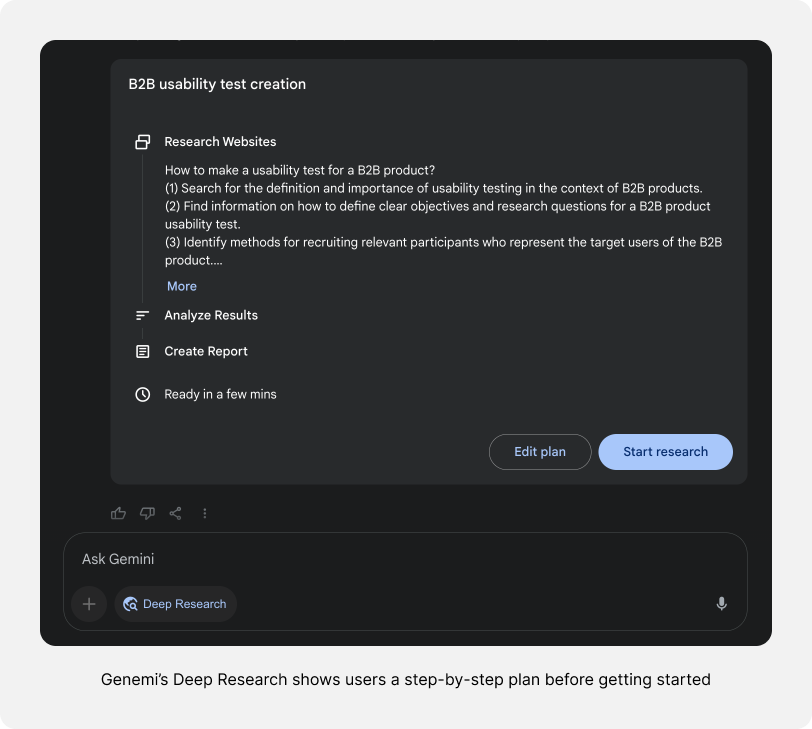
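One way to apply this in an application with a specific focus is to check for the key details before calling the model at all. The sketch below is only an illustration: the `REQUIRED_DETAILS` fields, the question wording, and the `next_step` function are hypothetical names, not part of any product mentioned here.

```python
# Hypothetical details a car-buying assistant needs before it can recommend anything.
REQUIRED_DETAILS = {
    "needs": "What will you mainly use the car for?",
    "budget": "What is your budget?",
}


def next_step(details):
    """Return clarifying questions while details are missing, otherwise a model request."""
    missing = [question for key, question in REQUIRED_DETAILS.items() if not details.get(key)]
    if missing:
        # No model call yet: asking first avoids paying for an answer built on too little context.
        return {"action": "ask", "questions": missing}
    context = ", ".join(f"{key}: {value}" for key, value in details.items())
    return {"action": "call_model", "prompt": f"Suggest a car for this buyer. {context}"}
```

Calling `next_step({"budget": "20000"})` returns the question about the user’s needs, and the model is only called once both details are present.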

Google Gemini’s deep research feature uses a similar technique.

Before starting, it shows the plan and asks for approval or modifications. This step is crucial because the research can take five to ten minutes, and if the results are inaccurate, the user’s time is wasted:

Unlike traditional software, AI models don’t always provide consistent answers.

This is because of how AI models work: they generate an answer by sampling from probabilities rather than looking up a fixed result, so the output can vary from one run to the next.

We can send the same prompt to the same AI model and the information will be similar, but it won’t be exactly the same answer. Apart from that, sometimes the AI model hallucinates and gives a wrong answer.

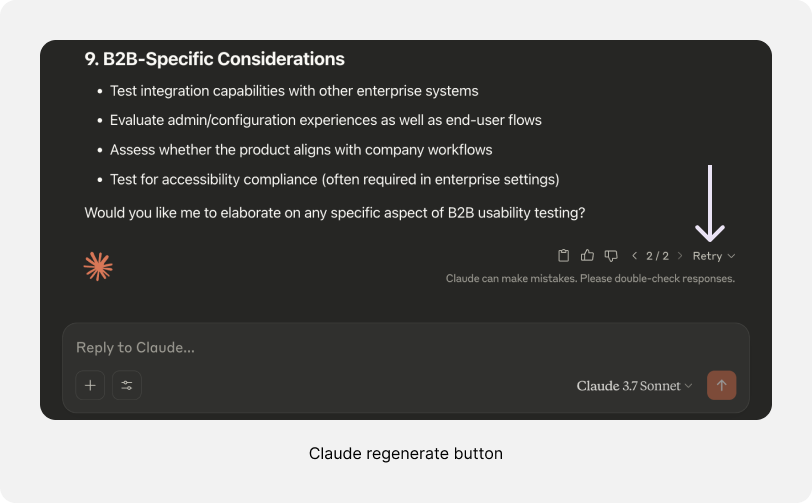

Because of that, it’s important to add a regenerate button to the interface. If the user isn’t satisfied with the result, they can click it and quickly get another answer without having to type the prompt again:

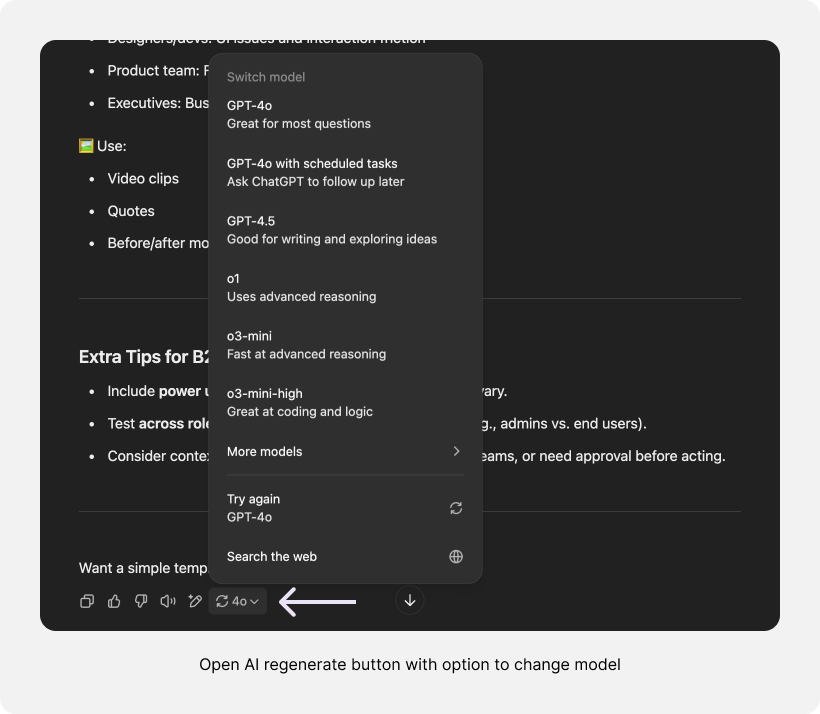

You can also take this further. In ChatGPT, for example, the user can regenerate the answer to the same prompt with a different model.

If the answer isn’t good and the user thinks a more powerful model will be better for the task, they can easily click to generate the response with a different one:
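Behind a regenerate button, the application mainly needs to remember the last prompt and resend it. Here is a minimal sketch, assuming a `call_model(prompt, model)` function that stands in for your real model call. The `Conversation` class and the model names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Conversation:
    call_model: Callable[[str, str], str]  # stand-in: (prompt, model name) -> answer
    model: str = "default-model"  # hypothetical model name
    last_prompt: Optional[str] = None
    answers: List[str] = field(default_factory=list)

    def send(self, prompt: str) -> str:
        self.last_prompt = prompt  # remembered so the user never has to retype it
        answer = self.call_model(prompt, self.model)
        self.answers.append(answer)  # earlier versions stay available to the interface
        return answer

    def regenerate(self, model: Optional[str] = None) -> str:
        """Re-run the last prompt, optionally with a different model."""
        if self.last_prompt is None:
            raise ValueError("Nothing to regenerate yet")
        if model is not None:
            self.model = model
        return self.send(self.last_prompt)
```

Keeping every answer in `answers` lets the interface offer a way to flip between versions instead of replacing the previous one.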

Writing prompts isn’t a simple task. It takes time, especially when the user crafts them well.

The problem begins when users don’t save their prompts after sending them to the LLM. If they want to reuse the same prompt later, they need to find it or rewrite it from scratch, which is tedious and time-consuming:

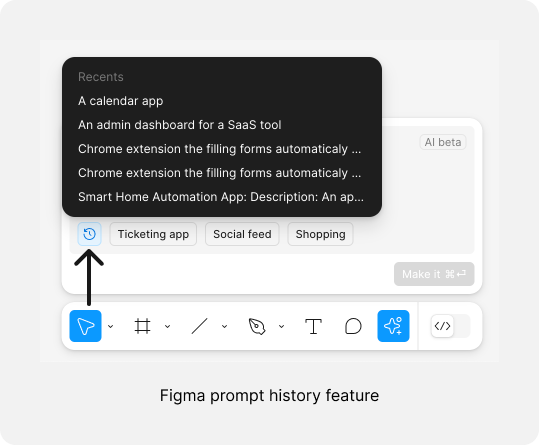

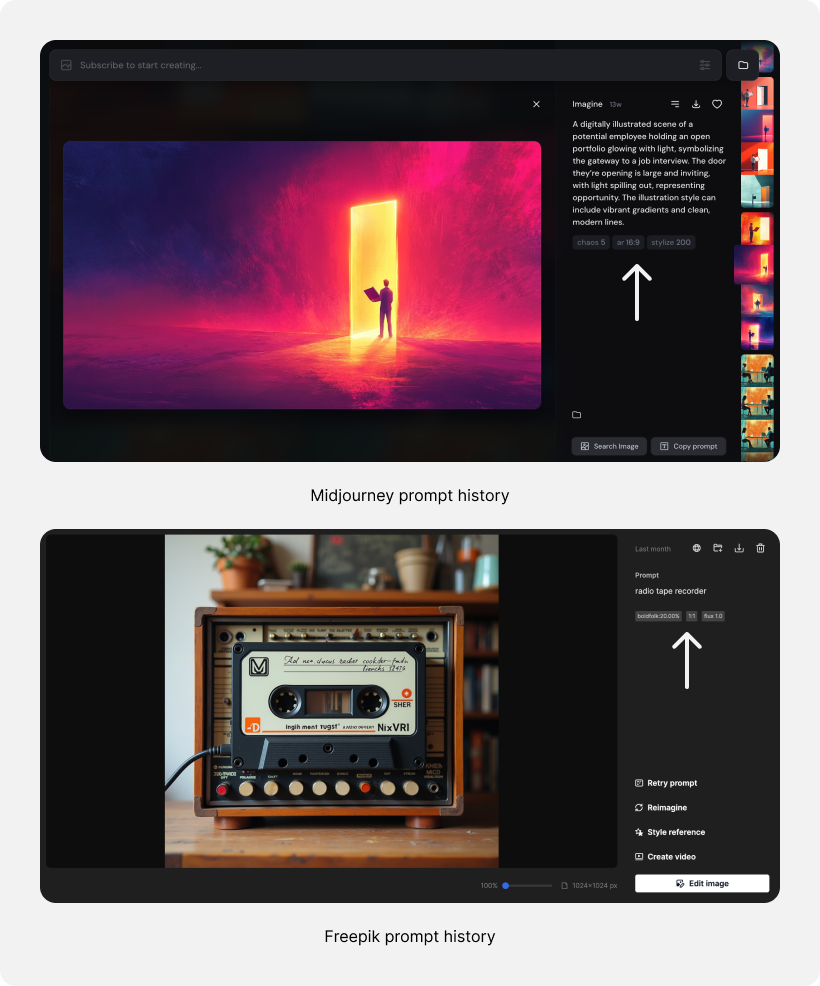

To address this, many AI tools provide a feature where users can access their old prompts. This way, if a user wants to use a prompt they didn’t save, they can easily find it in the interface.

This feature can be designed similarly to Figma, which saves the latest prompt, or like Freepik and Midjourney, which allow users to reuse the exact prompt for generating an image easily:

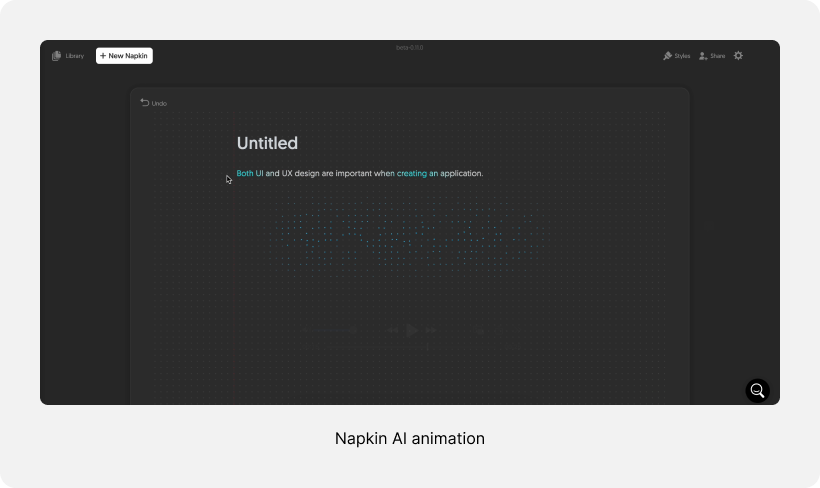
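A prompt history can be as simple as a list that records every prompt the user sends. The sketch below is one possible shape, not how any of the tools above implement it. The `PromptHistory` class and its 50-item default are assumptions for illustration.

```python
class PromptHistory:
    """Keeps recent prompts, newest first, without duplicates."""

    def __init__(self, max_items=50):
        self.max_items = max_items
        self._prompts = []

    def record(self, prompt):
        prompt = prompt.strip()
        if not prompt:
            return
        if prompt in self._prompts:
            self._prompts.remove(prompt)  # a reused prompt moves back to the top
        self._prompts.insert(0, prompt)
        del self._prompts[self.max_items:]  # drop the oldest entries

    def search(self, text=""):
        """Return saved prompts that contain the text (case-insensitive)."""
        needle = text.lower()
        return [p for p in self._prompts if needle in p.lower()]
```

Calling `record` automatically on every send means users can find a prompt later even if they never thought to save it.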

AI systems can take a while to generate answers. To improve the user experience, you can show a short animation of the AI process. This shows your users that the system is working on the task.

Simple animations, like the word “thinking” followed by three dots or a loader, can be enough, but adding more advanced interactions can improve the experience even more. For example, Napkin shows great animations while users wait for the product to generate images:

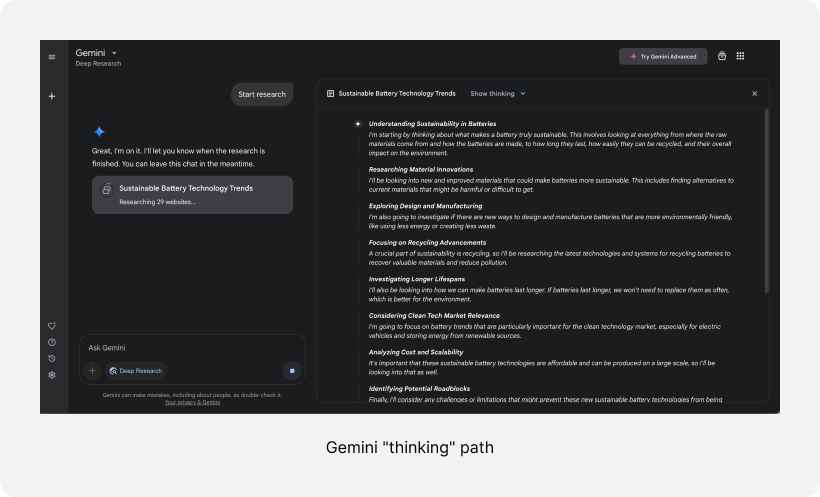

For complex tasks, you can show users what the LLM is doing while it works. This is especially useful with thinking models like Gemini 2.5 or OpenAI o1, which perform many steps before showing the user an answer.

This is particularly important for deep research features like those in Gemini and ChatGPT, where the user can wait five minutes or more to get the final answer:

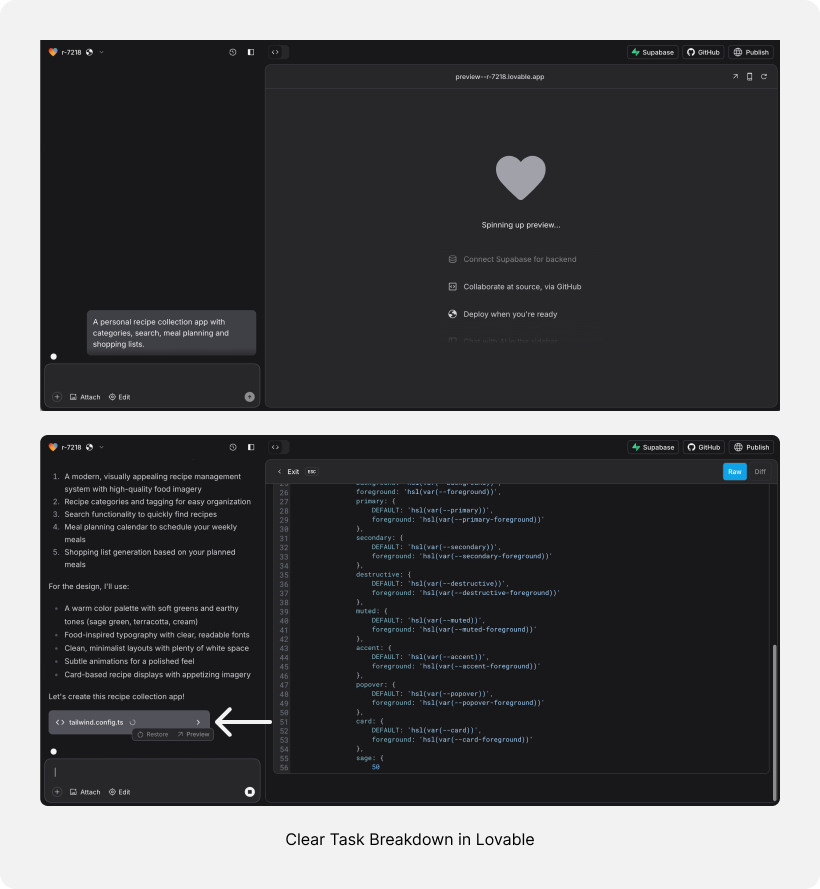

You can also see this pattern in products like Lovable, which creates websites and applications. When the user requests a task, Lovable shows them exactly what it is doing, like generating the header, the CSS, etc.:

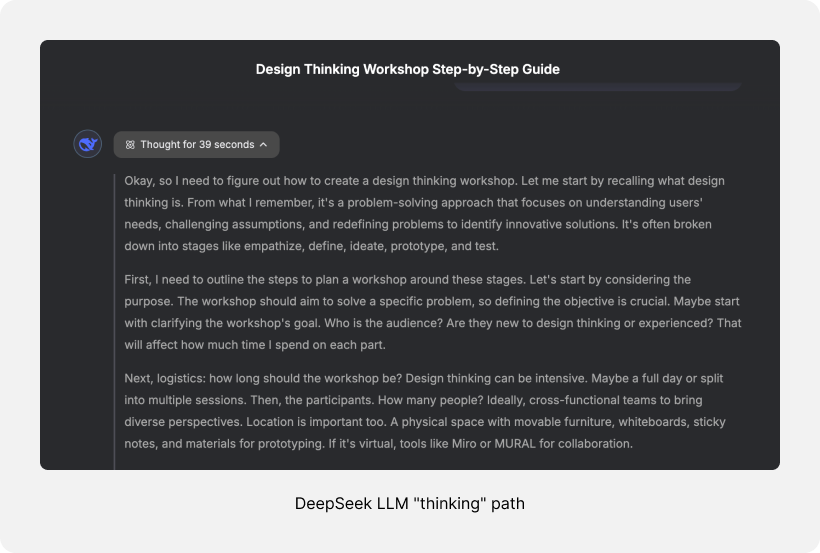
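To surface this kind of step-by-step progress, the task can report a status message before each step starts. The following is a rough sketch of that idea using a Python generator. The `run_with_progress` function and the step labels are hypothetical and not taken from Lovable or any other product.

```python
def run_with_progress(steps):
    """Run (label, function) pairs in order, yielding a status line for the interface."""
    total = len(steps)
    for number, (label, work) in enumerate(steps, start=1):
        yield f"[{number}/{total}] {label}..."  # displayed while this step runs
        work()
    yield "Done"
```

The interface can loop over the generator and update the status text each time a new message arrives, for example with steps labeled “Generating the header” and “Writing the CSS”.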

After the task is finished, you can also show the user the process. This helps them understand what the LLM did to reach its answer. For example, DeepSeek R1 shows its full reasoning so the user understands how the model got to the final answer:

If the user needs to use the AI tool again and again for the same task, give them the option to configure default parameters and values. It can be done in different ways.

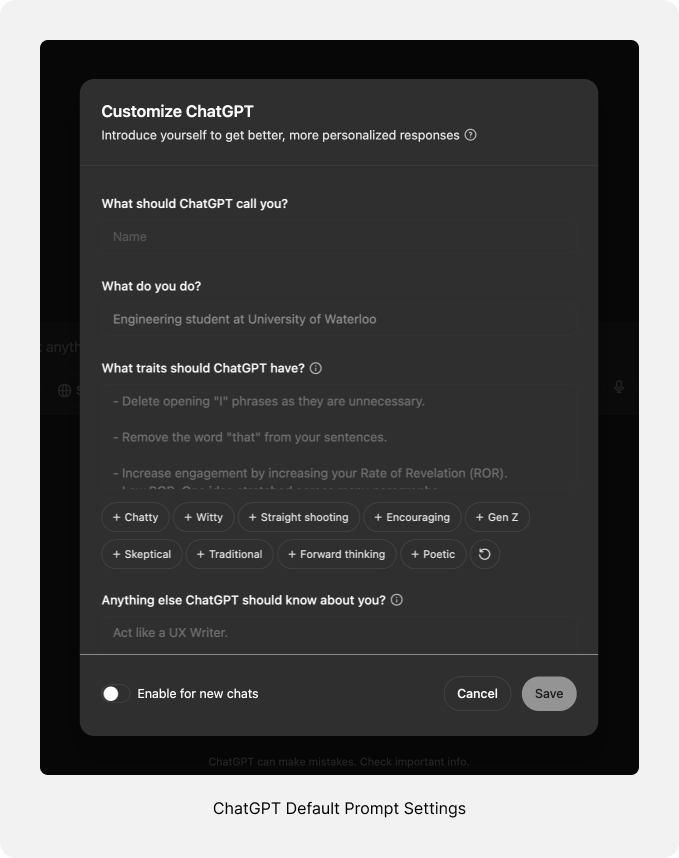

You can let the user set default information. For example, in ChatGPT, users can set details about themselves, the tone of voice they want the AI to use, some basic writing tips, or any other advanced settings they prefer. Another example is Claude, which allows users to set default parameters for the writing style they want the chat to use:

Another option is to give the user the ability to train a model for specific needs. Although this process is very technical, some applications have started to simplify it for the user. In Freepik, for example, the user can train the AI model on their own photos, and in ElevenLabs, users can fine-tune a model on their own voice:

You could also build a simple task agent within the tool for specific tasks. The user can provide all the relevant information, and the AI model will focus on that task.

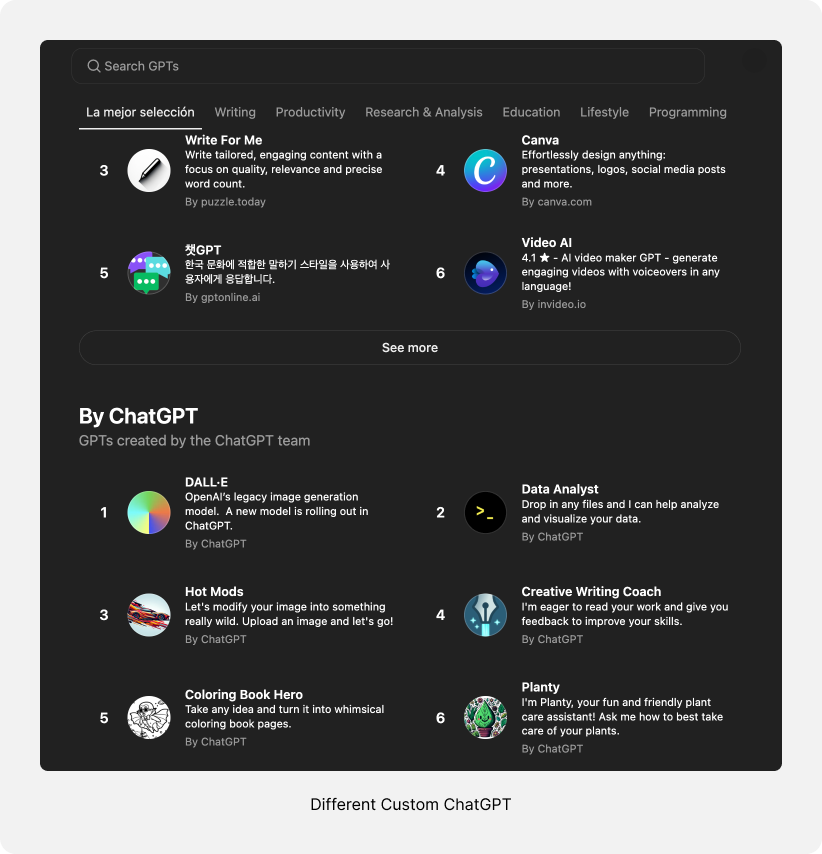

This is like the custom instructions feature in ChatGPT or the Claude Projects feature. Users can add a system prompt and files to guide the AI’s behavior and set the context for the conversation.

All these features let users configure the tool for their specific needs, so they can work faster and more efficiently and get more accurate results.

Most digital products are sold for a period of time, such as one year of use. AI products have an additional factor: credits.

Users don’t only buy time; they also buy credits. Sometimes the credit limit is high enough that users rarely reach it. However, sometimes the limits are very strict, especially for products that rely on API calls to other services.

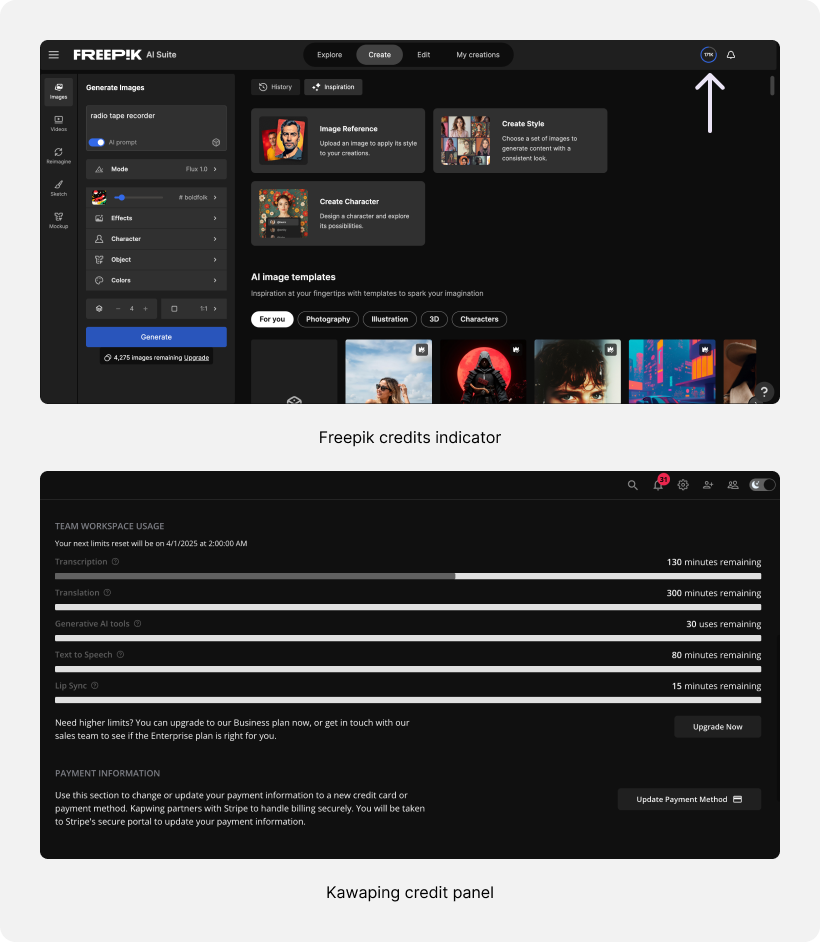

Because of this, you need to show users how many credits they’ve consumed. This helps them understand their usage and decide whether to limit it or buy more credits. This also improves the product’s transparency with the user.

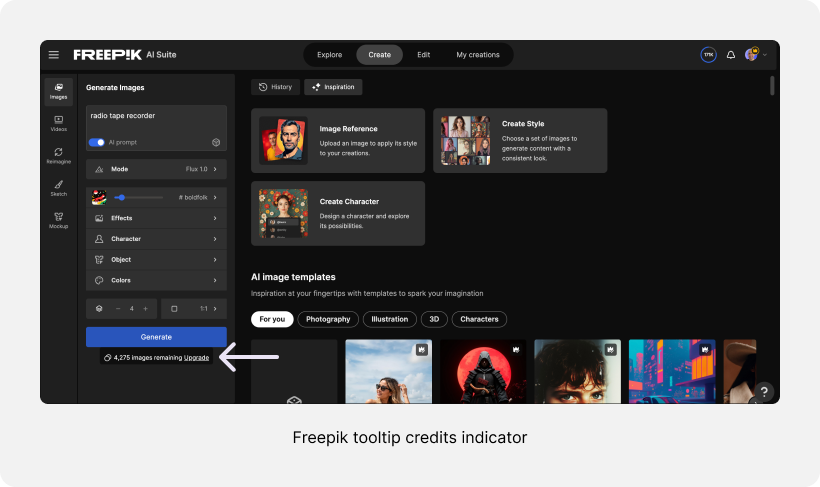

Some products, like Freepik, show this information on the main screen, while others, like Kapwing (a video editing tool), add it to the settings page. This information must be displayed, but where you show it depends on the specific product:

I also like the tooltip in Freepik that shows users exactly how many credits they’ll use for each generation. It appears before they click the generate button:

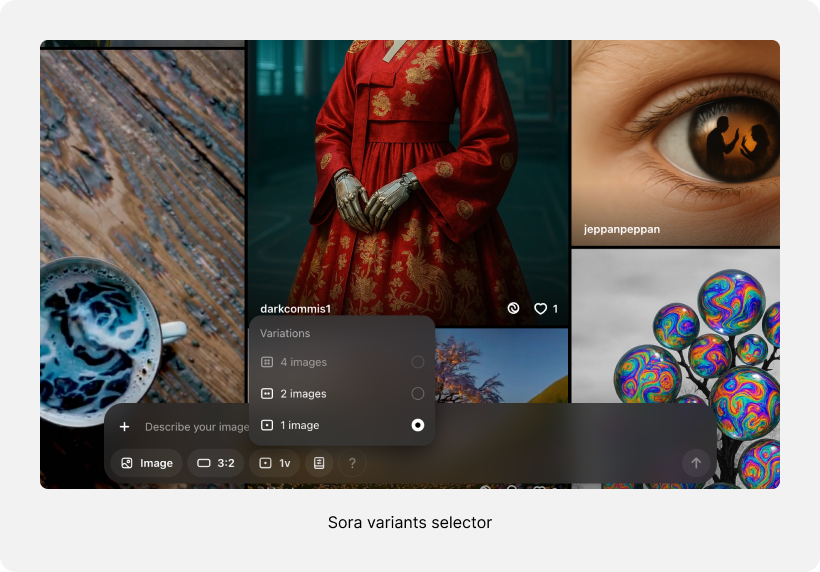
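The arithmetic behind a cost tooltip is simple: the cost per generation multiplied by the number of results, compared against what the user has left. Here is a minimal sketch, assuming credits are whole numbers. The `CreditAccount` class, its fields, and the message wording are illustrative and not Freepik’s actual implementation.

```python
from dataclasses import dataclass


@dataclass
class CreditAccount:
    total: int  # credits included in the user's plan
    used: int = 0

    @property
    def remaining(self) -> int:
        return self.total - self.used

    def estimate(self, cost_per_image: int, images: int = 1) -> int:
        """Cost of a generation, known before the user clicks generate."""
        return cost_per_image * images

    def tooltip(self, cost_per_image: int, images: int = 1) -> str:
        cost = self.estimate(cost_per_image, images)
        if cost > self.remaining:
            return f"Needs {cost} credits, but only {self.remaining} remain"
        return f"Uses {cost} of your {self.remaining} remaining credits"

    def spend(self, cost: int) -> None:
        if cost > self.remaining:
            raise ValueError("Not enough credits")
        self.used += cost
```

With 10 credits remaining, `tooltip(cost_per_image=2, images=4)` tells the user the generation uses 8 of them, so they can lower the image count before committing.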

Another thing you can do is let users generate more than one option at a time. Users can choose to generate more or fewer images. However, it would be helpful to let users set a default so they don’t have to set it each time. This will enable them to fit the software to their needs:

While designing an AI chat feature, remember to design it so the model’s tone of voice aligns with your brand. This helps users have a complete experience with the product.

For simple systems, setting the tone through the prompts can be enough, but if you want to take it to the next level, you’ll probably need to fine-tune a model.

You can also take it a step further and make it even more advanced. For example, if you have a chatbot that helps people on your ecommerce website, a conversation about a user’s issue with the product they bought is different from a conversation with a user before they buy the product.

If the task is to help them say yes and buy, the chatbot can respond with more enthusiasm. However, if the user contacts the chatbot about a product issue, an empathetic tone of voice would be a better fit.
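At the prompt level, this can be sketched as choosing a tone instruction based on the stage of the conversation. The stage names, the tone wording, and the `build_system_prompt` function below are all hypothetical. Real wording should come from your brand guidelines.

```python
# Hypothetical tone instructions for two conversation stages.
TONES = {
    "pre_purchase": "Be upbeat and enthusiastic. Highlight benefits and help the shopper decide.",
    "support": "Be calm and empathetic. Acknowledge the problem before offering a fix.",
}


def build_system_prompt(brand, stage):
    """Combine a fixed brand introduction with the tone for the current stage."""
    tone = TONES.get(stage, TONES["support"])  # unknown stages fall back to the careful tone
    return f"You are the assistant for {brand}. {tone}"
```

How the application detects the stage (for example, from the page the chat was opened on) is a separate design decision that this sketch leaves out.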

Although the technology is new and it takes time to adapt, I believe we’ll soon see more companies using approaches like this.

Using text to explain what you want the AI to generate for you has two sides. On the one hand, it’s very easy and simple to use, but on the other hand, the user needs to write every detail, which can be uncomfortable.

Because of that, you can add predefined inputs alongside the prompt so users can select some parameters from the interface instead of writing them.

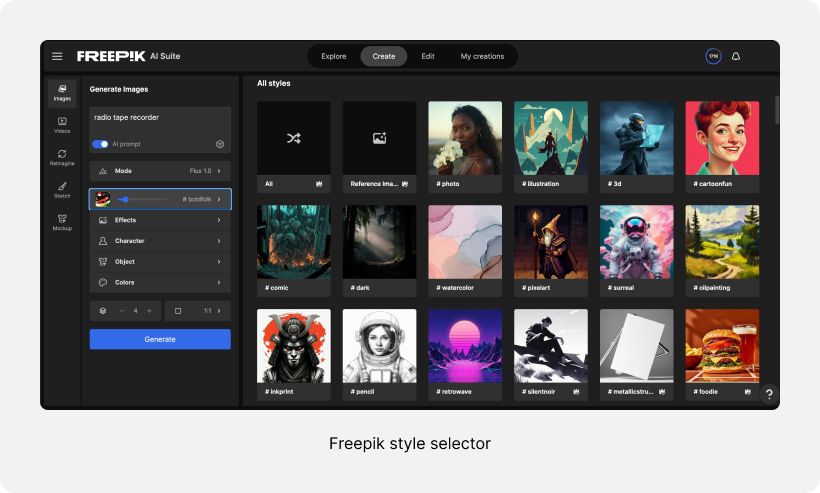

In Freepik, for example, users can select the style of the image from a gallery. It’s much easier than explaining the style to the machine. It also exposes the user to different styles visually rather than through text:

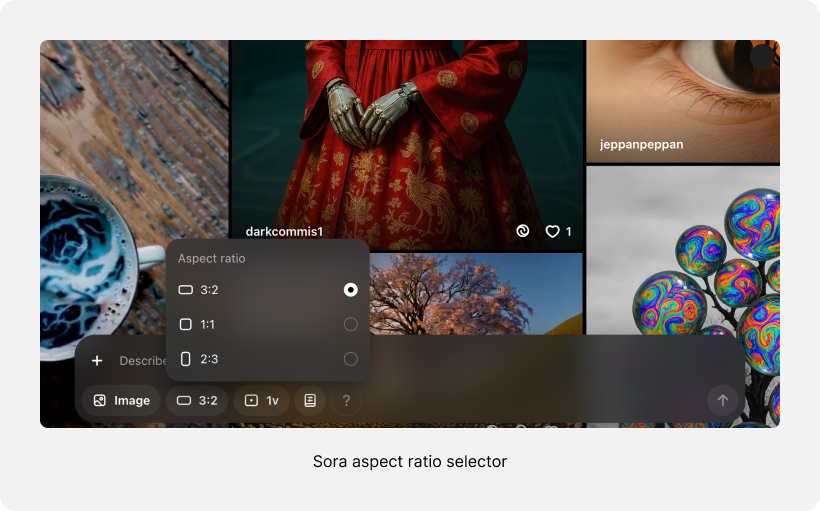

Another example is Sora, where the user can select the aspect ratio of the image or video from the interface instead of writing it:

Not every option needs to be in the interface. However, it’s worth adding the most-used inputs to help the user work faster with the tool.
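Under the hood, selections made in the interface can simply be merged with the user’s free text before the prompt is sent. Here is a minimal sketch. The option sets, the prompt format, and the `build_prompt` function are assumptions for illustration, not how Freepik or Sora work internally.

```python
# Hypothetical option sets that the interface would render as a gallery or dropdown.
STYLES = {"photo", "watercolor", "3d"}
ASPECT_RATIOS = {"1:1", "16:9", "9:16"}


def build_prompt(text, style=None, aspect_ratio=None):
    """Merge the user's free text with whatever they selected in the interface."""
    if style is not None and style not in STYLES:
        raise ValueError(f"Unknown style: {style}")
    if aspect_ratio is not None and aspect_ratio not in ASPECT_RATIOS:
        raise ValueError(f"Unknown aspect ratio: {aspect_ratio}")
    parts = [text.strip()]
    if style:
        parts.append(f"style: {style}")
    if aspect_ratio:
        parts.append(f"aspect ratio: {aspect_ratio}")
    return ", ".join(parts)
```

Both selections are optional, so a user who prefers to describe everything in text can still do so.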

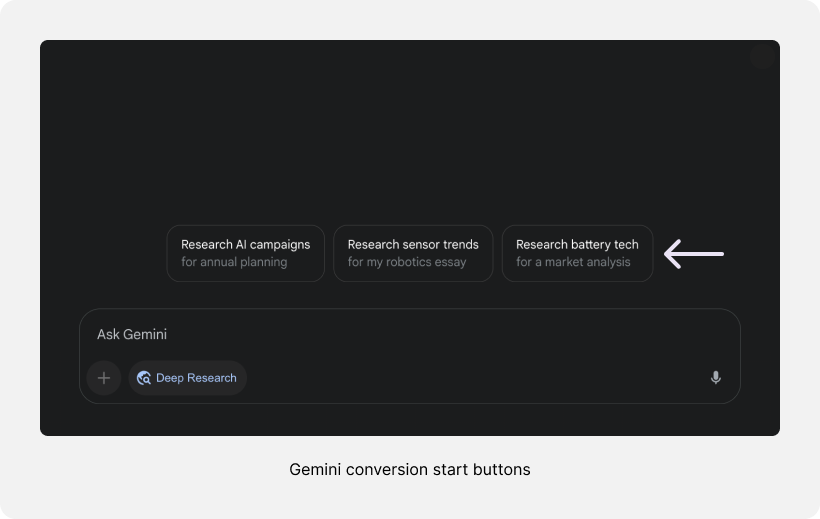

Many AI tools use predefined conversation buttons in the interface. When the user clicks on them, the chat starts directly with a specific task. This helps new users understand how the product works, giving them a sense of how to use it with just one click.

You can see this in Gemini:

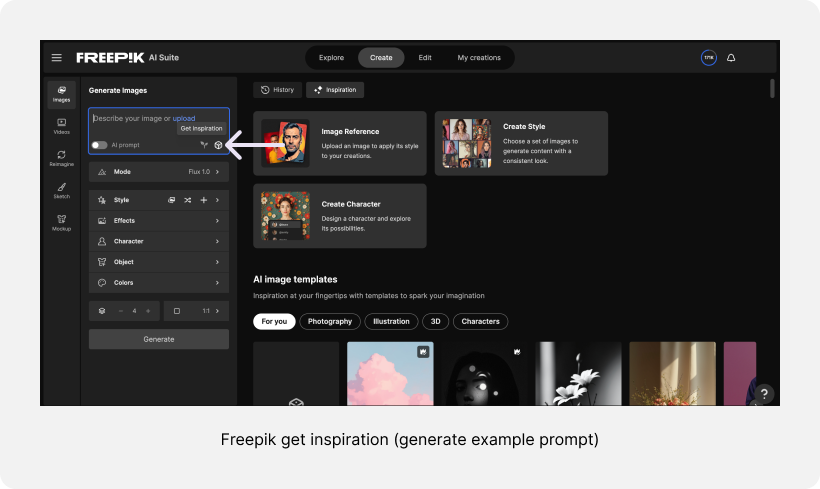

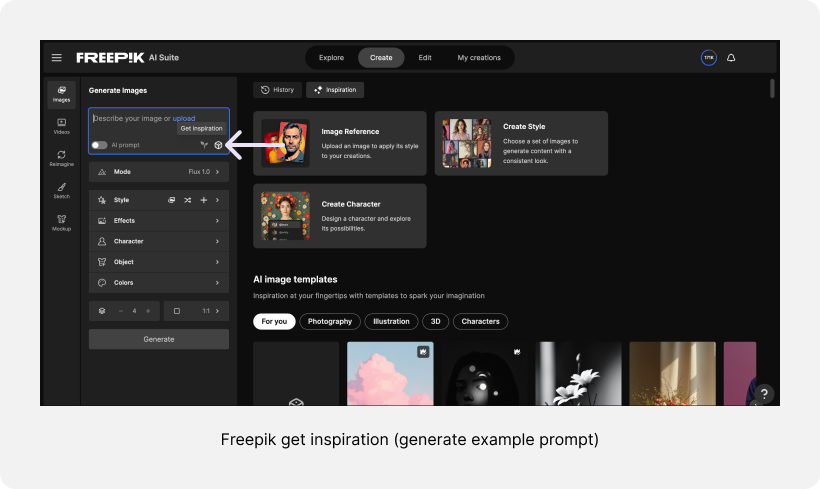

Another example of this is the “generate example prompt” button, where the AI model generates an example prompt, allowing the user to easily understand what the AI tool can do:

In some cases, these buttons can help users work faster by automating frequently performed tasks. For instance, in the Wordtune app (a tool that helps with writing), there’s a default “Fix any grammar mistakes” button, as this is one of the most commonly used features for writers. This eliminates the need to repeatedly write the same prompt:

In this article, I highlighted some of the emerging patterns in AI products. Although AI is quite new in digital products, several patterns are becoming standard across tools.

We discussed the importance of asking users questions before providing answers, especially for complex tasks where insufficient information can lead to poor results.

I explained the importance of adding a regenerate button to the interface and enabling users to receive an updated response without retyping their prompts. I also pointed out the value of adding a prompt history feature because users often want to go back and use their prompts again, even if they didn’t save them right away.

We saw that AI task execution takes time, so it’s essential to include a loading indicator in the interface. For thinking tasks, explaining the app’s process to users helps them understand what’s happening.

We also explored the benefits of showing users their remaining credits, how adding structured inputs alongside free text can enhance tool efficiency, and why many AI tools now include conversation starter buttons in the interface.

I’m confident that these interactions will continue to improve, with some patterns becoming obsolete and new UX patterns emerging. Given the rapid evolution of this technology, there’s a lot to look forward to.

