At Config 2025, Figma launched Figma Make, a generative AI tool that turns prompts, screenshots, and even Figma screens into functional code. The goal is to streamline the workflow between designers and developers.

I’ve been testing it out. And while it’s clear that this tool is still in its early days — buggy, clunky, and limited to basic use cases — it also hints at a more exciting future. One where prototyping, handoff, and fixing UI bugs aren’t stuck in tedious workflows.

Here’s what I see coming — and why UX designers should start paying attention now.

Right now, building prototypes in Figma means manually linking screens with connector lines to test the flow. Making prototypes more dynamic with variables, for example by adding input fields that actually work, takes a significant amount of time and effort to set up.

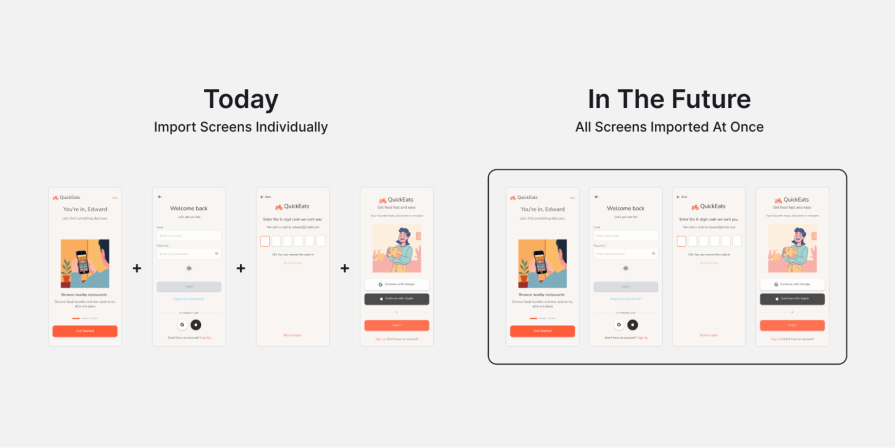

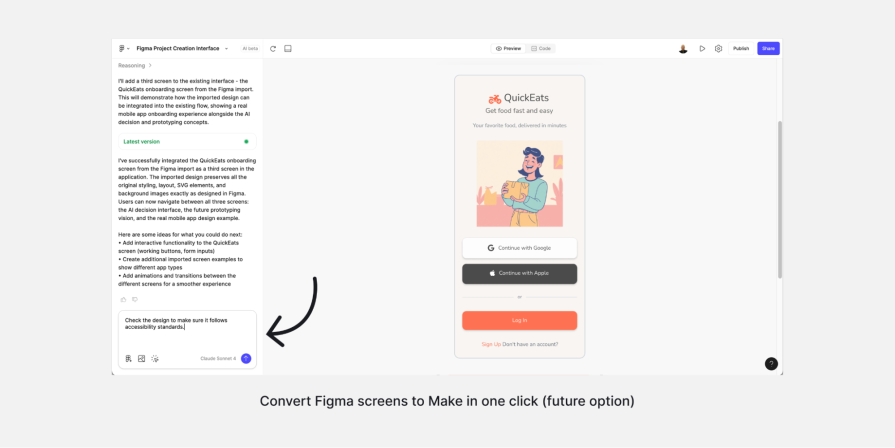

Another option is to import screens one by one into Figma Make and explain the interactions to the LLM. This approach works, but it is tedious: every screen must be imported separately and every interaction described by hand.

In the future, I believe this process will improve, making it easier for designers to create full prototypes.

Here’s how it could work. Designers will continue to create flows in Figma as they do today, with multiple screens on a single page. Then, they will import all the screens at once, rather than one by one. During the import, designers can provide context about the design, the app, and how the flows work. With this information, the AI model will automatically create the prototype, removing the need for extra work or overly detailed input.

This process is already possible today, but most tools rely on general-purpose LLMs rather than models trained specifically for this task, so the results can be inaccurate.

But if Figma fine-tunes an AI model for this purpose, it could better understand how to create flows. Many products share common solutions, so the AI model could fill in the details without needing every piece of information from the user.

One of the most frustrating aspects of Figma Make is that it often makes unintended decisions when I request a simple change or flow.

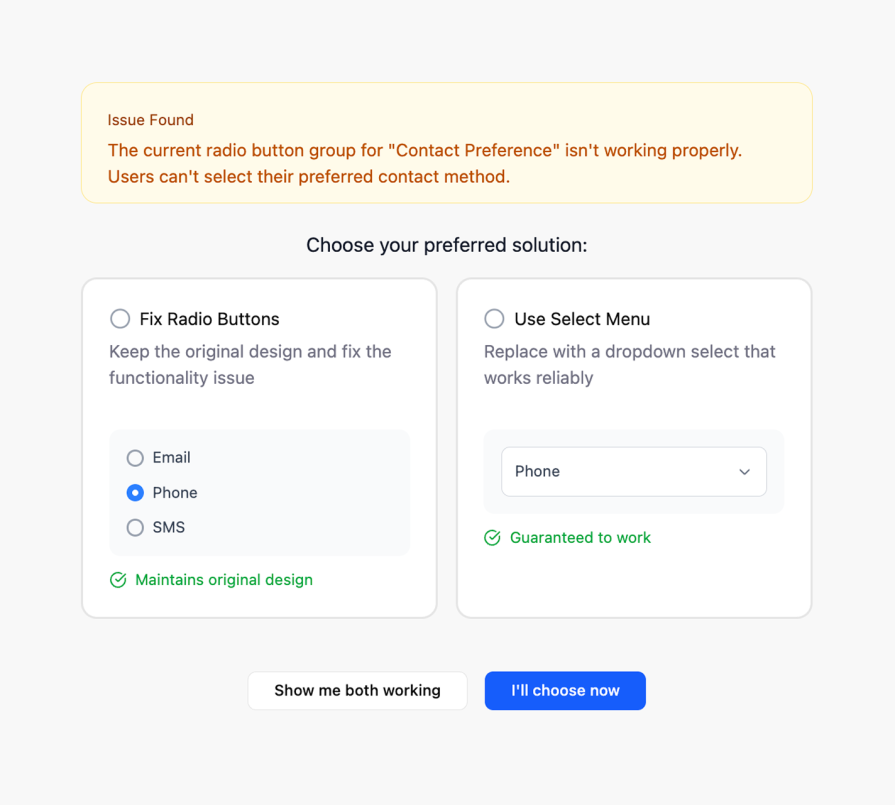

For example, I once asked Figma Make to fix a radio button that wasn’t working. Instead of fixing it, it changed it to a select menu. The select component worked, but I still wanted the radio button.

To fix this, Figma Make should request user feedback before completing a task.

That’s how I work with developers; they ask me before making decisions that can affect the design. It keeps us aligned and avoids rework.

I think Figma Make will do the same. When a decision is needed, it will display two options to the user. Then the user picks the best one. This way, the AI stays in sync with the designer, and the work goes more smoothly:

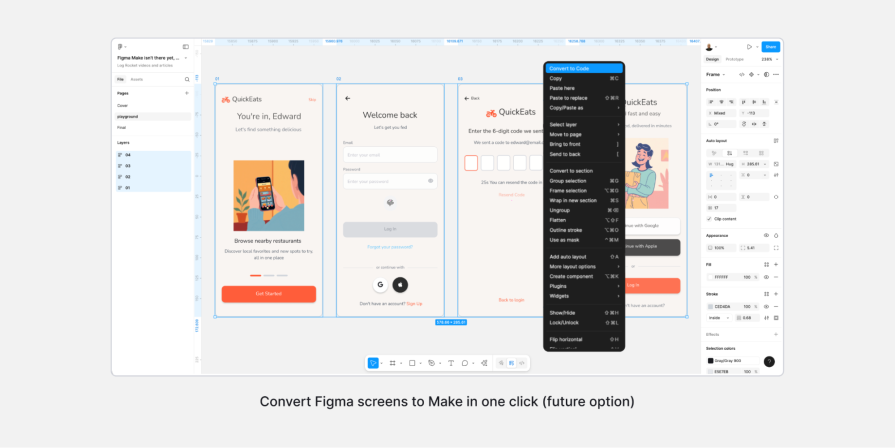

Today, to hand off a design, designers must prepare Figma screens. Then, they add details about how the flow works so that developers can use this to build the product.

A common problem is that developers often do not build the product exactly as designed. During the design QA process, teams identify mistakes such as misalignment, incorrect colors, or font sizes.

The irony is that all the correct details are already present in the Figma file. The problem arises when developers manually write the code based on the design. This step is where many errors start.

Figma Make could fix this. When a designer finishes the work, the AI will review the screens and request any missing details. These details include colors not linked to variables or text not using styles. After that, Figma Make will turn the design into real code.
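As a rough illustration of that review step, here is a minimal Python sketch of a check for colors not linked to variables and text without a style. The node structure (`name`, `type`, `fill`, `fill_variable`, `text_style`, `children`) and the function name are made up for this example; they are not Figma's file format or API.

```python
from typing import Iterator

# Hypothetical, simplified design tree. Real Figma data looks different.
screen = {
    "name": "Login",
    "type": "FRAME",
    "fill": "#FFFFFF",
    "fill_variable": "color/surface",
    "children": [
        {"name": "Title", "type": "TEXT", "fill": "#111111",
         "fill_variable": None, "text_style": "heading/lg"},
        {"name": "Hint", "type": "TEXT", "fill": "#666666",
         "fill_variable": "color/text-muted", "text_style": None},
    ],
}


def find_unlinked_styles(node: dict, path: str = "") -> Iterator[str]:
    """Walk the tree and report hard-coded fills and unstyled text layers."""
    here = f"{path}/{node['name']}" if path else node["name"]
    # A fill with no variable attached is a hard-coded color.
    if node.get("fill") and not node.get("fill_variable"):
        yield f"{here}: fill {node['fill']} is not linked to a variable"
    # A text layer without a shared text style.
    if node.get("type") == "TEXT" and not node.get("text_style"):
        yield f"{here}: text does not use a text style"
    for child in node.get("children", []):
        yield from find_unlinked_styles(child, here)


issues = list(find_unlinked_styles(screen))
for issue in issues:
    print(issue)
```

Each reported line is a question the tool could put to the designer before any code is generated.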

This will require a connection to the project's codebase so that the generated code can be saved and managed properly. Once it is finished, the developer reviews the code and confirms that everything is implemented correctly. The designer can also verify the result.

The result is a smoother handoff process. The design will be turned into code more accurately, with fewer errors. This will save time for both designers and developers.

Figma Make could help designers test their designs for accessibility guidelines before releasing them to users. When designing screens for an app or website, we need to ensure that everything is easy to see and use. For example, we want to ensure that the text is not too small, the colors have sufficient contrast, and the design adheres to accessibility rules.

The aim is for all users to be able to use the product easily. Currently, these checks are not fully automated. Designers must do them manually or rely on semi-automated Figma plugins.

In the future, once the design is finished and sent to Figma Make, the designer can ask the AI to review the code. The AI will verify that the code adheres to accessibility guidelines. This includes more than just color contrast. It also ensures that users can navigate using a keyboard and that screen readers can read the content through ARIA labels.

These steps are often overlooked by developers or designers, especially if they are not included in the project requirements. Figma Make could fix this by automating the checks, helping ensure the final product is accessible and works for everyone.
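Color contrast is the easiest of these checks to automate, because WCAG defines it as a formula. The sketch below is a minimal Python version of that formula, using the WCAG AA thresholds of 4.5:1 for normal text and 3:1 for large text. The function names are my own, and it only handles six-digit hex colors without transparency.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG relative luminance)."""
    c = channel / 255
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB'."""
    value = hex_color.lstrip("#")
    r, g, b = (int(value[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """WCAG contrast ratio: (lighter + 0.05) / (darker + 0.05)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """True if the pair meets the WCAG AA minimum contrast."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#000000", "#FFFFFF"), 2))
print(passes_aa("#777777", "#FFFFFF"))
```

Keyboard navigation and screen reader support are much harder to reduce to a formula, which is one reason a human review is still needed after any automated pass.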

In any product, there are often small bugs in the code that are easy to fix. But product managers do not make them a priority, so no one fixes them.

For example, a dropdown that doesn’t close after an option is selected, or a radio button with a default value we want to remove. Although these bugs often do not appear significant, they can sometimes cause considerable frustration for users.

In the future, I believe that Figma Make will be able to connect to the product code environment. Then, the designer could locate the element and ask the LLM to correct it. After that, they send the fix to QA.

This can save developers time for more important work, and designers can fix many simple issues without involving the development team.

In the future, Figma Make can become even more powerful by connecting with product data and user behavior through tools like Google Analytics.

This connection can help designers identify UX problems more easily and resolve them more quickly using AI.

For example, think about a product’s onboarding flow. If users are clicking around without knowing what to do, the analytics tool can show this problem. Then, Figma Make can take that data, understand what’s wrong, and suggest ways to improve the design. These suggestions can be based on similar issues other products have had and how they were solved.

As AI learns from more real-world examples, it may begin to generate complete design ideas. Designers won’t need to start from zero. Instead, they will have a few good options and choose the one that best fits their needs. After that, they can make adjustments to ensure a perfect match between the solution and the product.

This process can make design work much faster and more focused. The team identifies a problem in the analytics tool, sends it to Figma Make, receives a few design ideas, and selects one before undertaking in-depth design work.

It becomes teamwork between the data, the AI, and the designer. This approach is new and different, but it can really help teams move quickly. Designers become more like editors, selecting and refining ideas generated by AI.

By bringing together code, analytics, and design in one place, Figma Make could become a key tool for solving UX problems more effectively and efficiently.

From my perspective, we will still need both designers and developers, so the roles will not merge.

Each role requires specific skills essential for product quality. Even if AI becomes extremely advanced, human judgment will still be important.

For example, AI might suggest a design, but a designer needs to decide if it fits the product. The same goes for code. AI might write it, but a developer will need to review it.

However, the line between these roles is starting to fade. In this article, I described how Figma Make could help designers complete small tasks that previously required a developer. For example, designers will be able to tell the AI to fix a simple bug, and it will make the change.

This works both ways. If a developer is missing some design detail, they can ask the AI instead of going back to the designer. This saves time and keeps the work moving.

AI will also help with handoff. It can turn Figma designs into code, especially CSS. In many cases, it might even do this better and faster than a developer because AI will be able to handle details well.

For this to work, we need to trust the AI to give good results. If it does, designers and developers can both use it to handle small tasks without waiting for each other.

While I believe the roles will remain separate, the borders are becoming less distinct. Designers might fix small code issues. Developers might solve simple design problems.

The goal is to collaborate more effectively, with AI supporting both designers and developers.

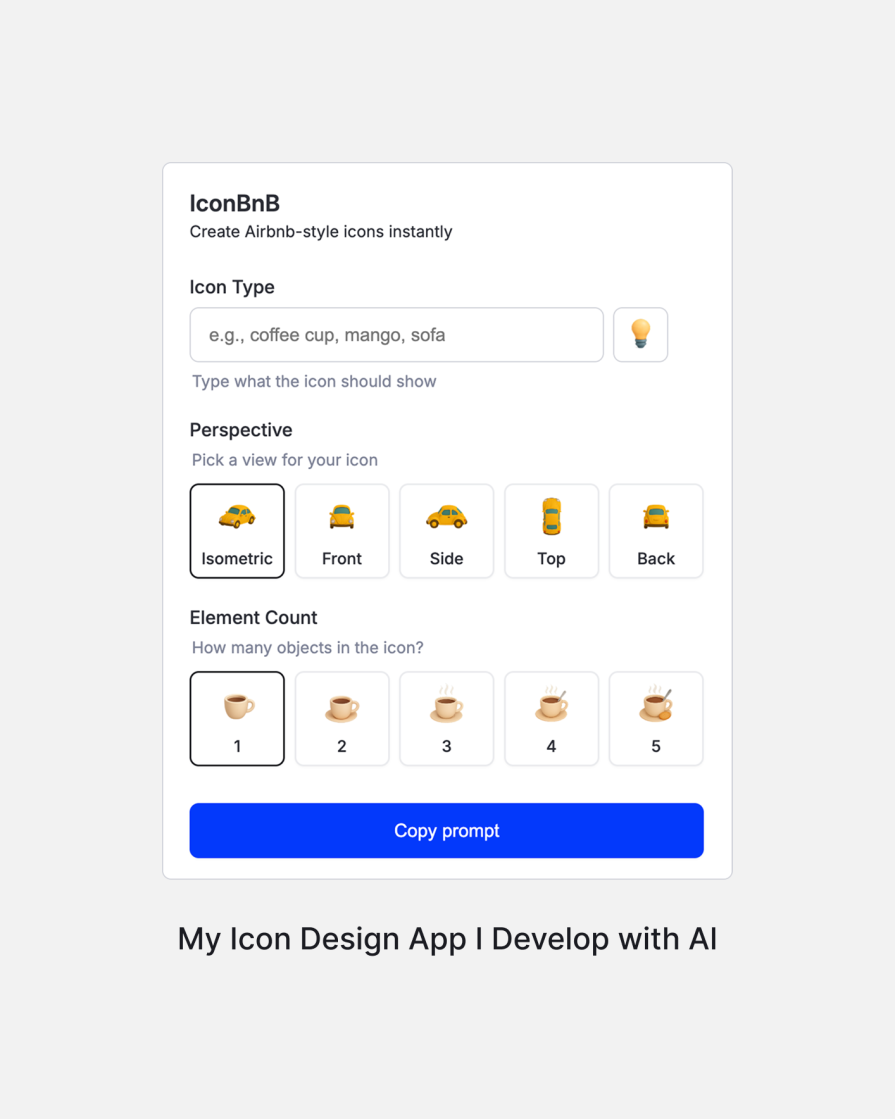

With a vibe coding approach, designers won’t use Figma Make only for product design. I believe it will also be a place where they can build tools for their own work.

It’s like a carpenter who builds their own tools to make furniture. Once they have the right tools, they can work faster.

For example, I built a small product for myself using Cursor (another AI tool). It helped me create Airbnb-style icons. The idea was the same — build a tool that helps me work faster.

Other examples could be a tool that generates error messages for a product, a tool that creates design tokens, or a tool that overlays a magnifying glass on an image.
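To give a sense of how small such a tool can be, here is a minimal Python sketch of the design-token idea: it flattens a nested dictionary of tokens into CSS custom properties. The token names and values are placeholders I invented for the example, and the naming scheme (joining levels with hyphens) is just one possible convention.

```python
def tokens_to_css(tokens: dict, prefix: str = "") -> list[str]:
    """Flatten nested tokens into '--name: value;' declaration lines."""
    lines = []
    for key, value in tokens.items():
        name = f"{prefix}-{key}" if prefix else key
        if isinstance(value, dict):
            # Nested group: recurse and extend the variable name.
            lines.extend(tokens_to_css(value, name))
        else:
            lines.append(f"  --{name}: {value};")
    return lines


def render_root(tokens: dict) -> str:
    """Wrap the declarations in a :root block."""
    return ":root {\n" + "\n".join(tokens_to_css(tokens)) + "\n}"


# Placeholder tokens for illustration only.
example_tokens = {
    "color": {"primary": "#0055FF", "text": {"muted": "#666666"}},
    "space": {"sm": "4px"},
}

print(render_root(example_tokens))
```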

Instead of looking for a basic tool to meet a specific need, we can build it with Figma Make. It’s also possible that we’ll be able to build Figma plugins this way, not just apps inside Figma Make. That could be a big benefit for any UX team that needs a custom tool and wants to create it themselves.

As LLMs become more advanced, one big concern is that many designs might start to look the same. Companies want to move fast and solve problems quickly. AI helps by offering ready-made answers based on what has been successful in the past.

For example, AI can learn from help centers and product documents across many companies. When we ask for a design idea, it often suggests a solution that already exists.

This can save time, but if every designer uses the same suggestions, products may lose their uniqueness.

This can hurt innovation. Instead of generating new ideas, designers might simply select the fastest option the AI suggests. Over time, all products could start solving problems in the same way, and creativity could slow down.

Perhaps one day AI will suggest new, original ideas, but for now, that remains our job. AI can help us move faster, but to avoid this sameness, designers will need to treat the AI’s solution as a starting point and build something more advanced on top of it, something that doesn’t exist yet.

A big question in AI is: who is responsible when something goes wrong?

Think about self-driving cars. If an AI-driven car crashes, who is to blame? The driver? The company? The AI? There is no simple answer yet.

The same problem can happen in design. If we ask AI to make something accessible, but it fails, who is responsible? I believe the answer is clear. We are. The app creators must take responsibility.

Soon, especially in the European Union, there will be stricter accessibility laws. Products must follow them. Saying “the AI did it” won’t be a valid excuse.

This is just my opinion, but I think we should never fully depend on AI for accessibility or any other design decisions. Humans must check the final result. If I design something, I need to test it to ensure it meets the standards.

One simple way to verify accessibility is to use a checklist. After completing a design task in which AI automated part of the process, the designer must thoroughly review everything before the product goes live.

Blaming the AI is not the answer. If we stop thinking for ourselves and simply follow what the model provides, we risk producing subpar work. People might stop solving real problems because they assume the AI has already solved them.

That’s why we must stay responsible. AI is a strong tool, but we decide what’s right, and we own the final result.

In this article, I shared how I believe Figma Make will help designers work more efficiently in the near future.

Today, it’s already useful for exploring product ideas and building better prototypes. Looking ahead, I envision it evolving into a tool that lets us design entire user flows in one go, rather than screen by screen as we do today.

I also discussed why AI shouldn’t control every design decision. Designers still need to be involved in guiding, refining, and correcting AI creations. It’s a collaboration, not a replacement.

The way we work with developers will change, too. Handoff might become as easy as clicking a button. Designers could own the visuals and define the logic, with developers jumping in to fine-tune.

As part of that, a significant change is the blurred line between design and development. While I believe each side’s expertise is crucial for excellence, Figma Make could enable designers to fix small bugs or update the code directly. This would reduce the need for constant developer involvement.

I also discussed accessibility and how AI can help identify issues early when asked to do so. And if we take another step forward, AI combined with analytics could become even more important. Picture beginning a project with clear insights into the product’s challenges and getting smart suggestions to address them.

Finally, I discussed who is responsible when AI is used in design. I believe the answer is simple. We are. AI is just a tool. Designers must make the final decisions and take responsibility for the result. We should use AI to help us, but we can’t let it replace us.
