ultrafetch to boost node-fetch behavior

In 2015, browsers began shipping the Fetch API, which is based on the fetch() function. This new way of requesting local and remote resources was quickly adopted on the web, but Node.js took much longer. In fact, fetch() was only added to Node's standard library in 2022.

While fetch quickly became a popular choice for making HTTP requests in Node apps, Node's implementation still lags behind the current standards. It has some limitations and drawbacks that hinder its potential, and it will likely take the core team years to address them all.

One answer to these shortcomings is ultrafetch, a lightweight library that aims to elevate the capabilities of fetch through modular utilities. Its goal is to address some of the problems of Node's Fetch API implementation and, as a result, improve the developer experience.

In this article, we will cover the shortcomings of Node's fetch, what ultrafetch is, and how to boost fetch with ultrafetch.

Note that ultrafetch adds only a single significant feature to fetch (caching), and the library is not actively maintained. Even so, ultrafetch is a handy way to enhance fetch behavior and is worth knowing how to use. Let's get into it.


The JavaScript Fetch API provides an interface based on the fetch() function for fetching local or remote resources. It was introduced as a flexible replacement for XMLHttpRequest and has been supported by most popular browsers since 2015.

Node, however, took much longer to embrace it. During this time, the node-fetch library became one of the most popular non-official implementations of the Fetch API for Node.js.

In February 2022, Node added experimental support for fetch() in v17.5, behind the --experimental-fetch flag. Its implementation is based on Undici, a fast, reliable, and spec-compliant HTTP/1.1 client for Node.js.

As of Node v18, fetch() is available globally by default (although it was initially still marked as experimental). You can use it without importing it or installing any additional library.

fetch has quickly become one of the most popular clients for making HTTP requests in Node applications. Some factors behind its success include:

However, there’s still a lot to do to make it fully compliant with HTTP RFC standards. It will probably take another year or two before we see the Fetch API fully stabilized in Node.

A major drawback of the current implementation of fetch is its lack of a built-in, standards-compliant caching system. Caching is crucial for improving performance and reducing redundant requests to the same endpoint, especially when dealing with frequently requested data.

At the time of this writing, the only way to cache fetch responses is to store them in memory or on disk, relying on custom logic or external caching libraries. This adds complexity to the code and can lead to inconsistent implementations.

Luckily, there is a solution to this problem called ultrafetch!

What is ultrafetch?

ultrafetch is a Node.js library that provides modular utilities for enhancing Node's built-in fetch and the node-fetch npm package. It can also extend any library that follows the Bring Your Own Fetch (BYOF) approach, such as @vercel/fetch.
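To make the BYOF idea more concrete, here is a minimal sketch of a client that receives its fetch implementation as a parameter instead of importing one. The createPokemonClient() factory and the FetchLike type are hypothetical names used only for illustration. They are not part of ultrafetch or @vercel/fetch. A fake fetch is used so the sketch runs without network access.

```typescript
// Minimal structural type for a fetch-compatible function (hypothetical helper type)
type FetchLike = (
  input: string,
  init?: { method?: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>

// BYOF: the caller decides which fetch implementation the client uses
function createPokemonClient(fetchImpl: FetchLike, baseUrl = "https://pokeapi.co/api/v2") {
  return {
    async getPokemon(name: string): Promise<unknown> {
      const response = await fetchImpl(`${baseUrl}/pokemon/${name}`)
      if (!response.ok) {
        throw new Error(`Request failed with status ${response.status}`)
      }
      return response.json()
    },
  }
}

// Fake fetch that records requested URLs instead of hitting the network
const requestedUrls: string[] = []
const fakeFetch: FetchLike = async (input) => {
  requestedUrls.push(input)
  return { ok: true, status: 200, json: async () => ({ name: "pikachu" }) }
}

const client = createPokemonClient(fakeFetch)
```

In a real app, you could pass globalThis.fetch, node-fetch, or a wrapped version of either, and the client code would not change. That property is what lets wrapper libraries plug into BYOF-style clients.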

The main goal behind ultrafetch is to enhance the Fetch API with an RFC-7234-compliant caching system. By default, the library uses an in-memory Map instance as its cache engine, storing the responses to GET, POST, and PATCH requests made through fetch. Custom caches are supported as well.
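To give a rough idea of what RFC-7234 compliance involves, the sketch below shows the core freshness rule a compliant cache applies before reusing a stored response. The response may be served only while its age is below the lifetime set by Cache-Control: max-age. This is not ultrafetch's code. parseCacheControl() and isFresh() are hypothetical helpers that cover only a small subset of the RFC.

```typescript
// Simplified freshness check loosely based on RFC 7234, Section 4.2.
// Only max-age, no-store, and no-cache are handled. Expires, Age, s-maxage,
// heuristic freshness, and revalidation are deliberately ignored.
function parseCacheControl(header: string): Map<string, string | true> {
  const directives = new Map<string, string | true>()
  for (const part of header.split(",")) {
    const [rawName, rawValue] = part.trim().split("=", 2)
    if (!rawName) continue
    // Directives without a value (e.g. "public") are stored as `true`
    directives.set(rawName.toLowerCase(), rawValue === undefined ? true : rawValue.replace(/^"|"$/g, ""))
  }
  return directives
}

// A stored response is fresh while its age is below the max-age lifetime
function isFresh(cacheControl: string, storedAtMs: number, nowMs: number): boolean {
  const directives = parseCacheControl(cacheControl)
  // no-store forbids caching; no-cache requires revalidation, treated here as stale
  if (directives.has("no-store") || directives.has("no-cache")) return false
  const maxAge = directives.get("max-age")
  if (typeof maxAge !== "string") return false // no explicit lifetime: treat as stale
  const lifetimeSeconds = Number.parseInt(maxAge, 10)
  if (Number.isNaN(lifetimeSeconds)) return false
  const ageSeconds = (nowMs - storedAtMs) / 1000
  return ageSeconds < lifetimeSeconds
}
```

A real implementation must also honor the Expires and Age headers, heuristic freshness, and revalidation with ETag or Last-Modified. That complexity is why delegating this logic to a library is attractive.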

To understand how this library works, consider the following example.

Suppose that your Node backend needs to make an HTTP GET request to get some data as part of the business logic of an API endpoint. Each API call to that endpoint will require a new HTTP GET request.

If this request always returns the same data, you could cache the response the first time and then read it from memory the following times. That is exactly what ultrafetch is all about!
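At its simplest, the idea above is memoization. The following is a deliberately naive sketch, not how ultrafetch works internally. memoizeFetch() is a hypothetical helper that ignores HTTP caching headers, never evicts entries, and caches only by URL. This is exactly the kind of custom logic ultrafetch aims to replace.

```typescript
// Minimal fetch-like signature for this sketch (hypothetical helper type)
type FetchLike = (url: string) => Promise<{ text(): Promise<string> }>

// Naive memoization: the first call goes to the network, and later calls with
// the same URL read the stored body from memory. No freshness checks, no eviction.
function memoizeFetch(fetchImpl: FetchLike) {
  const bodies = new Map<string, string>()
  return async (url: string): Promise<string> => {
    const cached = bodies.get(url)
    if (cached !== undefined) return cached
    const response = await fetchImpl(url)
    const body = await response.text()
    bodies.set(url, body)
    return body
  }
}

// Fake fetch that counts how many times the "network" is hit
let networkCalls = 0
const fakeFetch: FetchLike = async () => {
  networkCalls++
  return { text: async () => '{"name":"pikachu"}' }
}

const getBody = memoizeFetch(fakeFetch)
```

Note that two concurrent calls for the same URL would both miss the cache, because the body is stored only after the first request completes.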

In the rest of this tutorial, we'll explore how to use ultrafetch and see it in action with a practical example.

Boosting fetch with ultrafetch

Now, let's learn how to integrate ultrafetch into a Node.js project step by step. To follow along, you need a Node.js 18+ project.

The code snippets below will be in TypeScript, so I recommend checking out our tutorial to learn how to set up a Node.js Express app in TypeScript. However, you can easily adapt the code snippets to JavaScript, so a Node.js Express project in JavaScript will work as well.

Installing ultrafetch and node-fetch

Add ultrafetch to your project's dependencies with the following command:

```shell
npm install ultrafetch
```

As a TypeScript library, ultrafetch already comes with typings. This means you don’t need to add any @types library.

Additionally, as mentioned before, fetch is already part of Node.js 18, so there’s no need to install it. If you instead prefer to use node-fetch, install it with:

```shell
npm install node-fetch
```

Great! You are ready to boost any fetch implementation!

Extending fetch with ultrafetch

All you have to do to extend a fetch implementation with ultrafetch is to wrap it with the withCache() function:

```typescript
// import fetch from "node-fetch" -> if you are a node-fetch user
import { withCache } from "ultrafetch"

// extend the default fetch implementation
const enhancedFetch = withCache(fetch)
```

withCache() enhances fetch by adding caching functionality.

Then, you can use the enhancedFetch() function just like fetch():

```typescript
const response = await enhancedFetch("https://pokeapi.co/api/v2/pokemon/pikachu")
// json() returns a Promise, so it must be awaited as well
console.log(await response.json())
```

The request URL used above comes from the PokéAPI project, a collection of free API endpoints that return Pokémon-related data.

This will print:

```
{
  // omitted for brevity...
  name: 'pikachu',
  order: 35,
  past_types: [],
  species: {
    name: 'pikachu',
    url: 'https://pokeapi.co/api/v2/pokemon-species/25/'
  },
  // omitted for brevity...
  types: [ { slot: 1, type: [Object] } ],
  weight: 60
}
```

As you can see, it works just like the standard Fetch API. The benefits of ultrafetch show up when making the same request twice.

After the first request, the response will be added to the internal in-memory Map cache. When the request is repeated, the response is read from the cache and returned immediately, with no network round trip, which saves time and resources.

You can import and use isCached() from ultrafetch to verify this behavior:

```typescript
import { withCache, isCached } from "ultrafetch"
```

This function returns true when the given response object was returned from the cache. Otherwise, it returns false.

Test it with an example like the following:

```typescript
const response1 = await enhancedFetch("https://pokeapi.co/api/v2/pokemon/pikachu")
console.log(isCached(response1)) // false

const response2 = await enhancedFetch("https://pokeapi.co/api/v2/pokemon/pikachu")
console.log(isCached(response2)) // true
```

As expected, the first request goes over the network, while the response to the second one is read from the cache.
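How a caching wrapper reports that a response came from the cache is an implementation detail. One plausible technique, sketched below, is to remember cache hits in a WeakSet so that the response object itself is left untouched. This is an assumption about the general approach, not a description of how ultrafetch implements isCached(). withMemoryCache(), isServedFromCache(), and the SimpleResponse type are hypothetical, and plain objects stand in for real Response instances.

```typescript
// Plain object standing in for a Response (hypothetical type)
type SimpleResponse = { status: number; body: string }
type FetchLike = (url: string) => Promise<SimpleResponse>

// Cache hits are tracked in a WeakSet, so no flag is added to the response
// object and tracked objects can still be garbage-collected
const servedFromCache = new WeakSet<SimpleResponse>()

function isServedFromCache(response: SimpleResponse): boolean {
  return servedFromCache.has(response)
}

function withMemoryCache(fetchImpl: FetchLike): FetchLike {
  const store = new Map<string, SimpleResponse>()
  return async (url) => {
    const stored = store.get(url)
    if (stored) {
      // Hand out a copy so callers cannot mutate the stored entry
      const copy = { ...stored }
      servedFromCache.add(copy)
      return copy
    }
    const response = await fetchImpl(url)
    store.set(url, { ...response })
    return response
  }
}

const fakeFetch: FetchLike = async () => ({ status: 200, body: "pikachu" })
const cachedFetch = withMemoryCache(fakeFetch)
```

With real Response objects, a cache would typically store the serialized body and build a new Response on every hit, because a response body stream can be read only once.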

The default caching feature introduced by ultrafetch is great, but also limited in that it does not allow you to access and control the internal cache.

There's no way to programmatically clear the cache or remove a specific entry from it. Once a response is cached, it stays in memory for the lifetime of the process, which may not be the desired behavior.

For this reason, withCache() also accepts a custom cache object through its options:

```typescript
class MyCustomCache extends Map<string, string> {
  // override some methods with custom logic...
}

const fetchCache: MyCustomCache = new MyCustomCache()
const enhancedFetch = withCache(fetch, { cache: fetchCache })
```

This mechanism gives you control over the cache object. For example, you can now clear the cache with the following call:

```typescript
fetchCache.clear()
```

The cache option must be of type Map<string, string> or AsyncMap<string, string>. You can pass either a plain Map instance or an instance of a custom class that extends Map or implements AsyncMap, as done above with MyCustomCache. The latter approach is more flexible if you want to write some custom caching logic.
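For instance, to avoid keeping responses around indefinitely, a custom cache can expire entries after a fixed time-to-live. TtlCache below is a hypothetical example, not part of ultrafetch. It assumes ultrafetch interacts with the cache only through the standard get/set/has/delete/clear methods. The injectable clock exists only to make the sketch testable.

```typescript
// A Map<string, string> whose entries expire after a fixed time-to-live.
// Assumption: the consumer of the cache only uses the standard Map methods.
class TtlCache extends Map<string, string> {
  private readonly expiresAt = new Map<string, number>()

  constructor(private readonly ttlMs: number, private readonly now: () => number = Date.now) {
    super()
  }

  override set(key: string, value: string): this {
    this.expiresAt.set(key, this.now() + this.ttlMs)
    return super.set(key, value)
  }

  override get(key: string): string | undefined {
    const expiry = this.expiresAt.get(key)
    if (expiry !== undefined && this.now() >= expiry) {
      // Lazily evict the expired entry on access
      this.delete(key)
      return undefined
    }
    return super.get(key)
  }

  override has(key: string): boolean {
    return this.get(key) !== undefined
  }

  override delete(key: string): boolean {
    this.expiresAt.delete(key)
    return super.delete(key)
  }

  override clear(): void {
    this.expiresAt.clear()
    super.clear()
  }
}
```

You could then write something like withCache(fetch, { cache: new TtlCache(60_000) }). Expired entries are removed lazily, on the next get() or has(), so size may still count them until then.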

AsyncMap is a custom ultrafetch type that represents a standard Map where each method is async:

```typescript
export interface AsyncMap<K, V> {
  clear(): Promise<void>;
  delete(key: K): Promise<boolean>;
  get(key: K): Promise<V | undefined>;
  has(key: K): Promise<boolean>;
  set(key: K, value: V): Promise<this>;
  readonly size: number;
}
```

This is useful when your cache storage needs to perform asynchronous operations.
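As an illustration, here is a minimal class implementing the AsyncMap shape. SimulatedRemoteCache is a hypothetical name. The in-memory Map and the awaited Promise.resolve() stand in for a real asynchronous backend such as Redis, where every call would be a network round trip. The interface is redeclared locally so the sketch compiles on its own. In a real project, you would presumably import the type from ultrafetch, assuming the package exports it.

```typescript
// The AsyncMap shape shown above, redeclared so the sketch is self-contained
interface AsyncMap<K, V> {
  clear(): Promise<void>
  delete(key: K): Promise<boolean>
  get(key: K): Promise<V | undefined>
  has(key: K): Promise<boolean>
  set(key: K, value: V): Promise<this>
  readonly size: number
}

// Hypothetical async store standing in for Redis, a database, or the file system
class SimulatedRemoteCache implements AsyncMap<string, string> {
  private readonly data = new Map<string, string>()

  // Stands in for the I/O a real backend would perform
  private async roundTrip(): Promise<void> {
    await Promise.resolve()
  }

  get size(): number {
    return this.data.size
  }

  async clear(): Promise<void> {
    await this.roundTrip()
    this.data.clear()
  }

  async delete(key: string): Promise<boolean> {
    await this.roundTrip()
    return this.data.delete(key)
  }

  async get(key: string): Promise<string | undefined> {
    await this.roundTrip()
    return this.data.get(key)
  }

  async has(key: string): Promise<boolean> {
    await this.roundTrip()
    return this.data.has(key)
  }

  async set(key: string, value: string): Promise<this> {
    await this.roundTrip()
    this.data.set(key, value)
    return this
  }
}
```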

ultrafetch in action

Time to put it all together and see ultrafetch in action in a complete example:

```typescript
// import fetch from "node-fetch" -> if you are a node-fetch user
import { withCache, isCached } from "ultrafetch"

// define a custom cache
class MyCustomCache extends Map<string, string> {
  // override some methods with custom logic...
}

const fetchCache: MyCustomCache = new MyCustomCache()

// extend the fetch implementation
const enhancedFetch = withCache(fetch, { cache: fetchCache })

async function testUltraFetch() {
  console.time("request-1")
  const response1 = await enhancedFetch("https://pokeapi.co/api/v2/pokemon/pikachu")
  console.timeEnd("request-1") // ~300ms
  const isResponse1Cached = isCached(response1)
  console.log(`request-1 cached: ${isResponse1Cached}\n`) // false

  console.time("request-2")
  const response2 = await enhancedFetch("https://pokeapi.co/api/v2/pokemon/pikachu")
  console.timeEnd("request-2") // ~30ms
  const isResponse2Cached = isCached(response2)
  console.log(`request-2 cached: ${isResponse2Cached}\n`) // true

  // clear the cache used by the boosted fetch
  fetchCache.clear()

  console.time("request-3")
  const response3 = await enhancedFetch("https://pokeapi.co/api/v2/pokemon/pikachu")
  console.timeEnd("request-3") // ~300ms (may be lower because of server-side caching from PokéAPI)
  const isResponse3Cached = isCached(response3)
  console.log(`request-3 cached: ${isResponse3Cached}\n`) // false
}

testUltraFetch()
```

Run the Node script and you should get something like this:

```
request-1: 234.871ms
request-1 cached: false

request-2: 21.473ms
request-2 cached: true

request-3: 109.328ms
request-3 cached: false
```

Besides isCached(), you can confirm that ultrafetch reads the request-2 response from the cache by looking at the request times. After the cache is cleared, request-3 should behave just like request-1.

In practice, request-3 takes less time than request-1, most likely because the Pokémon API implements server-side caching. After the first cold request, it already has the response ready and can return it faster. Reusing an already open connection to the server may also shave off some time.

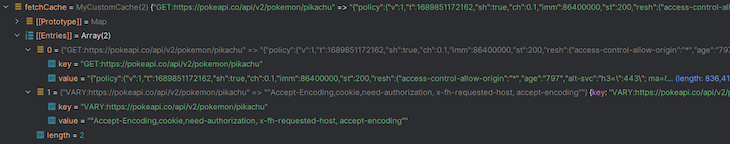

If you inspect fetchCache after request-1, you will get:

As you can see, ultrafetch uses the HTTP method and URL of the request as the key of the cache entries in the following format:

```
<HTTP_METHOD>:<request_url>
```

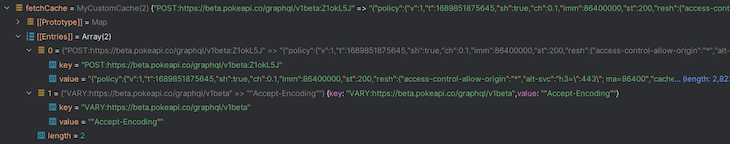

Now, replace the GET calls with some POST requests:

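```typescript
const response1 = await enhancedFetch("https://beta.pokeapi.co/graphql/v1beta", {
  method: "POST",
  // GraphQL servers expect a JSON payload with a "query" field
  headers: { "Content-Type": "application/json" },
  body: JSON.stringify({
    query: `
      {
        pokemon: pokemon_v2_pokemon(where: {name: {_eq: "pikachu"}}) {
          name
          id
        }
      }
    `,
  }),
})
```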

In this case, fetchCache will contain the following array:

This shows that ultrafetch does not cache responses based only on the destination API endpoint. Instead, it also takes into account the headers and body of the request.

Specifically, it hashes the request's headers and body and appends the resulting strings to the <HTTP_METHOD>:<request_url> key string.
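The following sketch shows how such a key could be built. buildCacheKey() is hypothetical. The article only states that the headers and body are hashed and appended to the <HTTP_METHOD>:<request_url> string, so the SHA-256 algorithm, the ":" separator, and the header normalization are assumptions rather than ultrafetch's actual format.

```typescript
import { createHash } from "node:crypto"

// Hex-encoded SHA-256 digest (assumed algorithm, for illustration only)
function hash(value: string): string {
  return createHash("sha256").update(value).digest("hex")
}

// Hypothetical key builder: "<METHOD>:<url>" plus optional header and body hashes
function buildCacheKey(
  method: string,
  url: string,
  headers: Record<string, string> = {},
  body = "",
): string {
  // Lowercase names and sort entries so header casing and order don't change the key
  const normalizedHeaders = Object.entries(headers)
    .map(([name, value]) => `${name.toLowerCase()}:${value}`)
    .sort()
    .join("\n")
  let key = `${method.toUpperCase()}:${url}`
  if (normalizedHeaders) key += `:${hash(normalizedHeaders)}`
  if (body) key += `:${hash(body)}`
  return key
}
```

Normalizing headers keeps two logically identical requests from producing different keys. Any change to the body, such as a different GraphQL query, yields a different key and therefore a separate cache entry.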

Congrats! You just learned how to boost fetch with ultrafetch!

In this article, you learned what the JavaScript Fetch API is, why its implementation in Node.js has some shortcomings, and how to tackle them with ultrafetch.

While this library extends fetch with useful functionality, using libraries to enhance fetch is not something new. For example, the fetch-retry library adds retry logic to fetch to automatically repeat requests in case of failures or network problems.
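Both libraries share the same wrapping pattern: a function that takes a fetch implementation and returns one with the same signature plus extra behavior. Below is a minimal retry wrapper written in that style. withRetry() is hypothetical and does not reproduce the fetch-retry API. It retries immediately, without backoff, on thrown errors and 5xx responses.

```typescript
// Minimal fetch-like signature for this sketch (hypothetical helper type)
type FetchLike = (url: string) => Promise<{ ok: boolean; status: number }>

// Retries on network errors and 5xx responses, up to `retries` extra attempts
function withRetry(fetchImpl: FetchLike, retries = 2): FetchLike {
  return async (url) => {
    let lastError: unknown
    for (let attempt = 0; attempt <= retries; attempt++) {
      try {
        const response = await fetchImpl(url)
        // Return non-5xx responses, or whatever the last attempt produced
        if (response.status < 500 || attempt === retries) return response
      } catch (error) {
        lastError = error
        if (attempt === retries) throw error
      }
    }
    // Only reachable when retries is negative and the loop never runs
    throw lastError ?? new Error("withRetry: retries must be >= 0")
  }
}
```

Because each wrapper returns a fetch-compatible function, wrappers like this one can in principle be layered on top of each other.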

As seen here, ultrafetch is an npm library that adds caching capabilities to the fetch and node-fetch modules. It addresses one of the major drawbacks of both implementations of the Fetch API: neither provides a standards-based way to cache server responses.

With ultrafetch, you can easily cache HTTP responses produced by any fetch-compliant implementation, saving time and avoiding wasting resources on unnecessary requests.
