When Anthropic introduced Claude Skills, they demonstrated how specialized instruction sets could transform AI assistants into domain experts.

But not every developer can use Claude at work. Many companies have IT restrictions or security policies that rule out tools like Anthropic’s. Or you may simply want to avoid context-switching between a million different AI tools.

Count me as one of those devs.

As someone who uses GitHub Copilot in my daily workflow, I was curious whether I could bring a Skills-like capability to my development environment.

With Copilot, it’s not as simple as copying prompt templates, but it can still be done. With some additional configuration and Copilot’s instructions files, I’ve been able to build a workflow in Copilot similar to what Claude Skills offers.

In this article, I’ll give a brief intro to Claude Skills and then share my strategy for implementing similar behavior with GitHub Copilot. If you want to follow along, check out my sample project on GitHub.


While Claude Skills offer powerful customization within the Claude ecosystem, many development teams have already standardized GitHub Copilot as their primary AI coding assistant. After learning about Claude Skills, I wanted to bring the same level of specialization directly into my existing workflow with Copilot.

Claude Skills make agentic AI tools more efficient through context management. The core idea is to control how much context the AI needs to accomplish a goal. Keeping that context tight makes responses faster and also reduces the likelihood of hallucinations.

LLMs excel at contextual understanding and pattern matching. They start from broad general knowledge and then home in on the information specific to a request. As you will see in both Claude Skills and my Copilot strategy, the goal is to make that core work more efficient through context management.

The strategy allows for the same beneficial features of Skills within an existing Copilot-based workflow.

Before going into my Copilot examples, it helps to understand what Claude Skills are at a high level. Claude Skills are specialized instruction sets that extend Claude’s capabilities for specific tasks or domains.

Think of them as expert personas or detailed playbooks that Claude can reference when handling particular types of work. Each Skill contains comprehensive guidelines, best practices, and domain-specific knowledge that help Claude deliver consistently high-quality results for specialized tasks.

Skills are stored as Markdown files that Claude can access and read before tackling a task. When a user’s request aligns with a particular Skill (such as creating a presentation, analyzing a spreadsheet, or writing technical documentation), Claude automatically loads the relevant instructions and follows them throughout the interaction.

Claude Skills improve efficiency with AI tooling through modular context management. Rather than manually providing domain-specific instructions in every conversation, teams can create reusable Skills that Claude loads when relevant. This allows Claude to apply specialized expertise consistently without requiring users to repeatedly share the same context or guidelines.

It helps to understand this through an example. Imagine you’ve created a custom Code Review Skill for your development team, covering your coding standards, security checks, performance patterns, error handling, testing, documentation, and architecture rules. A sample SKILL.md file for this Code Review Skill would look like the following:

```markdown
---
name: code-review
description: Use when the user asks to "do a code review", "review code", "review this file", "review this folder", or any request to analyze code for issues, review pull requests, or provide code feedback against team standards.
---

# Code Review Skill

## Purpose

This skill provides comprehensive code review capabilities aligned with our team's standards, security requirements, and architectural patterns.

## When to Use This Skill

- When reviewing pull requests or code changes
- When analyzing code for potential issues
- When providing feedback on code structure or implementation
- When checking code against team standards

## Code Review Checklist

### 1. Code Standards & Style

- **Naming Conventions**
  - Use camelCase for variables and functions (e.g., `getUserData`, `isValid`)
  - Use PascalCase for classes and components (e.g., `UserService`, `DataProcessor`)
  - Use UPPER_SNAKE_CASE for constants (e.g., `MAX_RETRY_ATTEMPTS`)
  - Avoid single-letter variables except in short loops
- **File Organization**
  - Maximum 300 lines per file
  - Group related functions together
  - Place imports at the top, organized: external libraries, internal modules, types
  - Export statements at the bottom of the file

### 2. Security Vulnerabilities

Check for these common security issues:

- SQL injection risks (always use parameterized queries)
- XSS vulnerabilities (sanitize user input before rendering)
- Hardcoded credentials or API keys (use environment variables)
- Insecure direct object references (validate user permissions)
- Missing authentication/authorization checks on sensitive endpoints

### 3. Performance Patterns

- **Database Queries**
  - Flag N+1 query patterns
  - Suggest eager loading for related data
  - Recommend indexing for frequently queried fields
- **API Calls**
  - Ensure proper caching for repeated requests
  - Check for unnecessary API calls in loops
  - Verify timeout and retry logic exists

### 4. Error Handling

- All async operations must have try-catch blocks
- Errors should be logged with context (user ID, request ID, timestamp)
- User-facing errors should never expose internal details
- Use custom error classes: `ValidationError`, `AuthenticationError`, `DatabaseError`

### 5. Testing Requirements

- Unit tests required for all business logic functions
- Integration tests required for API endpoints
- Minimum 80% code coverage for new code
- Test file naming: `[filename].test.js` or `[filename].spec.js`

### 6. Documentation Standards

- JSDoc comments required for all exported functions
- Include @param, @returns, and @throws tags
- Complex logic should have inline comments explaining the "why"
- Update README.md if public API changes

### 7. Architecture Alignment

- **Service Layer Pattern**: Business logic must be in service files, not controllers
- **Dependency Injection**: Use constructor injection for dependencies
- **Event-Driven**: Use event emitters for cross-module communication, not direct calls
- **Repository Pattern**: Database access only through repository classes

## Review Output Format

Structure your review as follows:

1. **Summary**: Brief overview of the changes and overall assessment
2. **Critical Issues**: Must be fixed before merge (security, bugs, breaking changes)
3. **Suggestions**: Improvements for code quality, performance, or maintainability
4. **Positive Feedback**: Highlight what was done well
5. **Questions**: Any clarifications needed from the author

## Example Review Comments

**Good**: "Line 45: This query could cause N+1 problem. Consider using `include` to eager load the user's orders: `User.findById(id).include('orders')`"

**Avoid**: "This is bad" or "Fix this"

## CI/CD Integration Notes

- All tests must pass before merge
- Linting errors must be resolved (ESLint configuration in `.eslintrc.js`)
- Build must succeed in staging environment
- Database migrations must be reversible
```

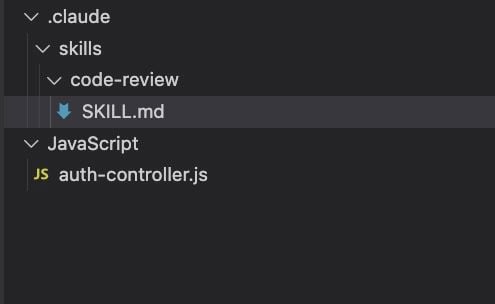
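Claude relies on the name and description in this frontmatter to decide when a Skill is relevant, so it can be worth sanity-checking that block before committing it. Here’s a minimal TypeScript sketch, assuming simple one-line `key: value` fields. The validateSkill helper is hypothetical, and its rules (lowercase letters, digits, and hyphens, up to 64 characters, matching the folder name) are assumptions to confirm against Anthropic’s current Skills documentation.

```typescript
// Minimal frontmatter reader. Assumption: single-line `key: value` fields
// between an opening and closing `---` line; this is not a full YAML parser.
function parseFrontmatter(markdown: string): Record<string, string> {
  const block = /^---\r?\n([\s\S]*?)\r?\n---/.exec(markdown);
  if (!block) return {};
  const fields: Record<string, string> = {};
  for (const line of block[1].split(/\r?\n/)) {
    const colon = line.indexOf(":");
    if (colon === -1) continue; // skip blank lines and anything that isn't key: value
    fields[line.slice(0, colon).trim()] = line.slice(colon + 1).trim();
  }
  return fields;
}

// Hypothetical pre-commit check for a SKILL.md. The name rule (lowercase
// letters, digits, hyphens, up to 64 chars) and the folder-name match are
// assumptions to confirm against Anthropic's current Skills docs.
function validateSkill(markdown: string, folderName: string): string[] {
  const fm = parseFrontmatter(markdown);
  const name = fm["name"] ?? "";
  const errors: string[] = [];
  if (!/^[a-z0-9-]{1,64}$/.test(name)) errors.push(`invalid name "${name}"`);
  if (name !== folderName) errors.push(`name "${name}" does not match folder "${folderName}"`);
  if (!fm["description"]) errors.push("missing description");
  return errors;
}

// Usage with a trimmed-down version of the Code Review Skill above.
const skillMd = [
  "---",
  "name: code-review",
  'description: Use when the user asks to "do a code review" or "review code".',
  "---",
  "",
  "# Code Review Skill",
].join("\n");
console.log(validateSkill(skillMd, "code-review")); // []
```

Keeping the check to plain string handling avoids pulling in a YAML library for what is, in this example, a two-field header.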

You would then place that SKILL.md file in .claude/skills/<SKILL_NAME>, where in this case <SKILL_NAME> would be code-review, giving you .claude/skills/code-review/SKILL.md.

Placing the Skill within the .claude folder is important for Claude Code to automatically read it in as an option when doing related tasks. With the SKILL.md in place, you can then start a Claude Code session and ask, “Can you do a review of the code of the X folder?” and it will read in that SKILL.md file as part of its work.

Here is an example interaction from my sample project where I asked it to review the code in a folder called “JavaScript” (with the SKILL.md file in place):

```text
❯ can you do a code review of the JavaScript folder?

⏺ Skill(code-review)
  ⎿  Successfully loaded skill

⏺ I'll start by exploring the JavaScript folder to understand its contents.

⏺ Explore(Explore JavaScript folder structure)
  ⎿  Done (4 tool uses · 11.6k tokens · 24s)

⏺ Read 1 file (ctrl+o to expand)

⏺ Code Review: JavaScript/auth-controller.js

  Summary

  This file is an Express.js authentication controller handling user login. It
  has several critical security vulnerabilities that must be addressed before
  this code is used in any environment. The file is short (23 lines) but
  contains significant issues.

  ---
  Critical Issues

  1. SQL Injection (Line 8-10)

  The query directly interpolates user input into the SQL string. This is the
  most severe vulnerability — an attacker can bypass authentication or
  extract/destroy the entire database.

  // VULNERABLE
  `SELECT * FROM users WHERE username = '${username}' AND password = '${password}'`

  // FIX: Use parameterized queries
  db.query('SELECT * FROM users WHERE username = $1 AND password = $2', [username, password])

  2. Plaintext Password Storage/Comparison (Line 9)

  Passwords are compared directly as plaintext in the SQL query. Passwords must
  be hashed (e.g., with bcrypt) and compared using a constant-time comparison
  function.

  // FIX: Hash lookup + bcrypt comparison
  const user = await db.query('SELECT * FROM users WHERE username = $1', [username]);
  if (user && await bcrypt.compare(password, user.password_hash)) { ... }

  3. Insecure Token Generation (Lines 19-21)

  generateToken simply concatenates 'token_' with the user ID. This is
  trivially guessable and provides no security. Use a proper JWT library or
  cryptographically secure token generator.

  // FIX: Use JWT or crypto
  const jwt = require('jsonwebtoken');
  function generateToken(id) {
    return jwt.sign({ userId: id }, process.env.JWT_SECRET, { expiresIn: '1h' });
  }

  4. Missing Error Handling (Line 5-16)

  The async handler has no try-catch block. If the database query fails, the
  error will be unhandled, potentially crashing the process or leaking internal
  details to the client.

  router.post('/login', async (req, res) => {
    try {
      // ... logic
    } catch (err) {
      logger.error('Login failed', { error: err.message });
      res.status(500).json({ error: 'Internal server error' });
    }
  });

  ---
  Suggestions

  - Input validation — Validate that username and password are present and are
    strings before querying. Consider using a validation library or middleware.
  - Rate limiting — Add rate limiting to the login endpoint to prevent
    brute-force attacks.
  - Architecture — Per the team's service layer pattern, the business logic
    (query + token generation) should be extracted into an AuthService class
    rather than living in the controller.
  - JSDoc — The generateToken function is exported implicitly through usage but
    lacks documentation. Add JSDoc with @param and @returns tags.
  - SELECT * — Avoid selecting all columns; only retrieve what's needed (e.g.,
    id, password_hash).

  ---
  Positive Feedback

  - The file is well under the 300-line limit and focused on a single concern.
  - Uses async/await rather than raw promises.
  - Proper use of Express Router for modular routing.

  ---
  Questions

  - Is there an existing authentication/JWT infrastructure elsewhere in the
    project that this should integrate with?
  - Is there a shared error-handling middleware this should defer to?
```

As you can see from the output, Claude Code first finds and loads the Code Review Skill, because it recognizes phrases from the Skill’s description that indicate it is needed. It then uses the Skill to conduct the analysis and responds with the review.
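To illustrate the load-on-demand idea, here is a toy TypeScript model. It is not how Claude actually chooses Skills (the model makes that judgment itself); the Skill interface, the quoted-phrase matching, and buildContext are all hypothetical. The point it shows is that every Skill’s short description is always present, while a full Skill body is pulled in only when a request matches.

```typescript
// Hypothetical model of a Skill: only `name` and `description` are always in context.
interface Skill {
  name: string;
  description: string;
  loadBody: () => string; // full SKILL.md body, read only when the Skill is selected
}

// Pull quoted trigger phrases such as "do a code review" out of a description.
function triggerPhrases(description: string): string[] {
  const quoted = description.match(/"[^"]+"/g) ?? [];
  return quoted.map((q) => q.slice(1, -1).toLowerCase());
}

// Toy stand-in for the model's judgment: a Skill is relevant when the
// request contains one of its quoted trigger phrases.
function selectSkills(request: string, skills: Skill[]): Skill[] {
  const text = request.toLowerCase();
  return skills.filter((s) => triggerPhrases(s.description).some((p) => text.includes(p)));
}

// Build the prompt context: a short index of every Skill, plus full bodies
// only for the Skills selected for this request.
function buildContext(request: string, skills: Skill[]): string {
  const index = skills.map((s) => `- ${s.name}: ${s.description}`).join("\n");
  const bodies = selectSkills(request, skills).map((s) => s.loadBody());
  return [index, ...bodies, `User: ${request}`].join("\n\n");
}

// Usage: only the code-review body ends up in the context for this request.
// "slide-deck" is a made-up second Skill for contrast.
const exampleSkills: Skill[] = [
  {
    name: "code-review",
    description: 'Use when the user asks to "do a code review" or "review code".',
    loadBody: () => "# Code Review Skill\n(full checklist here)",
  },
  {
    name: "slide-deck",
    description: 'Use when the user asks to "create a presentation".',
    loadBody: () => "# Presentation Skill\n(full guidelines here)",
  },
];
console.log(buildContext("Can you do a code review of the JavaScript folder?", exampleSkills));
```

The design choice worth noticing is the split between the always-loaded index and the lazily loaded bodies; that split, not the matching logic, is what keeps the context small.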

Now, with an understanding of Claude Skills, let’s dive into my strategy for achieving similar behavior with GitHub Copilot.

As I mentioned earlier, the goal is to bring a Claude Skills-like workflow to teams centered on Copilot. That way, teams limited to Copilot (or who want to avoid context switching) can still benefit from a feature similar to Claude Skills.

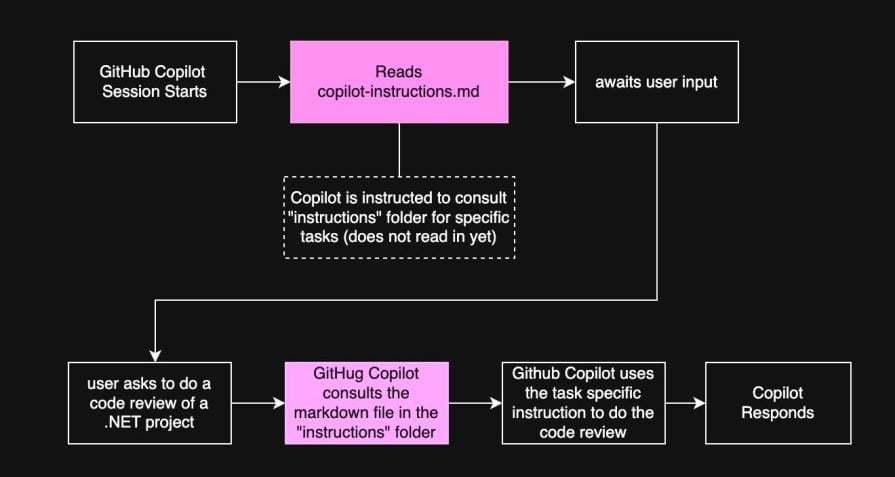

I start by creating a .github folder, which is where all of my Copilot instructions files live. The copilot-instructions.md file is read in automatically whenever you start a Copilot chat and serves as the entry point for every session.

Within the Copilot Instructions file, I’ll next guide Copilot to refer to a second “instructions” folder with specific instructions based on what I’m doing.

The files I place in this “instructions” folder also need the .instructions.md suffix in their file names to be recognized by Copilot. Using these instructions files is similar to the way Claude Skills work with a SKILL.md file within a folder. The only added step is that I have to provide an additional system prompt via the copilot-instructions.md file to reference those files when needed.

Here is the part of my copilot-instructions.md file where I outline usage of the additional instructions files:

```markdown
## Language-Specific Guidelines

This repository uses modular Copilot instructions organized by language and framework. Language-specific guidelines are automatically applied via `.instructions.md` files in `.github/instructions/` based on the file being edited:

- **Dotnet/** - .NET Web API guidelines (`dotnet-webapi.instructions.md`)
- **React/** - TypeScript React guidelines (`typescript-react.instructions.md`)
```

If you’re following along with my sample project, the end project structure looks like:

```text
.github/
├── copilot-instructions.md                 # Main instructions file (always loaded)
└── instructions/                           # Language-specific guidelines (auto-loaded by applyTo glob)
    ├── dotnet-webapi.instructions.md       # .NET Web API guidelines → **/Dotnet/**
    └── typescript-react.instructions.md    # TypeScript React guidelines → **/React/**
```

With the configuration set up, I now have more control over the system prompts and context that Copilot reads in on every session. Instead of all the rules for specific tasks being read in on each session, the domain-specific instructions are only read in when they’re needed.

The goal is to manage the context and make Copilot more efficient. Instead of using up the valuable context window, my Copilot sessions can be cleaner and focused on the tasks to be done.
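To put rough numbers on that, here is a back-of-the-envelope TypeScript sketch comparing “load every instructions file” with “load only the relevant one”. It assumes about four characters per token (a common rule of thumb; real tokenizers vary by model and content), and the file sizes in the usage are made up for illustration.

```typescript
// Assumption: roughly 4 characters per token, a common rule of thumb for
// English text. Real tokenizers vary by model and content.
const CHARS_PER_TOKEN = 4;

function estimateTokens(text: string): number {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

interface InstructionDoc {
  name: string;
  body: string;
  relevant: boolean; // does this file apply to the current task?
}

interface ContextCost {
  alwaysLoaded: number; // every file injected into every session
  scoped: number; // only the files relevant to the current task
  saved: number;
}

function contextCost(docs: InstructionDoc[]): ContextCost {
  const total = (list: InstructionDoc[]): number =>
    list.reduce((sum, d) => sum + estimateTokens(d.body), 0);
  const alwaysLoaded = total(docs);
  const scoped = total(docs.filter((d) => d.relevant));
  return { alwaysLoaded, scoped, saved: alwaysLoaded - scoped };
}

// Usage with made-up file sizes: a .NET task only needs the .NET guidelines.
const docs: InstructionDoc[] = [
  { name: "dotnet-webapi.instructions.md", body: "x".repeat(4000), relevant: true },
  { name: "typescript-react.instructions.md", body: "y".repeat(2000), relevant: false },
];
console.log(contextCost(docs)); // { alwaysLoaded: 1500, scoped: 1000, saved: 500 }
```

With only two small files the savings are modest, but they grow with every additional language or workflow you add to the instructions folder.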

With any agentic AI tool, controlling the context window is essential for efficiency and for a positive developer experience. Without proper context management, any agentic AI tool is open to inefficiencies, which lead to poor performance (slow responses), hallucinations, poor cost management, and overuse of account tokens.

A visual of the Copilot workflow when asked to “code review a .NET project” with this configuration looks like:

If you look at my sample project, you’ll see that I’ve set it to enforce standards based on the programming language. I’ve included special instructions for (1) .NET and (2) TypeScript React as examples. Here is a portion of the .NET instructions file:

```markdown
---
applyTo: '**/Dotnet/**'
---

# .NET Web API Guidelines

## Architecture & Structure

- Use minimal APIs for simple endpoints, controller-based APIs for complex scenarios
- Organize code using feature folders or clean architecture layers
- Implement dependency injection for all services
- Use `Program.cs` for service registration and middleware configuration

## Best Practices

### Controllers/Endpoints

- Return `IActionResult` or `ActionResult<T>` from controller actions
- Use attribute routing with versioning: `[Route("api/v1/[controller]")]`
- Apply appropriate HTTP method attributes: `[HttpGet]`, `[HttpPost]`, etc.
- Use `[FromBody]`, `[FromQuery]`, `[FromRoute]` for explicit binding
```

Notice that I have also included a frontmatter section at the top of the file: applyTo: '**/Dotnet/**'. Copilot reads this frontmatter and uses the glob to decide which files these guidelines apply to, tying the mapping outlined in the copilot-instructions.md file to work under the Dotnet folder.

I did the same with the React instructions using applyTo: '**/React/**' for React projects.
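To make the applyTo idea concrete, here is a simplified TypeScript sketch of how a single glob can be matched against workspace-relative paths. This is not Copilot’s actual matcher; globToRegExp and instructionsFor are hypothetical helpers that only understand `**/`, `**`, `*`, and `?`.

```typescript
// Simplified glob-to-RegExp conversion (an illustration, not Copilot's matcher).
// Supports "**/" (zero or more directories), "**" (anything), "*" (within one
// path segment), and "?" (one character); everything else is matched literally.
function globToRegExp(glob: string): RegExp {
  let pattern = "";
  for (let i = 0; i < glob.length; i++) {
    const c = glob[i];
    if (c === "*" && glob[i + 1] === "*") {
      if (glob[i + 2] === "/") {
        pattern += "(?:.*/)?";
        i += 2;
      } else {
        pattern += ".*";
        i += 1;
      }
    } else if (c === "*") {
      pattern += "[^/]*";
    } else if (c === "?") {
      pattern += "[^/]";
    } else {
      pattern += c.replace(/[.+^${}()|[\]\\]/g, "\\$&"); // escape regex metacharacters
    }
  }
  return new RegExp(`^${pattern}$`);
}

// Hypothetical shape of an instructions file with a single applyTo glob.
interface InstructionFile {
  file: string;
  applyTo: string;
}

// Return the instructions files whose applyTo glob matches a workspace-relative path.
function instructionsFor(path: string, files: InstructionFile[]): string[] {
  const normalized = path.replace(/\\/g, "/"); // treat Windows separators as "/"
  return files.filter((f) => globToRegExp(f.applyTo).test(normalized)).map((f) => f.file);
}

const sampleFiles: InstructionFile[] = [
  { file: "dotnet-webapi.instructions.md", applyTo: "**/Dotnet/**" },
  { file: "typescript-react.instructions.md", applyTo: "**/React/**" },
];
console.log(instructionsFor("Dotnet/Controllers/OrdersController.cs", sampleFiles)); // [ 'dotnet-webapi.instructions.md' ]
console.log(instructionsFor("README.md", sampleFiles)); // []
```

Because the leading `**/` can match zero directories, the same glob covers both a top-level Dotnet folder and one nested deeper in the repository.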

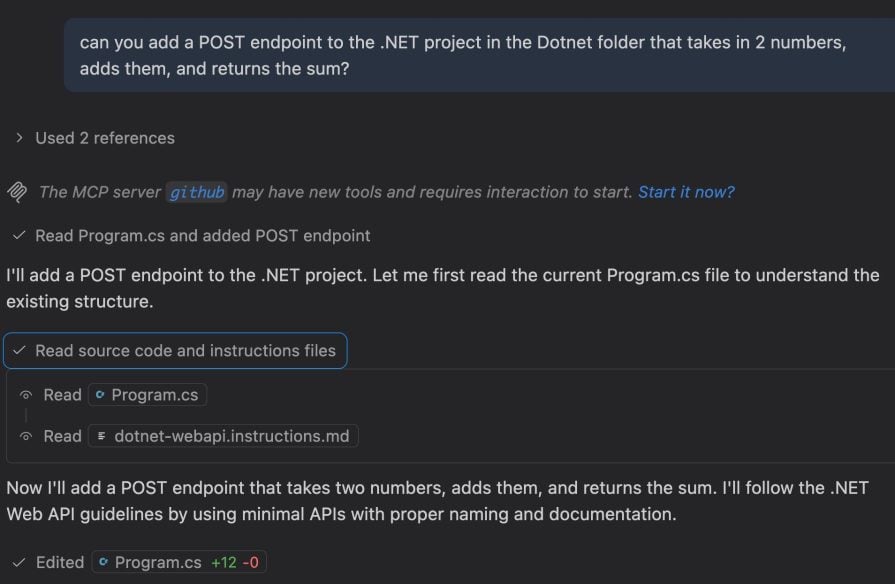

Now I can share what it looks like with this configuration in my sample project. When I start Copilot chats, it follows the rules when I ask it to do code changes or review. Here is an example where I asked Copilot to create a new endpoint in the .NET project:

Once it started doing the work, it referenced the correct dotnet-webapi.instructions.md file.

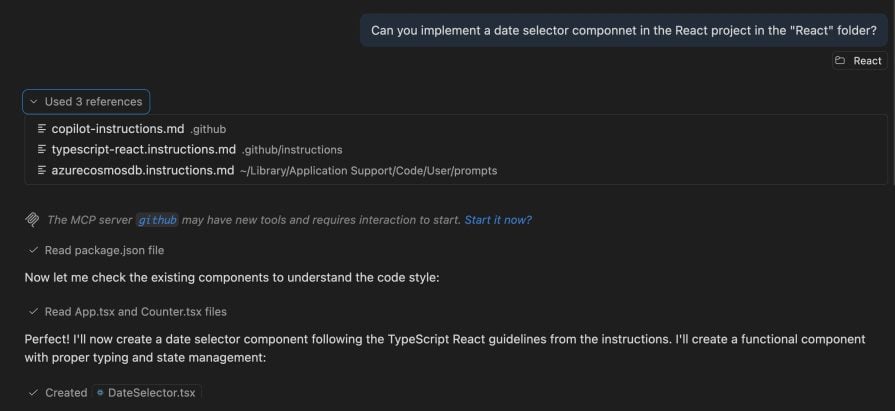

Similarly, with the React project, you’ll notice the same behavior if I ask it to add a Date Selector component:

Note here in this screenshot that Copilot referenced the typescript-react.instructions.md file to conduct its review. If you are following along with the sample project, I encourage you to try to get Copilot to make code changes and see how it is using these special instructions files.

One last example would be to ask Copilot to do a review similar to how I did in the earlier section with Claude. Here is a code review for the React project:

````text
User: Can you do a code review of the React project?

GitHub Copilot: I'll review the React project for you. Let me start by examining the files.

Read [](file:///Users/andrewevans/Documents/projects/copilot-custom-development-skills/React/src/App.tsx)
Read [](file:///Users/andrewevans/Documents/projects/copilot-custom-development-skills/React/src/Counter.tsx)
Read [](file:///Users/andrewevans/Documents/projects/copilot-custom-development-skills/React/src/main.tsx)
Read [](file:///Users/andrewevans/Documents/projects/copilot-custom-development-skills/React/package.json)
Read [](file:///Users/andrewevans/Documents/projects/copilot-custom-development-skills/React/tsconfig.json)

## React Project Code Review

Here's my comprehensive review of your React project:

### ✅ Strengths

1. **TypeScript Configuration**: Solid setup with strict mode enabled, ES2020 target, and proper JSX configuration.
2. **Component Structure**: Good separation of concerns with `App` and `Counter` components.
3. **Export Pattern**: Using named exports for `Counter` is better for testability.
4. **React Version**: Modern React 18 with Hooks.
5. **Build Setup**: Vite is a great choice for fast development and optimized builds.

### ⚠️ Issues & Recommendations

1. **App.tsx** - Missing CSS file import
   - No styling file included, making the component unstyled. Consider adding CSS modules or Tailwind.
2. **Counter.tsx** - Potential performance issues
   - `increment` function is recreated on every render. Use `useCallback` to memoize it for consistency:
     ```tsx
     const increment = useCallback(() => setCount(count + 1), [count]);
     const clear = useCallback(() => setCount(0), []);
     ```
3. **main.tsx** - Unnecessary React import
   - Line 1 imports React but only uses `React.StrictMode`. Since you're using the `jsx: "react-jsx"` compiler option, you don't need this import.
4. **Type Safety**
   - Consider adding prop types to `App` even if it currently has no props, for consistency.
5. **Missing Features**
   - No error boundaries for error handling
   - No test files visible (consider adding Jest/Vitest tests)
   - No `.gitignore` file shown (should exclude `node_modules`, `dist`, `.env`)
6. **index.html** - Not reviewed
   - Unable to check; ensure it has proper accessibility attributes and a non-empty `<div id="root">`.

### 📋 Suggested Improvements

1. Add CSS file for component styling
2. Remove unused React import from main.tsx
3. Add `useCallback` optimization to Counter
4. Create `.gitignore` if not already present
5. Add unit tests (especially for Counter increment/clear logic)
6. Consider adding a lint configuration (ESLint) - check if one exists in the root

Would you like me to implement any of these fixes?
````

In all cases, the special instructions files that I have set up are consulted in Copilot sessions. This example project is very simple, but you could imagine expanding this to more detailed tasks for team-specific workflows.

In addition to the strategy I’ve outlined, the Microsoft Copilot ecosystem offers some other options for features similar to Claude Skills.

The Microsoft Copilot ecosystem offers some features that do not require the use of instruction files. These options are for individuals who want to build workflows integrated within Microsoft products, as opposed to software development tasks.

Microsoft offers Copilot Studio as a way to create custom AI agents with specialized domain knowledge and behaviors. With Copilot Studio, you can develop workflows for things like document uploads, SharePoint connections, and linking to websites.

Inside Copilot Studio, you can define custom topics and conversation flows and integrate with Microsoft 365 apps like Teams, Outlook, and SharePoint. You can also create custom actions and plugins using Power Automate or API connectors.

Declarative Agents for Microsoft 365 Copilot enable lightweight customizations through the use of a manifest file. With Declarative Agents, you can define custom instructions and knowledge sources (like SharePoint or OneDrive) along with integrated apps and actions. Declarative Agents allow you to build personalized experiences for business processes.

Microsoft 365 Copilot Connectors allow you to extend Microsoft 365 Copilot by connecting it to external data sources and services. Copilot Connectors can use semantic indexing to provide more meaningful and efficient data retrieval.

Reflecting on my experience, I want to call out a few things I observed when working with this strategy.

First, I had mixed experiences with the instruction files being loaded automatically. Generally they seemed to load, but there were occasions where Copilot ignored them. When that happens, you can tell Copilot in the chat to reference those files directly.

Part of the initial issue was properly naming the files with the .instructions.md suffix and calling them out in the copilot-instructions.md file. Another part was that Copilot would sometimes ignore the instruction files for more generic requests it may already have knowledge of. Through some trial and error, you can tweak the instruction files to look for more specific phrases that help steer the AI toward what you want done.
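Since naming was part of the problem, a small check could catch it early. The following is a hypothetical TypeScript sketch, not a Copilot feature: it assumes, following the setup in this article, that instructions files live under .github/instructions/, end in .instructions.md, and declare an applyTo glob in their frontmatter. It works on in-memory path and content pairs so you could feed it from whatever file-walking code or CI step you already use.

```typescript
// Hypothetical lint for Copilot instructions files. Assumptions (based on the
// setup described in this article): files live under .github/instructions/,
// end in ".instructions.md", and declare an applyTo glob in their frontmatter.
interface RepoFile {
  path: string; // workspace-relative, using "/" separators
  content: string;
}

function lintInstructionFiles(files: RepoFile[]): string[] {
  const problems: string[] = [];
  for (const f of files) {
    if (!f.path.startsWith(".github/instructions/")) continue; // only check that folder
    if (!f.path.endsWith(".instructions.md")) {
      problems.push(`${f.path}: file name should end with ".instructions.md"`);
      continue;
    }
    const frontmatter = /^---\r?\n([\s\S]*?)\r?\n---/.exec(f.content);
    const applyTo = frontmatter ? /^applyTo:\s*(.+)$/m.exec(frontmatter[1]) : null;
    if (!applyTo) problems.push(`${f.path}: no applyTo glob in frontmatter`);
  }
  return problems;
}

// Usage: the second and third files would be flagged.
const repoFiles: RepoFile[] = [
  {
    path: ".github/instructions/dotnet-webapi.instructions.md",
    content: "---\napplyTo: '**/Dotnet/**'\n---\n# .NET Web API Guidelines",
  },
  { path: ".github/instructions/react-guidelines.md", content: "---\napplyTo: '**/React/**'\n---\n" },
  { path: ".github/instructions/python.instructions.md", content: "# Python Guidelines" },
];
console.log(lintInstructionFiles(repoFiles));
```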

Second, the sample project I created is simplistic and only meant as an example. A more realistic solution would include more pointed descriptions in the copilot-instructions.md file.

Development teams will have unique processes that they use for delivery. The point of my sample project was just to showcase how this works. But in the real world, a more robust solution would be required for good results.

Based on my experience, I generally think this approach would work well if your team is centered around Copilot-based workflows.

Both Copilot and Claude provide a good range of customization options and work with many different types of workflows. The approach I’ve presented takes advantage of that flexibility and gives those who mainly use Copilot the benefits of Claude Skills.

Overall, I hope you found some value in the strategy and examples I’ve shared in this post. As I worked through this strategy, I learned quite a bit about how Copilot uses system prompts and instruction files.

I also learned more about how to manage context with AI tooling, which is one of the keys to getting the most out of agentic AI tools.

I have additionally written other articles that cover similar concepts, including:

I encourage you to look at those articles for more ways you can improve your experience with Agentic AI tools.

I also encourage you to play with my sample project, and look at the different interactions with the instructions files. Hopefully, this post helped you on your journey of using Agentic AI tools. Thanks for reading!
