Editor’s note: Examples of profanity in this article are represented by the word “profanity” in order to remain inclusive and appropriate for all audiences.

Detecting and filtering profanity is a task you are bound to run into while building applications where users post (or interact with) text. These can be social media apps, comment sections, or game chat rooms, just to name a few.

Detecting profanity so that you can filter it out helps keep communication spaces safe and age-appropriate, if your app requires it.

This tutorial will guide you through building a GraphQL API that detects and filters profanity with Python and Flask. If you are only interested in the code, you can visit this GitHub repo for the demo application source code.

To follow and understand this tutorial, you will need the following:

Profanity (also known as curse words or swear words) refers to the offensive, impolite, or rude use of words and language. Profanity also helps to show or express a strong feeling towards something. Profanity can make online spaces feel hostile towards users, which is undesirable for an app designed for a wide audience.

Which words qualify as profanity is up to your discretion. This tutorial will explain how to filter words individually, so you have control over what type of language is allowed on your app.

A profanity filter is software that helps detect, filter, or modify words considered profane in communication spaces.

Using Python, let’s build an application that tells us whether a given string is profane or not, then proceed to filter it.

To create our profanity filter, we will create a list of unaccepted words, then check if a given string contains any of them. If profanity is detected, we will replace the profane word with a censoring text.

Create a file named filter.py and save the following code in it:

    def filter_profanity(sentence):
        wordlist = ["profanity1", "profanity2", "profanity3", "profanity4", "profanity5", "profanity6", "profanity7", "profanity8"]

        sentence = sentence.lower()
        for word in sentence.split():
            if word in wordlist:
                sentence = sentence.replace(word, "****")

        return sentence

If you were to pass the following arguments to the function above:

filter_profanity("profane insult")

filter_profanity("this is a profane word")

filter_profanity("Don't use profane language")

You would get the following results:

    **** ****
    this is a **** word
    don't use **** language

However, this approach has several problems: it cannot detect profanity outside its word list, and it is easily fooled by misspellings or padded words. It also requires us to maintain the word list regularly, which adds to the work. How do we improve what we have?
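To make these weaknesses concrete, here is a minimal sketch that reuses the same logic as the function above, with the word list passed in as a parameter. The name `naive_filter` and the two-entry placeholder list are assumptions made for illustration only.

```python
def naive_filter(sentence, wordlist):
    """Same logic as filter_profanity above, with the word list as a parameter."""
    sentence = sentence.lower()
    for word in sentence.split():
        if word in wordlist:
            sentence = sentence.replace(word, "****")
    return sentence


# Placeholder entries, as in the rest of this article
words = ["profanity1", "profanity2"]

# An exact match is censored, but the whole sentence is lowercased too
print(naive_filter("This is profanity1", words))          # this is ****

# Trailing punctuation makes the token differ from the list entry
print(naive_filter("this is profanity1!", words))         # this is profanity1!

# A modified spelling slips through
print(naive_filter("this is pr0fanity1", words))          # this is pr0fanity1

# str.replace also rewrites longer words that merely contain a listed word
print(naive_filter("profanity1 and profanity10", words))  # **** and ****0
```

The last case shows that the problem runs in both directions: the filter misses padded words and also partially censors words that were never on the list.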

Better-profanity is a blazingly fast Python library to check for (and clean) profanity in strings. It supports custom word lists, safelists, Unicode characters, detecting profanity in modified word spellings (also called leetspeak), and even multilingual profanity detection.

To get started with better-profanity, you must first install the library via pip.

In the terminal, type:

pip install better-profanity

Now, update the filter.py file with the following code:

    from better_profanity import profanity

    profanity.load_censor_words()

    def filter_profanity(sentence):
        return profanity.censor(sentence)

If you were to pass the following arguments once again to the function above:

filter_profanity("profane word")

filter_profanity("you are a profane word")

filter_profanity("Don't be profane")

You would get the following results, as expected:

    **** ****
    you are a **** ****
    Don't be ****

As mentioned previously, better-profanity supports profanity detection of modified word spellings, so the following examples will be censored accurately:

filter_profanity("pr0f4ne 1n5ult") # **** ****

filter_profanity("you are Pr0F4N3") # you are ****
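One way to think about catching modified spellings is to normalize common character substitutions before looking a word up. The sketch below illustrates that idea using only the standard library. It is an assumption made for illustration, not a description of how better-profanity works internally, and `LEET_MAP`, `normalize`, `contains_blocked_word`, and the `BLOCKED` set are hypothetical names.

```python
# Hypothetical substitution table; not taken from better-profanity
LEET_MAP = str.maketrans({
    "0": "o",
    "1": "i",
    "3": "e",
    "4": "a",
    "5": "s",
    "7": "t",
    "@": "a",
    "$": "s",
})

# Placeholder entries standing in for real blocked words
BLOCKED = {"profane", "insult"}


def normalize(word):
    """Lowercase a word and undo common character substitutions."""
    return word.lower().translate(LEET_MAP)


def contains_blocked_word(sentence, blocked=BLOCKED):
    """Return True if any whitespace-separated token normalizes to a blocked word."""
    return any(normalize(token) in blocked for token in sentence.split())


print(normalize("Pr0F4N3"))                       # profane
print(contains_blocked_word("you are Pr0F4N3"))   # True
print(contains_blocked_word("hello world"))       # False
```

A fixed table like this is ambiguous (for example, `1` can stand for either `i` or `l`) and it rewrites legitimate digits as well, which is part of why relying on a maintained library is usually preferable.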

Better-profanity can also tell you whether a string is profane. To do this, use:

profanity.contains_profanity("Pr0f4ni7y") # True

profanity.contains_profanity("hello world") # False

Better-profanity also allows us to provide a character to censor profanity with. To do this, use:

profanity.censor("profanity", "@") # @@@@

profanity.censor("you smell like profanity", "&") # you smell like &&&&

We have created a Python script to detect and filter profanity, but it’s pretty useless in the real world as no other platform can use our service. We’ll need to build a GraphQL API with Flask for our profanity filter, so we can call it an actual application and use it somewhere other than a Python environment.

To get started, you must first install a couple of libraries via pip.

In the terminal, type:

pip install Flask Flask_GraphQL graphene

Next, let’s write our GraphQL schemas for the API. Create a file named schema.py and save the following code in it:

    import graphene
    from better_profanity import profanity


    class Result(graphene.ObjectType):
        sentence = graphene.String()
        is_profane = graphene.Boolean()
        censored_sentence = graphene.String()


    class Query(graphene.ObjectType):
        detect_profanity = graphene.Field(
            Result,
            sentence=graphene.String(required=True),
            character=graphene.String(default_value="*"),
        )

        def resolve_detect_profanity(self, info, sentence, character):
            is_profane = profanity.contains_profanity(sentence)
            censored_sentence = profanity.censor(sentence, character)
            return Result(
                sentence=sentence,
                is_profane=is_profane,
                censored_sentence=censored_sentence
            )


    profanity.load_censor_words()
    schema = graphene.Schema(query=Query)

After that, create another file named server.py and save the following code in it:

    from flask import Flask
    from flask_graphql import GraphQLView
    from schema import schema

    app = Flask(__name__)
    app.add_url_rule("/", view_func=GraphQLView.as_view("graphql",
                     schema=schema, graphiql=True))

    if __name__ == "__main__":
        app.run(debug=True)

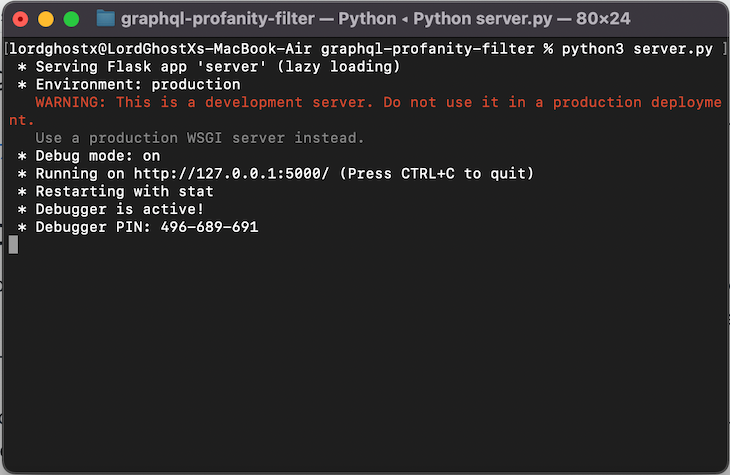

To run the server, execute the server.py script.

In the terminal, type:

python server.py

Your terminal should look like the following:

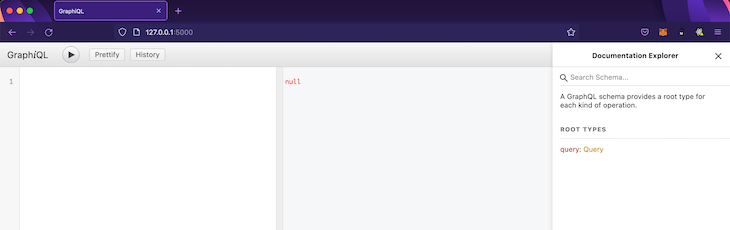

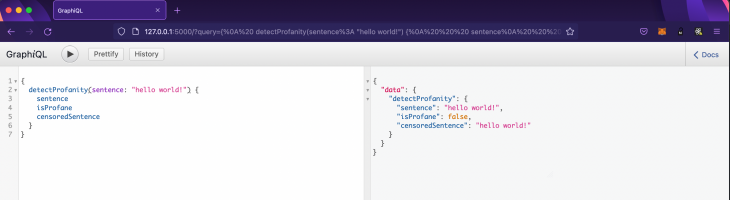

After running the server.py file in the terminal, head to your browser and open the URL http://127.0.0.1:5000. You should have access to the GraphiQL interface and get a response similar to the image below:

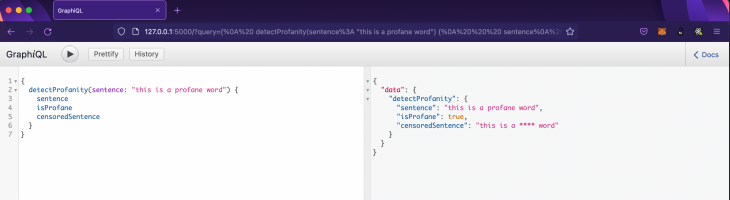

We can proceed to test the API by running a query like the one below in the GraphiQL interface:

    {
      detectProfanity(sentence: "profanity!") {
        sentence
        isProfane
        censoredSentence
      }
    }

The result should be similar to the images below:
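The same query can also be sent from outside GraphiQL as a JSON POST request, which is how other platforms would consume the API. The following is a minimal sketch using only Python's standard library, assuming the server from this tutorial is running at http://127.0.0.1:5000/. The helper names (`build_request`, `parse_result`, `detect_profanity`) are made up for illustration, and the response shape is inferred from the schema rather than captured from a real run.

```python
import json
import urllib.request

# Local development address used in this tutorial
API_URL = "http://127.0.0.1:5000/"

# Same fields as the GraphiQL query, with the arguments passed as variables
QUERY = """
query ($sentence: String!, $character: String) {
  detectProfanity(sentence: $sentence, character: $character) {
    sentence
    isProfane
    censoredSentence
  }
}
"""


def build_request(sentence, character="*"):
    """Build (but do not send) the POST request for the detectProfanity query."""
    payload = json.dumps({
        "query": QUERY,
        "variables": {"sentence": sentence, "character": character},
    }).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_result(body):
    """Extract the detectProfanity object from a JSON response body."""
    response = json.loads(body)
    if response.get("errors"):
        raise RuntimeError(response["errors"])
    return response["data"]["detectProfanity"]


def detect_profanity(sentence, character="*"):
    """Send the query to the running server and return the parsed result."""
    with urllib.request.urlopen(build_request(sentence, character)) as resp:
        return parse_result(resp.read().decode("utf-8"))
```

With the server running, calling `detect_profanity("some text", "#")` should return a dictionary with the `sentence`, `isProfane`, and `censoredSentence` keys.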

This article taught us about profanity detection, its importance, and its implementation. In addition, we saw how easy it is to build a profanity detection API with Python, Flask, and GraphQL.

The source code of the GraphQL API is available on GitHub. You can learn more about the better-profanity Python library from its official documentation.

