The usage of pipes to transport water, air, gas, or any other fluid substance has enabled tons of things we take for granted. Pipes allow us to transport water to our homes so that we can use things like the sink and bathtub. Our ovens and heating systems rely on pipes transporting gas. Even a straw for drinking out of a cup is a little pipe, if you think about it!

In software, pipes take the output of whatever expression exists on the left side of the pipe and use that as the input for the right side of the pipe. And like their real-world counterparts, the output of one pipe can easily be hooked up as the input of another.

Without pipelines, we would normally apply our function arguments by putting them inside parentheses: for example, myFunction(argumentToApply). Pipelines just give us another syntax for applying arguments. With a pipeline, we could make the same call like this: argumentToApply |> myFunction.

If you haven’t worked much with pipes, you might be thinking, “That’s it? Why is this even worth anyone talking about?” And to some degree, adding simple pipeline operators won’t change much about how JavaScript works. The exciting thing about pipes is that they make certain types of operations really easy to read!

```javascript
const toLower = str => str.toLowerCase();
const prepend = (prefix, str) => prefix + str;
const trim = str => str.trim();

// Standard way.
toLower(prepend("🚧 ", trim(" pipelines! 🚧 ")));

// With pipeline sugar 🍭
" pipelines! 🚧 "
  |> trim
  |> (str => prepend("🚧 ", str))
  |> toLower;

// Result either way: "🚧 pipelines! 🚧"
```

As you can see, either way is “right” in that it produces the same result. But using the pipeline syntax allows us to dramatically decrease the number of nested parentheses we have.
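Until some version of the operator ships, the same top-to-bottom reading order can be approximated with an ordinary function. Below is a minimal sketch: pipe is a hypothetical helper (not part of any proposal or built-in API) that feeds a value through a list of functions with Array.prototype.reduce, which is roughly what x |> f |> g means under the simple proposal.

```javascript
// Hypothetical helper: pipe(value, f, g, h) computes h(g(f(value))),
// applying the functions left to right like a chain of |> operators.
const pipe = (value, ...fns) => fns.reduce((acc, fn) => fn(acc), value);

const toLower = str => str.toLowerCase();
const prepend = (prefix, str) => prefix + str;
const trim = str => str.trim();

// Nested calls: read from the innermost parentheses outward.
const nested = toLower(prepend("🚧 ", trim(" pipelines! 🚧 ")));

// pipe(): read top to bottom, in the order the steps actually run.
const piped = pipe(
  " pipelines! 🚧 ",
  trim,
  str => prepend("🚧 ", str),
  toLower
);

console.log(nested === piped); // prints: true
```

A helper like this can only chain function values, and it has no way to special-case await, which is part of why the proposals below want dedicated syntax.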

If you’re working with data frequently, you might be doing a lot of operations on your data as it comes into your application in order to make it fit better to how your application uses it (I know I do!). Pipes are extremely useful for describing these sets of transformations since instead of having to read your data transformations backward (from the innermost parentheses to the outermost call) you can just read them top-to-bottom in the order that they execute.

Using pipelines like this also encourages us to create a lot of small, isolated functions where each function does only one thing. Later on, when we need to do more complex things, we can just stitch all of our atomic functions together in a clean, readable format! This helps with testing our application logic (we don’t have one massive function that does everything) as well as reusing logic later on.
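To make the testing and reuse point concrete, here is a sketch that builds a reusable transformation out of single-purpose functions. The names flow, collapseSpaces, and normalizeUsername are hypothetical and chosen only for this illustration; flow simply returns a function that runs its steps left to right, the same order a pipeline would.

```javascript
// Hypothetical helper: flow(f, g, h) returns input => h(g(f(input))).
const flow = (...fns) => input => fns.reduce((acc, fn) => fn(acc), input);

// Small, single-purpose steps that can each be tested on their own.
const trim = str => str.trim();
const toLower = str => str.toLowerCase();
const collapseSpaces = str => str.replace(/\s+/g, " ");

// A larger transformation stitched together from the atomic pieces.
const normalizeUsername = flow(trim, collapseSpaces, toLower);

console.log(normalizeUsername("  Ada   LOVELACE ")); // prints: ada lovelace
```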

There are a ton of exciting proposals going through TC39 right now, so why are we diving into pipelines? For one, pipelines already exist in a whole host of functional languages: Elm, Reason, Elixir, even Bash! Because they already exist, we can easily see how they improve code readability based on their usage in other ecosystems.

As another reason, pipelines have the potential to make nested operations way cleaner, similar to the way that arrow functions made anonymous functions a lot easier to follow (in addition to the slight functionality differences they add). This gets me really excited about seeing them come to JavaScript and about the effect they might have on functional programming in JavaScript.

As cool an addition to ECMAScript as pipelines will be, they won’t be added anytime soon. The proposal is currently in Stage 1, meaning that the discussion about what JavaScript pipelines should be is still in full swing (if you want a quick refresher on the spec and proposal process, check out this document for a great little chart). As it stands today, there are three main competing proposals detailing what a pipeline operator could look like in JavaScript.

As the name suggests, the simple pipes proposal is the least complex of the pipeline contenders, behaving exactly like the example we saw up above. Within this proposal, the pipeline is only responsible for taking the evaluated expression on the left and using it as the input to the function on the right.

```javascript
" string" |> toUpper |> trim
```

Using an arrow function within a simple pipeline requires that you wrap it in parentheses.

```javascript
" string" |> toUpper |> (str => str + " 😎")
```

Another “gotcha” is that the output from the left side is passed into the right-side function as a single argument. This means that if we have a function expecting two arguments, we need to wrap it in an arrow function to make sure our arguments end up in the correct order.
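Spelled out as the ordinary function calls that a simple pipeline step turns into (x |> f becomes f(x), with exactly one argument), the difference looks like this. This is a small sketch using the two-argument prepend from earlier; the variable names are hypothetical.

```javascript
const prepend = (prefix, str) => prefix + str;

const value = "STRING";

// What value |> prepend would mean: prepend(value), so str is undefined.
const wrong = prepend(value);

// What value |> (str => prepend("😎 ", str)) means: the arrow function
// receives the single piped value and supplies the other argument itself.
const right = (str => prepend("😎 ", str))(value);

console.log(wrong); // prints: STRINGundefined
console.log(right); // prints: 😎 STRING
```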

```javascript
" string" |> toUpper |> (str => prepend("😎 ", str))
```

Because of this, using simple pipes tends to promote the use of curried functions, especially if the data being passed in from the left side is the last argument to the function. If we curry our prepend function, it’ll be easier to add to our pipeline since it no longer needs to be wrapped in an arrow function.

```javascript
const prepend = prefix => str => prefix + str;

" pipeline with currying 🤯"
  |> toUpper
  |> prepend("🤯 ");
```

Since our curried prepend function returns a new function that receives the output of toUpper, it makes the pipeline considerably cleaner!
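If your existing functions take several arguments, you don’t have to rewrite each one by hand. Here is a minimal sketch assuming a hypothetical curry2 helper (written for this example, not taken from any library; general-purpose versions exist in libraries such as Ramda’s R.curry) that converts a two-argument function into the prefix => str => ... shape used above.

```javascript
// Hypothetical helper: turns (a, b) => ... into a => b => ...
const curry2 = fn => a => b => fn(a, b);

const toUpper = str => str.toUpperCase();
const prepend = curry2((prefix, str) => prefix + str);

// prepend("🤯 ") is now a one-argument function, so it could be used as a
// pipeline step directly. Called by hand, the two steps look like this:
const result = prepend("🤯 ")(toUpper(" pipeline with currying 🤯"));

console.log(result); // prints: 🤯  PIPELINE WITH CURRYING 🤯
```

Note the double space in the output: the input string already starts with a space, and the prefix adds another.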

The last noticeable thing about the simple pipeline proposal is that there is no special treatment for await. In this proposal, await within a pipeline isn’t even allowed. Using it will throw an error!

The F# pipeline proposal is super close to the simple pipelines proposal. The only difference is the ability to use await within a pipeline chain to allow for asynchronous operations. Using await in the middle of a pipeline waits for the value on the left (typically a promise) to resolve before running the functions later in the pipe.
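A rough way to get a similar effect today is an async helper that awaits every intermediate value before passing it on. This is a sketch under assumptions: pipeAsync is a hypothetical helper, and fakeFetch and countItems are stand-ins (for fetch and a JSON-processing step) so the example runs without a network. Unlike the F# proposal, which lets you choose exactly where to await, this helper awaits after every step.

```javascript
// Hypothetical helper: runs each step in order, awaiting its result
// (awaiting a plain value is harmless) before calling the next step.
const pipeAsync = async (value, ...fns) => {
  let acc = value;
  for (const fn of fns) {
    acc = await fn(acc);
  }
  return acc;
};

// Stand-in for fetch(): resolves to an object with an async json() method.
const fakeFetch = async url => ({
  json: async () => ({ url, items: [1, 2, 3] }),
});

// Hypothetical stand-in for a JSON-processing step.
const countItems = data => data.items.length;

pipeAsync(
  "https://example.com/api",
  fakeFetch,
  res => res.json(), // json() returns a promise too, so it gets awaited
  countItems
).then(count => console.log(count)); // prints: 3
```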

```javascript
url
  |> fetch
  |> await
  |> (res => res.json())
  |> await
  |> doSomeJsonOperations
```

The above example would desugar to something that looks like this:

```javascript
let first = fetch(url);
let second = await first;
let third = second.json();
let fourth = await third; // res.json() also returns a promise
let fifth = doSomeJsonOperations(fourth);
```

The last main proposal for adding pipes draws its inspiration from Hack, a PHP dialect originating out of Facebook. In Hack pipelines, the output of the expression on the left side of the pipe is dropped into a token to be used by the expression on the right side of the pipe.

In Hack, the token used is $$, but the ECMAScript proposal has been considering using something like # as a token. A Hack-style pipeline in JavaScript might look like this:

```javascript
"string" |> toUpper(#) |> # + " 😎"
```

In addition to the “token style” that requires # on the right side of the pipeline, smart pipelines (as this Hack-inspired proposal is called) would also allow a “bare style”. This “bare style” would be closer to the simple/F# pipes proposals. Based on which style is used, the pipe would assign the left value of the pipe differently. That’s what makes them so “smart”!

```javascript
// With smart pipes
" string"
  |> toUpper
  |> # + " 😎"
  |> prepend("😎 ", #);

// Without pipes
prepend(
  "😎 ",
  toUpper(" string") + " 😎"
);
```

In addition, await would also be allowed in the middle of the pipeline. The possibility of using tokens along with the ability to write asynchronous pipelines allows this proposal to supply an extremely flexible pipe that can handle virtually any group of nested operations.
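Without new syntax, the closest thing to the token style is to make every step an arrow function and let its parameter play the role of #. A minimal sketch under that assumption, using a hypothetical pipe helper (not part of any proposal) and the two-argument prepend; it reproduces the “Without pipes” version of the smart pipes example.

```javascript
// Hypothetical helper: applies each function to the previous result.
const pipe = (value, ...fns) => fns.reduce((acc, fn) => fn(acc), value);

const toUpper = str => str.toUpperCase();
const prepend = (prefix, str) => prefix + str;

// Each arrow function's parameter t stands in for the # token.
const piped = pipe(
  " string",
  t => toUpper(t),        // like |> toUpper(#), or bare style |> toUpper
  t => t + " 😎",         // like |> # + " 😎"
  t => prepend("😎 ", t)  // like |> prepend("😎 ", #)
);

const nested = prepend("😎 ", toUpper(" string") + " 😎");

console.log(piped === nested); // prints: true
```

The arrow functions make the data flow explicit, but every step pays for it with extra t => noise, which is exactly the boilerplate a placeholder token is meant to remove.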

However, adding the extra sugar to smart pipelines does complicate the syntax considerably compared to the simple pipelines and F# proposals. In addition to adding a pipeline syntax (|>), a placeholder syntax needs to be agreed upon and implemented.

Another concern with these smart pipelines is that there’s a lot of syntactic sugar and “magic” going on with how the pipe works. Adding this type of magic to the pipes could result in some confusing behavior and might even negatively impact readability in some cases.

Of course, since this proposal is still being fleshed out, these concerns are being taken into consideration and I certainly hope we end up with something that is both simple to understand and elegant to use.

As we’ve seen, pipelines aren’t close to becoming part of the ECMAScript spec: they’re only in Stage 1, and there are a lot of differing opinions about what they should be.

However, let’s not see these competing proposals as a bad thing! The number of differing opinions and proposals is a great part of the JavaScript ecosystem. JavaScript’s future trajectory is being determined out in the open — anyone can chime in with their thoughts and actually make a real impact on the language. People like you and me can go read through these discussions on the TC39 repo on GitHub and see what things are going to be added to the language!

While some may see this “open-source” development of JavaScript as a weakness (since the input of so many voices might detract from a language’s “cohesiveness”), I think it’s something many people, myself included, enjoy about the JavaScript language and ecosystem.

In addition, Babel is currently working on plugins for these three pipeline proposals so that we as developers can play with them before a proposal is fully adopted. With JavaScript, backward compatibility is super important. We don’t want older websites to break when new language features are added! Being able to try out these language features during the spec and approval process is huge for the JavaScript ecosystem since developers can voice any concerns before a language feature gets set in stone.

If you want to get started playing around with pipelines in JavaScript, check out this repo I made as a playground. Currently, the only proposal supported by Babel is the simple pipeline proposal, so that’s the only one I was able to play around with. That said, work on the other two is well underway, and I’ll try to update once the other two syntaxes are supported by the plugin.

What do you think about the pipeline proposal? Which is your favorite proposal? If you’ve got any questions feel free to reach out or tweet at me!

Debugging code is always a tedious task. But the more you understand your errors, the easier it is to fix them.

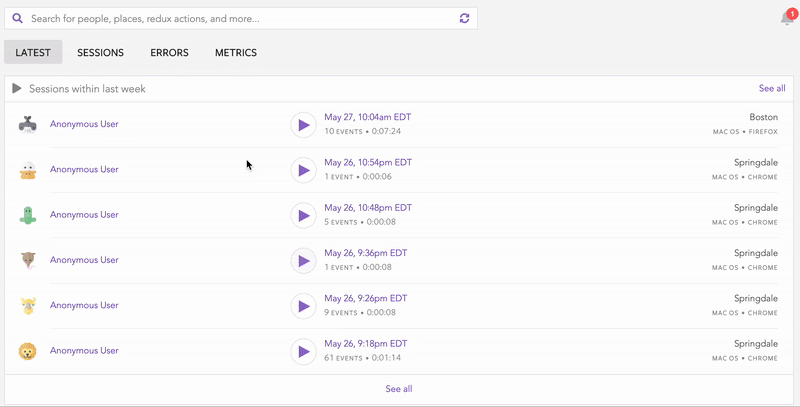

LogRocket allows you to understand these errors in new and unique ways. Our frontend monitoring solution tracks user engagement with your JavaScript frontends to give you the ability to see exactly what the user did that led to an error.

LogRocket records console logs, page load times, stack traces, slow network requests/responses with headers + bodies, browser metadata, and custom logs. Understanding the impact of your JavaScript code will never be easier!

Discover five practical ways to scale knowledge sharing across engineering teams and reduce onboarding time, bottlenecks, and lost context.

Discover what’s new in The Replay, LogRocket’s newsletter for dev and engineering leaders, in the March 4th issue.

Paige, Jack, Paul, and Noel dig into the biggest shifts reshaping web development right now, from OpenClaw’s foundation move to AI-powered browsers and the growing mental load of agent-driven workflows.

Check out alternatives to the Headless UI library to find unstyled components to optimize your website’s performance without compromising your design.

Hey there, want to help make our blog better?

Join LogRocket’s Content Advisory Board. You’ll help inform the type of content we create and get access to exclusive meetups, social accreditation, and swag.

Sign up now