When users perceive that AI systems are developed with their best interests in mind, they are more inclined to trust the technology and engage with it confidently. Following best practices for designing ethical AI user interfaces benefits both designers and users.

In this article, we’ll talk about essential best practices for designing ethical AI user interfaces, offering guidance on creating interfaces that prioritize user trust and confidence. We’ll explore the importance of conducting user research to gain insights into user perspectives on AI and their expectations. Additionally, we’ll discuss the significance of transparency in AI algorithms and decision-making processes, along with strategies for designing interfaces that provide explanations for AI-driven outcomes.

Designers should never skip over user research when creating AI interfaces. Only by conducting research can you truly understand what your users want and expect from AI systems. By diving into user research, you gain invaluable insights and feedback on how users actually perceive and interact with AI technology.

Now, let’s explore the different ways you can conduct user research. Interviews are in-depth conversations that surface individual insights and perspectives. Surveys, on the other hand, let you cast a wide net, capturing input from a diverse range of users and giving you a broader view of your audience. By combining multiple research methods, you can gather both qualitative and quantitative data to inform design decisions that meet your users’ specific needs.

After gathering your research findings, synthesize the data to identify key insights that will help you address potential biases and concerns related to AI systems. Biases may already be present in the data used to train AI models or in the algorithms themselves. By proactively addressing these biases, designers can mitigate the potential negative impacts and promote fairness and inclusivity.

Many people are skeptical about AI because of misinformation or a lack of understanding of how it produces results. That’s why it’s important for designers to document and explain the underlying AI algorithms and decision-making processes within the interface.

By providing clear and accessible documentation, designers can help users grasp how AI systems function and how they reach their outcomes. Being transparent establishes a foundation of trust and enables users to make informed judgments about whether the AI system is fair and whether they can rely on it.

One important aspect of transparency is disclosing data sources and addressing potential biases. AI algorithms rely heavily on data for training and decision-making. To ensure ethical design, designers should be upfront about the data sources used in AI systems and highlight any potential biases.

Acknowledging the limitations and biases present in the data helps users understand that AI isn’t a magical solution that always produces accurate and unbiased results. It also creates an opportunity to work towards fair and inclusive AI outcomes.

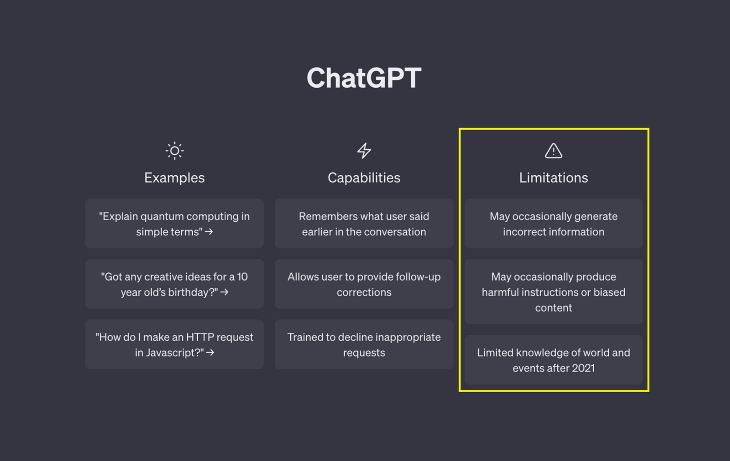

For instance, on ChatGPT’s website, there is a clear notification about the limitations of generating correct information and unbiased content. This prevents users from assuming that ChatGPT is always right and encourages them to fact-check any information it generates.

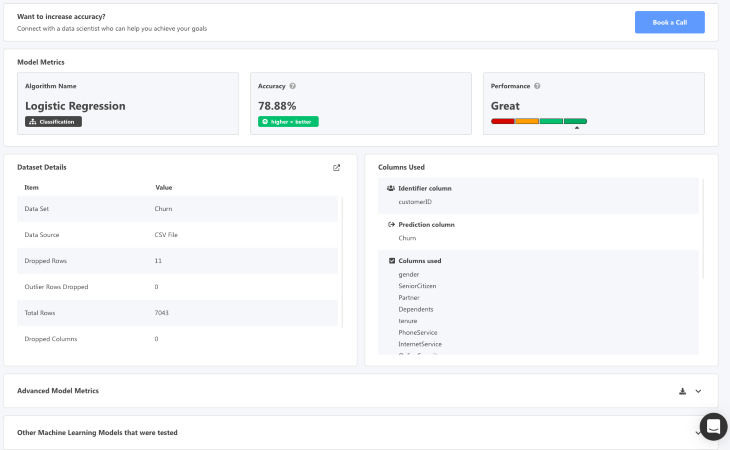

To further enhance trust in AI interfaces, designers should provide insights into the behavior and performance of the underlying AI models. Some common performance metrics that might be insightful to users include model accuracy (which tells how often the AI is correct overall), precision (which measures how many of its positive predictions are really true), and recall (which measures how many of the actual positive cases the AI manages to find).

When AI metrics are presented in a clear and understandable manner, users can assess the reliability of the AI system and decide for themselves how much to trust it. Transparently reporting model behavior enables users to put AI-generated recommendations in context and make better decisions based on them.
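As a rough illustration of turning raw evaluation results into user-facing statements, the sketch below computes accuracy, precision, and recall from the four counts of a binary classifier and phrases each one in plain language. The `ConfusionCounts` shape, the function names, and the wording of the messages are all assumptions made for this example, not part of any particular product or library.

```typescript
// Hypothetical shape: counts from evaluating a binary classifier on a test set.
interface ConfusionCounts {
  truePositives: number;
  falsePositives: number;
  trueNegatives: number;
  falseNegatives: number;
}

interface ModelMetrics {
  accuracy: number;  // share of all predictions that were correct
  precision: number; // share of positive predictions that were really positive
  recall: number;    // share of actual positives that the model found
}

// Returns 0 when the denominator is 0 so the interface never displays NaN.
function safeDivide(numerator: number, denominator: number): number {
  return denominator === 0 ? 0 : numerator / denominator;
}

function computeMetrics(c: ConfusionCounts): ModelMetrics {
  const total =
    c.truePositives + c.falsePositives + c.trueNegatives + c.falseNegatives;
  return {
    accuracy: safeDivide(c.truePositives + c.trueNegatives, total),
    precision: safeDivide(c.truePositives, c.truePositives + c.falsePositives),
    recall: safeDivide(c.truePositives, c.truePositives + c.falseNegatives),
  };
}

// Translates the numbers into sentences a non-expert can read (assumed wording).
function describeMetrics(m: ModelMetrics): string[] {
  const pct = (value: number): string => `${Math.round(value * 100)}%`;
  return [
    `Correct overall: ${pct(m.accuracy)} of predictions`,
    `When it flags something, it is right ${pct(m.precision)} of the time`,
    `It finds ${pct(m.recall)} of the cases it should flag`,
  ];
}
```

Keeping the calculation separate from the wording means the copy can be tested with users and revised without touching the math.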

When designing ethical AI interfaces, designers should remember to provide explanations for the outcomes and recommendations generated by AI. Users deserve to understand why a certain recommendation or decision was made, so explaining how the AI got to that outcome can help educate users on AI-driven processes.

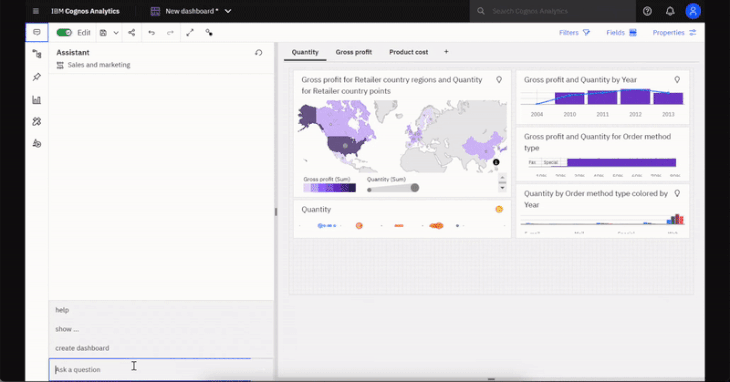

In this example, IBM Cognos Analytics’ Assistant feature leverages Watson AI to provide users with recommendations and insights. The interface for the Watson insight clearly describes what Watson can detect and how it arrives at its recommendations.

By offering clear explanations, designers can increase user trust, promote transparency, and help users make informed choices based on AI-driven insights.

Designers should present AI-generated information in a user-friendly and easily understandable manner. Whenever complex technical details are included in messaging and communication, translate them into basic terminology and use accessible language to help all users understand the information.

When possible, include visuals to further help communicate the message. The average user is not an expert in AI or technology, so explaining complex information in layman’s terms will ensure that everyone can understand it.

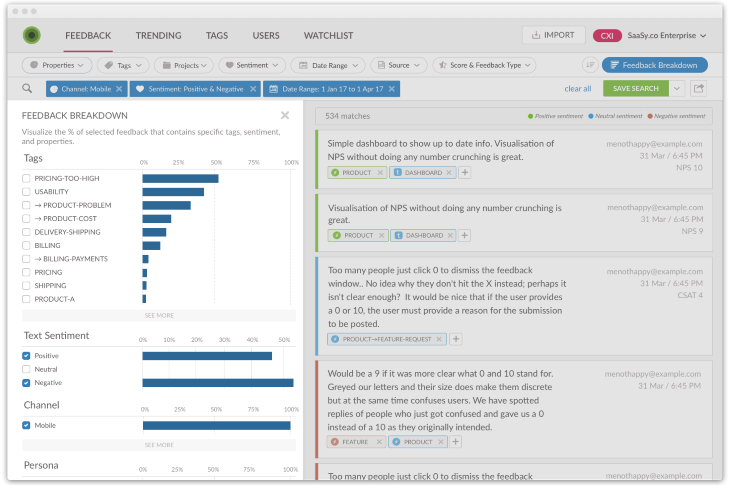

Visualizations and interactive elements can be used to strengthen the messaging of explanatory interfaces. Graphs, charts, and diagrams can help users grasp patterns, trends, and correlations in the data.

Interactive features allow users to explore the underlying information and dive deeper into AI-driven insights. By incorporating these elements thoughtfully, designers can facilitate user understanding and engagement with AI-generated outcomes.

Communicating the limitations, uncertainties, and potential errors of AI systems can help set realistic user expectations and promote responsible engagement with AI-driven outcomes. Here are some ways to ensure transparency with users when designing AI-driven interfaces.

AI is not flawless and can definitely come with limitations, such as being sensitive to certain input conditions or lacking contextual understanding. This can be due to the data that the AI system is trained on.

By transparently communicating these limitations, designers can help users understand the boundaries of AI capabilities and prevent unrealistic expectations.

AI systems may encounter uncertainties and errors in generating outcomes or recommendations. Designers should proactively address these uncertainties and potential errors to provide users with a realistic understanding of the AI system’s reliability.

Communicating the margin of error or uncertainty associated with AI-driven outcomes allows users to interpret and contextualize the information more accurately.
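One way to surface uncertainty is to show a confidence figure alongside each result and soften the wording as confidence drops. The sketch below assumes the model exposes a confidence score between 0 and 1; the `Prediction` shape, the 0.9 and 0.6 thresholds, and the message text are illustrative choices that would need tuning and user testing for a real product.

```typescript
// Hypothetical shape: a model output with a confidence score between 0 and 1.
interface Prediction {
  label: string;
  confidence: number;
}

type ConfidenceLevel = "high" | "medium" | "low";

// Thresholds are assumptions for illustration only.
function confidenceLevel(confidence: number): ConfidenceLevel {
  if (confidence >= 0.9) return "high";
  if (confidence >= 0.6) return "medium";
  return "low";
}

function describePrediction(p: Prediction): string {
  // Clamp so that out-of-range scores never produce values like 140%.
  const clamped = Math.min(1, Math.max(0, p.confidence));
  const percent = Math.round(clamped * 100);
  const messages: Record<ConfidenceLevel, string> = {
    high: `${p.label} (${percent}% confidence)`,
    medium: `${p.label} (${percent}% confidence). Please double-check this result.`,
    low: `Possibly ${p.label} (${percent}% confidence). This result is uncertain and may be wrong.`,
  };
  return messages[confidenceLevel(clamped)];
}
```

Pairing the number with a short instruction helps users who do not know how to interpret a percentage on its own.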

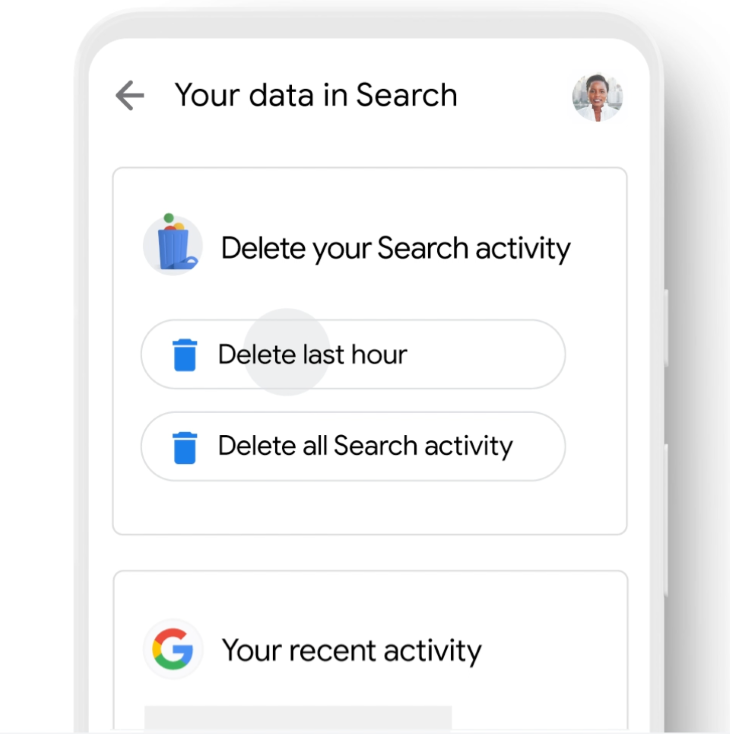

Users have a right to their own data privacy, so when it comes to AI systems collecting user data, designers must be careful about how they handle the data collection process. Respecting user privacy in AI user interfaces demonstrates a commitment to ethical practices and user rights.

By collecting only necessary data, obtaining informed consent, and implementing security measures, designers can foster user trust and confidence in the AI system. Prioritizing user privacy also ensures that users have control over their personal information and feel comfortable engaging with AI technology.

You should carefully assess the data requirements of the AI system and avoid excessive or unnecessary data collection. If the AI system only requires location data, there’s no need to ask for credit card information.

By minimizing data collection, you protect user privacy and reduce potential risks associated with data breaches or misuse. This ensures that user privacy is respected throughout their experience using AI products.
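In code, data minimization can be enforced with an allowlist: anything the feature does not explicitly need is dropped before it is stored or sent anywhere. The sketch below follows the location example above; the field names `latitude` and `longitude` and the `minimizePayload` helper are hypothetical.

```typescript
// Hypothetical allowlist: the only fields this imagined location feature needs.
const REQUIRED_FIELDS = ["latitude", "longitude"] as const;
type RequiredField = (typeof REQUIRED_FIELDS)[number];

// Copies only allowlisted fields; everything else in the raw input is discarded.
function minimizePayload(
  raw: Record<string, unknown>
): Partial<Record<RequiredField, unknown>> {
  const minimized: Partial<Record<RequiredField, unknown>> = {};
  for (const field of REQUIRED_FIELDS) {
    if (field in raw) {
      minimized[field] = raw[field];
    }
  }
  return minimized;
}
```

An allowlist fails safe: a field added to a form later is not collected until someone deliberately adds it to the list.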

Designers should also provide the option for users to delete any data that was previously collected and used by their application. This gives users control over their own data and strengthens their trust in using AI applications.

Designers should always seek user consent before collecting personal data for AI systems. Without consent, users may feel their privacy is violated.

When asking for consent, be clear about how the data will be used and set boundaries. Explain the purpose of data collection, storage duration, and any involvement of third-party systems. Respecting user consent and providing transparency builds trust and fosters an ethical AI ecosystem that values privacy.
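A consent prompt can be generated from structured data so that purpose, storage duration, and third-party involvement are never left out. The following is a minimal sketch under assumed names (`ConsentRequest`, `buildConsentPrompt`, `mayCollect`); the wording is a placeholder and not legal text.

```typescript
// Hypothetical description of what the user is being asked to agree to.
interface ConsentRequest {
  purpose: string;        // phrased to follow "collect data to ..."
  retentionDays: number;  // how long the data is stored
  thirdParties: string[]; // external systems that receive the data
}

type ConsentState = "granted" | "denied" | "not_asked";

// Builds the message shown to the user; all three disclosures are always present.
function buildConsentPrompt(req: ConsentRequest): string {
  const sharing =
    req.thirdParties.length === 0
      ? "It is not shared with third parties."
      : `It is shared with: ${req.thirdParties.join(", ")}.`;
  return (
    `We would like to collect data to ${req.purpose}. ` +
    `It is stored for ${req.retentionDays} days. ${sharing}`
  );
}

// Collection is allowed only after an explicit "granted"; silence is not consent.
function mayCollect(state: ConsentState): boolean {
  return state === "granted";
}
```

Treating `not_asked` the same as `denied` keeps the default on the side of the user.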

Users have different preferences and boundaries when it comes to interacting with AI. Designers should prioritize user control and personalization in AI interfaces, allowing users to customize their experience according to their needs.

Some users may want to limit AI involvement or opt out of certain AI features. To respect these preferences, designers can provide accessible settings that allow users to adjust the behavior of the AI system.

By giving users the freedom to define parameters, set boundaries, and personalize AI responses, we can create a more user-centric approach. It’s important to acknowledge and accommodate the diverse user preferences, offering options that allow them to tailor their AI interface.
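User control of this kind usually comes down to a small settings object that every AI feature checks before it runs. The sketch below shows one possible shape; the setting names, defaults, and helper functions are assumptions for illustration.

```typescript
// Hypothetical per-user settings for AI behavior.
interface AiPreferences {
  aiEnabled: boolean;           // master switch: off disables every AI feature
  suggestions: boolean;         // opt in or out of AI suggestions
  personalization: boolean;     // opt in or out of personalized responses
  tone: "concise" | "detailed"; // preferred style of AI responses
}

// Assumed defaults; personalization stays off until the user turns it on.
const DEFAULT_PREFERENCES: AiPreferences = {
  aiEnabled: true,
  suggestions: true,
  personalization: false,
  tone: "concise",
};

// Returns a new object so the previous settings are never mutated.
function updatePreferences(
  current: AiPreferences,
  changes: Partial<AiPreferences>
): AiPreferences {
  return { ...current, ...changes };
}

// A feature is active only if both the master switch and its own toggle are on.
function isFeatureActive(
  prefs: AiPreferences,
  feature: "suggestions" | "personalization"
): boolean {
  return prefs.aiEnabled && prefs[feature];
}
```

A single master switch gives users who want no AI involvement one obvious place to turn it off.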

Designers can enhance the AI user interface by establishing feedback channels. This creates a feedback loop for continuous improvement. Actively seeking user input, encouraging issue reporting, and addressing concerns promptly fosters user satisfaction, trust, and confidence in the AI system.

Accessible feedback mechanisms, such as feedback forms, in-app messaging, or dedicated support channels allow users to submit feedback. Actively encouraging user feedback enables designers to gain valuable insights, identify issues, and gather suggestions for improvement.

Creating an environment where users feel comfortable reporting issues and concerns is crucial for improving the AI interface. When reporting is easy and welcomed, users are more likely to share their experiences, flag potential problems, and suggest enhancements.

Promptly addressing user feedback and resolving issues is equally important. Designers and developers should prioritize and categorize user feedback based on urgency and significance. Acknowledging receipt of feedback and keeping users informed about the progress of issue resolution cultivates transparency and fosters trust in the AI system.
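Prioritizing by urgency and significance can be as simple as scoring each item and sorting the queue. The sketch below assumes a three-point scale for both dimensions and multiplies them; the `FeedbackItem` shape, the scoring rule, and the status messages are illustrative, and a real team would likely weigh these differently.

```typescript
type Level = 1 | 2 | 3; // 3 is the highest

type FeedbackStatus = "received" | "in_progress" | "resolved";

// Hypothetical feedback record.
interface FeedbackItem {
  id: string;
  message: string;
  urgency: Level;
  significance: Level;
  status: FeedbackStatus;
}

// Assumed scoring rule: urgency multiplied by significance.
function priorityScore(item: FeedbackItem): number {
  return item.urgency * item.significance;
}

// Returns a new array sorted from highest to lowest priority.
function triage(items: FeedbackItem[]): FeedbackItem[] {
  return [...items].sort((a, b) => priorityScore(b) - priorityScore(a));
}

// Message shown to the user so they know where their report stands.
function statusMessage(item: FeedbackItem): string {
  const messages: Record<FeedbackStatus, string> = {
    received: "Thanks, we received your feedback.",
    in_progress: "We are working on the issue you reported.",
    resolved: "The issue you reported has been resolved.",
  };
  return messages[item.status];
}
```

Showing the status message back to the person who filed the report covers the acknowledgment and progress updates described above.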

Designers should actively solicit and value user feedback, listen to their concerns, and promptly address any emerging issues. By embracing this iterative feedback loop, designers can gather invaluable insights, enhance user satisfaction, and optimize the AI interface to align with user expectations.

The collaborative efforts between designers and users lay the foundation for continuous improvement, ensuring the AI system remains responsive, reliable, and attuned to the users’ evolving needs.

As AI adoption rapidly accelerates, ethical design principles are essential for responsible and beneficial AI use. By implementing these best practices, designers can foster user confidence, transparency, and trust with AI systems. The ethical design of AI user interfaces is a shared responsibility that contributes to the beneficial integration of AI technology into our lives.

