At Google I/O 2023, one of the major highlights was generative AI, the branch of artificial intelligence that focuses on models and algorithms capable of generating new content, such as text, images, code, and video, from simple natural language prompts.

During the event, Google announced several new AI products that enable developers to add intelligent capabilities to their applications. One of these products is Google’s PaLM API, a generative AI platform that allows developers to leverage Google’s large language models, such as PaLM 2, to build innovative solutions.

Google also unveiled a set of AI extensions that connect the PaLM API with Firebase, simplifying the integration process for developers seeking to incorporate the PaLM API into their own applications.

In this article, we’ll explore four AI extensions and learn how to incorporate one into your application.


Generative artificial intelligence (AI) is a subset of AI that creates new content, including text, graphics, and even audio. For text, it relies on large neural networks called large language models (LLMs), which are trained on massive amounts of data to learn the patterns and structures of human language. When you provide a prompt to an LLM, such as a word, phrase, or question, the LLM generates a response that is relevant to the prompt and coherent.

Generative AI has many applications and benefits across various domains and industries, such as education, entertainment, health care, and more. A popular use case is building chatbots that can converse naturally and intelligently with users, such as OpenAI’s ChatGPT and Google’s Bard.

However, generative AI also comes with challenges and risks: it can produce harmful or biased content, its output is hard to check for accuracy and quality, using or sharing that output raises ethical and legal questions, and substituting machine-created content for human creativity has social and cultural effects. Hence, generative AI needs careful and responsible development and use, as well as continuous research and evaluation.

PaLM stands for Pathways Language Model. It is a transformer-based large language model (LLM) developed by Google AI. PaLM was first announced in April 2022.

In May 2023, Google announced a new iteration called PaLM 2, which is trained on five times more data than PaLM. PaLM 2 is good at math, coding, advanced reasoning, and multilingual tasks like translation. It is currently used in Bard and Google Workspace products.

Overall, PaLM 2 is a more powerful and versatile model, and is the first to be open to external users (the public) through the PaLM API.

Note: The PaLM API is currently in public preview. Hence, production applications are not supported at this time.

Currently, the Google PaLM API consists of three endpoints: chat, text, and embeddings.

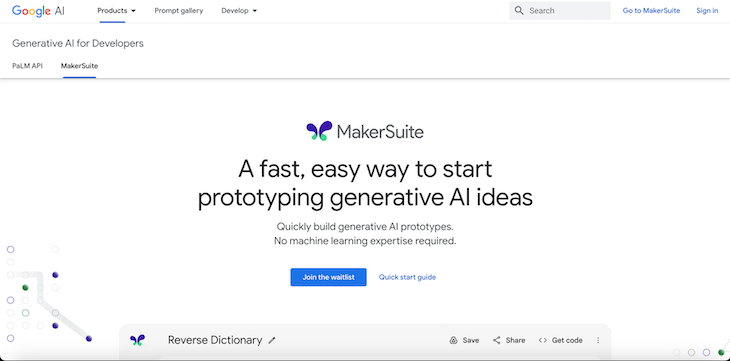

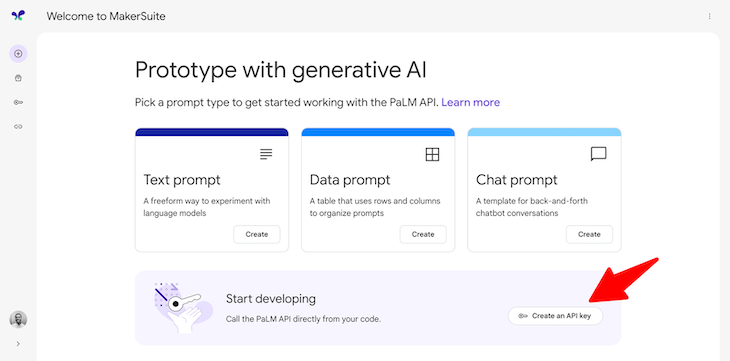
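
To make the three endpoints more concrete, here is a minimal sketch of how a request to each one might be assembled. The base URL, the `v1beta2` version, the model names (`text-bison-001`, `chat-bison-001`, `embedding-gecko-001`), and the request body shapes are assumptions based on the public preview REST documentation, not something this article specifies, and `buildPalmRequest` is a hypothetical helper. Check the current API reference before relying on any of them.

```javascript
// Hypothetical helper: builds (but does not send) a PaLM API REST request.
// Endpoint paths, model names, and body shapes are assumptions based on the
// public preview (v1beta2) docs; verify them against the current reference.
const BASE_URL = 'https://generativelanguage.googleapis.com/v1beta2';

const ENDPOINTS = {
  text: {
    path: 'models/text-bison-001:generateText',
    body: (input) => ({ prompt: { text: input } }),
  },
  chat: {
    path: 'models/chat-bison-001:generateMessage',
    body: (input) => ({ prompt: { messages: [{ content: input }] } }),
  },
  embeddings: {
    path: 'models/embedding-gecko-001:embedText',
    body: (input) => ({ text: input }),
  },
};

function buildPalmRequest(endpoint, input, apiKey) {
  const spec = ENDPOINTS[endpoint];
  if (!spec) {
    throw new Error(`Unknown endpoint: ${endpoint}`);
  }
  return {
    url: `${BASE_URL}/${spec.path}?key=${encodeURIComponent(apiKey)}`,
    options: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify(spec.body(input)),
    },
  };
}

// Usage: the result can be passed to fetch(url, options) to send the request.
const { url, options } = buildPalmRequest(
  'text',
  'Write a haiku about Firebase',
  'YOUR_API_KEY'
);
console.log(url);
console.log(options.body);
```

Keeping the request construction separate from the network call makes it easy to inspect what would be sent before spending any quota.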

Alongside the PaLM API, Google also launched a new platform called MakerSuite.

MakerSuite is a tool that lets developers start building applications with Google’s generative language models. It makes prompt design simple, so you can develop and share working prototypes of LLM-powered applications within minutes.

MakerSuite lets you quickly try out models and experiment with three types of prompts: text prompt, data prompt, and chat prompt.

No previous machine-learning expertise is required to use this tool and it’s all done right from the browser without any setup required. To learn more about this, visit the official website.

Both PaLM and GPT-3 are powerful language models that can handle various natural language processing tasks. At a basic level, PaLM is similar to rival transformer-based models, including OpenAI’s GPT-3 and GPT-4 models.

Here are some of the key differences between PaLM and GPT-3:

Currently, there are two ways to access the PaLM API. The first is to join the waitlist, and then get your API key via MakerSuite. The second way is to use Vertex AI if you are already a Google Cloud customer.

Quick note:

At the time of writing, the PaLM API is only available in the United States. Google plans to make it available in other regions in the future.

Using the PaLM API directly can be challenging and time-consuming, especially if you need to integrate it with your Firebase apps. That’s why Google has also released several PaLM API Firebase Extensions: pre-packaged, serverless solutions that let you quickly integrate the PaLM API into your app.

The Chatbot with PaLM API Firebase Extension enables developers to establish and manage conversations between users and large language models through the PaLM API, using Cloud Firestore as the database.

With this extension, you can create chatbot applications that enhance user experience and interaction with natural responses. You can further customize the chatbot’s behavior by providing a prompt to define the chatbot’s persona, tone, and style.

To use this extension, you need to provide a Firestore collection path that will store the conversation history, which is represented as documents. Each document will contain a message field for the user input and a response field for the chatbot output.

The extension monitors the collection for new message entries and then queries the PaLM API for a suitable response while using the chat’s previous messages as context. The extension then writes the response back to the triggering document in the response field.
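
From the client’s side, the flow described above amounts to writing a document and waiting for the extension to fill in the reply. The following is a minimal sketch of that round trip, not the extension’s own code. `askChatbot` is a hypothetical helper, and the `message` and `response` field names follow the description above (the actual names depend on how the extension is configured). The Firestore functions are passed in as a parameter so the sketch has no dependencies of its own.

```javascript
// Hypothetical helper: writes a user message, then resolves with the
// chatbot's reply once the extension has written the response field.
// `fs` must provide addDoc(collectionRef, data) and onSnapshot(docRef, cb),
// matching the functions of the same name in 'firebase/firestore'.
async function askChatbot(fs, collectionRef, message) {
  const docRef = await fs.addDoc(collectionRef, { message });

  return new Promise((resolve) => {
    const unsubscribe = fs.onSnapshot(docRef, (snapshot) => {
      const data = snapshot.data();
      // The first snapshot holds only our message; keep listening until
      // the response field shows up.
      if (data && data.response !== undefined) {
        unsubscribe();
        resolve(data.response);
      }
    });
  });
}
```

In an app, this could be called as `askChatbot({ addDoc, onSnapshot }, collection(db, 'chats'), 'Hello')`, where `chats` is a placeholder collection path. The sketch has no timeout or error handling, so a real client would want to add both.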

The Call PaLM API Securely Firebase Extension makes use of App Check to provide secure API endpoints. It allows developers to interact with the PaLM API from their Firebase apps. App Check is a service that provides additional security for your Firebase backend resources by blocking requests from unauthorized apps.

The extension stores the API key as a secret in Cloud Secret Manager, allowing the API endpoint to access it directly without including it in the request. The API endpoints are deployed as Firebase Callable functions, which require users to be signed in as a Firebase Auth user to be able to call the functions from their client applications successfully.

The deployed endpoint functions as a thin wrapper, enabling developers to send the same request body as they would when directly accessing the PaLM API. This extension supports all the PaLM API endpoints: chat, text, and embeddings.

The Language Tasks with PaLM API Extension allows developers to use the PaLM API to perform various text-based tasks, such as translation and classification.

The extension operates by watching specific collections in Cloud Firestore, a scalable database for Firebase apps, for new documents. For each task, you create a custom prompt, which is a text input that tells the PaLM API what to do. The prompt can also contain Handlebars templates, which are placeholders for variables that are filled with values from the document that triggered the extension.

For example, a prompt for translation could be:

Translate {{text}} from {{source_language}} to {{target_language}}

When a new document is added to the collection, the extension fills the variables with the relevant values from the document and sends the prompt to the PaLM API. The PaLM API then produces a response based on the prompt and writes it back to the document that triggered the extension in a configurable response field.

For example, a document with the following values:

text: "Hello world"

source_language: "English"

target_language: "Spanish"

would receive a response like this:

Hola mundo
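
The substitution step can be illustrated with a few lines of JavaScript. This is only a sketch of the idea: `fillPrompt` is a hypothetical helper that handles simple `{{name}}` placeholders, while Handlebars templates support considerably more than this.

```javascript
// Hypothetical helper: replaces each {{name}} placeholder with the value of
// the matching field in the document. Unknown placeholders are left as-is.
function fillPrompt(template, doc) {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(doc, name) ? String(doc[name]) : match
  );
}

const template =
  'Translate {{text}} from {{source_language}} to {{target_language}}';
const doc = {
  text: 'Hello world',
  source_language: 'English',
  target_language: 'Spanish',
};

console.log(fillPrompt(template, doc));
// prints "Translate Hello world from English to Spanish"
```

The filled-in string is what gets sent to the PaLM API as the prompt.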

The extension can deal with multiple tasks with different prompts by installing multiple instances of the extension, each configured for a specific task and collection. The extension also provides tools and guidance for developers to build responsibly with generative AI, such as filters, monitoring, and evaluation.

The Language Tasks with PaLM API Extension is an effective and flexible way for Firebase developers to use the PaLM API to create natural language applications without writing custom code or managing API keys.

The Summarize Text with PaLM API Firebase Extension is an AI-powered tool designed to generate summaries for various applications, such as news articles, blog posts, product reviews, and more. You can use this extension to provide concise and informative summaries for your users or to extract key information from large texts.

The extension monitors a Firestore collection that contains documents with text to be summarized. The extension queries the PaLM API with a summarization prompt based on the text, and writes the summary back to the document in a configurable field.

Typical use cases include summarizing customer feedback, abstracting long articles, and condensing user-generated content.

Let’s build a simple web app that allows users to enter some text and get a summary of it using the Summarize Text with PaLM API Firebase Extension.

To follow this tutorial, you will need:

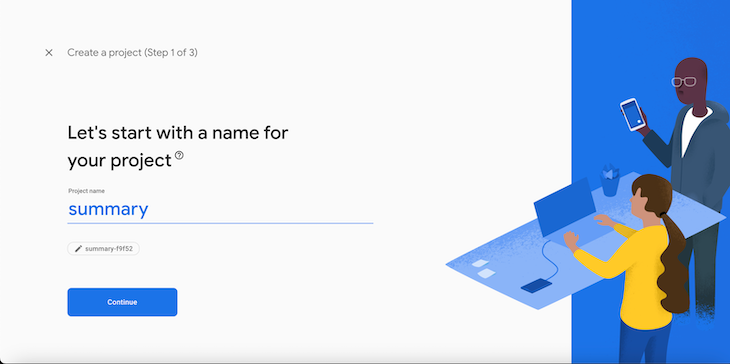

First, you need a Firebase project. Go to the Firebase console and create a new project or select an existing one. I will name my project “summary”:

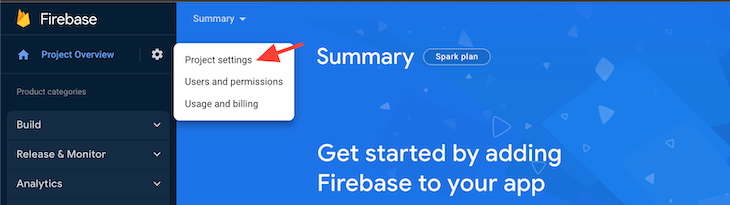

Next, click on the gear icon next to Project Overview and select Project settings:

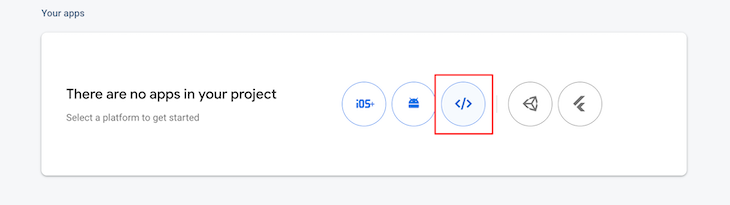

Then, you want to register a web app. To do so, scroll down to the Your apps section and click on the web icon:

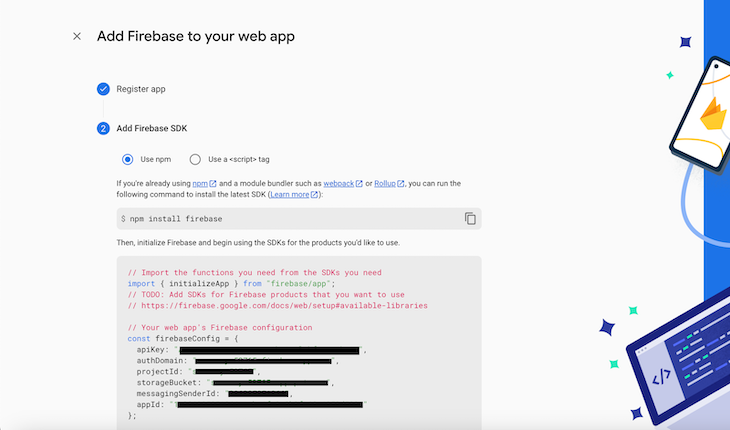

Give your app an App Nickname and register the app. You will then see your Firebase SDK config. Copy the Firebase configuration object that appears on the screen and save it for later use. Then, click continue to console:

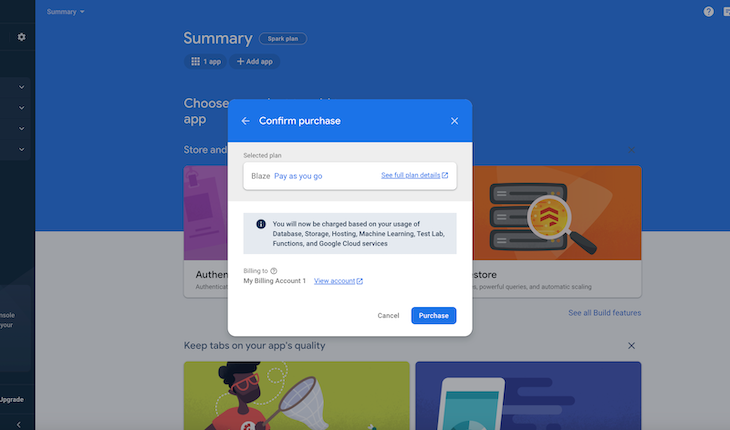
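
The configuration object you copied contains a fixed set of keys, and a missing value is an easy mistake to make when pasting it into your code. Below is a minimal sketch of a check you could run before initializing Firebase. `findMissingConfigKeys` is a hypothetical helper, and the values shown are placeholders, not real credentials.

```javascript
// Keys that appear in the web app config shown in the Firebase console.
const EXPECTED_KEYS = [
  'apiKey',
  'authDomain',
  'projectId',
  'storageBucket',
  'messagingSenderId',
  'appId',
];

// Hypothetical helper: returns the expected keys that are missing or empty.
function findMissingConfigKeys(config) {
  return EXPECTED_KEYS.filter(
    (key) => typeof config[key] !== 'string' || config[key].trim() === ''
  );
}

// Placeholder values only; paste your own config from the console.
const firebaseConfig = {
  apiKey: 'YOUR_API_KEY',
  authDomain: 'your-project.firebaseapp.com',
  projectId: 'your-project',
  storageBucket: 'your-project.appspot.com',
  messagingSenderId: '',
  appId: 'YOUR_APP_ID',
};

console.log(findMissingConfigKeys(firebaseConfig));
// prints [ 'messagingSenderId' ]
```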

To use extensions in Firebase, you need to upgrade the project from the Spark plan to the Blaze plan. To do this, navigate to the bottom of the dashboard and click Upgrade. Select your plan of choice and your billing account. You can skip the Set a billing budget step. Finally, confirm your purchase:

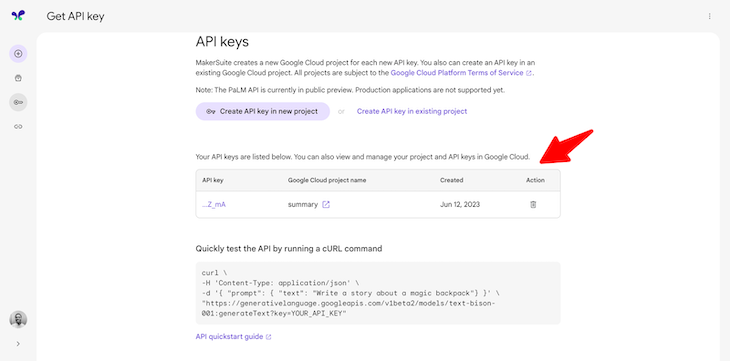

After joining the waitlist, you will receive an email to get access to the PaLM API and MakerSuite. Assuming you now have access to PaLM API, you need to create your API key:

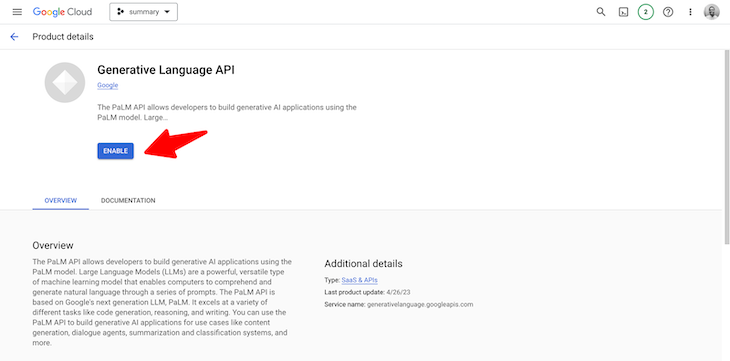

Once you have access, enable the Generative Language API in your Google Cloud Project before installing the extension:

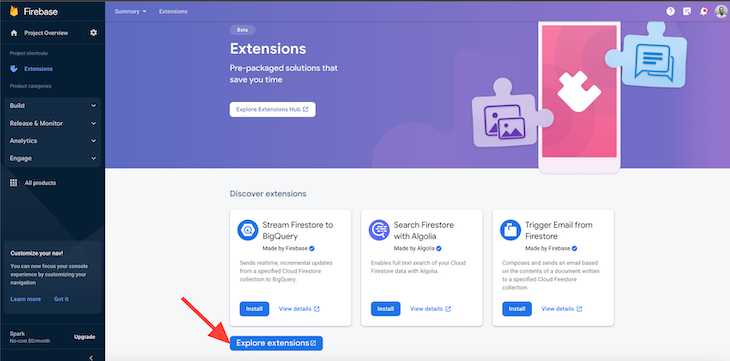

Then, navigate to Cloud Firestore and create a new database using “docs” as the collection name. Finally, we’ll install the extension. In the left sidebar, navigate to Extensions and click on Explore extensions:

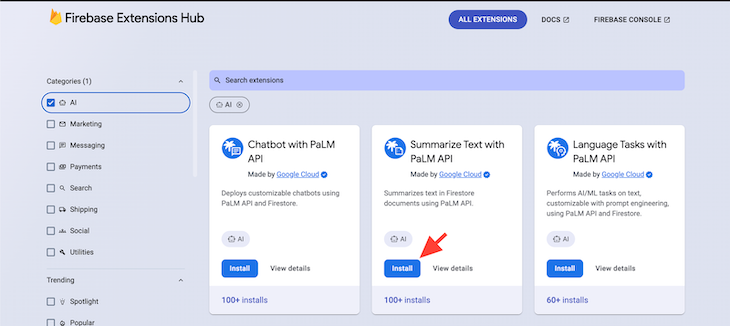

Then, filter extensions by AI, and click Install:

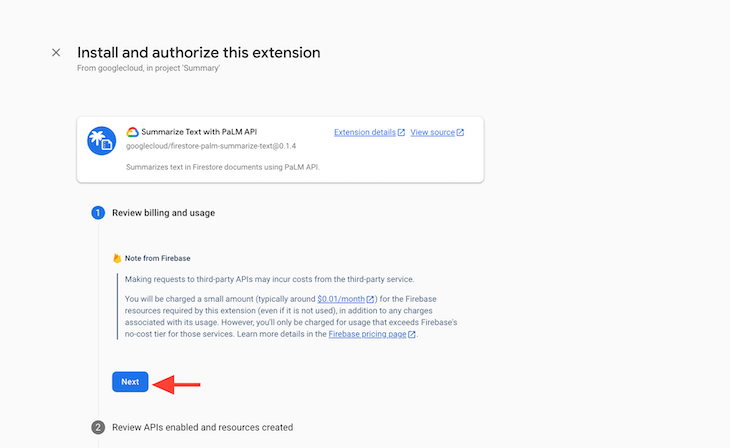

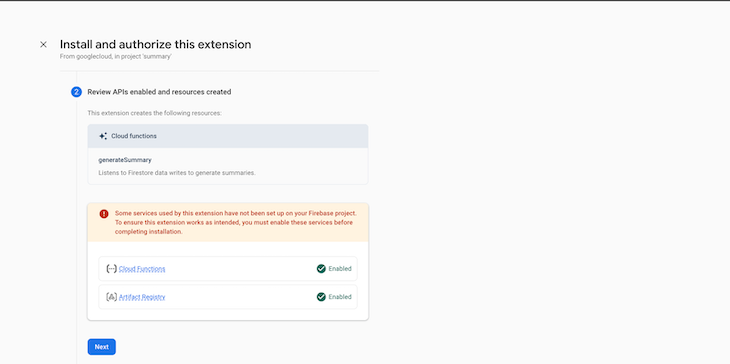

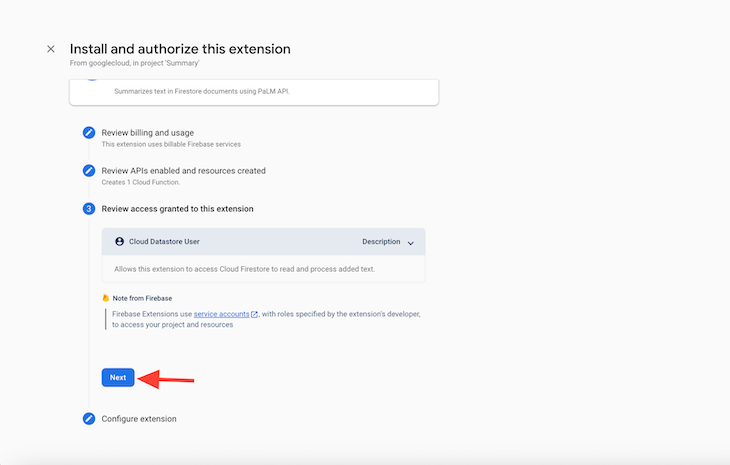

Next, select your project. You will be navigated to the page below:

For option 2, click Enable to enable our Cloud functions:

Then, add a Collection Name. This is the path to the Firestore collection that will store the text documents to summarize. For this, I will be using docs. Next, add a Text field. This is the name of the document field that contains the text to summarize. I will be using text.

Then, add a Response field. This is the name of the field in the document to store the summary. For this, I will be using summary.

Finally, add Target summary length. This is the desired number of summary sentences. For this, I will be using the default, which is 10.

Select your preferred Cloud Functions Location, and then click Install extension:

Finally, just wait for the installation to complete!

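Next, create the React app with Vite. When prompted, name the project text-summarizer and select React with JavaScript:

npm create vite@latest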

Wait for the app to be created and then change into its directory:

cd text-summarizer

Run this command to install Firebase as a dependency:

npm install firebase

Open the file src/App.jsx and replace its contents with this code:

    import { useEffect, useState } from 'react';
    import { initializeApp } from 'firebase/app';
    import {
      getFirestore,
      collection,
      addDoc,
      onSnapshot,
      query,
      orderBy,
      limit,
    } from 'firebase/firestore';

    const firebaseConfig = {
      // your configs
    };

    // Initialize Firebase and get a reference to the docs collection
    const app = initializeApp(firebaseConfig);
    const db = getFirestore(app);
    const docRef = collection(db, 'docs');

    function App() {
      const [text, setText] = useState('');
      const [summary, setSummary] = useState('');

      // Listen to the most recently added document and show its summary
      useEffect(() => {
        const unsubscribe = onSnapshot(
          query(docRef, orderBy('createdAt', 'desc'), limit(1)),
          (snapshot) => {
            snapshot.forEach((doc) => {
              const data = doc.data();
              // The summary field is missing until the extension writes it
              setSummary(data.summary ?? '');
            });
          }
        );
        return () => unsubscribe();
      }, []);

      const handleInputChange = (e) => {
        setText(e.target.value);
      };

      // Store the text in Firestore; the extension reacts to the new document
      const handleSubmit = async (e) => {
        e.preventDefault();
        try {
          await addDoc(docRef, { text, createdAt: new Date() });
          console.log('Text added successfully');
        } catch (error) {
          console.error('Error adding text:', error);
        }
        setText('');
      };

      return (
        <div>
          <h2>Text Summarizer App</h2>
          <form onSubmit={handleSubmit}>
            <textarea
              value={text}
              onChange={handleInputChange}
              placeholder='Enter text'
            />
            <br />
            <br />
            <button
              style={{
                backgroundColor: 'white',
                color: 'black',
              }}
              type='submit'
            >
              Submit
            </button>
          </form>
          <br />
          <h3>Summary:</h3>
          <div className='summary' style={{ fontSize: '12px' }}>
            {summary}
          </div>
        </div>
      );
    }

    export default App;

In the code above, we first initialize Firebase using the firebaseConfig object. Then we set up a form that takes in some text and sends it to our Firestore database when the Submit button is clicked.

We also set up a useEffect Hook that queries the docs collection for the most recently added document and renders its summary field, which is generated by the Firebase extension, in a div in the component.
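
One detail worth noting is that the newest document has no summary field until the extension finishes, so the summary area is briefly empty after each submission. A small helper can turn that gap into a visible pending state. This is a sketch of one option; `summaryText` and its placeholder message are hypothetical and not part of the extension.

```javascript
// Hypothetical helper: maps a Firestore document's data to the text shown
// in the summary area.
function summaryText(data) {
  if (!data) {
    return '';
  }
  if (typeof data.summary === 'string' && data.summary.length > 0) {
    return data.summary;
  }
  // The document exists, but the extension has not written a summary yet.
  return 'Summarizing...';
}

console.log(summaryText({ text: 'A long article' }));
// prints "Summarizing..."
console.log(summaryText({ text: 'A long article', summary: 'Short version.' }));
// prints "Short version."
```

Inside the snapshot listener, the result of `summaryText(doc.data())` would be passed to the summary state setter instead of the raw field.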

Now, start the development server with npm run dev and test the app.

And that’s it! We’ve successfully built a text summary app using the Summarize Text with PaLM API Firebase AI Extension!

This article introduced generative AI and the recently launched Firebase AI extensions powered by the PaLM API.

We looked at four of the released extensions, and built a sample app to see how we could practically integrate one of them. You can take this a bit further and try integrating the other extensions into your applications.

I hope you found this article enjoyable and informative. Your feedback is greatly appreciated, so please share your thoughts in the comments below. Happy coding!
