Editor’s note: This article was last updated on 4 July 2022 to bring the information up-to-date with Node v18.

In this article, we are going to learn what memory leaks are, what causes them, and their implications in a Node.js application.

Although Node works well with many applications thanks to its scalability, it has some limitations with regard to heap size. To improve the efficiency of Node apps, it is important to understand why memory leaks occur and, even more so, how to debug them.

Understanding memory management reduces the likelihood of wasting your application’s resources, and because memory leaks are elusive and can affect performance in unexpected ways, it is critical to understand how Node manages memory.


Performance is critical to the adoption and usage of an application, which makes memory management an important facet of software development. For this reason, Node has some built-in memory management mechanisms related to object lifetimes.

For instance, Node dynamically allocates memory to objects when they are created and frees the space when these objects are not in use. Once the memory has been freed, it can be reused for other computations.

The allocation and deallocation of memory is predominantly handled by the garbage collector (GC). Garbage collection refers to the process of finding all the live values and returning memory used by dead values to the system so they can be recycled later on.

Node stores objects on the heap as they are created. This heap has a finite size, and how quickly it fills up again after each collection is a useful indicator of whether there could be a memory leak.

Every memory allocation brings you closer to a garbage collector pause. The GC identifies dead memory regions, that is, unreachable objects, by following the chain of pointers from live objects; whatever it cannot reach is reclaimed for reuse or released to the OS.

On a lower level, Node uses the V8 JavaScript engine. In its own words, “V8 is Google’s open-source, high-performance JavaScript and WebAssembly engine, written in C++.” V8 executes code and manages the memory required for its runtime execution.

The management is done by allocating and freeing memory as required by the program. And while the Node GC does a considerably good job at managing memory, leaks still occur for various reasons.

Memory leaks occur when objects that were expected to be short-lived stay attached to long-lived objects.

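A real-life example of how memory can leak is shown in this code snippet:

const express = require("express");
const app = express();

// Module-level map: it lives for as long as the process does
const requests = new Map();

app.get("/", (req, res) => {
  // Assumes a middleware has given each request a unique req.id
  requests.set(req.id, req);
  res.status(200).send("Hello World");
});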

The above example is likely to cause a memory leak because the variable requests, which holds a Map instance, lives at module scope and nothing ever removes entries from it. Thus, every time a request hits the server, another request object is added to the map and stays there.

Because the map remains reachable for the life of the process, every request stored in it remains reachable too, so the garbage collector can never free them. The application will eventually run out of memory and crash when the stored requests consume more memory than is available to it.
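One way to stop this particular leak is to remove each entry once its response is done. The following is a minimal sketch rather than the only fix: `trackRequest` is a hypothetical helper, and it assumes each request carries a unique `id` (Express does not set `req.id` by default). It relies on the `finish` and `close` events that Node's HTTP response objects emit, and it uses stand-in objects so it runs without a server.

```javascript
const { EventEmitter } = require("events");

// Module-level registry of requests that are still being processed.
const inFlight = new Map();

// Hypothetical helper: remember a request only while its response is open.
function trackRequest(registry, req, res) {
  registry.set(req.id, req);
  // "finish" fires when the response has been sent; "close" also covers
  // connections that were dropped early. Deleting twice is harmless.
  const forget = () => registry.delete(req.id);
  res.once("finish", forget);
  res.once("close", forget);
}

// Stand-in objects so the sketch runs on its own. In Express, the call to
// trackRequest would sit at the top of the route handler.
const fakeReq = { id: "req-1" };
const fakeRes = new EventEmitter();

trackRequest(inFlight, fakeReq, fakeRes);
console.log(inFlight.size); // 1 while the response is pending

fakeRes.emit("finish");
console.log(inFlight.size); // 0 once the response has finished
```

The map still lives for the life of the process, but its size now tracks the number of open requests instead of the number of requests ever received.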

Memory leaks can be problematic if they go unnoticed, especially in a production environment. When an application shows unexplained increases in CPU and memory usage, chances are there is a memory leak.

You probably can relate to this: memory usage grows to the point that an application becomes unresponsive. This happens when the memory is full and there is no space left for memory allocation, causing a server failure.

When this happens, most of us tend to restart the application, and voilà! All the performance issues are solved. However, this temporary solution does not get rid of the bug but rather overlooks it, which could trigger unexpected side effects, especially when the server is under heavy load.

In many cases, there is no clear understanding as to why a memory leak happened. In fact, such observations might be overlooked at their moment of occurrence, especially during development.

The assumption is that it will be fixed later once functionality has been achieved. These occurrences might not bug most people at that particular moment, and they tend to move on. Just keep in mind that memory leaks are not that obvious, and when the memory grows endlessly, it is good to debug the code to check for a correlation between memory usage and response time.

One such debugging strategy is to look at the necessary conditions in object lifetimes. Even though the performance of a program could be stable or seemingly optimal, there is a possibility that some aspects of it trigger memory leakage.

The version of code that runs correctly one day might leak memory in the future due to a change in load, a new integration, or a change in the environment in which the application is run.

In the context of memory management, garbage refers to all values that cannot be reached in memory, and as we mentioned earlier, garbage collection refers to the process of identifying live values and returning the memory used by dead values to the system.

This means that the garbage collector determines which objects should be deallocated by tracing which objects are reachable by a chain of references from certain “root” objects; the rest is considered garbage. The main aim of garbage collection is to reduce memory leaks in a program.
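The tracing idea can be illustrated with a toy model. This sketch is not how V8 is implemented; it only mimics a mark phase on a made-up object graph, represented as a plain `Map` from object names to the names they reference. The function names are hypothetical. Note that the cycle between `c` and `d` is classed as garbage because no root leads to it.

```javascript
// Toy object graph: each key is an object, each value lists the objects
// it references. All names here are made up for illustration.
const graph = new Map([
  ["global", ["a"]],
  ["a", ["b"]],
  ["b", []],
  ["c", ["d"]], // c and d reference each other, but nothing reaches them
  ["d", ["c"]],
]);

// Mark phase: walk outward from the roots and record everything reachable.
function markReachable(objectGraph, roots) {
  const marked = new Set();
  const stack = [...roots];
  while (stack.length > 0) {
    const name = stack.pop();
    if (marked.has(name)) continue;
    marked.add(name);
    for (const ref of objectGraph.get(name) ?? []) stack.push(ref);
  }
  return marked;
}

// Anything that was not marked is garbage and could be reclaimed.
function findGarbage(objectGraph, roots) {
  const marked = markReachable(objectGraph, roots);
  return [...objectGraph.keys()].filter((name) => !marked.has(name));
}

console.log(findGarbage(graph, ["global"])); // [ 'c', 'd' ]
```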

But garbage collection does not solve memory leakage entirely because garbage collection only collects what it knows not to be in use. Objects that are reachable from the roots are not considered garbage.

GC is the most convenient method for handling memory leaks, although one of the downsides is that it consumes additional resources in the process of deciding which space to free. This would thereby slow down processes, affecting the app’s performance.

Memory leaks are not only elusive, but also hard to identify and debug, especially when working with APIs. In this section, we are going to learn how to catch memory leaks using the tools available.

We are also going to discuss suitable methods for debugging leaks in a production environment — methods that will not break the code. Memory leaks that you catch in development are easier to debug than those that make it to production.

If you suspect a memory leak in your application, a telltale sign is an uncapped increase in the app’s resident set size (RSS), which keeps rising without leveling off. As a result, the RSS becomes too high for the application to handle the workload, which could cause it to crash without an “out of memory” warning.
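What "rising without leveling off" looks like can be made concrete with a rough heuristic. This is a sketch under assumptions, not a standard test: `isSteadilyGrowing` is a hypothetical helper, the 10% threshold is arbitrary, and the sample values are invented. It takes RSS readings collected at regular intervals (for example from `process.memoryUsage().rss`) and compares the older half of the series with the newer half.

```javascript
// Average of a non-empty array of numbers.
function mean(values) {
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Hypothetical heuristic: flag a series of RSS samples (in bytes) when the
// newer half averages noticeably higher than the older half AND the last
// sample is the highest seen, i.e. there is no sign of leveling off.
function isSteadilyGrowing(samples, minGrowthRatio = 1.1) {
  if (samples.length < 4) return false; // too little data to judge
  const middle = Math.floor(samples.length / 2);
  const older = mean(samples.slice(0, middle));
  const newer = mean(samples.slice(middle));
  const last = samples[samples.length - 1];
  return newer >= older * minGrowthRatio && last >= Math.max(...samples);
}

const MB = 1024 * 1024;
const leaking = [50, 58, 66, 75, 83, 92].map((n) => n * MB); // invented data
const healthy = [50, 62, 55, 61, 54, 60].map((n) => n * MB); // invented data

console.log(isSteadilyGrowing(leaking)); // true
console.log(isSteadilyGrowing(healthy)); // false
```

A check like this only raises suspicion; a heap snapshot is still needed to find out which objects are being retained.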

These are clear indicators that a program could have a memory leak. To manage and/or debug such occurrences, there are some tools that could be used to make the endeavor more fruitful.

The node-heapdump module is good for post-mortem debugging. It writes a heap dump when the process receives a SIGUSR2 signal. To help catch bugs easily in a development environment, add node-heapdump as a dependency to your project like so:

npm install heapdump --save

Then, add it in your root file:

const heapdump = require("heapdump");

You are now set to use node-heapdump to take some heap snapshots. You can call the function:

heapdump.writeSnapshot(function (err, filename) {
  if (err) return console.error("Heap dump failed:", err);
  console.log("Sample dump written to", filename);
});

Once you have the snapshots saved to file, you can compare them and get a hint of what is causing a memory leak in your application.

v8.writeHeapSnapshot method

Instead of using a third-party package for capturing heap snapshots as we did in the previous subsection, you can also use the built-in v8 module.

The writeHeapSnapshot method of the v8 module writes the V8 heap to a JSON file, which you can use with Chrome DevTools. According to the Node documentation, the JSON schema generated by the writeHeapSnapshot method is undocumented and specific to the V8 engine, and may vary from one version of V8 to another:

require("v8").writeHeapSnapshot();

The v8 module is not globally available. Therefore, import it before invoking writeHeapSnapshot as in the above example. It is available in Node v11.13.0 and later versions. For earlier versions of Node, you need to use the node-heapdump package described in the previous section.
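In practice it is often more useful to write a snapshot on demand than at startup. The sketch below is one way to do that with the built-in module. The `dumpHeap` helper, the file naming, and the choice of `SIGUSR2` (mirroring node-heapdump's trigger) are assumptions for illustration, and this kind of signal handling applies to POSIX systems.

```javascript
const v8 = require("v8");
const os = require("os");
const path = require("path");

// Hypothetical helper: write a snapshot into `dir` and return its path.
// v8.writeHeapSnapshot is synchronous and blocks the event loop while it
// runs, so call it sparingly.
function dumpHeap(dir = os.tmpdir()) {
  const name = `heap-${process.pid}-${Date.now()}.heapsnapshot`;
  return v8.writeHeapSnapshot(path.join(dir, name));
}

// Trigger a dump from another terminal with: kill -USR2 <pid>
process.on("SIGUSR2", () => {
  const file = dumpHeap();
  console.log("Heap snapshot written to", file);
});
```

Taking one snapshot before and one after a period of load gives you two files to compare in Chrome DevTools.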

Heap snapshots are an effective way to debug leaks in a production environment. They allow developers to record the heap and analyze them later.

However, note that this approach has potential downsides in production, because it could trigger a latency spike. Taking heap snapshots can be expensive: writing one is synchronous and blocks the event loop, and V8 runs a full garbage collection as part of each snapshot. There is even a possibility of crashing your application if the process runs out of memory while the snapshot is being built.

Clinic.js is a handy toolset to diagnose and pinpoint performance bottlenecks in your Node applications. It is an open-source tool developed by NearForm.

To use it, you need to install it from npm. The specific tool for diagnosing memory leaks is the Clinic.js HeapProfiler:

npm install -g clinic
clinic heapprofiler --help

The Clinic.js HeapProfiler uses flame graphs to highlight memory allocations. You can use it with tools such as AutoCannon to simulate HTTP load when profiling. It will compile the results into an HTML file you can view in the browser, and you can interpret the flame graph to pinpoint the leaky functionality in your Node application.

process.memoryUsage method

The process.memoryUsage method provides a simple way of monitoring memory usage in your Node applications.

The method returns an object with the following properties:

- rss, or resident set size, is the amount of space the process occupies in main memory, which includes the code segment, heap, and stack. If your RSS keeps going up, there is a likelihood your application is leaking memory
- heapTotal, the total amount of memory allocated for the V8 heap, which is where JavaScript objects, strings, and closures are stored
- heapUsed, the amount of that heap currently occupied by JavaScript objects
- external, the amount of memory used by C++ objects bound to JavaScript objects managed by V8, that is, off-heap data
- arrayBuffers, the amount of memory allocated for ArrayBuffers and SharedArrayBuffers, including Node Buffers (the external memory size also includes this value)

According to the documentation, the value of the arrayBuffers property may be zero when you use Node as an embedded library because allocations for ArrayBuffers may not be tracked.

For instance, this code:

console.log(process.memoryUsage());

Will return something like this:

{
  rss: 4935680,
  heapTotal: 1826816,
  heapUsed: 650472,
  external: 49879,
  arrayBuffers: 17310,
}

This shows you how much memory is being consumed by your application. It only reports totals, though, so it can tell you that memory is growing but not which objects are responsible. Treat it as a monitoring signal rather than a diagnosis.
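The raw values are in bytes, which are awkward to read at a glance. Here is a small sketch of a helper that converts them to megabytes and, optionally, logs them at an interval. `memoryInMB` and `startMemoryLogger` are hypothetical names, and the 30-second default is arbitrary.

```javascript
// Convert every field of process.memoryUsage() from bytes to megabytes,
// rounded to two decimals.
function memoryInMB(usage = process.memoryUsage()) {
  const result = {};
  for (const [key, bytes] of Object.entries(usage)) {
    result[key] = Math.round((bytes / 1024 / 1024) * 100) / 100;
  }
  return result;
}

// Log memory usage at a fixed interval. unref() keeps this timer from
// holding the process open when it is the only thing left running.
function startMemoryLogger(intervalMs = 30000) {
  const timer = setInterval(() => console.log(memoryInMB()), intervalMs);
  timer.unref();
  return timer; // pass to clearInterval() to stop logging
}

console.log(memoryInMB());
```

Logged over time, these numbers make it easier to see whether rss and heapUsed level off after startup or keep climbing.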

Node Inspector is a debugger interface for Node applications. Run Node with the --inspect flag to use it, and it starts listening for a debugging client. It is one of the simplest ways of capturing heap snapshots with Chrome DevTools.

The section below explains how you can use Node Inspector with Chrome DevTools.

Chrome DevTools can be really helpful in catching and debugging memory leaks. To open the dev tools, open Chrome, click the hamburger icon, select More tools, then click Developer Tools.

Chrome offers a range of tools to help debug your memory and performance issues, including allocation timelines, sampling heap profiler, and heap snapshots, just to name a few.

To set up Chrome DevTools to debug a Node application, you’ll need:

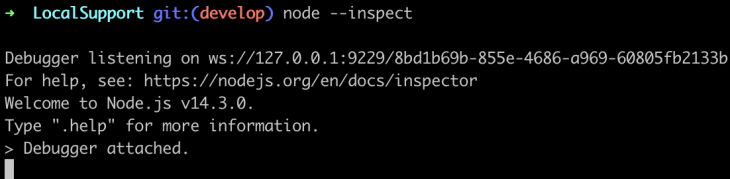

Open your Node project on your terminal and type node --inspect to enable node-inspector.

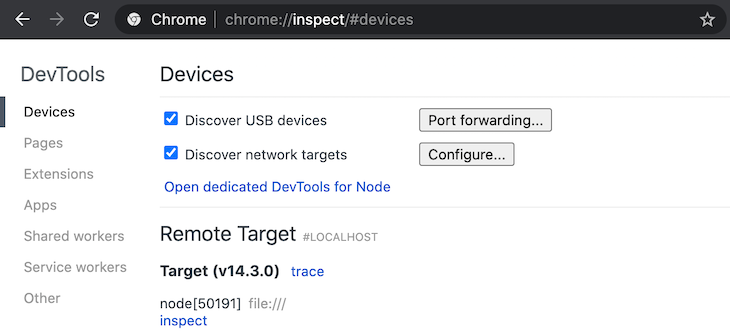

In your browser, type about:inspect. This should open a window like the one below:

Finally, click on Open dedicated DevTools for Node to start debugging your code.

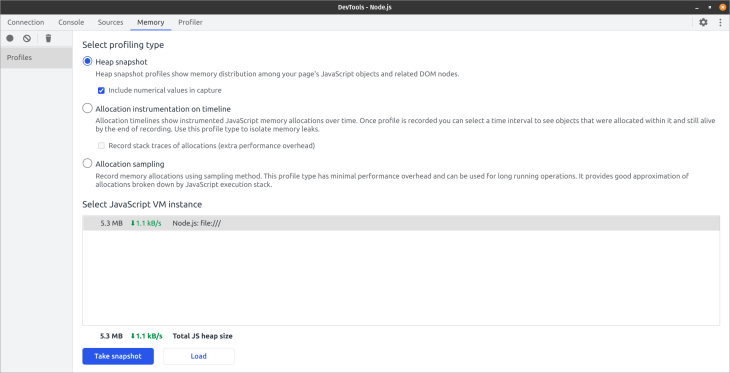

At the bottom of the dedicated DevTools window, there is a button for taking a heap snapshot profile of a running application and loading a heap snapshot file.

In a development environment, you can use the Take snapshot button to take a heap snapshot profile of your running application. The heap snapshot option is checked by default. You can also load heap snapshots from a file.

We all care about performance and keeping our pages fast, making sure that we are using just the minimum amount of memory necessary. Memory profiling can be fun, but at times, it feels like a black box.

It’s hard to avoid memory leaks because you can’t really understand how your objects will be used over time. However, there are ways to mitigate memory leaks in a Node application. Below are common pitfalls that developers fall into when writing applications.

References from JavaScript objects to DOM objects are fine until the DOM object links back to the JavaScript object, forming a reference cycle. This becomes problematic, especially in long-running apps, because memory is not released from the cycled objects, thereby causing a memory leak. To ensure there is no direct reference from DOM elements to the real event handler, you should route event handlers indirectly through an array.

Circular referencing means that an object references itself, directly or through other objects, creating a loop. If the loop stays reachable, every object in it is bound to live as long as the loop does, which could eventually lead to a memory leak.

Here’s an example of an object referencing itself:

var obj = {};

obj.a = obj;

In the example above, obj is an empty object, and a is a property that back-references to the same object.

When this happens, the object references itself, hence a circular loop. On its own this is harmless: V8’s collector traces reachability from the roots, so a cycle that nothing else points to is still reclaimed. It becomes problematic when some object in the loop remains reachable, for example from a global variable, because everything in the loop then stays alive for as long as that variable exists.

The fix is to get rid of references you no longer need, for instance by setting them to null or removing them from long-lived collections.

Binding event listeners to many elements makes an application slower. Therefore, consider using event delegation if you need to listen for the same event on many DOM elements similarly in the browser environment. You can bind the event handler to a parent element and then use the event.target property in the handler to locate where the event happened and take appropriate action.
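A sketch of that pattern follows. The handler is written as a plain function so it can be exercised without a browser; in a page you would register it once on the parent element. The `data-action` attribute, the action names, and the `#items` selector are assumptions made for this example.

```javascript
// Build one handler that serves every child of a parent element. It looks
// at event.target to find out which child was clicked and dispatches on
// that child's data-action attribute.
function createDelegatedHandler(actions) {
  return function handle(event) {
    const action = event.target?.dataset?.action;
    const known = Object.prototype.hasOwnProperty.call(actions, action);
    if (!known) return false; // not an element we care about: ignore
    actions[action](event.target);
    return true;
  };
}

const log = [];
const onListClick = createDelegatedHandler({
  remove: (el) => log.push(`remove ${el.dataset.id}`),
  edit: (el) => log.push(`edit ${el.dataset.id}`),
});

// In the browser (one listener instead of one per item):
//   document.querySelector("#items").addEventListener("click", onListClick);

// Simulated click on a child element, so the sketch also runs in Node.
onListClick({ target: { dataset: { action: "remove", id: "42" } } });
console.log(log); // [ 'remove 42' ]
```

Because only one listener exists, adding or removing child elements does not require attaching or detaching handlers, which also removes one common source of lingering references.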

However, as usual, the above performance optimization advice has a caveat. It is best practice to run performance tests to ensure that applying the above optimizations results in performance gains for your specific use case.

The cache stores data for faster and easier retrieval when it’s needed later. When computations are slow, caching can be a good way to improve performance. The memory-cache module is a good tool for in-memory caching in your Node applications.
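One caveat is worth spelling out: a cache that only ever grows behaves exactly like a leak. The following is a minimal sketch of a size-capped, least-recently-used cache built on `Map`, which iterates in insertion order. The `BoundedCache` class and its limit are illustrative and are not part of the memory-cache module.

```javascript
// Least-recently-used cache with a hard cap on the number of entries.
// Map iterates in insertion order, so the first key is always the oldest.
class BoundedCache {
  constructor(maxEntries = 100) {
    this.maxEntries = maxEntries;
    this.entries = new Map();
  }

  get(key) {
    if (!this.entries.has(key)) return undefined;
    // Re-insert on read so this key becomes the most recently used.
    const value = this.entries.get(key);
    this.entries.delete(key);
    this.entries.set(key, value);
    return value;
  }

  set(key, value) {
    this.entries.delete(key); // refresh position if the key already exists
    this.entries.set(key, value);
    if (this.entries.size > this.maxEntries) {
      // Evict the least recently used entry.
      const oldestKey = this.entries.keys().next().value;
      this.entries.delete(oldestKey);
    }
  }

  get size() {
    return this.entries.size;
  }
}

const cache = new BoundedCache(2);
cache.set("a", 1);
cache.set("b", 2);
cache.get("a");    // "a" is now the most recently used
cache.set("c", 3); // evicts "b"
console.log(cache.get("b"), cache.size); // undefined 2
```

Whatever caching tool you use, giving entries a size limit or an expiry time keeps the cache's memory footprint predictable.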

It’s hard to avoid memory leaks, because many programs increase their memory footprint as you run them. The key insight is understanding the expected object’s lifetime and learning how to use the tools available to effectively mitigate memory leaks.

