Understanding how JavaScript works is key to writing efficient JavaScript.

Forget about insignificant millisecond improvements: misusing object properties can lead to a 7x slowdown of a simple one-liner.

Given JavaScript’s ubiquity across all levels of the software stack (à la MEAN or its replacements), such slowdowns can plague any, if not all, levels of your infrastructure, not just your website’s menu animation.

There are a number of ways to write more efficient JavaScript, but in this article we’ll focus on JavaScript optimization methods that are compiler-friendly, meaning the source code makes compiler optimizations easy and effective.

We’ll narrow our discussion to V8, the JavaScript engine that powers Electron, Node.js, and Google Chrome. To understand compiler-friendly optimizations, we first need to discuss how JavaScript is compiled.

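JavaScript execution in V8 is divided into three stages: parsing the source code into an abstract syntax tree (AST), compiling the AST to bytecode with the Ignition interpreter, and compiling bytecode to machine code with the TurboFan compiler.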

The first stage is beyond the scope of this article, but the second and third stages have direct implications on writing optimized Javascript.

We will discuss these optimization methods and how your code can leverage (or misuse) those optimizations. By understanding the basics of JavaScript execution, you will not only understand these performance recommendations but also learn how to uncover some of your own.

In reality, the second and third stages are closely coupled. These two stages operate within the just-in-time (JIT) paradigm. To understand the significance of JIT, we will examine prior methods of translating source code to machine code.

To execute any program, the computer must translate source code to a machine language that the machine can run.

There are two methods to accomplish this translation.

The first option involves the use of an interpreter. The interpreter effectively translates and executes line-by-line.

The second method is to use a compiler. The compiler translates all source code into machine language at once, before executing. Each method has its place, given the pros and cons described below.

Interpreters operate using a read-eval-print loop (REPL) — this method features a number of favorable properties:

However, these benefits come at the cost of slow execution due to (1) the overhead of eval, as opposed to running machine code, and (2) the inability to optimize across parts of the program.

More formally, the interpreter cannot recognize duplicate effort when processing different code segments. If you run the same line of code 100 times through an interpreter, the interpreter will translate and execute that same line of code 100 times — needlessly re-translating 99 times.

In sum, interpreters are simple and quick to start but slow to execute.

By contrast, compilers translate all source code at once before execution.

With increased complexity, compilers can make global optimizations (e.g., share machine code for repeated lines of code). This affords compilers their only advantage over interpreters — faster execution time.

Essentially, compilers are complex and slow to start but fast to execute.
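To make the re-translation cost concrete, here is a minimal sketch rather than a real interpreter or compiler. It assumes a hypothetical one-instruction language (`add N`) and counts how often a line is translated when it runs 100 times. All names (`translate`, `runInterpreted`, `runTranslatedOnce`) are illustrative.

```javascript
// Toy model: "translating" a line turns its text into a callable.
// translateCount records how often that work is performed.
let translateCount = 0;

function translate(line) {
  translateCount += 1;
  // Hypothetical mini-language: every line has the form "add N".
  const amount = Number(line.split(" ")[1]);
  return (total) => total + amount;
}

// Interpreter-style execution: translate the line on every run.
function runInterpreted(line, times) {
  let total = 0;
  for (let i = 0; i < times; i++) {
    total = translate(line)(total);
  }
  return total;
}

// Compiler-style execution: translate once up front, then reuse the result.
function runTranslatedOnce(line, times) {
  const operation = translate(line);
  let total = 0;
  for (let i = 0; i < times; i++) {
    total = operation(total);
  }
  return total;
}

translateCount = 0;
const interpretedResult = runInterpreted("add 2", 100);
const interpretedTranslations = translateCount;

translateCount = 0;
const onceResult = runTranslatedOnce("add 2", 100);
const onceTranslations = translateCount;

console.log(interpretedResult, interpretedTranslations); // 200 100
console.log(onceResult, onceTranslations); // 200 1
```

Both variants compute the same result; only the amount of translation work differs.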

A just-in-time compiler attempts to combine the best parts of both interpreters and compilers, making both translation and execution fast.

The basic idea is to avoid retranslation where possible. To start, a profiler simply runs the code through an interpreter. During execution, the profiler keeps track of warm code segments, which run a few times, and hot code segments, which run many, many times.

JIT sends warm code segments off to a baseline compiler, reusing the compiled code where possible.

JIT also sends hot code segments to an optimizing compiler. This compiler uses information gathered by the interpreter to (a) make assumptions and (b) make optimizations based on those assumptions (e.g., object properties always appear in a particular order).

However, if those assumptions are invalidated, the optimizing compiler performs deoptimization, meaning it discards the optimized code.
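The tiering described above can be sketched with a simple call counter. This is a toy model under stated assumptions, not V8's actual heuristics: the thresholds `WARM_THRESHOLD` and `HOT_THRESHOLD`, the `ToyProfiler` class, and the tier names are all hypothetical.

```javascript
// Hypothetical thresholds; real engines use far more elaborate heuristics.
const WARM_THRESHOLD = 3;
const HOT_THRESHOLD = 10;

class ToyProfiler {
  constructor() {
    // Profiler data: number of executions per named code segment.
    this.callCounts = new Map();
  }

  // Record one execution of a segment and return the tier it would run in.
  tierAfterCall(name) {
    const count = (this.callCounts.get(name) || 0) + 1;
    this.callCounts.set(name, count);
    if (count >= HOT_THRESHOLD) return "optimized";
    if (count >= WARM_THRESHOLD) return "baseline";
    return "interpreted";
  }

  // Simplified deoptimization: an invalidated assumption discards the
  // optimized code, so the segment starts over in the interpreter.
  deoptimize(name) {
    this.callCounts.set(name, 0);
  }
}

const profiler = new ToyProfiler();
const tierHistory = [];
for (let i = 0; i < 10; i++) {
  tierHistory.push(profiler.tierAfterCall("square"));
}

profiler.deoptimize("square");
const tierAfterDeopt = profiler.tierAfterCall("square");

console.log(tierHistory[0], tierHistory[2], tierHistory[9]); // interpreted baseline optimized
console.log(tierAfterDeopt); // interpreted
```

In this sketch a deoptimized segment has to climb through the tiers again, which hints at why repeated optimization and deoptimization cycles are costly.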

Optimization and deoptimization cycles are expensive and give rise to a class of JavaScript optimization methods described in detail below.

JIT also introduces memory overhead associated with storing optimized machine code and the profiler’s execution information. Although optimized JavaScript cannot reduce this cost, it motivates Ignition, the V8 interpreter.

V8’s Ignition and TurboFan perform the following functions:

The JIT compiler incurs memory overhead. Ignition addresses this by achieving three objectives: reducing memory usage, reducing startup time, and reducing complexity.

All three objectives are accomplished by compiling AST to bytecode and collecting feedback during program execution.

Both the AST and bytecode are exposed to the TurboFan optimizing compiler.

With its release in 2008, the V8 engine initially compiled source code directly to machine code, skipping intermediate bytecode representation. At release, V8 was 10x faster than the competition, per a Google London keynote (McIlroy, Oct ’16).

Today, however, TurboFan accepts Ignition’s intermediate bytecode, and V8 is 10x faster than it was in 2008. The same keynote presents past iterations of a V8 compiler and their downfalls:

Per a separate Google Munich technical talk (Titzer, May ’16), TurboFan optimizes for peak performance, static type information usage, separation of compiler frontend, middle, and backend, and testability. This culminates in a key contribution, called a sea (or soup) of nodes.

With the sea of nodes, nodes represent computation and edges represent dependencies.

Unlike a Control Flow Graph (CFG), the sea of nodes relaxes evaluation order for most operations. Like a CFG, control edges and effect edges for stateful operations constrain execution order where needed.

Titzer further refines this definition to be a soup of nodes, where control flow subgraphs are further relaxed. This provides a number of advantages — for example, this avoids redundant code elimination.

Graph reductions are applied to this soup of nodes, with either bottom-up or top-down graph transformations.
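As a rough illustration of "nodes represent computation and edges represent dependencies," here is a toy dependency graph. It is a sketch under simplifying assumptions, not TurboFan's actual intermediate representation: it models only value dependencies, with no control or effect edges, and the `graph` layout and `evaluate` helper are hypothetical.

```javascript
// Each node is a computation; `inputs` lists the nodes it depends on.
// The declaration order of nodes carries no meaning: only the edges do.
const graph = {
  product: { op: "mul", inputs: ["sum", "b"] },
  sum: { op: "add", inputs: ["a", "b"] },
  a: { op: "const", value: 2, inputs: [] },
  b: { op: "const", value: 3, inputs: [] },
};

// Evaluate a node by first evaluating its dependencies.
// The cache ensures a shared node (here `b`) is computed only once.
function evaluate(nodes, name, cache = new Map()) {
  if (cache.has(name)) return cache.get(name);
  const node = nodes[name];
  const args = node.inputs.map((input) => evaluate(nodes, input, cache));
  let result;
  switch (node.op) {
    case "const":
      result = node.value;
      break;
    case "add":
      result = args[0] + args[1];
      break;
    case "mul":
      result = args[0] * args[1];
      break;
    default:
      throw new Error(`Unknown op: ${node.op}`);
  }
  cache.set(name, result);
  return result;
}

const productValue = evaluate(graph, "product");
console.log(productValue); // 15, i.e. (2 + 3) * 3
```

Because evaluation order is derived from the edges alone, any schedule that respects the dependencies produces the same value.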

The TurboFan pipeline follows 4 steps to translate bytecode into machine code. Note that optimizations in the pipeline below are performed based on feedback collected by Ignition:

TurboFan’s online, JIT-style compilations and optimizations conclude V8’s translation from source code to machine code.

TurboFan’s optimizations improve the net performance of JavaScript by mitigating the impact of bad JavaScript. Nevertheless, understanding these optimizations can provide further speedups.

Here are 7 tips for improving performance by leveraging optimizations in V8. The first four focus on reducing deoptimization.

Tip 1: Declare object properties in constructor

Changing object properties results in new hidden classes. Take the following example from Google I/O 2012.

    class Point {
      constructor(x, y) {
        this.x = x;
        this.y = y;
      }
    }

    var p1 = new Point(11, 22); // hidden class Point created
    var p2 = new Point(33, 44);
    p1.z = 55; // another hidden class Point created

As you can see, p1 and p2 now have different hidden classes. This foils TurboFan’s attempts to optimize: specifically, any method that accepts the Point object is now deoptimized.

All those functions are re-optimized with both hidden classes. This is true of any modification to the object shape.
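One way to follow this tip is to declare every property up front in the constructor. The sketch below assumes a hypothetical `Point3D` class with a default `z` of `0`, which is an illustrative choice and not part of the original example. Later assignments then update an existing property instead of adding a new one.

```javascript
// Hypothetical variant of Point that declares all properties up front.
class Point3D {
  constructor(x, y, z = 0) {
    this.x = x;
    this.y = y;
    this.z = z; // declared for every instance, even when unused
  }
}

const q1 = new Point3D(11, 22);
const q2 = new Point3D(33, 44);
q1.z = 55; // updates an existing property; no property is added

// Both instances still expose the same properties in the same order.
console.log(Object.keys(q1)); // [ 'x', 'y', 'z' ]
console.log(Object.keys(q2)); // [ 'x', 'y', 'z' ]
```

Because no property is added after construction, both objects keep the same set and order of properties, which is what lets the engine keep treating them as the same shape.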

Tip 2: Keep object property ordering constant

Changing order of object properties results in new hidden classes, as ordering is included in object shape.

    const a1 = { a: 1 }; // hidden class a1 created
    a1.b = 3;

    const a2 = { b: 3 }; // different hidden class a2 created
    a2.a = 1;

Above, a1 and a2 now have different hidden classes as well. Fixing the order allows the compiler to reuse the same hidden class, as the added fields (including the ordering) are used to generate the ID of the hidden class.
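A simple way to keep ordering constant is to create such objects in one place. The sketch below uses a hypothetical factory function, `makePair`, so that every object receives `a` and then `b`. `Object.keys` makes the insertion order observable, though it does not expose hidden classes directly.

```javascript
// Hypothetical factory: every object gets `a` first, then `b`.
function makePair(a, b) {
  return { a, b };
}

const ordered1 = makePair(1, 3);
const ordered2 = makePair(1, 3);

// For contrast: the same properties added in different orders.
const mixed1 = { a: 1 };
mixed1.b = 3;
const mixed2 = { b: 3 };
mixed2.a = 1;

console.log(Object.keys(ordered1), Object.keys(ordered2)); // [ 'a', 'b' ] [ 'a', 'b' ]
console.log(Object.keys(mixed1), Object.keys(mixed2)); // [ 'a', 'b' ] [ 'b', 'a' ]
```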

Tip 3: Fix function argument types

V8 specializes a function based on the value types it observes at each argument position. If the type at a position changes, the function is deoptimized and re-optimized.

After seeing four different object shapes, the function becomes megamorphic, so TurboFan does not attempt to optimize the function.

Take the example below.

    function add(x, y) {
      return x + y;
    }

    add(1, 2); // monomorphic
    add("a", "b"); // polymorphic
    add(true, false);
    add([], []);
    add({}, {}); // megamorphic

TurboFan will no longer optimize add after the final call, add({}, {}).
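One possible way to keep argument types fixed is to give each type its own function, so every function only ever sees one kind of input. The names `addNumbers` and `concatStrings` below are hypothetical, and the sketch only illustrates the calling discipline; it does not measure or prove what the engine does.

```javascript
// Each function is only ever called with one argument type.
function addNumbers(x, y) {
  return x + y;
}

function concatStrings(x, y) {
  return x + y;
}

// addNumbers sees only numbers...
let numberTotal = 0;
for (let i = 0; i < 1000; i++) {
  numberTotal = addNumbers(numberTotal, 1);
}

// ...and concatStrings sees only strings.
let joined = "";
for (const word of ["a", "b", "c"]) {
  joined = concatStrings(joined, word);
}

console.log(numberTotal); // 1000
console.log(joined); // abc
```

The two bodies are identical; the separation exists only so that each call site stays type-stable.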

Tip 4: Declare classes in script scope

Do not define classes in the function scope. Take the following example, illustrating this pathological case:

    function createPoint(x, y) {
      class Point {
        constructor(x, y) {
          this.x = x;
          this.y = y;
        }
      }

      return new Point(x, y);
    }

    function length(point) {
      // ...
    }

Every time the function createPoint is called, a new Point prototype is created.

Each new prototype corresponds to a new object shape, so the length function thus sees a new object shape with each new point.

As before, after seeing 4 different object shapes, the function becomes megamorphic and TurboFan does not attempt to optimize length.

By placing class Point in the script scope, we can avoid creating new object shapes every time createPoint is called.
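The corrected layout might look like the sketch below. The body of `pointLength` (standing in for `length`, whose body is elided in the original) is an assumption added for illustration, as is `createLocalPoint`, which reproduces the pathological case for comparison. Comparing prototypes makes the difference observable.

```javascript
// Class declared once, in script scope.
class Point {
  constructor(x, y) {
    this.x = x;
    this.y = y;
  }
}

function createPoint(x, y) {
  return new Point(x, y);
}

// Pathological variant for comparison: a new class per call.
function createLocalPoint(x, y) {
  class LocalPoint {
    constructor(x, y) {
      this.x = x;
      this.y = y;
    }
  }
  return new LocalPoint(x, y);
}

// Hypothetical body: Euclidean distance from the origin.
function pointLength(point) {
  return Math.sqrt(point.x * point.x + point.y * point.y);
}

const sharedPrototype =
  Object.getPrototypeOf(createPoint(1, 2)) ===
  Object.getPrototypeOf(createPoint(3, 4));
const localPrototypeShared =
  Object.getPrototypeOf(createLocalPoint(1, 2)) ===
  Object.getPrototypeOf(createLocalPoint(3, 4));

console.log(sharedPrototype); // true
console.log(localPrototypeShared); // false
console.log(pointLength(createPoint(3, 4))); // 5
```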

The next tip is a quirk in the V8 engine.

Tip 5: Use for ... in

This behavior was included in the original Crankshaft compiler and later ported to Ignition and TurboFan.

The for…in loop is 4-6x faster than functional iteration, functional iteration with arrow functions, and Object.keys in a for loop.
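For reference, the iteration styles being compared look roughly like this. The sketch shows only that the forms are interchangeable for a plain object; it makes no timing claims, and the `inventory` object and function names are hypothetical. Note that `for...in` also visits inherited enumerable properties, hence the own-property check.

```javascript
const inventory = { apples: 3, pears: 5, plums: 7 };

// for...in iteration over the object's keys.
function sumWithForIn(obj) {
  let total = 0;
  for (const key in obj) {
    // Skip enumerable properties inherited from the prototype chain.
    if (Object.prototype.hasOwnProperty.call(obj, key)) {
      total += obj[key];
    }
  }
  return total;
}

// Functional iteration with an arrow function.
function sumWithForEach(obj) {
  let total = 0;
  Object.keys(obj).forEach((key) => {
    total += obj[key];
  });
  return total;
}

// Object.keys in a classic for loop.
function sumWithKeysLoop(obj) {
  const keys = Object.keys(obj);
  let total = 0;
  for (let i = 0; i < keys.length; i++) {
    total += obj[keys[i]];
  }
  return total;
}

console.log(sumWithForIn(inventory)); // 15
console.log(sumWithForEach(inventory)); // 15
console.log(sumWithKeysLoop(inventory)); // 15
```

Since relative speed depends on the V8 version, it is worth benchmarking these forms on your own workload before committing to one.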

Below are 2 refutations of former myths that are no longer relevant, due to modern-day V8 changes.

Tip 6: Irrelevant characters do not affect performance

Crankshaft formerly used byte count of a function to determine whether or not to inline a function. However, TurboFan is built on top of the AST and determines function size using the number of AST nodes instead.

As a result, irrelevant characters such as whitespace, comments, variable name length, and function signature do not affect performance of a function.

Tip 7: Try/catch/finally is not ruinous

Try blocks were formerly prone to costly optimization-deoptimization cycles. However, TurboFan today no longer exhibits significant performance hits when calling a function from within a try block.

While optimizing your JavaScript is step one, monitoring overall performance of your app is key. If you’re interested in understanding performance issues in your production app, try LogRocket.  https://logrocket.com/signup/

https://logrocket.com/signup/

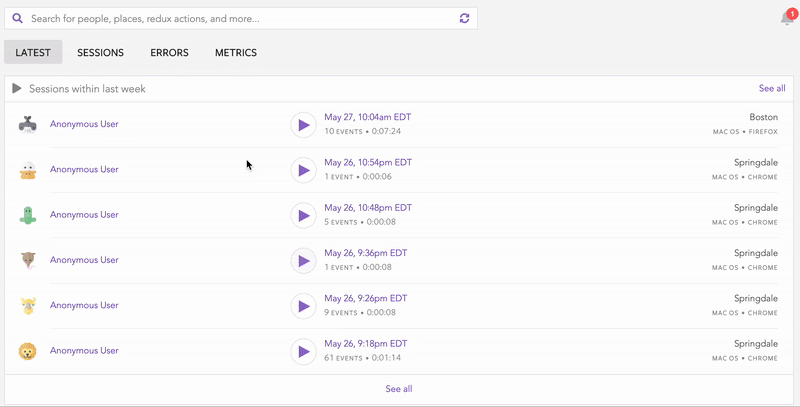

LogRocket is like a DVR for web apps, recording literally everything that happens on your site. Instead of guessing why problems happen, you can aggregate and report on performance issues to quickly understand the root cause.

LogRocket instruments your app to record requests/responses with headers + bodies along with contextual information about the user to get a full picture of an issue. It also records the HTML and CSS on the page, recreating pixel-perfect videos of even the most complex single-page apps.

Make performance a priority – Start monitoring for free.

In sum, optimization methods most often concentrate on reducing deoptimization and avoiding unoptimizable megamorphic functions.

With an understanding of the V8 engine framework, we can additionally deduce other optimization methods not listed above and reuse methods as much as possible to leverage inlining. You now have an understanding of JavaScript compilation and its impact on your day-to-day JavaScript usage.
