Claude 4 is Anthropic’s latest generation of advanced AI language models. It was designed to provide developers with a more powerful and reliable way to utilize AI in a safe environment. Existing models have been upgraded, and developers now have access to Claude Opus 4 and Claude Sonnet 4, which replace the previous third-generation models.

Claude Opus 4 is the more capable model and the better choice for complex tasks. It excels at work that needs deep understanding and analysis combined with multi-step problem-solving, so it tends to deliver better results on demanding projects.

On the other hand, Claude Sonnet 4 is better suited for everyday development use cases and provides a balance between performance and efficiency. It works well across the board and is good for chatbots, content creation, coding, and normal AI-related tasks.

Both models are built on Anthropic’s Constitutional AI framework, which means they have been trained to be helpful, harmless, and honest. As a result, they are well suited for production applications where reliability and safety are important. They also improve on previous generations through stronger reasoning, which results in better accuracy, better handling of long conversations, and improved cost-effectiveness.

This article will teach you everything you need to know to start building apps with Anthropic’s new Claude 4 API. We will explore account setup, environment configuration, core API concepts, and advanced features. At the end of this guide, you should have a basic understanding of how to work with the Claude 4 API and how to integrate it into your applications.

Developers have many AI models to choose from, but Claude 4 has several advantages that make it a favorite. The API offers reliable performance and high availability, the models follow prompts closely, and the documentation is clear and well organized.

Claude 4 is versatile enough to suit a wide range of applications and environments:

Code assistance: Claude 4 is a standout performer when writing, debugging, and optimizing code in different programming languages. The models excel at working through complex algorithms, suggesting code improvements, and generating complete functions from natural language descriptions.

Content generation: Claude’s creative capabilities make it a good choice for writing blog posts, marketing copy, technical documentation, and fiction. The models are designed to interpret brand, context, tone, and voice, which leads to high-quality output.

AI agents and assistants: Claude can keep track of context over a long conversation while following complex instructions. The models can work through multi-step tasks, integrate with external APIs, and maintain a consistent personality throughout.

Customer support: These models can be integrated into customer support systems to give intelligent, context-aware responses to customer questions. They are good at understanding questions and providing accurate answers while remaining professional.

Data analysis: Claude’s analytical capabilities can be used to process datasets and reports and to extract insights from them.

Before you start coding with Claude, you first need to create an Anthropic account if you don’t already have one. Then you will be able to access the Anthropic dashboard with your developer account.

The dashboard is the main hub for writing prompts, managing your API keys, accessing the documentation, and monitoring your data usage.

A minimum amount of $5 credits is needed before you can start using the API:

API keys are the main method for implementing authentication with Claude 4. Creating an API key is simple once you navigate to the Get API Key section. From there, you can generate a key and give it a descriptive name, which will help you manage all the keys across your environments and projects.

Good security measures should always be taken into account when you are working with an API. When working with Claude, it’s a good idea to always:

Claude uses a version header to distinguish between versions of the API, so behavior stays consistent as new features become available.

You specify the version in your requests with the anthropic-version header. Pinning a version helps ensure that your application keeps working as expected when new API versions are released.

It’s best practice to test new versions in a development environment before you go to production. You can learn more about this process in the Migrating to Claude 4 documentation.
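As a minimal sketch of where the version header fits in a raw HTTP request, the snippet below prepares (but does not send) a call to the Messages endpoint using only the standard library. The helper names `build_headers` and `build_request` are hypothetical, and the version string `2023-06-01` is an assumption you should confirm against the API versioning documentation before pinning it.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_headers(api_key: str, version: str = "2023-06-01") -> dict[str, str]:
    """Return the headers a raw Messages API request needs."""
    return {
        "x-api-key": api_key,          # authentication
        "anthropic-version": version,  # pins the API version (assumed value)
        "content-type": "application/json",
    }


def build_request(api_key: str, payload: dict) -> urllib.request.Request:
    """Prepare, without sending, a POST request to the Messages endpoint."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers=build_headers(api_key),
        method="POST",
    )
```

Keeping the version in one place like this makes it easy to try a newer version in a development environment by changing a single argument.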

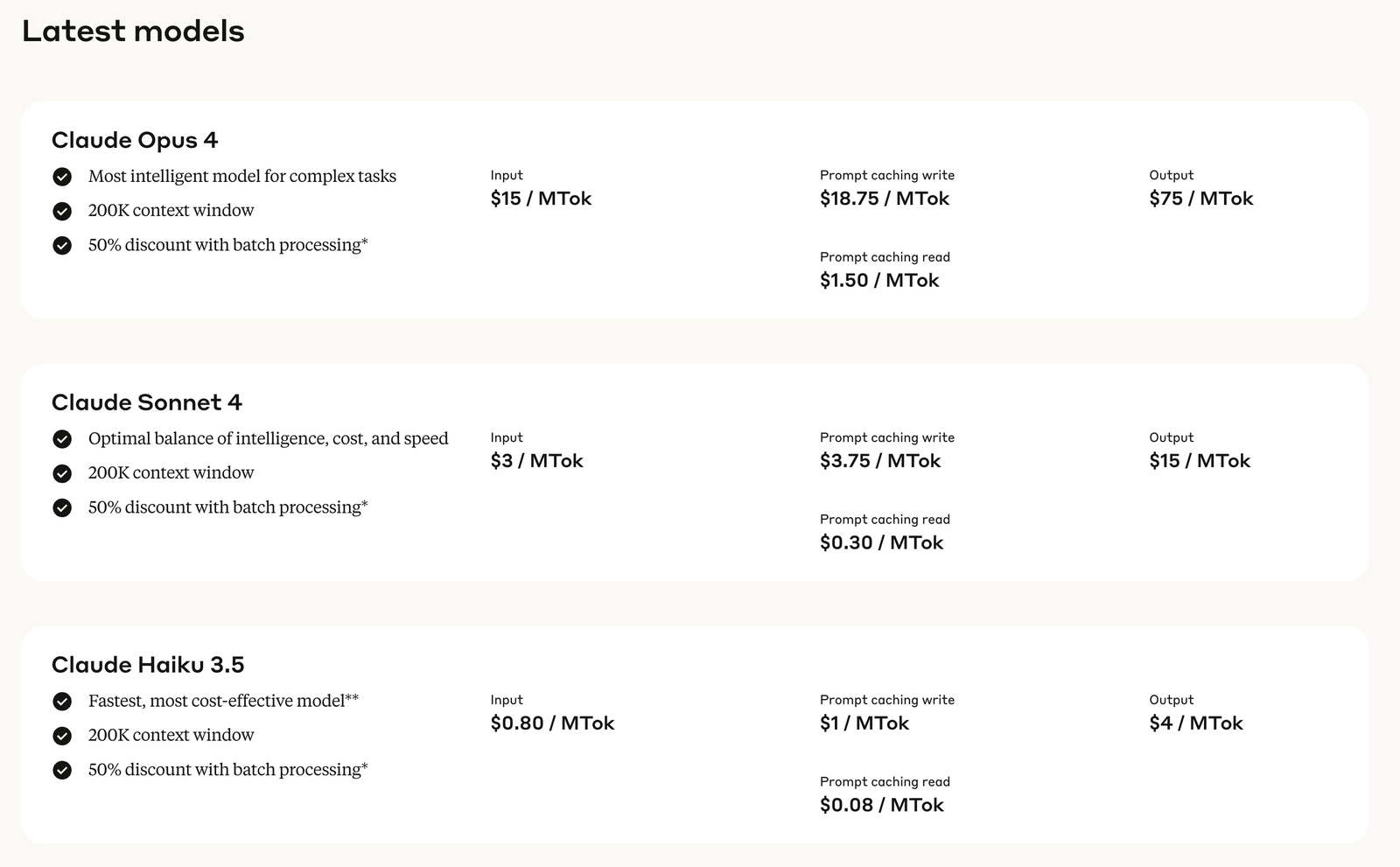

Claude 4 uses a token-based pricing model, which means you pay for the number of tokens processed in your requests and responses. Tokens are pieces of text, roughly comparable to words or parts of words. Understanding how tokenization works makes it easier to estimate your costs and optimize your usage.

Below, you can see a comparison of Anthropic’s leading AI models:

Input tokens are charged every time you send text to the API, and output tokens are charged for all text that Claude generates in its responses. The two are billed at different rates: output tokens cost more than input tokens because of the computation required for generation.

Counting tokens can be difficult because token count doesn’t map directly to word count. Punctuation, spaces, and special characters also consume tokens. Each model has its own pricing because the models differ in capabilities and computational requirements.
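To make the arithmetic concrete, here is a small sketch that estimates the cost of one request from its token counts. The function name `estimate_cost` is hypothetical, and the two example rates are illustrative placeholders, not quoted prices; take the real per-million-token rates from the official pricing page.

```python
def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_mtok: float,
    output_price_per_mtok: float,
) -> float:
    """Estimate the cost in USD of one request from its token counts."""
    total = input_tokens * input_price_per_mtok + output_tokens * output_price_per_mtok
    return total / 1_000_000


# Illustrative rates only (USD per million tokens); confirm on the pricing page.
EXAMPLE_INPUT_PRICE = 3.0
EXAMPLE_OUTPUT_PRICE = 15.0

cost = estimate_cost(2_000, 500, EXAMPLE_INPUT_PRICE, EXAMPLE_OUTPUT_PRICE)
print(f"${cost:.4f}")  # prints $0.0135
```

With these placeholder rates, the 500 output tokens cost more than the 2,000 input tokens, which is why trimming long responses is often the first place to look when optimizing spend.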

When thinking about cost optimization, it’s important to take into account what your goals are and which model will best suit your interests:

Claude 4 can be integrated with most programming languages and frameworks through standard HTTP requests. Anthropic also provides official SDKs for Python and JavaScript/TypeScript, which offer a more streamlined developer experience.

Python continues to be the most popular choice for AI applications because of its large ecosystem. The Python SDK integrates well with popular frameworks such as Django, Flask, and FastAPI.

JavaScript and TypeScript are good choices for web applications, Node backends, and full-stack development. The JavaScript SDK can be used with frameworks and libraries like React, Vue, Angular, Express, and many others.

As for other programming languages, the REST API can be used directly with HTTP clients. This includes languages like Go, Java, C#, Ruby, and more. These languages all have great HTTP libraries that can work seamlessly with Claude 4.

Installing the official SDKs is straightforward when using normal package managers. For Python development, it’s common to use the following command:

pip install anthropic

With this command, we can install the official Anthropic Python library, which gives us a clean interface to work with the Claude 4 API. The library is capable of handling authentication, error handling, and request formatting.

For Node.js and TypeScript development, we would run this command:

npm install @anthropic-ai/sdk

The JavaScript SDK ships with TypeScript definitions, so it works out of the box in both JavaScript and TypeScript projects.

You can read more in the official Claude documentation.

The official SDK is the best way to interact with the Claude 4 API because it provides the best development experience. However, there are a few other ways to work with it, as outlined here:

When using Python for development, I recommend using PyCharm, VS Code, Jupyter notebooks, or a similar Python IDE. Install the Anthropic SDK and configure your API key to be used as an environment variable. It’s common practice to create a .env file for local development.

JavaScript/TypeScript development can be achieved in any general-purpose IDE or code editor like VS Code, Cursor, or Windsurf; they all have good support for IntelliSense and debugging features. Setting up your project for TypeScript can be beneficial because it means that you can take advantage of the SDK’s type definitions.

Regardless of the setup you choose, install the relevant plugins and extensions so that your environment is ready for development and for maintaining code quality.

APIs commonly require authentication for security purposes, and the Claude 4 API is no different: every request must be authenticated. To do so, include your API key in the x-api-key header of each request you make. Fortunately, the official SDKs handle this automatically once you have configured them with your API key.

When using the Python SDK, your code might look like this:

    import anthropic

    client = anthropic.Anthropic(
        api_key="your-api-key-here",
    )

This code is self-explanatory and requires you to insert your API key.

For the JavaScript SDK, the code is quite similar to the Python version:

    import Anthropic from '@anthropic-ai/sdk';

    const anthropic = new Anthropic({
      apiKey: 'your-api-key-here',
    });

As mentioned earlier, you should never hardcode your API keys into the source code. It’s best practice to use an environment variable or some type of secure configuration management system instead. The majority of deployment platforms have a secure way to manage sensitive configuration.
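One way to follow this advice is to read the key from the environment at startup and fail early if it is missing. The sketch below assumes the variable is named `ANTHROPIC_API_KEY`; the `load_api_key` helper is a hypothetical name, not part of the SDK.

```python
import os
from collections.abc import Mapping


def load_api_key(
    env: Mapping[str, str] = os.environ,
    name: str = "ANTHROPIC_API_KEY",
) -> str:
    """Read the API key from the environment and fail loudly if it is missing."""
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {name} environment variable before starting the app")
    return key
```

The result can then be passed to the client, for example `anthropic.Anthropic(api_key=load_api_key())`. Accepting the environment as a parameter keeps the function easy to test without touching real secrets.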

The Messages API is the primary interface for interacting with the Claude 4 API. It uses a conversation format that works by having a user send a series of messages, which then receive a response from Claude. With this type of design, it becomes natural to build a conversational application as well as maintain a context across multiple exchanges.

The messages inside the conversation have a role of either “user” or “assistant” alongside the content. The “user” role defines input from your application, while “assistant” is defined as Claude’s responses.

The Claude API supports different types of content, including plain text and structured data. For most use cases, plain text is enough, but the API’s flexibility lets you build more complex interactions as needed.
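The conversation format described above can be sketched as a plain list of role/content dictionaries that grows by one entry per turn. The `add_turn` helper and the sample questions are hypothetical and only illustrate the shape of the data; nothing here calls the API.

```python
from typing import TypedDict


class Message(TypedDict):
    role: str  # "user" or "assistant"
    content: str


def add_turn(history: list[Message], role: str, content: str) -> list[Message]:
    """Return a new history with one more message appended."""
    if role not in ("user", "assistant"):
        raise ValueError(f"unsupported role: {role!r}")
    return history + [{"role": role, "content": content}]


history: list[Message] = []
history = add_turn(history, "user", "What is a token?")
history = add_turn(history, "assistant", "A token is a small piece of text.")
history = add_turn(history, "user", "How many tokens are in this sentence?")
# `history` can now be passed as the `messages` argument of a request.
```

Because the API itself is stateless, sending the full history with each request is what lets Claude keep context across exchanges.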

Let’s see an overview of what it looks like when running your first Claude 4 API call. In this example, we can see the basic structure and the required parameters.

Using the Python SDK:

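    import anthropic

    # Create a client; replace the placeholder with your own key
    client = anthropic.Anthropic(api_key="your-api-key")

    message = client.messages.create(
        model="claude-sonnet-4-20250514",
        max_tokens=1000,  # upper limit on the number of generated tokens
        temperature=0,  # 0 keeps the output as consistent as possible
        messages=[
            {
                "role": "user",
                "content": "Hello, Claude! Can you help me understand how to use your API?"
            }
        ]
    )

    # message.content is a list of content blocks; text blocks expose a .text attribute
    print(message.content)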

As before, your API key is required for authentication. You also need to specify the model, the maximum number of tokens to generate (max_tokens), the temperature, and the messages. In this example, the response content is printed to the console.

Using the JavaScript SDK:

    import Anthropic from '@anthropic-ai/sdk';

    const anthropic = new Anthropic({
      apiKey: 'your-api-key',
    });

    const msg = await anthropic.messages.create({
      model: 'claude-sonnet-4-20250514',
      max_tokens: 1000,
      temperature: 0,
      messages: [
        {
          role: 'user',
          content: 'Hello, Claude! Can you help me understand how to use your API?',
        },
      ],
    });

    console.log(msg.content);

The JavaScript example is much the same; the only difference is the syntax.

Response handling is an important part of building any application. The Claude 4 API returns structured responses that include the generated content along with metadata about the request, such as the stop reason and token usage. The official documentation also describes the structured error types, which make it easier to handle the different error conditions.
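To make response handling more concrete, here is a sketch that reads a response body as a plain dictionary, the way it would look after parsing the JSON from a raw HTTP call. The field names (`content`, `stop_reason`, `usage`) reflect my understanding of the response shape and should be checked against the API reference; the `sample` body is hand-written for illustration and is not real API output.

```python
def extract_text(response: dict) -> str:
    """Join the text of every text block in a Messages API response body."""
    return "".join(
        block.get("text", "")
        for block in response.get("content", [])
        if block.get("type") == "text"
    )


def summarize(response: dict) -> dict:
    """Pull out the fields most applications log or act on."""
    usage = response.get("usage", {})
    return {
        "text": extract_text(response),
        "stop_reason": response.get("stop_reason"),
        # A reply cut off by the token limit usually needs a retry or a notice.
        "truncated": response.get("stop_reason") == "max_tokens",
        "input_tokens": usage.get("input_tokens", 0),
        "output_tokens": usage.get("output_tokens", 0),
    }


# Hand-written sample body for illustration only.
sample = {
    "content": [{"type": "text", "text": "Hello! Happy to help."}],
    "stop_reason": "end_turn",
    "usage": {"input_tokens": 18, "output_tokens": 7},
}
summary = summarize(sample)
```

Checking whether a reply was truncated before showing it to users is a cheap safeguard, and logging the token counts gives you the raw data for cost tracking.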

An exciting new addition to Claude 4 is its code execution capability, which lets Claude write and run code as it solves problems and analyzes data, leading to more accurate results. With this feature, Claude can perform calculations, generate visualizations, and work with different types of data, all in real time.

The tool runs Python and supports a range of libraries for data analysis, visualization, and scientific computing. Applications that need these capabilities can benefit considerably, especially for chart and graph generation.

The Files API is another feature improvement from Claude 4. It allows users to upload and work with different types of media, including documents, images, and more. With this feature enabled, applications can process uploaded content, analyze it, and extract information for further analysis.

Some of the formats supported by the Files API include PDFs, images, text files, as well as structured data formats. When files have been successfully uploaded, they can be referenced in conversations. This allows Claude to analyze, summarize, and answer questions that are related to them.

The Model Context Protocol (MCP) connector gives Claude 4 a standard way of extending its core functionality by giving it the power to connect to external data sources and tools. This protocol leads to a seamless way of integrating with databases, APIs, and other similar services while also remaining secure and reliable.

MCP gives developers the ability to create custom connectors that give Claude access to certain data sources or tools relevant to their apps. For example, connectors could be created for a company’s customer database, knowledge base, or APIs.

The protocol handles authentication, data formatting, and error handling, which makes it easier to extend Claude’s capabilities without building complex, time-consuming integrations by hand. Standardization also means that connectors can be shared and reused across different environments.

Prompt caching in Claude 4 can keep content cached for up to one hour, which improves performance and reduces costs for applications that reuse the same prompts or work with large amounts of context that rarely changes.

Prompt caching is especially useful for apps that process large documents, maintain a consistent system prompt, or rely on template-based interactions. Because the processed prompt is cached, repeat requests that reuse the same content complete faster and cost less.

The cache system handles invalidation and management automatically. Developers can take advantage of it by structuring prompts to maximize cache hit rates, for example by placing stable content before content that changes, which is particularly effective for apps with predictable usage patterns.
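As a rough sketch of structuring a prompt for caching, the helper below builds a list of `system` blocks and marks the large, stable part with a `cache_control` entry. The function name `cached_system_prompt` is hypothetical. The sketch shows only the basic marker; the one-hour lifetime mentioned above may need an additional setting, so check the prompt caching documentation before relying on it.

```python
def cached_system_prompt(instructions: str, reference_text: str) -> list[dict]:
    """Build `system` blocks where the large, stable content is marked cacheable."""
    return [
        {"type": "text", "text": instructions},
        {
            "type": "text",
            "text": reference_text,
            # Content up to and including this block is eligible for caching.
            "cache_control": {"type": "ephemeral"},
        },
    ]


system_blocks = cached_system_prompt(
    "You answer questions about the attached handbook.",
    "<full handbook text goes here>",
)
# `system_blocks` would be passed as the `system` argument of a request,
# with the user question, which changes per request, sent in `messages`.
```

Keeping the unchanging material at the front and the per-request question at the end is what allows repeat requests to reuse the cached prefix.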

With streaming responses, your application can display Claude’s output while it’s being generated instead of waiting for the complete response to come through. This feature improves the user experience, especially when working with long content generation, by making applications feel more interactive and responsive.

Streaming is fairly easy to implement with the official SDKs. The streaming interface gives you access to text as it is generated, so you can update your user interface incrementally.

Streaming is especially valuable in chatbot interfaces because it creates the more natural conversational flow that users have come to expect from modern applications. Content generation tools and other apps where users wait for long outputs can also benefit from streaming.
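A minimal sketch of streaming with the Python SDK might look like the following. It assumes the client exposes `messages.stream(...)` as a context manager with a `text_stream` iterator, which is how I understand the SDK's streaming helper to work; the `stream_reply` wrapper and its `on_text` callback are hypothetical names. The client is passed in as an argument so the function can be exercised with a stand-in object.

```python
from typing import Any, Callable


def stream_reply(
    client: Any,
    prompt: str,
    on_text: Callable[[str], None],
    model: str = "claude-sonnet-4-20250514",
    max_tokens: int = 1000,
) -> str:
    """Stream a reply, passing each text chunk to `on_text` as it arrives."""
    chunks: list[str] = []
    with client.messages.stream(
        model=model,
        max_tokens=max_tokens,
        messages=[{"role": "user", "content": prompt}],
    ) as stream:
        for text in stream.text_stream:
            on_text(text)  # e.g. append the chunk to the chat window
            chunks.append(text)
    return "".join(chunks)
```

With a real client, a call such as `stream_reply(anthropic.Anthropic(), "Tell me a story", lambda t: print(t, end="", flush=True))` would print the text as it arrives and return the full reply at the end.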

The vision capabilities in Claude allow it to analyze and understand images, which opens up new possibilities for applications with visual content. The models can describe images, answer questions about visual elements, extract text from images, and analyze graphs, charts, and diagrams.

Several image formats are supported, and multiple images can be sent in a single request. This makes the feature useful for applications that involve document processing, visual analysis, and accessibility.

Claude 4 tends to perform best when images are clear, high resolution, and relevant for the task, so it’s worth taking this into account when doing data analysis.
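As an illustration of how an image can be attached to a request, the sketch below builds a user message that pairs one base64-encoded image with a question. The `image_message` helper is a hypothetical name, and the block layout reflects my understanding of the image content format, so verify it against the vision documentation.

```python
import base64


def image_message(image_bytes: bytes, media_type: str, question: str) -> dict:
    """Build a user message that pairs one base64-encoded image with a question."""
    return {
        "role": "user",
        "content": [
            {
                "type": "image",
                "source": {
                    "type": "base64",
                    "media_type": media_type,  # e.g. "image/png" or "image/jpeg"
                    "data": base64.b64encode(image_bytes).decode("ascii"),
                },
            },
            {"type": "text", "text": question},
        ],
    }
```

In practice the bytes would come from a file, for example `image_message(open("chart.png", "rb").read(), "image/png", "What trend does this chart show?")`, and the returned dictionary would go into the `messages` list of a request.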

System prompts let you customize Claude’s behavior, personality, and responses. This can be tailored to your specific application. When you give clear instructions on how you want Claude to work, you can create a consistent, branded experience that best aligns with your app’s requirements.

Good system prompts should be clear, specific, and provide strong examples of the type of behavior that is needed. Prompts should define the context, role, and constraints needed for Claude’s responses.

System prompts are especially important for customer-facing applications, where consistency and brand alignment matter. It is worth testing and iterating on your prompts until they produce the behavior you are looking for.
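Here is a small sketch of a system prompt that defines a role, a context, and constraints, assembled into the keyword arguments for a request. It assumes the system prompt is passed as a top-level `system` parameter rather than as a message; the product name and the `build_support_request` helper are hypothetical.

```python
SUPPORT_PROMPT = (
    "You are a support assistant for Acme Cloud (a hypothetical product). "
    "Answer in at most three sentences, keep a friendly and professional tone, "
    "and say you do not know rather than guessing."
)


def build_support_request(system_prompt: str, user_input: str) -> dict:
    """Assemble keyword arguments for a request that uses a system prompt."""
    return {
        "model": "claude-sonnet-4-20250514",
        "max_tokens": 1000,
        "system": system_prompt,  # top-level parameter, not a message role
        "messages": [{"role": "user", "content": user_input}],
    }


request = build_support_request(SUPPORT_PROMPT, "How do I reset my password?")
```

The dictionary can be unpacked into a call such as `client.messages.create(**request)`. Keeping the prompt in one named constant makes it easier to version and test as you iterate on the wording.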

Applications that process large volumes of requests or handle time-sensitive tasks can also benefit from Claude 4. Batch processing and asynchronous operations let you submit many requests efficiently and handle the responses as they become ready, rather than blocking on each individual request.

Batch processing is especially useful when an application has to analyze large datasets, process multiple documents at the same time, or generate content for many users. Because these operations are asynchronous, the app stays responsive while Claude processes the requests in the background.

When using batch processing, it’s good to consider factors like rate limiting, error handling for individual requests, and progress tracking for long-running operations. Scalability and user experience both improve when batch processing is integrated effectively.
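As a sketch of preparing a batch, the helper below turns a set of documents into one request entry per document, each tagged with an ID so results can be matched back to their inputs. The `custom_id` and `params` layout reflects my understanding of the batch request format, and `build_batch_requests` is a hypothetical name; confirm the exact shape in the batch processing documentation.

```python
def build_batch_requests(
    documents: dict[str, str],
    model: str = "claude-sonnet-4-20250514",
) -> list[dict]:
    """Turn {custom_id: text} into one batch entry per document."""
    return [
        {
            "custom_id": custom_id,  # used to match results back to inputs
            "params": {
                "model": model,
                "max_tokens": 500,
                "messages": [
                    {"role": "user", "content": f"Summarize this document:\n\n{text}"}
                ],
            },
        }
        for custom_id, text in documents.items()
    ]


batch = build_batch_requests({
    "report-q1": "First quarter revenue grew...",
    "report-q2": "Second quarter revenue was flat...",
})
```

With the Python SDK, a list like this would typically be submitted with something like `client.messages.batches.create(requests=batch)`, after which the application polls for completion and tracks progress by `custom_id`.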

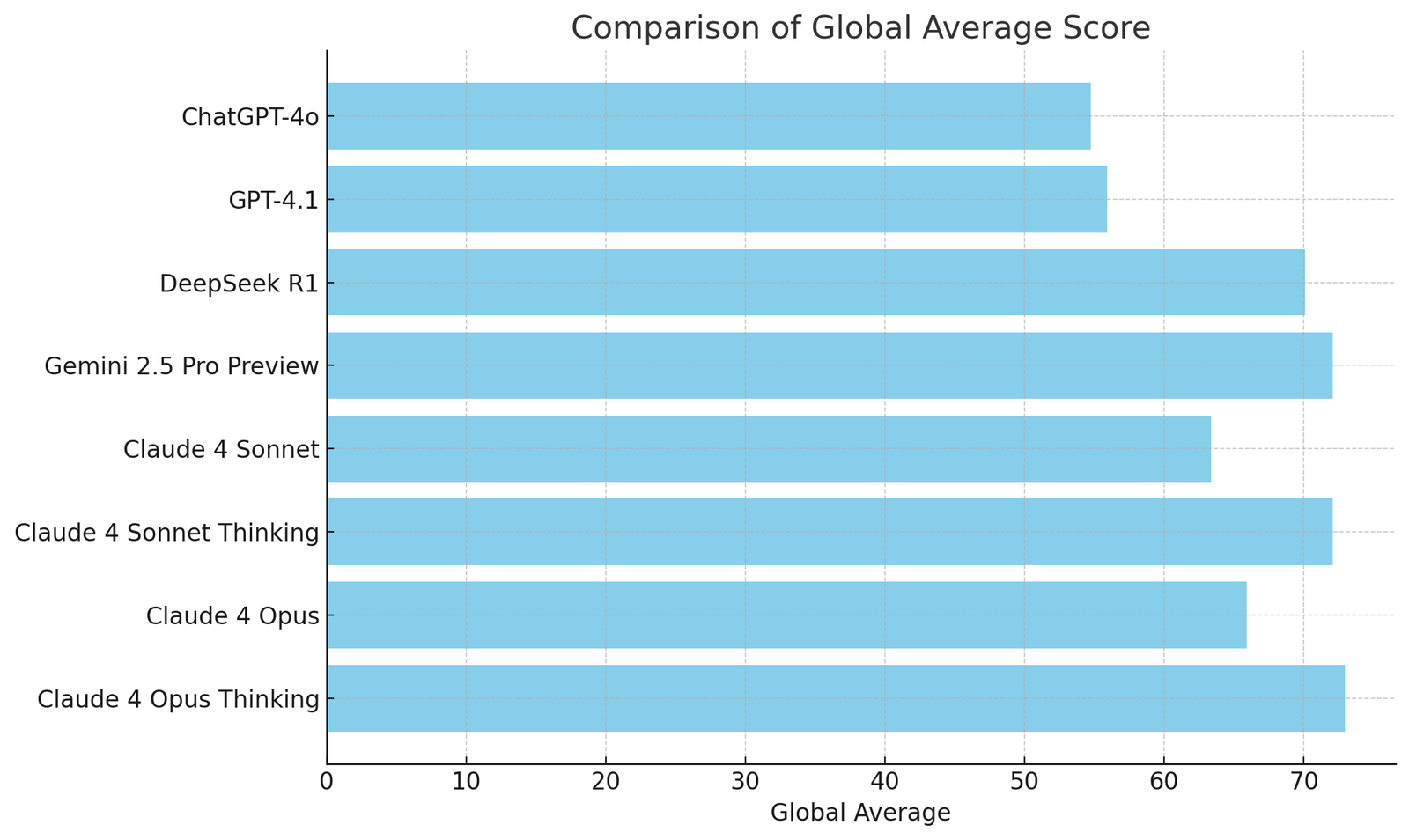

Many companies, including Google, DeepSeek, and OpenAI, offer leading AI models, each with distinct strengths and capabilities. In this section, we will review how these models compare to Anthropic’s Claude 4 models.

The performance benchmark and model comparison data were checked in June 2025. As the models improve, the data is likely to change, so bear that in mind.

For a more in-depth and up-to-date comparison, I recommend you check out these popular AI LLM leaderboard platforms:

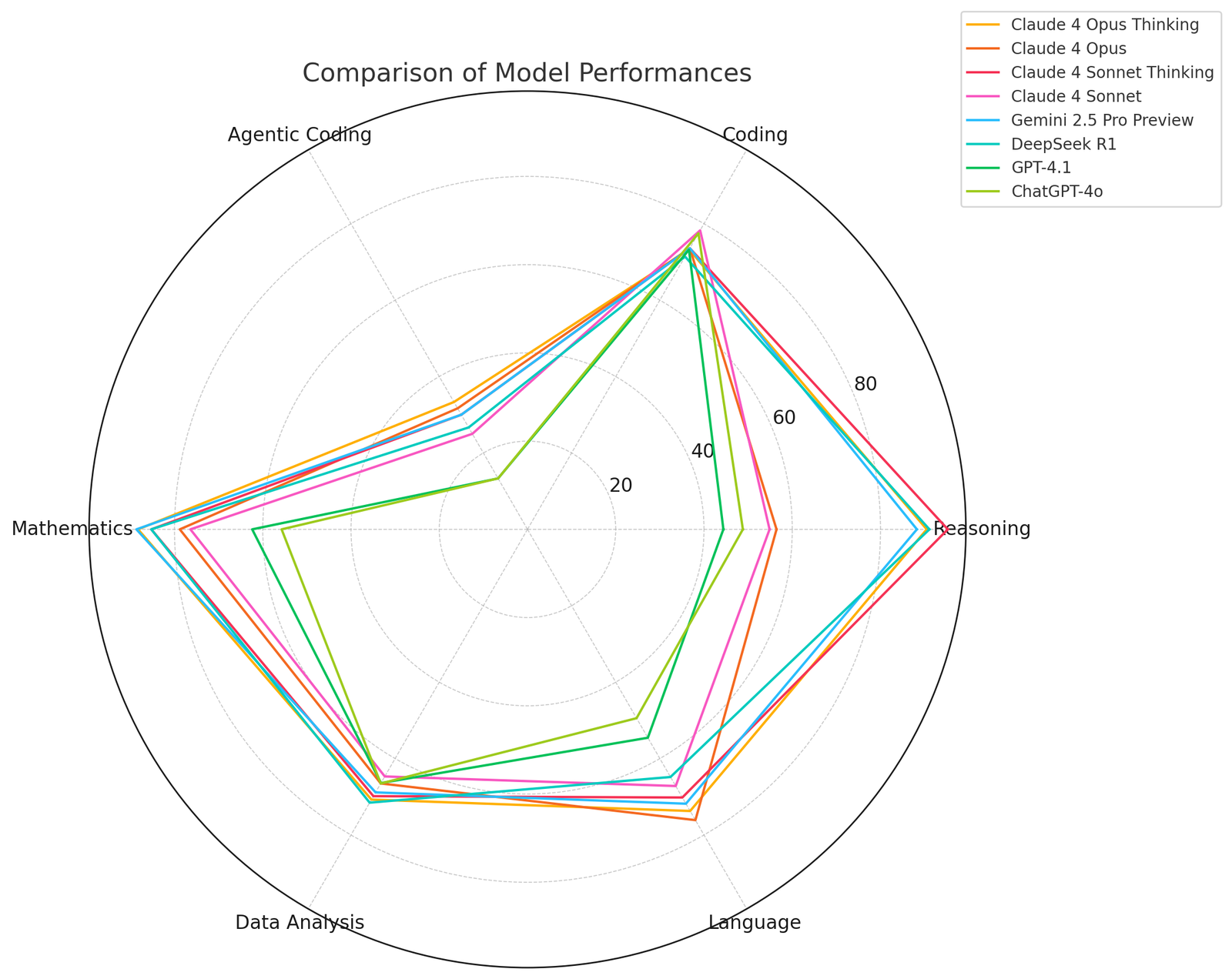

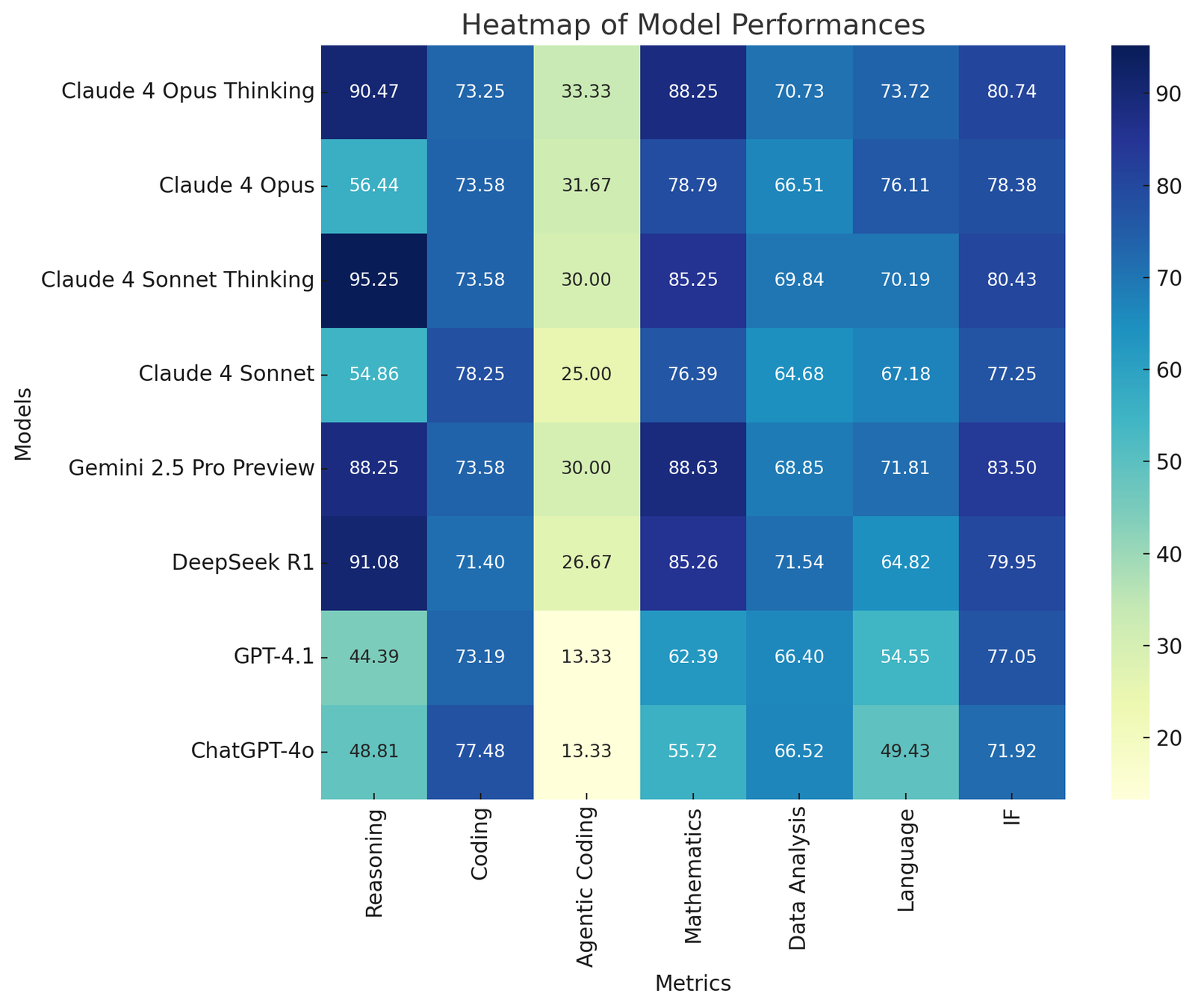

This performance data is based on LiveBench results from June 2025:

| Model | Organization | Global Average | Reasoning | Coding | Agentic coding | Mathematics | Data analysis | Language | Instruction following |
|---|---|---|---|---|---|---|---|---|---|
| Claude 4 Opus Thinking | Anthropic | 72.93 | 90.47 | 73.25 | 33.33 | 88.25 | 70.73 | 73.72 | 80.74 |
| Claude 4 Opus | Anthropic | 65.93 | 56.44 | 73.58 | 31.67 | 78.79 | 66.51 | 76.11 | 78.38 |
| Claude 4 Sonnet Thinking | Anthropic | 72.08 | 95.25 | 73.58 | 30.00 | 85.25 | 69.84 | 70.19 | 80.43 |
| Claude 4 Sonnet | Anthropic | 63.37 | 54.86 | 78.25 | 25.00 | 76.39 | 64.68 | 67.18 | 77.25 |
| Gemini 2.5 Pro Preview (2025-05-06) | Google | 72.09 | 88.25 | 73.58 | 30.00 | 88.63 | 68.85 | 71.81 | 83.50 |
| DeepSeek R1 (2025-05-28) | DeepSeek | 70.10 | 91.08 | 71.40 | 26.67 | 85.26 | 71.54 | 64.82 | 79.95 |
| GPT-4.1 | OpenAI | 55.90 | 44.39 | 73.19 | 13.33 | 62.39 | 66.40 | 54.55 | 77.05 |
| ChatGPT-4o | OpenAI | 54.74 | 48.81 | 77.48 | 13.33 | 55.72 | 66.52 | 49.43 | 71.92 |

This diagram shows how these models compare when it comes to Global Average Score metrics:

This diagram shows how these models compare when it comes to Model Performance:

As the data shows, the Claude and Gemini models lead in most categories. DeepSeek R1 is also strong across the board, while the OpenAI models keep pace on coding but trail in reasoning and mathematics.

When we take a look at a summary for the benchmark tests, we can see the differences between the models and how the size of the context window can be highly beneficial in many use cases:

| Model | SWE-bench Verified | Context Window | Primary strengths |
|---|---|---|---|
| Claude Opus 4 | 72.5% (79.4% high-compute) | 200K | Best coding, sustained performance |
| Claude Sonnet 4 | 72.7% (80.2% parallel compute) | 200K | Balanced performance/efficiency |
| Gemini 2.5 Pro | 63.2% | 1M+ | Advanced reasoning, multimodal |
| DeepSeek R1 | 36.8% | 128K | Open source, reasoning |
| GPT-4.1 | 54.6% | 1M | Large context, instruction following |
| GPT-4o | 33.2% | 128K | Multimodal, speed |

*With thinking capabilities enabled

Sources for benchmark data:

Now let’s look at the top three LLMs across a few popular categories: AI agent performance, content creation, code assistance, and data analysis.

AI agent performance:

1. Claude Opus 4: In tests, this model set a new record with a seven-hour autonomous coding run, completing complex software engineering tasks with very little human intervention. It worked well on its own and managed memory well across extended workflows.

2. Gemini 2.5 Pro: Google’s best model has strong agentic capabilities with built-in reasoning and tool use. Its 1M+ token context window lets it keep track of complex multi-step tasks.

3. Claude Sonnet 4: This model is reliable in production agent deployments and has an excellent cost-to-effectiveness ratio. It scores 35.5% on Terminal-bench (41.3% with parallel test-time compute).

Content creation:

1. Gemini 2.5 Pro: Google’s Gemini 2.5 Pro leads the LMArena leaderboard for human preference in content quality, and it demonstrates top-of-the-line style and creativity in text generation.

2. Claude Sonnet 4: Claude Sonnet 4 offers a high level of control over content generation and shows reduced hallucination in most tests.

3. GPT-4.1: OpenAI’s GPT-4.1 model improves upon GPT-4o with better consistency in content generation tasks.

Code assistance:

1. Claude Opus 4: Anthropic’s Claude Opus 4 is an industry leader for coding, scoring 72.5% on SWE-bench Verified, and it outperforms GPT-4o and Gemini 2.5 Pro in multi-step code generation and debugging tests.

2. Claude Sonnet 4: This model achieves state-of-the-art performance with a 72.7% score on SWE-bench Verified, and with parallel test-time compute it delivers better coding performance than larger models.

3. DeepSeek R1: DeepSeek R1 outperforms competitors in algorithmic challenges (87% vs. 82% on LeetCode Hard problems), but it can struggle with some framework-specific patterns.

Data analysis:

1. Gemini 2.5 Pro: It’s hard to beat Gemini 2.5 Pro in data analysis tests. The model has strong multimodal data processing capabilities, and its 1M+ token context window helps when analyzing large datasets and reading long documents in detail.

2. Claude Opus 4: With its extended thinking capabilities, this model can use memory files to keep a persistent analysis context across long-running data processing tasks.

3. GPT-4.1: The 1M token context window lets the model process large documents and datasets with a better understanding of long prompts.

In this table, we can see some of their best use cases:

| Model | Context Window | Best use cases |
|---|---|---|
| Claude Opus 4 | 200K | Complex coding, AI agents |
| Claude Sonnet 4 | 200K | Production coding, balanced use |
| Gemini 2.5 Pro | 1M+ | Content, data analysis, research |
| DeepSeek R1 | 128K | Open-source projects |
| GPT-4.1 | 1M | Enterprise, long documents |
| GPT-4o | 128K | Multimodal, general purpose |

The benchmarking data confirms that the Claude 4 models still lead in many coding tasks and that Gemini 2.5 Pro excels in reasoning and content creation. Context window size and specific capabilities vary greatly across models, so the right choice depends on your use case and requirements.

After reading through this walkthrough guide, it becomes clear that the new Claude 4 API is a significant advancement over the previous generations and offers many new capabilities for developers. Having access to the powerful language, code execution, and vision capabilities means that the platform is very versatile when building AI applications.

The API has an extensive, well-thought-out SDK with detailed documentation. With a transparent token-based pricing model, developers can balance cost and features by finding the right model for their projects. New capabilities like the Files API, MCP connectors, and the extended caching open up doors to new opportunities that were not possible in the past.

Claude’s enhanced reasoning capabilities and the improvements made in Claude Sonnet 4 and Claude Opus 4 mean that building AI-powered applications has reached a new level. The official Anthropic documentation provides detailed information about the new API capabilities, best practices, and troubleshooting tips, which are worth exploring as you improve your AI skills.
