Serverless Architecture, a growing trend over the last several years, lets you hand your code to a cloud provider that runs it on its own servers. You don’t have to worry about how the server works, how it’s maintained, or how it’s scaled. Instead, you can focus on writing your business logic and pay only for the number of times your code runs.

The best-known tool for Serverless Architecture is AWS Lambda, a serverless function service from AWS. However, the other big cloud providers, like GCP and Azure, have their own offerings as well.

In this article, we’ll learn how to deploy a Next.js application to AWS Lambda in Serverless Mode, helping us maximize speed and scalability. Let’s get started!

Since v8, Next.js has included Serverless Mode. With Serverless Mode in Next.js, developers can continue to use the developer-friendly, integrated environment of Next.js for building applications, while also taking advantage of Serverless Architecture by breaking up the app into smaller pieces at deployment.

Serverless Mode includes several built-in configurations, but these can be somewhat complex to set up yourself. Deploying to Vercel or Netlify, by contrast, is quite straightforward.

Many startups and smaller companies keep all their infrastructure on a larger cloud platform, using granular permissions to control access to certain features, like the budget. This infrastructure can be quite complex, which helps explain why cloud architects are so in demand these days. In this situation, we can make our lives much easier with the Serverless Framework.

The Serverless Framework is a tool that makes deploying to cloud platforms a lot easier, automating provisioning by letting you define your project’s infrastructure as YAML.

Serverless Components are plugins for the Serverless Framework that have pre-defined steps for building typical project structures. Serverless Next.js is a Serverless Component for easily deploying Next.js apps to AWS. At the time of writing, Serverless Next.js supports self-hosting only on AWS, but eventually, it will support other cloud providers as well.

To follow along with the remainder of the tutorial, you’ll need the following:

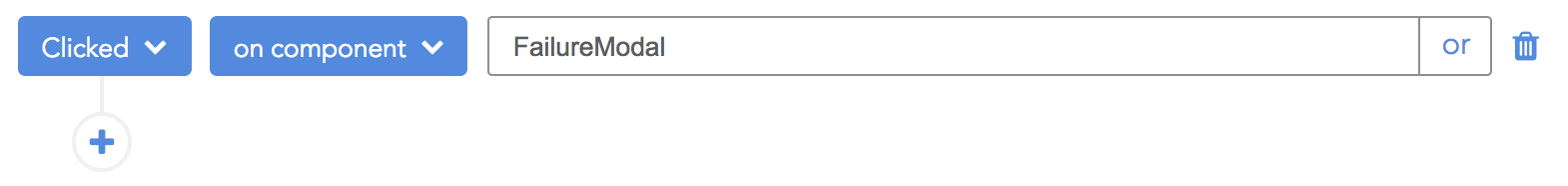

- User and Role with access to Lambda, SQS, and CloudFront

In the root of your Next.js project, the folder that contains the package.json file, create a serverless.yml file with the following code:

    # serverless.yml
    myNextApplication:
      component: "@sls-next/serverless-component@3.6.0" # use latest version, currently 3.6.0

Then, we’ll run the serverless command below, which will read serverless.yml, download the component, and run it inside your project. Make sure to pass your AWS credentials as environment variables in the command:

AWS_ACCESS_KEY_ID=accesskey AWS_SECRET_ACCESS_KEY=sshhh serverless
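When you pass credentials inline like this, a typo in a variable name is easy to miss. The following is a hypothetical preflight helper, not part of the Serverless Framework; the function name `missingAwsCredentials` is an assumption made for illustration, and only the two variable names come from the command above.

```javascript
// Hypothetical preflight check (not part of the Serverless Framework).
// Returns the names of the expected AWS variables that are unset or empty.
const REQUIRED_AWS_VARS = ["AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY"];

function missingAwsCredentials(env = process.env) {
  return REQUIRED_AWS_VARS.filter((name) => !env[name]);
}

// Report the result for the current shell environment
const missing = missingAwsCredentials();
if (missing.length > 0) {
  console.warn(`Missing AWS credentials: ${missing.join(", ")}`);
} else {
  console.log("AWS credentials found in the environment.");
}
```

Run it with the same variables you plan to pass to `serverless`; the values could just as well come from a CI secret store instead of being typed inline.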

The command above will deploy your Next.js app to AWS in Serverless Mode. Every API endpoint and page will be configured as an AWS Lambda@Edge function, giving you the speed, cost, and scalability benefits of Serverless Architecture without having to manually configure API gateways and Lambda functions in the AWS console.
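To make that concrete, here is a minimal sketch of the kind of API route that would be packaged as a function. The file path `pages/api/hello.js` and the response payload are assumptions for illustration; the `(req, res)` handler shape with `res.status().json()` is the usual Next.js API route signature.

```javascript
// pages/api/hello.js (hypothetical route, for illustration only)
// A Next.js API route is a function that receives the request and response.
function handler(req, res) {
  if (req.method !== "GET") {
    // Reject anything other than GET
    res.status(405).json({ error: "Method not allowed" });
    return;
  }
  res.status(200).json({ message: "Hello from the edge" });
}

// CommonJS export so the sketch runs under plain Node;
// Next.js projects typically write `export default handler` instead.
module.exports = handler;
```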

What is AWS Lambda@Edge?

AWS Lambda@Edge functions are serverless functions that are deployed through the AWS CloudFront CDN. Because they run at CDN locations closer to the user, they can typically respond with lower latency than regular AWS Lambda functions deployed to a single AWS region.

All static routes in your Next.js application will be pre-rendered and distributed on CloudFront to maximize speed. The result is a dynamic application that can run at close to the speed of a static site because the dynamic parts are distributed by the CDN as well. Pretty cool!

At the time of writing, the following features are not yet supported by the Serverless Next.js Component:

Note that although ISR is supported, it requires your AWS Deployment User and Roles to have access to Lambda and SQS.
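As a reminder of what ISR looks like on the Next.js side, the sketch below returns a `revalidate` interval from `getStaticProps`. The 60-second interval and the `generatedAt` prop are arbitrary choices for illustration, and the page component that would consume the prop is omitted.

```javascript
// pages/index.js (excerpt; hypothetical page, component omitted)
// Returning `revalidate` from getStaticProps opts the page in to ISR:
// Next.js may regenerate the page in the background at most once per interval.
async function getStaticProps() {
  return {
    props: { generatedAt: new Date().toISOString() },
    revalidate: 60, // seconds; arbitrary value for illustration
  };
}

// CommonJS export so the sketch runs under plain Node;
// in a real page file this is a named export next to the page component.
module.exports = { getStaticProps };
```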

In this article, we explored deploying a Next.js application to AWS Lambda using the Serverless Framework. Next.js provides a great developer environment that allows us to take advantage of static, server-side, and client-side code. With Serverless Next.js, we can easily deploy our application to a Serverless Architecture to maximize speed and scalability.

I hope you enjoyed this article, and be sure to leave a comment if you have any questions. Happy coding!

One Reply to "Deploying Next.js to AWS using Serverless Next.js"

Thanks for this great article. You said `Make sure to include your AWS credential as .env variables in the command`, but I think these credentials can be stored in GitHub settings and then used from a GitHub Action to deploy.