A web server is a piece of software that accepts a network request from a user agent, typically a web browser, and returns either the response for the request or an error message. Two dominant solutions for HTTP servers today are Apache and Nginx. However, a new player in the space, Caddy Web Server, is gaining traction for its ease of use. So, which is the best web server for you?

In this article, we’ll examine each web server, comparing their performance, customizability, and architecture. By the end, you should be familiar with the strengths of each web server and have a better grasp of which one is best suited for your project.

Let’s get started!

The Apache HTTP Server, maintained by the Apache Software Foundation, was released in 1995 and quickly became the world’s favorite web server. Most often used as part of the LAMP stack (Linux, Apache, MySQL, and PHP), Apache is available for both Unix and Windows operating systems.

Open sourced and written in C, Apache is based on a modular architecture that allows a system administrator to select what modules to apply either during compilation or at runtime, easily configuring how the server should operate. As a result, Apache caters to a wide range of use cases, from serving dynamic content to acting as a load balancer for supported protocols like HTTP and WebSockets.

Apache’s core functionalities include binding to ports on a machine and accepting and processing requests. These tasks are not hardwired into the server. Instead, they are handled by a set of Multi-Processing Modules (MPMs) that are included in the software package.

The MPM architecture offers more options for customization depending on the needs of a particular site and the machine’s capabilities. For example, the worker or event MPMs can replace the older prefork MPM, which uses one process per connection and doesn’t scale well when high concurrency is required.
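To make the scaling difference concrete, here is a rough back-of-the-envelope sketch in Python. It assumes a prefork-style model needs one child process per concurrent connection, while a worker-style model shares each child process among a fixed number of threads (25 is used here, matching Apache’s default `ThreadsPerChild`). The `processes_needed` helper is hypothetical and ignores limits such as `MaxRequestWorkers` and spare-thread settings.

```python
import math


def processes_needed(connections: int, threads_per_child: int = 1) -> int:
    """Estimate the child processes required to serve `connections` at once.

    Simplified model: every connection occupies one thread, and each child
    process offers `threads_per_child` threads.
    """
    return math.ceil(connections / threads_per_child)


# Prefork-style: each process handles exactly one connection.
prefork = processes_needed(400)
# Worker-style: each process runs 25 threads (assumed ThreadsPerChild value).
worker = processes_needed(400, threads_per_child=25)

print(prefork, worker)  # prints "400 16"
```

Fewer processes generally means less memory spent on per-process overhead, which is the main reason the threaded MPMs cope better with many simultaneous connections.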

Besides the modules that are shipped as part of the server distribution, there is an abundance of third-party modules for Apache that you can use to extend its functionality.

You can find Apache’s main configuration in a .conf file: /etc/apache2/apache2.conf on Debian-based Linux distributions and /etc/httpd/conf/httpd.conf on Fedora and Red Hat Enterprise Linux.

To specify an alternate configuration file, start the server with the -f flag. You can also divide the server configuration into several .conf files and add them using the Include directive. Keep in mind that Apache may recognize changes to the main configuration file only after restarting.

You can also change server configuration at the directory level using an .htaccess file, allowing you to customize behavior for individual websites without changing the main configuration. However, .htaccess files can increase time to first byte (TTFB) and CPU usage, degrading performance. When possible, avoid using .htaccess files. You can disable them altogether by setting the AllowOverride directive to None.
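One reason for the overhead is that, when overrides are allowed, Apache has to look for an .htaccess file in every directory on the way to the requested file, on every request. The following Python sketch lists those lookups for a given file. It assumes overrides are enabled for every directory from the filesystem root down; `htaccess_lookups` is a hypothetical helper for illustration, not part of Apache.

```python
from pathlib import PurePosixPath


def htaccess_lookups(file_path: str) -> list[str]:
    """Return the .htaccess paths checked when serving `file_path`.

    Assumes overrides are allowed in every directory, so each directory
    from the root down to the file's own directory is consulted.
    """
    directory = PurePosixPath(file_path).parent
    # `parents` runs from the nearest ancestor up to the root, so reverse it.
    chain = [directory, *directory.parents][::-1]
    return [str(d / ".htaccess") for d in chain]


print(htaccess_lookups("/www/htdocs/example/index.html"))
# ['/.htaccess', '/www/.htaccess', '/www/htdocs/.htaccess',
#  '/www/htdocs/example/.htaccess']
```

Moving the same directives into the main configuration avoids these per-request filesystem checks, because that file is read once at startup.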

With origins tracing back to the C10K problem, which refers to the difficulty of handling over 10,000 concurrent connections on a single web server, Nginx was developed with performance in mind. One of its original goals focused on speed, an area in which Apache was believed to be lacking.

First appearing publicly in 2004 as open-source software under the 2-clause BSD license, Nginx expanded in 2011 with a commercial variant for the enterprise called Nginx Plus.

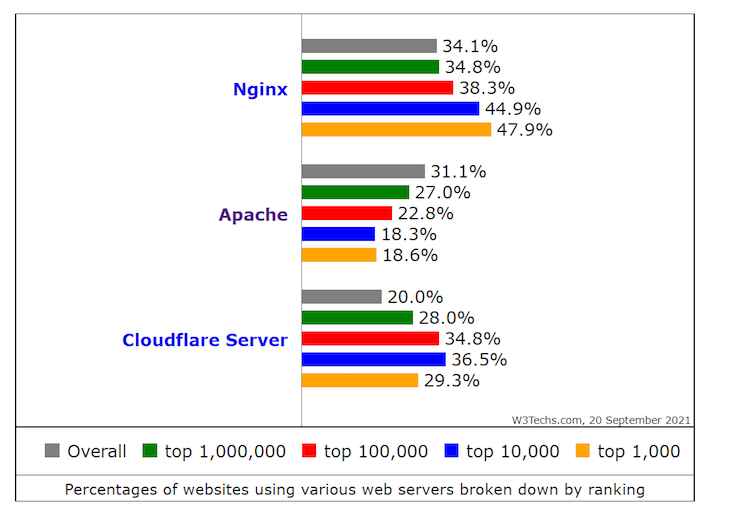

Nginx is currently used on over 40 percent of the top 10,000 websites. When you consider that Cloudflare Server also uses Nginx under the hood for content delivery, the figure is even higher.

The recommended default configuration involves setting the worker_processes directive to auto. To use hardware resources efficiently, one worker process is created per CPU.

On a Unix operating system, the configuration files for Nginx are located in the /etc/nginx/ directory with nginx.conf as the main configuration file. Nginx uses directives for its configuration, which are grouped into blocks or contexts. Here’s a skeleton of the configuration file:

    user www-data;
    worker_processes auto;
    pid /run/nginx.pid;
    include /etc/nginx/modules-enabled/*.conf;

    events {
        . . .
    }

    http {
        . . .
    }

When high performance and scalability are critical requirements, Nginx is frequently the go-to web server. Nginx uses an asynchronous, event-driven, and non-blocking architecture. It follows a multi-process model in which one master process creates several worker processes for handling all network events:

    $ ps aux -P | grep nginx
    root     19199 0.0 0.0 55284 1484 ? Ss 13:02 0:00 nginx: master process /usr/sbin/nginx
    www-data 19200 0.0 0.0 55848 5140 ? S  13:02 0:00 nginx: worker process
    www-data 19201 0.0 0.0 55848 5140 ? S  13:02 0:00 nginx: worker process
    www-data 19202 0.0 0.0 55848 5140 ? S  13:02 0:00 nginx: worker process
    www-data 19203 0.0 0.0 55848 5140 ? S  13:02 0:00 nginx: worker process

The master process controls the workers’ behavior and carries out privileged operations like binding to network ports and applying configuration. Because each worker runs an event loop rather than blocking on a single client, Nginx can support thousands of incoming network connections per worker process. Instead of creating a new thread or process for each connection, Nginx needs only a new file descriptor and a small amount of additional memory.
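The idea of serving many connections from a single event loop can be sketched in Python with the standard `selectors` module. This is only an illustration of the general technique, not how Nginx is implemented (Nginx is written in C). The `echo_all` function is a hypothetical name, and the loop simply echoes data back until every peer has closed its side.

```python
import selectors
import socket


def echo_all(conns: list[socket.socket]) -> int:
    """Echo data on many connections from one thread; return reads echoed."""
    sel = selectors.DefaultSelector()
    for conn in conns:
        conn.setblocking(False)  # never let one slow client stall the loop
        sel.register(conn, selectors.EVENT_READ)

    echoed = 0
    open_conns = len(conns)
    while open_conns:
        # Wait until at least one registered socket has data or has closed.
        for key, _events in sel.select():
            conn = key.fileobj
            data = conn.recv(4096)
            if data:
                # Fine for small payloads; a real server would queue unsent
                # bytes and wait for the socket to become writable.
                conn.sendall(data)
                echoed += 1
            else:
                # An empty read means the peer has finished sending.
                sel.unregister(conn)
                conn.close()
                open_conns -= 1
    sel.close()
    return echoed
```

Each extra connection costs one more registered file descriptor rather than a new thread or process, which is the property the paragraph above describes.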

Caddy is an open-source web server platform designed to be simple, easy to use, and secure. Written in Go with zero dependencies, Caddy is easy to download and runs on almost every platform that Go compiles on.

By default, Caddy comes with support for automatic HTTPS by provisioning and renewing certificates through Let’s Encrypt. Of the three web servers we’ve reviewed, Caddy is the only one to provide these features out of the box, and it also comes with an automatic redirection of HTTP traffic to HTTPS.

Compared to Apache and Nginx, Caddy’s configuration files are much smaller. Additionally, Caddy supports TLS 1.3, the newest version of the transport security standard.

Installing Caddy is straightforward. Simply download the static binary for your preferred platform from GitHub or follow the instructions in the installation documentation. To launch the Caddy server daemon, run caddy run in the terminal. However, the server won’t serve anything without a configuration file.

Caddy uses JSON for its configuration but also supports several configuration adapters. The standard for setting up configuration is through a Caddyfile. Here’s a simple “Hello World” configuration, which binds to port 3000:

    :3000 {
        respond "Hello, world!"
    }

For the changes to take effect, you’ll need to stop the server by pressing Ctrl+C and restart it with caddy run. Alternatively, you can apply the new configuration to a running server by executing caddy reload in a separate terminal. The latter approach is preferable for avoiding downtime.

Accessing http://localhost:3000 in your browser or through curl should produce the expected “Hello, world!” message:

    $ curl http://localhost:3000
    Hello, world!
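Because JSON is Caddy’s native configuration format, the “Hello World” Caddyfile has a JSON equivalent. The Python sketch below builds an approximation of that document. The structure follows Caddy’s documented `apps.http.servers` layout with a `static_response` handler, but treat the exact shape as an assumption and compare it against the output of `caddy adapt` on your own Caddyfile. The server name `srv0` and the `hello_world_config` helper are illustrative choices.

```python
import json


def hello_world_config(port: int) -> dict:
    """Build a Caddy-style JSON config that answers every request with text."""
    return {
        "apps": {
            "http": {
                "servers": {
                    # The server name is arbitrary; "srv0" is an assumption.
                    "srv0": {
                        "listen": [f":{port}"],
                        "routes": [
                            {
                                "handle": [
                                    {
                                        "handler": "static_response",
                                        "body": "Hello, world!",
                                    }
                                ]
                            }
                        ],
                    }
                }
            }
        }
    }


print(json.dumps(hello_world_config(3000), indent=2))
```

The nesting shows why the Caddyfile is usually the more convenient format to write by hand, while JSON is better suited to configuration generated by tools.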

Caddy provides the following directives:

- file_server: implements a static file server
- php_fastcgi: proxies requests to a PHP FastCGI server
- reverse_proxy: directs incoming traffic to one or more backends with load balancing, health checks, and automatic retries

In terms of performance, Caddy has been shown to be competitive with Apache but behind Nginx both in terms of requests handled per second and stability under load.

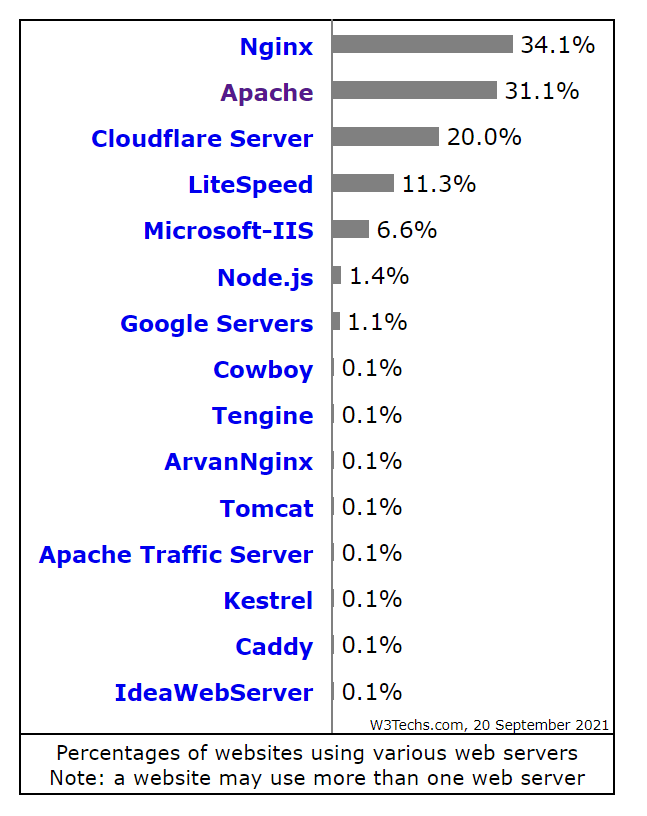

If Nginx is being used for performance optimization, it may not be possible to replace it with Caddy without observing some degradation in performance. Another possible downside to Caddy is that it currently has a small market share, possibly limiting resources for education and troubleshooting.

In this article, we discussed some of the key traits of Caddy, Apache, and Nginx to help you choose the web server that best suits your project’s needs.

If your primary concern is performance, or you plan to serve a large amount of static content, Nginx is likely your best option. While Caddy is easy to configure and performant for most use cases, if you need flexibility and customization, Apache is your best bet.

Keep in mind that you can also combine any two web servers for a great result. For example, you can serve static files with Nginx and process dynamic requests with Apache or Caddy. Thanks for reading, and happy coding!


5 Replies to "Comparing the best web servers: Caddy, Apache, and Nginx"

I thought that Caddy had better performance than nginx

first i used apache , then through nginx for my web site xetaitot.com . WordPress is probably more suitable for Nginx!

Since most of us use WordPress like dynamic sites, Nginx is the best choice for us.

I find that the performance difference isn’t such a big issue. I’m mostly using it to reverse-proxy requests where some requests go to a backend API service, others to static content. That’s just my own take.

I’ve personally used Caddy very rarely as a proxy for a single service.

However, when it comes to orchestrating a microservice mesh, Traefik (based on Caddy) has been an easy-to-use option with out-of-the-box observability and acceptable performance.