At its recent WWDC, Apple introduced significant new advancements to Apple Intelligence. One of the world’s largest technology companies is changing how it deploys AI across its products, and with this evolution comes a host of new considerations for frontend developers and UX designers.

Hundreds of millions of users globally access their digital lives daily through iPhones, iPads, MacBooks, and other Apple devices. With such a vast and engaged user base, understanding and leveraging Apple’s unique approach to AI is paramount for creating future-forward, relevant, and compelling app experiences.

This shift calls for a matching change in mindset when designing modern web applications.

In this article, we will:

Let’s dive in!


Before going further, let’s first understand what Apple Intelligence is and what kind of AI it is.

We’re used to chatbots. They’re almost synonymous with the idea of artificial intelligence in the minds of many people.

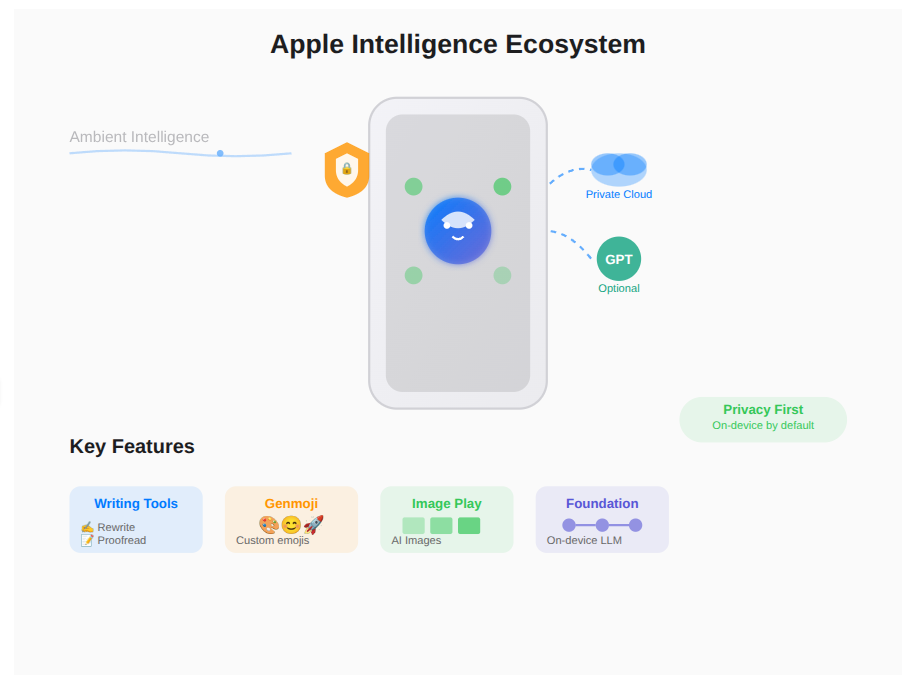

Many have suggested that Apple was lagging behind in the AI race. Instead of coming up with a new chatbot, Apple is positioning AI as more of an ambient entity: an assistant that’s present all the time.

Apple Intelligence is not a chatbot app that you open to ask questions and get answers; it’s integrated directly into your Apple device. It’s context-aware and can assist you with tasks: it can help you reply to emails, record and summarize conversations, act on what’s shown on your screen, and more.

Apple Intelligence does this on-device most of the time. We say “most of the time” because its capabilities can be extended through Private Cloud Compute or through its direct integration with ChatGPT. These options raise privacy questions: on-device processing is private, and Apple says Private Cloud Compute is private too.

With the latest release, Apple introduced the Foundation Models framework, which gives developers access to this embedded intelligence in their own apps, even offline. This is big, and it requires a shift in how we approach UX.

AI is now embedded and can do things it couldn’t before: read the screen, access different apps, and chain actions together.

Developers will need to approach design in a more intent-based way. Apps may have to account for what the user intends to do, rather than only the flows predefined by the dev team.

Apple’s AI feels more like a personal assistant than the answer-spitting chatbots we’re used to.

Privacy is a prime concern here. With on-device AI, user data does not leave the device, and Apple stressed that Private Cloud Compute is private as well.

Apple Intelligence can also tap into ChatGPT when needed. In that case, you have to decide whether that specific information should be shared with an external LLM at all. This flexibility between private and more general-purpose options is a win for user choice and experience.

Genmoji and image generation are another big selling point, as Apple continues to pursue personal exploration and expression through AI. Users are encouraged to create unique, personalized content.

Here are three lessons that frontend developers can take from Apple’s new innovations:

Apple Intelligence lets developers take advantage of Writing Tools, Genmoji, and Image Playground. Writing Tools help users rewrite, proofread, and summarize text, and they can also be customized to the app’s needs.

Genmoji lets users generate new emoji, so they can express their emotions more precisely.

With Image Playground, apps can let users create images with AI. For example, users can generate an image from a short text description.

You can also go further by building directly on Apple’s models. Here are a few of the highlights of the Apple Intelligence release:

With the Foundation Models framework, you get direct access to the on-device LLM at the core of Apple Intelligence. Using it, you can summarize, extract information from, and classify text, generate new text, call specific tools in your app, and more. Importantly, the framework works offline, is free for developers to use, and has native Swift support.

With direct ChatGPT integration, users can interact with ChatGPT inside their apps. On supported Apple devices, ChatGPT’s free tier is available without creating an account; for premium models, users need to sign in to their ChatGPT account.

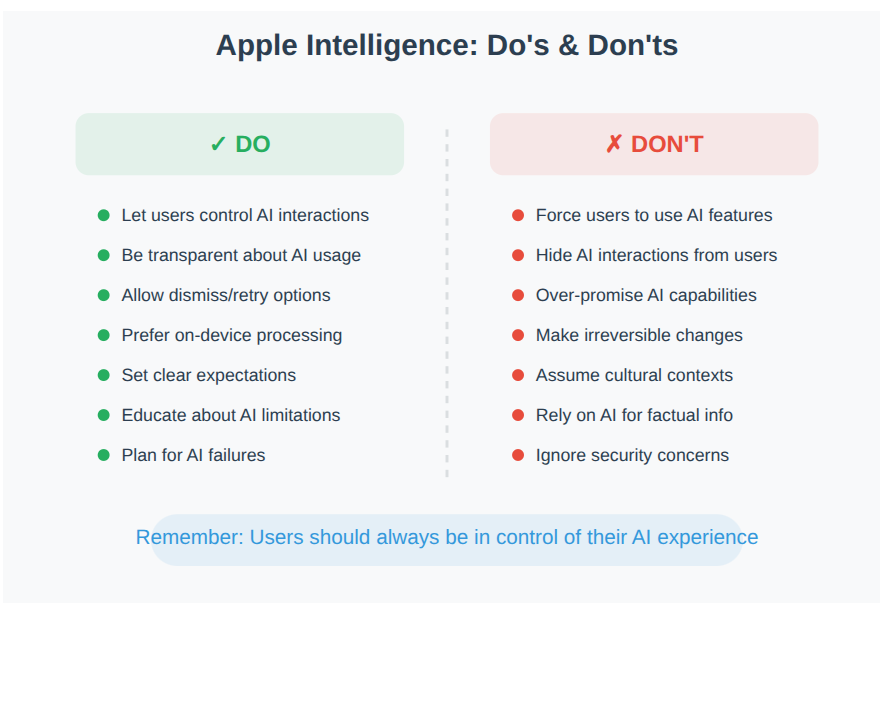

The power of ChatGPT comes with a cost, though. While on-device processing and Private Cloud Compute emphasize privacy, handing user data to a third party is a different matter entirely. When designing applications, the team should tell users what is shared with third parties, and when.
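One way to make that disclosure systematic is to decide, per request, where it will be processed and what the user must be told first. The TypeScript below is a minimal sketch of that design pattern, not an Apple API: `ProcessingTarget`, `routeRequest`, and the consent flag are hypothetical names, and it assumes your app can already tell whether a task fits the on-device model or needs an external one.

```typescript
// Hypothetical processing destinations mirroring the three options described above.
// These names are illustrative only; they are not part of any Apple framework.
type ProcessingTarget = "on-device" | "private-cloud-compute" | "chatgpt";

interface AiRequest {
  prompt: string;
  // Assumption: the app can estimate whether the task fits the on-device model.
  fitsOnDevice: boolean;
  // Assumption: the app knows when a task needs a larger, third-party model.
  needsExternalModel: boolean;
}

interface ConsentState {
  // Has the user explicitly agreed to send this kind of data to a third party?
  allowThirdParty: boolean;
}

interface RoutingDecision {
  target: ProcessingTarget | null; // null means "do not process yet"
  disclosure: string; // what to show the user before anything happens
}

function routeRequest(req: AiRequest, consent: ConsentState): RoutingDecision {
  if (req.needsExternalModel) {
    // Fail closed: never fall through to a third party without consent.
    if (!consent.allowThirdParty) {
      return {
        target: null,
        disclosure: "This request needs ChatGPT. Nothing has been shared yet.",
      };
    }
    return {
      target: "chatgpt",
      disclosure: "Your request will be sent to ChatGPT, a third-party service.",
    };
  }
  if (req.fitsOnDevice) {
    return { target: "on-device", disclosure: "Processed on your device." };
  }
  return {
    target: "private-cloud-compute",
    disclosure: "Processed with Apple’s Private Cloud Compute.",
  };
}
```

The key choice is that the third-party branch returns `target: null` without consent, so the UI has to ask the user instead of silently sharing data.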

Now AI can “see” the user’s screen and react to it.

For example, a user browsing social media might see a comedy show they’d like to attend. Instead of taking a screenshot, saving the details, and updating their calendar manually, they will be able to ask AI to do it for them.

This ubiquity of AI brings new design implications. Everything on the screen is now within AI’s reach: it can look at images, alter them, find specific items in them, infer a location from the background, and so on.

To sum it up, Apple Intelligence can:

Building with Apple Intelligence in mind requires a shift in how we think of application design. We can describe this new way of thinking as “intent-based app design.”

Instead of following a predefined set of actions, the user will express their intents in myriad ways and expect the applications to behave accordingly.

To help illustrate the idea, let’s go over some hypothetical examples.

The user opens your app to perform a task.

User expresses an intent (verbally, through text, or even implicitly through context), and Apple Intelligence (via Siri or other system-level features) either fulfills that intent directly or suggests your app as the best tool for the job, with the relevant part of your app pre-loaded.

For example, a user could take a picture of their kid’s dance school schedule, and Apple Intelligence would extract the performance date from it, add the event to the user’s calendar, and then send reminder notifications before the date.
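The dance-schedule example can be modeled as data: the system works out the user’s intent, and the app turns it into concrete actions. This is a hypothetical TypeScript sketch, not Apple’s App Intents API; the `Intent` type, the `planActions` function, and the 7-day and 1-day reminder offsets are all assumptions, and it presumes the event details have already been extracted from the photo.

```typescript
// Hypothetical intent model: what the user wants, not which screen they tapped.
// These types are illustrative and are not Apple's App Intents API.
type Intent =
  | { kind: "addEvent"; title: string; date: Date }
  | { kind: "setReminder"; text: string; at: Date };

interface PlannedAction {
  description: string;
  at: Date;
}

const DAY_MS = 24 * 60 * 60 * 1000;

// Assumption: reminders fire 7 days and 1 day before an event.
const REMINDER_OFFSETS_DAYS = [7, 1];

// Turn one expressed intent into the concrete actions the app should carry out.
function planActions(intent: Intent): PlannedAction[] {
  switch (intent.kind) {
    case "addEvent": {
      const { title, date } = intent;
      const reminders = REMINDER_OFFSETS_DAYS.map((days) => ({
        description: `Reminder: "${title}" in ${days} day(s)`,
        at: new Date(date.getTime() - days * DAY_MS),
      }));
      return [{ description: `Add "${title}" to calendar`, at: date }, ...reminders];
    }
    case "setReminder":
      return [{ description: intent.text, at: intent.at }];
    default: {
      // Exhaustiveness check: a new Intent kind that isn't handled fails to compile.
      const unhandled: never = intent;
      return unhandled;
    }
  }
}
```

Keeping the intent as plain data separates understanding the request, which the system handles, from fulfilling it, which is your app’s job.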

Another example could be Apple Intelligence making sure that the user never forgets their anniversary. The user’s intent could be something like “look through my phone, gather all information, and arrange my schedule accordingly.”

In developing such applications, developers will be required to approach user privacy with extra care. Sensitive information should never be shared without the user’s consent.

Apps wait for user input.

Apps, powered by Apple Intelligence, anticipate user needs based on context (location, time, calendar, communication, photos, etc.) and proactively offer relevant functionalities or information.

Developers will have to consider how the app can “listen” to the user’s environment and suggest relevant features before the user even thinks to open the app.

This requires thinking about data privacy and user control. As in the previous example, the user may not be happy about the contents of their images being run through an LLM. On top of that, developers should be especially careful to tell users when a request will use Private Cloud Compute or be sent to ChatGPT.

As another example, think of a weather app that notifies the user when rain is forecast, reminding them to bring an umbrella.
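A proactive feature like this comes down to a small decision: is there something relevant to say, and when? The TypeScript below sketches that decision under stated assumptions. The `HourlyForecast` shape, the `umbrellaSuggestion` function, the 0.5 probability threshold, and the known “leave home” hour are all hypothetical; a real app would get these from its own weather data and the user’s context.

```typescript
// Hypothetical forecast entry; a real app would read this from its weather source.
interface HourlyForecast {
  hour: number; // 0-23, local time
  precipitationChance: number; // 0 to 1
}

interface Suggestion {
  message: string;
  notifyAtHour: number;
}

// Suggest an umbrella only when rain is likely while the user is out.
// Assumptions: a 0.5 threshold and a known hour at which the user leaves home.
function umbrellaSuggestion(
  forecast: HourlyForecast[],
  leaveHomeHour: number,
  threshold = 0.5,
): Suggestion | null {
  const rainyHour = forecast.find(
    (f) => f.hour >= leaveHomeHour && f.precipitationChance >= threshold,
  );
  // Stay silent when there is nothing useful to say.
  if (!rainyHour) return null;
  return {
    message: `Rain likely around ${rainyHour.hour}:00. Bring an umbrella.`,
    // Notify an hour before the user leaves, so the reminder is actionable.
    notifyAtHour: Math.max(0, leaveHomeHour - 1),
  };
}
```

Returning `null` instead of a generic message reflects the point about anticipation: a proactive app should speak up only when the context makes the suggestion relevant.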

Your app’s value is primarily contained within its own UI.

Your app’s valuable features are surfaced across the entire Apple ecosystem: in Siri, Spotlight, Messages, Mail, Notes, Photos, and even new “Smart Overlays” or “Visual Intelligence” capabilities.

Example: Your app suggests Genmoji as the user types. Where will it work? Will it work in emails the same way it does in chat messages? What kind of content can it suggest? Will it behave differently across platforms and situations? Without clear answers to these questions, generative AI can produce inappropriate or confusing results.

Users create all content from scratch or select from pre-defined options.

Apple Intelligence provides powerful generative capabilities (text, images, emojis, summaries, etc.) that users can leverage directly within your app or through system-wide tools.

Think of a mobile game whose environment changes depending on where you are, with the game generating new backgrounds to match. Or think of characters that don’t say the same things every time, but change their behavior depending on, say, current world events.

In such a scenario, since the tone of the app is subject to change, developers will have to adapt the styling accordingly. Just adding a dark mode will not be enough anymore.

Now that we have an understanding of how the design could shift for this new era of AI that connects and assists, let’s talk about some best practices and pitfalls to avoid.

The following best practices are not just good design principles; they represent crucial considerations for developers getting ready for this new era of the omnipresent, embedded, generative AI.

With deeply integrated AI now at hand, offering on-device, offline processing and strong privacy when needed, developers will face rather unique challenges and opportunities.

Anyone who experiments with the Foundation Models framework, Visual Intelligence, or ChatGPT features should take these into account:

As with many great innovations, we’re still in “wait and see” mode. However, Apple’s WWDC announcement wasn’t just another tech update; it was a foundational shift, signaling clear directions for the future of interface design that developers must understand and adapt to now.

To understand the shift, consider the difference between the static websites of the 90s and today’s modern applications. Their content and capabilities shaped the design mindset applied to them. Something similar is happening with Apple’s approach to AI.

We can identify several key points that directly interact with our design approach:

Apple decided to shift its attention from the chatbot race to integrating AI as an omnipresent, context-aware personal assistant. AI is not seen as an interactive encyclopedia but as a helper that reads information, anticipates needs, and addresses pain points. This shift signals that future interfaces must prioritize intent-based interactions, in which the user’s goal drives the system.

From on-device processing, where the data never leaves the device, to Private Cloud Compute, Apple shows that it takes privacy seriously. New design thinking should follow this decision.

With features like Genmoji and Image Playground, Apple brings generative AI into daily life. The easier it is to use something, the more people will use it. Since users can now create images that represent their input, developers should think about designing interfaces that beckon the user to experiment with AI: more creativity and more uniqueness. The more personal, the better.

Because the Foundation Models framework lets apps use AI even offline, we are witnessing a shift in how AI is perceived. Apple is integrating AI directly into its operating systems, which means that core features like Siri, Photos, and Messages can use it, even offline!

One thing that bears repeating is the interaction between the AI (the robot) and the human. When building applications, you’ll have to think about all the prompts the user can give and all the output the AI can return. Things will go awry in the most unexpected ways, so developers and designers will need to think in broader terms.
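A practical consequence is that anything a model returns should be treated like untrusted user input before the app acts on it. Here is a minimal TypeScript sketch, assuming the app has asked a model for a JSON description of a calendar event; the `EventProposal` shape and the `parseEventProposal` helper are hypothetical.

```typescript
// Hypothetical shape the app expects a model to return for an "add event" request.
interface EventProposal {
  title: string;
  date: Date;
}

// Treat model output as untrusted input: parse it, check every field,
// and return null instead of acting on anything malformed.
function parseEventProposal(raw: string): EventProposal | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // not valid JSON, e.g. chatty prose instead of data
  }
  if (typeof data !== "object" || data === null) return null;
  const { title, date } = data as { title?: unknown; date?: unknown };
  if (typeof title !== "string" || title.trim() === "") return null;
  if (typeof date !== "string") return null;
  const parsed = new Date(date);
  if (Number.isNaN(parsed.getTime())) return null; // unparseable date
  return { title: title.trim(), date: parsed };
}
```

Returning `null` for anything unexpected lets the UI fall back to asking the user, rather than acting on a truncated or invented response.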

But overall, it’s nice to see we’re moving past the “only chatbots” stage of AI usage. Now AI can do more than just answer questions and write buggy code; it can be everywhere at once.

Apple Intelligence marks a pivotal moment, shifting AI from a siloed tool to an ambient, privacy-first assistant woven into the fabric of Apple platforms.

For frontend developers and UX designers, this isn’t just a new set of features; it’s an invitation to redefine how users interact with technology. With direct access to on-device foundation models, powerful Writing Tools, and innovative Image Playground APIs, the opportunities to create truly intelligent, personalized, and intuitive experiences are immense and immediately available.

Now is the time to explore and test these groundbreaking capabilities. Dive into the developer documentation, experiment with the new frameworks, and begin crafting the next generation of intelligent applications that seamlessly assist users while respecting their privacy.

The future of interface design is here, and it’s intelligent.
