As more teams rush to integrate AI agents into their products, frontend developers often face a frustrating gap: the back-end intelligence is evolving fast, but the UI patterns to support it are lagging behind. Traditional input-output interfaces struggle to keep up with agents that can reason, revise, and act autonomously.

Agentic AI refers to AI systems capable of reasoning, acting autonomously, and adapting to their environment or task. As these agents shift from passive tools to dynamic collaborators, the design patterns underpinning them become increasingly critical.

This article outlines six emerging architectural patterns that define how these agents are built and operated. These patterns enable powerful applications in search, automation, coding, and more. We also explore how these back-end capabilities shape UI/UX on the front end — highlighting opportunities and challenges for frontend developers working with intelligent systems.

These six core design patterns form the technical backbone of agentic AI systems. Each offers a different take on how intelligent agents reason, act, collaborate, or retrieve information — and they shape how front-end teams will need to support, visualize, and work with these capabilities.

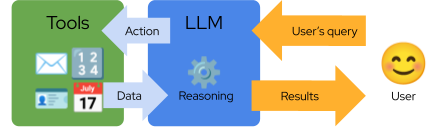

The ReAct (Reasoning and Acting) model is a foundational pattern where agents alternate between reasoning steps — using large language models (LLMs) — and taking actions, such as querying a tool or external service.

This looped process of “thinking and doing” mimics human problem-solving: the LLM reasons about how to solve a problem, executes a step of that plan through a tool, observes the result, and reasons again. It is the baseline architecture for most current LLM-based agents, and in a sense it serves as the abstract blueprint for all of the agentic architectures described below.

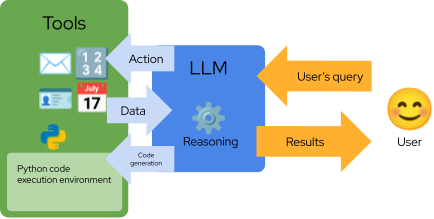
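The reason, act, observe loop described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: `fake_llm` is a hypothetical stub standing in for a real model call, and `TOOLS` is a hypothetical one-entry tool registry. A real agent would parse the model's text output into a thought and an action.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical tool registry: tool name -> function that takes one string argument.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda q: {"capital of france": "Paris"}.get(q.lower(), "no result"),
}


@dataclass
class Step:
    thought: str                  # the model's reasoning for this step
    action: Optional[str] = None  # tool to call, or None when answering
    arg: str = ""
    answer: Optional[str] = None


def fake_llm(question: str, history: list[tuple[Step, str]]) -> Step:
    """Hypothetical stand-in for an LLM call: search once, then answer."""
    if not history:
        return Step(thought="I should look this up.", action="search", arg=question)
    _, observation = history[-1]
    return Step(thought="The observation answers the question.", answer=observation)


def react(question: str, llm=fake_llm, max_steps: int = 5):
    history: list[tuple[Step, str]] = []
    for _ in range(max_steps):
        step = llm(question, history)               # reason
        if step.answer is not None:
            return step.answer, history
        observation = TOOLS[step.action](step.arg)  # act
        history.append((step, observation))         # observe, then loop again
    raise RuntimeError("no answer within the step budget")


answer, trace = react("Capital of France")
print(answer)  # Paris
```

Swapping `fake_llm` for a real model call leaves the control flow unchanged, which is why this loop can underpin the other patterns.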

The CodeAct pattern, popularized by agents such as Manus AI, lets agents generate and execute Python code directly rather than relying on rigid JSON-based actions.

This opens the door to more complex reasoning and dynamic task execution. While JSON merely conveys structured data, Python allows for both data representation and manipulation: a single code action can loop, branch, and combine several tool calls.

For example, when you ask ChatGPT to create a PowerPoint presentation, it may generate and execute Python code to build the file, which you can then download. This ability to operate beyond pre-defined functions creates more flexible, autonomous workflows.

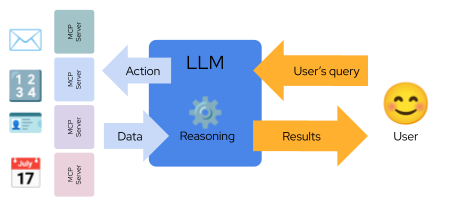
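The gap between a JSON action and a code action shows up even in a toy example. In this hypothetical sketch, `add` is a toy tool and the model's "generated" code is hard-coded. The point is that one code action can loop over data and combine tools, whereas a JSON action names exactly one call.

```python
import json

TOOLS = {"add": lambda a, b: a + b}  # hypothetical toy tool

# JSON-style action: names exactly one predefined tool and its arguments.
json_action = json.loads('{"tool": "add", "args": [2, 3]}')


def run_json_action(action: dict):
    return TOOLS[action["tool"]](*action["args"])


# CodeAct-style action: a snippet that can loop and combine tools in one step.
# Hard-coded here; in a real agent this string would come from the model.
generated_code = """
totals = [add(x, x) for x in data]
result = {"totals": totals, "max": max(totals)}
"""


def run_code_action(code: str, data: list) -> dict:
    # WARNING: exec() is NOT a sandbox. Production agents run model-written
    # code in an isolated container or interpreter with resource limits.
    namespace = {"__builtins__": {}, "add": TOOLS["add"], "max": max, "data": data}
    exec(code, namespace)
    return namespace["result"]


print(run_json_action(json_action))                # 5
print(run_code_action(generated_code, [1, 2, 3]))  # {'totals': [2, 4, 6], 'max': 6}
```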

In the modern tool-use pattern, agents interact with external tools and cloud services through lightweight, standardized protocols such as MCP (Model Context Protocol).

MCP gives agents a uniform way to discover and call tools exposed by many different services, enabling broad integration without requiring deep infrastructure expertise. From a dev standpoint, this lets developers build thin layers of orchestration logic on top of powerful, distributed services, making the agent far more extensible with minimal overhead:

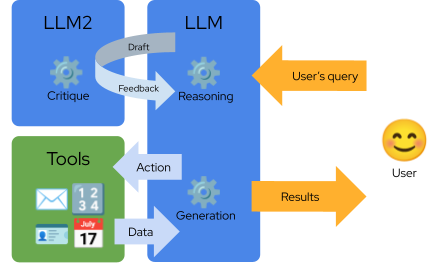
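MCP messages use JSON-RPC 2.0, and servers expose tools through methods such as `tools/list` and `tools/call`. The sketch below is an in-process toy loosely modeled on that discover-then-call flow. `ToyToolServer`, `get_weather`, and `rpc` are hypothetical; this is not the official MCP SDK, and the real protocol also involves transports, an initialization handshake, and richer result types that are omitted here.

```python
import json
from itertools import count


class ToyToolServer:
    """Hypothetical in-process stand-in for an MCP server (not the MCP SDK)."""

    def __init__(self):
        self._tools = {
            "get_weather": {
                "description": "Current weather for a city",
                "inputSchema": {
                    "type": "object",
                    "properties": {"city": {"type": "string"}},
                    "required": ["city"],
                },
                "fn": lambda args: f"Sunny in {args['city']}",  # fake data
            }
        }

    def handle(self, raw: str) -> str:
        req = json.loads(raw)
        reply = {"jsonrpc": "2.0", "id": req["id"]}
        if req["method"] == "tools/list":
            reply["result"] = {"tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in self._tools.items()
            ]}
        elif req["method"] == "tools/call":
            tool = self._tools[req["params"]["name"]]
            text = tool["fn"](req["params"]["arguments"])
            reply["result"] = {"content": [{"type": "text", "text": text}]}
        else:
            reply["error"] = {"code": -32601, "message": "Method not found"}
        return json.dumps(reply)


_ids = count(1)


def rpc(server, method, params=None):
    """Client side: wrap a call in a JSON-RPC 2.0 envelope and decode the reply."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.loads(server.handle(json.dumps(msg)))


# The agent discovers tools first, then calls one by name.
server = ToyToolServer()
tools = rpc(server, "tools/list")["result"]["tools"]
reply = rpc(server, "tools/call", {"name": tools[0]["name"], "arguments": {"city": "Oslo"}})
print(reply["result"]["content"][0]["text"])  # Sunny in Oslo
```

The agent code only knows the two generic methods; adding a new tool changes the server, not the orchestration layer.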

Inspired by metacognitive strategies, self-reflective agents review and critique their own outputs to improve performance across iterations.

Typically, this involves a second LLM (or a second call to the same model with a reviewer prompt) acting as a kind of internal reviewer. The agent evaluates its own proposed actions, iteratively refining them until they meet a quality threshold. This not only boosts accuracy but also introduces a mechanism for learning from failure, moving closer to human-like problem-solving loops:

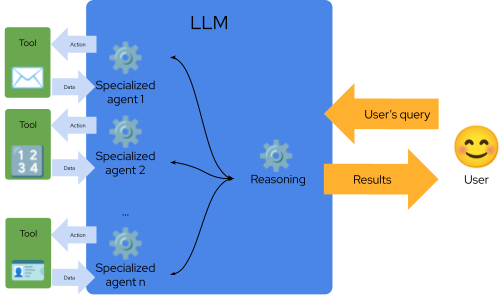
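A minimal generate, critique, refine loop might look like the following. `toy_generate` and `toy_critique` are hypothetical stand-ins for the actor and reviewer LLM calls, and the 0.8 threshold and three-round cap are arbitrary assumptions.

```python
def reflect_loop(task, generate, critique, threshold=0.8, max_rounds=3):
    """Generate, critique, and refine until the critic's score clears `threshold`."""
    feedback = None
    history = []
    for _ in range(max_rounds):
        draft = generate(task, feedback)         # actor: produce or revise a draft
        score, feedback = critique(task, draft)  # reviewer: score it and explain why
        history.append({"draft": draft, "score": score, "feedback": feedback})
        if score >= threshold:
            break
    # If no draft cleared the bar, fall back to the best-scoring one.
    best = max(history, key=lambda h: h["score"])
    return best["draft"], history


def toy_generate(task, feedback):
    # Hypothetical stand-in for the actor LLM call.
    return "Paris is the capital." if feedback is None else "Paris is the capital of France."


def toy_critique(task, draft):
    # Hypothetical stand-in for the reviewer LLM call: returns (score, feedback).
    return (1.0, "Looks good.") if "France" in draft else (0.5, "Name the country.")


final, rounds = reflect_loop("What is the capital of France?", toy_generate, toy_critique)
print(final)                         # Paris is the capital of France.
print([r["score"] for r in rounds])  # [0.5, 1.0]
```

The round cap matters as much as the threshold: without it, a critic that is never satisfied would keep the agent looping indefinitely.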

Rather than relying on a single generalist agent, the multi-agent pattern involves multiple specialized agents collaborating on a task.

Each agent brings a distinct skillset — often through tailored prompts or tools — and contributes to a shared output. This is especially valuable during development and debugging, as responsibilities are clearly divided. For instance, when a specific output seems off, it’s easier to isolate which agent handled that step.

This pattern also opens the door to more modular, composable agent systems — much like microservices in software architecture.
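One way to get that debuggability is to route work through an orchestrator that records which agent produced each intermediate result. This is a sketch under assumptions: the roles and their lambda bodies are hypothetical placeholders for role-specific prompts or tools, and the plan is a fixed sequence rather than one chosen dynamically by an LLM.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    role: str
    run: Callable[[str], str]  # stand-in for a role-specific prompt + tools


@dataclass
class Orchestrator:
    agents: dict[str, Agent]
    trace: list[tuple[str, str, str]] = field(default_factory=list)

    def run_plan(self, plan: list[str], task: str) -> str:
        output = task
        for role in plan:
            result = self.agents[role].run(output)
            # Record who did what, so a bad final output can be traced to one step.
            self.trace.append((role, output, result))
            output = result
        return output


team = Orchestrator(agents={
    "researcher": Agent("researcher", lambda t: f"notes({t})"),
    "writer": Agent("writer", lambda notes: f"draft({notes})"),
    "editor": Agent("editor", lambda d: d + " [edited]"),
})
print(team.run_plan(["researcher", "writer", "editor"], "agentic UIs"))
# draft(notes(agentic UIs)) [edited]
```

Like a microservice boundary, each `Agent` can be swapped or tested in isolation without touching the orchestrator.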

Agentic RAG (retrieval-augmented generation) combines retrieval mechanisms with generative capabilities. Agents access external knowledge, typically from a vector database or API, and then synthesize that data into meaningful responses.

By blending search with generation, Agentic RAG systems produce more accurate and informed responses. We have a detailed article on RAG that explores its various flavors in depth.
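The retrieve-then-generate flow can be sketched with a toy in-memory index. Here a bag-of-words `Counter` stands in for a learned embedding model, a Python dict stands in for the vector database, and the generation step is a string template rather than an LLM call; all three are simplifying assumptions. The agent declines to answer when nothing relevant is retrieved instead of generating from nothing.

```python
import math
import re
from collections import Counter

# Toy corpus standing in for a vector database.
DOCS = {
    "doc1": "ReAct agents alternate reasoning and tool use",
    "doc2": "RAG retrieves documents from a vector database before generating",
    "doc3": "CodeAct agents write and execute Python code",
}


def embed(text: str) -> Counter:
    # Toy "embedding": bag-of-words counts. Real systems use a learned embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(n * b[term] for term, n in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> list:
    q = embed(query)
    scored = sorted(((cosine(q, embed(text)), doc_id) for doc_id, text in DOCS.items()), reverse=True)
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def answer(query: str) -> dict:
    sources = retrieve(query)
    if not sources:  # nothing relevant: decline instead of generating ungrounded text
        return {"answer": "I couldn't find supporting sources.", "sources": []}
    context = " ".join(DOCS[s] for s in sources)
    # Stand-in for generation: a real agent would prompt an LLM with `context`.
    return {"answer": f"According to {', '.join(sources)}: {context}", "sources": sources}


print(answer("How do agents execute Python code?")["sources"])  # ['doc3']
```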

Designing user interfaces for Agentic AI systems requires rethinking the typical request-response model. These agents don’t just answer queries — they explain, revise, and coordinate with other systems. That means the front end must be transparent, adaptive, and collaborative.

Below are practical examples of how different Agentic patterns shape front-end design and user interaction flows:

For ReAct agents, use a dual-pane conversational interface that exposes the agent's reasoning in one pane and its actions in the other.

This creates a clear, step-by-step user journey and avoids a “black-box” experience. Including animated “thinking” states or progress indicators enhances transparency and sets user expectations.
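On the data side, a dual-pane view mostly comes down to routing the agent's event stream into the right pane. The event kinds (`thought`, `action`, `observation`, `final`) are an assumed schema for illustration, and `search_tickets` is a hypothetical tool name; real agent frameworks name and structure these events differently.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AgentEvent:
    kind: str  # assumed schema: "thought", "action", "observation", or "final"
    text: str


def to_panes(events: list[AgentEvent]) -> dict:
    """Route a ReAct event stream into a reasoning pane and an actions pane."""
    view = {"reasoning": [], "actions": [], "answer": None, "status": "thinking..."}
    for event in events:
        if event.kind == "thought":
            view["reasoning"].append(event.text)
        elif event.kind in ("action", "observation"):
            view["actions"].append(f"{event.kind}: {event.text}")
        elif event.kind == "final":
            view["answer"], view["status"] = event.text, "done"
    return view


stream = [
    AgentEvent("thought", "I need this week's open tickets."),
    AgentEvent("action", "search_tickets(status='open')"),
    AgentEvent("observation", "3 tickets found"),
]
print(to_panes(stream)["status"])  # thinking...
```

Because the view is recomputed from the full stream, the UI can re-render on every new event, and the `status` field can drive the animated "thinking" indicator.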

CodeAct UIs resemble live coding environments, with console-style output and editable code cells.

Include contextual buttons like “Explain this code” or “Edit and rerun” to preserve user control. Because the architecture depends on code as the core execution format, code visibility and editability become central to the UX — especially for technical users.
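An "Edit and rerun" button implies a cell model that keeps each run's source and output. The `CodeCell` class below is a hypothetical sketch of that model. It executes code in-process only for illustration; a real product would send the code to an isolated runtime.

```python
from __future__ import annotations

import contextlib
import io
from dataclasses import dataclass, field


@dataclass
class CodeCell:
    source: str
    runs: list[tuple[str, str]] = field(default_factory=list)  # (source, output), newest last

    def run(self) -> str:
        buf = io.StringIO()
        try:
            # WARNING: exec() is not a sandbox; a real UI would send the code
            # to an isolated runtime instead of running it in-process.
            with contextlib.redirect_stdout(buf):
                exec(self.source, {})
        except Exception as exc:  # show errors in the console instead of crashing
            buf.write(f"{type(exc).__name__}: {exc}\n")
        output = buf.getvalue()
        self.runs.append((self.source, output))
        return output

    def edit_and_rerun(self, new_source: str) -> str:
        """Backs an "Edit and rerun" button: keep history, replace source, run again."""
        self.source = new_source
        return self.run()


cell = CodeCell("print(sum(range(5)))")
print(cell.run(), end="")                           # 10
print(cell.edit_and_rerun("print(1 / 0)"), end="")  # ZeroDivisionError: division by zero
```

Keeping every `(source, output)` pair lets the UI show what the agent originally wrote next to the user's edit.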

Modern tool-use agents require modular, task-based layouts, such as a dashboard that shows each tool invocation and its real-time status.

This allows users to trace decisions and understand how tools are leveraged. The challenge lies in representing a wide variety of tools without cluttering the interface.
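One way to keep many tools from cluttering the layout is to drive the dashboard from status events and group tasks by tool. The event shape, the `Dashboard` class, and the `crm`/`email` tool names are assumptions for illustration, not an existing API.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    RUNNING = "running"
    DONE = "done"
    FAILED = "failed"


@dataclass
class ToolTask:
    tool: str
    purpose: str  # why the agent chose this tool, shown so users can trace decisions
    status: Status = Status.PENDING


class Dashboard:
    def __init__(self) -> None:
        self.tasks: dict[str, ToolTask] = {}

    def apply(self, event: dict) -> None:
        """Apply one status event (hypothetical shape: id, tool, purpose?, status)."""
        task = self.tasks.get(event["id"])
        if task is None:
            task = ToolTask(event["tool"], event.get("purpose", ""))
            self.tasks[event["id"]] = task
        task.status = Status(event["status"])  # raises ValueError on unknown statuses

    def by_tool(self) -> dict[str, list[str]]:
        """Group tasks into one panel per tool to keep the layout uncluttered."""
        panels: dict[str, list[str]] = {}
        for task_id, task in self.tasks.items():
            panels.setdefault(task.tool, []).append(f"{task_id}: {task.status.value}")
        return panels


board = Dashboard()
for event in [
    {"id": "t1", "tool": "crm", "purpose": "look up the customer", "status": "running"},
    {"id": "t2", "tool": "email", "purpose": "draft a reply", "status": "pending"},
    {"id": "t1", "tool": "crm", "status": "done"},
]:
    board.apply(event)
print(board.by_tool())  # {'crm': ['t1: done'], 'email': ['t2: pending']}
```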

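For self-reflective agents, versioning and revision history are critical: present each draft in a versioned view, with the agent's critiques as inline annotations.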

Let users accept, reject, or comment on revisions — creating a feedback loop that mirrors how humans co-edit and iterate.
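The accept, reject, and comment loop can be backed by a simple revision store in which the visible text is the newest accepted revision. `RevisionHistory` is a hypothetical sketch of that state model, not a specific library.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Revision:
    text: str
    critique: str              # the agent's own reason for this revision
    decision: str = "pending"  # "pending", "accepted", or "rejected"
    comments: list[str] = field(default_factory=list)


class RevisionHistory:
    def __init__(self, initial: str) -> None:
        self.revisions = [Revision(initial, "initial draft", "accepted")]

    def propose(self, text: str, critique: str) -> int:
        """Called when the agent's self-critique produces a new version."""
        self.revisions.append(Revision(text, critique))
        return len(self.revisions) - 1

    def decide(self, index: int, accept: bool, comment: str | None = None) -> None:
        """Called from the UI's accept/reject buttons and comment box."""
        rev = self.revisions[index]
        rev.decision = "accepted" if accept else "rejected"
        if comment:
            rev.comments.append(comment)

    def current(self) -> str:
        # The visible version is the newest accepted revision.
        return next(r.text for r in reversed(self.revisions) if r.decision == "accepted")


doc = RevisionHistory("Agents are tools.")
i = doc.propose("Agents are collaborators.", "Original framing undersells autonomy.")
doc.decide(i, accept=False, comment="Too strong for this audience.")
print(doc.current())  # Agents are tools.
```

Rejected revisions and their comments stay in the history, so they can be fed back to the agent as feedback on its next attempt.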

Multi-agent UIs must visualize agent collaboration and task flow, for example as a process flow that highlights which agent is handling each step.

This transparency empowers users to interact with specific agents — e.g., reassigning tasks or retrying underperforming steps.
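Retrying and reassigning become straightforward if each step records its assigned agent, state, and attempt count. The `writer` and `flaky_writer` agents below are hypothetical stubs (one always fails) so that the retry and reassign paths can be exercised.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FlowStep:
    name: str
    agent: str
    state: str = "queued"  # "queued", "active", "done", or "failed"
    attempts: int = 0
    output: str = ""


def writer(task: str) -> str:
    return f"Draft of {task}"  # hypothetical healthy agent


def flaky_writer(task: str) -> str:
    raise RuntimeError("model timeout")  # hypothetical underperforming agent


class Flow:
    def __init__(self, agents: dict, steps: list[FlowStep]) -> None:
        self.agents = agents
        self.steps = {s.name: s for s in steps}

    def run_step(self, name: str) -> str:
        step = self.steps[name]
        step.state, step.attempts = "active", step.attempts + 1
        try:
            step.output, step.state = self.agents[step.agent](name), "done"
        except Exception as exc:
            step.output, step.state = str(exc), "failed"
        return step.state

    def retry(self, name: str) -> str:
        """Backs a "Retry" button: rerun the step with the same agent."""
        return self.run_step(name)

    def reassign(self, name: str, agent: str) -> str:
        """Backs a "Reassign" control: hand the step to another agent and rerun."""
        self.steps[name].agent = agent
        return self.run_step(name)

    def highlights(self) -> list[str]:
        """One line per step for the process-flow view."""
        return [f"{s.name} ({s.agent}): {s.state}" for s in self.steps.values()]


flow = Flow({"writer": writer, "flaky_writer": flaky_writer}, [FlowStep("summary", "flaky_writer")])
print(flow.run_step("summary"))            # failed
print(flow.reassign("summary", "writer"))  # done
print(flow.highlights())                   # ['summary (writer): done']
```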

Agentic RAG is best served by split views that place the retrieved sources alongside the generated output.

Include a “Show Sources” toggle to help users distinguish between factual grounding and hallucination. This is especially useful in technical Q&A, tutoring, or research scenarios.
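A "Show Sources" toggle can do more than hide a list: it can also flag sentences that cite nothing. This sketch assumes the generator emits `[n]` citation markers keyed to a source map, which is an assumption about the output format rather than a universal convention. An uncited sentence is not necessarily a hallucination; it is only one the UI cannot ground.

```python
from __future__ import annotations

import re


def split_view(answer: str, sources: dict[int, str], show_sources: bool) -> dict:
    """Build the two panes; with sources shown, also flag sentences that cite nothing."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    view = {"answer": answer, "sources": [], "unsupported": []}
    if not show_sources:
        return view
    for sentence in sentences:
        cited = [int(n) for n in re.findall(r"\[(\d+)\]", sentence) if int(n) in sources]
        if not cited:
            view["unsupported"].append(sentence)  # not grounded in any retrieved source
        for n in cited:
            if sources[n] not in view["sources"]:
                view["sources"].append(sources[n])
    return view


answer = "ReAct alternates reasoning and tool use [1]. It never makes mistakes."
sources = {1: "doc1: ReAct overview"}
view = split_view(answer, sources, show_sources=True)
print(view["sources"])      # ['doc1: ReAct overview']
print(view["unsupported"])  # ['It never makes mistakes.']
```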

Altogether, these UI patterns show how the front-end must evolve in tandem with agentic back-end architectures — not only to support functionality, but also to create a collaborative and transparent relationship between humans and intelligent systems.

Keep in mind that all of these variants can ultimately boil down to a chat-based UI, but we think defaulting to chat can create design inertia worth avoiding.

For your quick reference, I’ve built this table comparing all six architectures:

| PATTERN | CORE CAPABILITY | WHAT MAKES IT DIFFERENT | IDEAL USE CASE | UI IMPLICATIONS |
|---|---|---|---|---|
| ReAct | Reason + act loop | Alternates thinking with tool use | Search agents, email assistants | Two-pane view to expose reasoning and actions |
| CodeAct | Live code execution | Uses code generation as action | Report generation, API interaction | Console-style output, editable code cells |
| Modern Tool Use | Cloud orchestration | Agent-to-tool abstraction across services | Enterprise workflow automation | Modular dashboard with real-time status |
| Self-Reflection | Output self-critique | Agent uses feedback loops to improve | Content validation, QA | Versioned views with inline annotations |
| Multi-Agent | Specialized agent collaboration | Multiple agents with focused roles | Product design, document generation | Process flow with agent activity highlights |
| Agentic RAG | Contextual retrieval | Combines memory search with generation | Knowledge assistants, tutoring | Split view with sources and generated output |

Agentic architectures are powerful, but they’re not magic. With great autonomy comes great complexity, and that complexity brings tradeoffs you should keep an eye on.

Designing around these tradeoffs isn’t optional — it’s part of the job. Think of it as agentic gravity. You can’t defy it, but you can build smartly within it.

These six design patterns represent the current frontier in Agentic AI development. From reflective loops to multi-agent collaboration, they’re reshaping how frontend and backend systems interact.

As these systems evolve, we expect to see more autonomous agents with long-term memory, agent-specific GUIs with persistent state, and real-time feedback loops where users and agents co-adapt. UI systems that allow for granular transparency, reversibility, and human override will likely define successful implementations in production.

And as these patterns mature, expect to see hybrid models emerge — blending reasoning, retrieval, execution, and critique into tightly integrated systems. For developers, especially those working on the front end, understanding these patterns isn’t just helpful — it’s essential to building the next generation of intelligent, user-aligned applications.
