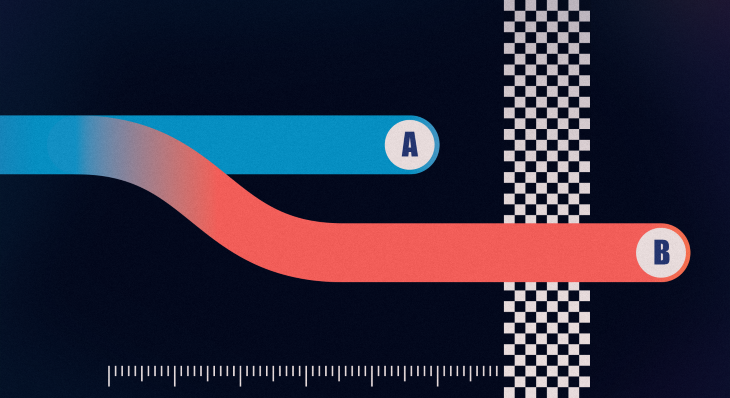

In general, A/B testing is a pretty clear concept: you create several versions of something, let your users interact with them, and observe the real-life usage. Each version is displayed to a separate part of the targeted audience so that you can compare the performance of A against B.

As a result, you get a “winner” backed up with data. Sounds nice, right? It significantly helps to reduce ambiguity and guesswork.

Since A/B testing can take the burden of a hard decision off your shoulders and give you straightforward evidence, it is easy to get addicted to this practice and blindly test every new feature. But despite the apparent simplicity of the approach, it may give you convincing yet misleading results.

A few simple rules and best practices can help you introduce A/B testing into your process. Let’s briefly go over the steps involved.

Every test requires a clear idea of the purpose, so first of all:

What do we want to achieve?

For example, we might aim to increase upsell, stop audience loss, or start working on a completely new service. The goal helps you define what success means for the versions you are testing, measured against your priorities. If your new variation hurts conversion but brings in more returning users, is it a disaster or a success?

How can we achieve it?

In its simplest form, a hypothesis is something like “If we do A, we expect an increase in X.” Hypotheses vary in scale and maturity: it might be a slight improvement of the current version, a search for the most efficient way to introduce a feature, or validation of the next big idea.

How are we going to measure the success?

Your variations should have valid comparison criteria — conversion from Action A to Action B, user engagement, feature adoption, bounce rate, etc.

Experiments can take different shapes; let’s review some general examples:

A/B tests can be valuable only if done correctly. In this section, I’ll give the rules of thumb for experiment preparation.

While designing the experiment, try to focus on a single aspect you want to test so that you can isolate its impact. Otherwise, it will be hard to understand the value of each change.

Try to avoid running several experiments that can affect each other at the same time; otherwise, once again, it will be unclear what specifically affected the metrics you observe. Or be ready for the variations to multiply, for example:

The same applies to your discounts test, where the data may become corrupted unless you take a new payment flow into account.
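To see how quickly overlapping experiments multiply, here is a minimal sketch. It assumes two hypothetical experiments running at the same time, a payment flow test and a discounts test, each with two versions; all names are illustrative.

```python
from itertools import product

# Hypothetical versions of two experiments that run at the same time.
payment_flows = ["current_flow", "new_flow"]
discounts = ["no_discount", "discount"]

# Every user ends up in one combination of the two experiments,
# so each combination has to be treated as its own variation.
combined_variations = list(product(payment_flows, discounts))

for flow, discount in combined_variations:
    print(f"{flow} + {discount}")
```

Two experiments with two versions each already give four combined variations, and each of them needs its own share of the traffic.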

Additionally, the change should be bold if we want the result to be observable. We will talk about it a bit more in the audience section.

Since we use an A/B test to validate a hypothesis, we don’t have a hundred percent confidence in the variation we are going to introduce. So, to shorten the iteration cycle, try to find the cheapest way to test it: anything that reduces the implementation time without corrupting the hypothesis. Often, this means imitating the functionality with rather dirty hardcoded elements that bypass the design system or, vice versa, building something exclusively from ready-made design system components or third-party tools.

When we talk about the audience, we cannot skip statistical significance: how likely is it that the results you get are reliable and not just the product of random chance? I won’t go into the formulae here. You can find a pile of articles on this topic alone, and today’s analytics systems can usually highlight the significant results.

But before starting an experiment, check whether you can allocate enough traffic. Simply put, the smaller the change in performance you expect, the larger the audience should be: a 2 percent conversion difference is much more likely to be an accident than a 20 percent one. By the way, that’s the reason I’ve recommended being bold while designing an experiment.
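As a rough illustration of how the required audience grows when the expected change shrinks, here is a minimal sketch based on the common normal-approximation formula for comparing two conversion rates. Everything specific in it is an assumption made for the example: the 10 percent baseline conversion, reading the 2 and 20 percent differences as relative lifts, the 5 percent significance level, the 80 percent power, and the function name itself. Treat it as a back-of-the-envelope estimate, not a replacement for your analytics tool.

```python
from math import ceil
from statistics import NormalDist


def users_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate users needed in EACH variation to detect the lift.

    Uses the normal approximation for a two-sided comparison of two
    proportions. All default values are assumptions for illustration.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Assumed baseline: 10 percent of users convert in the current version.
small_lift = users_per_variation(0.10, 0.02)  # expecting a 2 percent lift
large_lift = users_per_variation(0.10, 0.20)  # expecting a 20 percent lift

print(f"2% lift:  about {small_lift} users per variation")
print(f"20% lift: about {large_lift} users per variation")
```

Under these assumptions, the subtle change needs many times more traffic than the bold one, which is why a bold variation is easier to validate on a modest audience.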

But you have to care not only about the amount of traffic but also about its quality. Try to exclude users who are not the direct targets of your experiment so that their actions don’t add noise to your experiment dashboard. For example, if you test infinite scroll, include only those users whose lists are long enough for the effect to be evaluated properly.
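The infinite scroll example could be expressed as a simple eligibility check that runs before a user is assigned to any variation. This is only a sketch: the `User` shape, the function name, and the threshold of 50 items are all hypothetical, and the right cutoff depends on your product.

```python
from dataclasses import dataclass

# Assumed cutoff: lists shorter than this fit on a page or two,
# so infinite scroll would make no visible difference for the user.
MIN_ITEMS_FOR_TEST = 50


@dataclass
class User:
    user_id: str
    item_count: int  # how many items the user has in the tested list


def eligible_for_infinite_scroll_test(user, min_items=MIN_ITEMS_FOR_TEST):
    """Return True only for users who can actually experience the change."""
    return user.item_count >= min_items


users = [User("u1", 3), User("u2", 120), User("u3", 50)]
eligible_ids = [u.user_id for u in users if eligible_for_infinite_scroll_test(u)]
print(eligible_ids)
```

Only eligible users are then split between the variations, so the metrics are not diluted by people who would never notice the change.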

As mentioned above, the goal is often to reduce the experimentation cycle time. You can save some time by implementing the experiment for a limited number of regions (so that you don’t have to adjust it for each region or wait for translations) and/or for limited categories (so that you can hardcode the content without sophisticated automation and database manipulations).

Generally, there are two types of user research methods: quantitative and qualitative.

A/B testing is mainly about quantitative data — usually, you track the performance of the variations by observing the metrics you’ve set up initially. Be aware that in the real world, some hypotheses may not behave as expected or may fail to make any significant difference in the results.

While looking at quantitative data, it’s important to remember that these numbers don’t reveal the motivations behind user behavior. Numbers are not going to lie but can be misleading, so it is often helpful to interpret them as symptoms of underlying user needs or issues.

And if you are not sure about the diagnosis, examine the results carefully: perhaps the losing idea is not a disaster and just wasn’t shaped properly. Sometimes it is useful to interview your users or watch session replays of the different variations to understand why they performed the way they did. Experiments often take several iterations to get better results.

A/B testing is a powerful tool that can significantly improve the performance of your product if used properly. As a quantitative research method, it shows the actual effectiveness of the design and business model, giving you a safety margin and time to polish the solution. But don’t rely on A/B testing exclusively; always combine it with other research methods to get the most out of it.

And in case you were wondering, this is the only variation of this article.

Header image source: IconScout
